Background to the issue

Locally run LLMs are often constrained by the host hardware and can hit performance bottlenecks on complex tasks. Lemon AI offers several ways to optimize around this.

Solutions

- Model selection: Choose a model that matches the hardware. For example, on a device with 8 GB of RAM, Qwen-7B is recommended rather than a larger model.

- Hybrid deployment: Use cloud models (GPT/Claude) through their APIs for highly complex tasks, and local models for routine tasks.

- Task decomposition: Use the ReAct pattern to break a large task into several smaller tasks that are executed step by step.
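The three strategies above can be combined into a simple routing policy: split the work into subtasks, run routine ones on the largest local model the machine can hold, and send complex ones to a cloud model. This is a minimal sketch, not Lemon AI's actual logic. The model names, the 1 to 10 complexity scale, the 32 GB threshold, and the cutoff of 7 are hypothetical; only the 8 GB / Qwen-7B pairing comes from the article. Subtasks are given up front, so this does not implement ReAct's interleaved reasoning and acting loop.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

# Hypothetical model identifiers; replace with the models you actually have.
LOCAL_MODELS_BY_MIN_RAM_GB = [
    (32, "qwen-14b"),  # assumption: a larger local model for 32 GB+ machines
    (8, "qwen-7b"),    # the article's recommendation for 8 GB devices
]
CLOUD_MODEL = "gpt-4o"  # placeholder for whichever cloud model you call via API


@dataclass
class Subtask:
    description: str
    complexity: int  # assumed scale: 1 (routine) to 10 (very complex)


def pick_local_model(ram_gb: float) -> Optional[str]:
    """Return the largest local model whose RAM threshold the device meets."""
    for min_ram, name in LOCAL_MODELS_BY_MIN_RAM_GB:
        if ram_gb >= min_ram:
            return name
    return None  # not enough memory for any local model


def route(subtask: Subtask, ram_gb: float, threshold: int = 7) -> str:
    """Send complex subtasks, or all subtasks if no local model fits, to the cloud."""
    local = pick_local_model(ram_gb)
    if subtask.complexity >= threshold or local is None:
        return CLOUD_MODEL
    return local


def plan(subtasks: List[Subtask], ram_gb: float) -> List[Tuple[str, str]]:
    """Pair each subtask, in execution order, with the model that should run it."""
    return [(s.description, route(s, ram_gb)) for s in subtasks]
```

For example, `plan([Subtask("summarize each input file", 3), Subtask("design a cross-module refactoring", 8)], ram_gb=8)` assigns the first subtask to `qwen-7b` and the second to the cloud model.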

Performance Tuning Recommendations

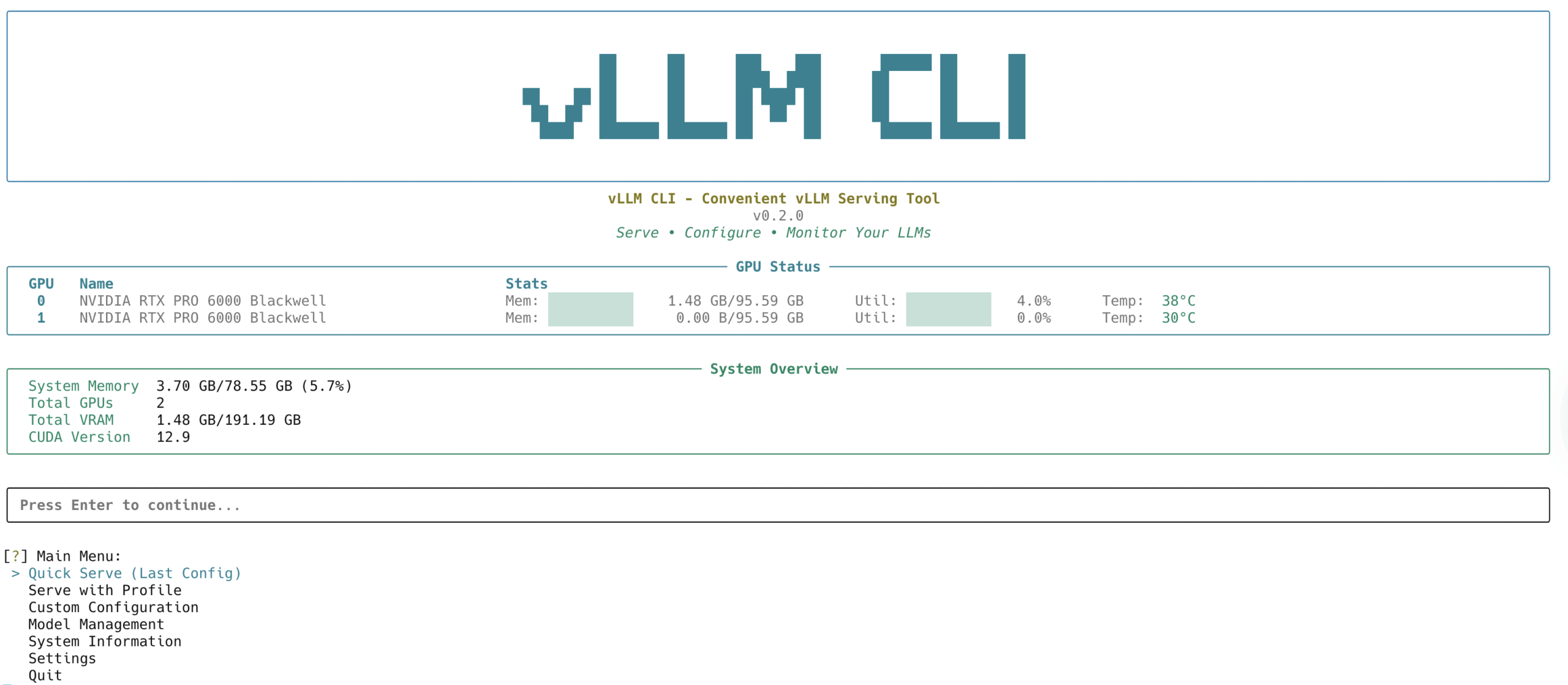

1. Set appropriate GPU acceleration parameters in Ollama

2. Allocate more computing resources to Docker containers

3. Regularly clean the model cache to improve response time
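For step 1, Ollama's REST API accepts per-request `options`, including `num_gpu` (the number of model layers to offload to the GPU), plus a `keep_alive` field that controls how long the model stays loaded in memory after the request (`0` unloads it immediately). The sketch below assumes an Ollama server on its default local port 11434 and uses a placeholder model name; the best `num_gpu` value depends on the model and the available GPU memory, and the article does not say whether Lemon AI sets these options itself.

```python
import json
import urllib.request
from typing import Union

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local endpoint


def build_generate_request(model: str, prompt: str, num_gpu: int,
                           keep_alive: Union[str, int] = "5m") -> dict:
    """Build a non-streaming /api/generate payload with GPU offload set explicitly."""
    return {
        "model": model,
        "prompt": prompt,
        "stream": False,
        # num_gpu: how many model layers to place on the GPU
        "options": {"num_gpu": num_gpu},
        # keep_alive: how long the model stays loaded after this request
        "keep_alive": keep_alive,
    }


def generate(payload: dict) -> str:
    """POST the payload to a running Ollama server and return the generated text."""
    req = urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        # Non-streaming responses carry the full completion in "response"
        return json.loads(resp.read())["response"]
```

Building the payload separately from sending it makes it easy to try several `num_gpu` values against the same prompt and compare response times.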

Alternatives

If performance problems persist, consider:

- Upgrading the hardware (adding memory is especially recommended)

- Using quantized versions of models to reduce computational requirements

- Adopting a distributed deployment architecture
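Both the quantization option and the earlier 8 GB / Qwen-7B recommendation follow from a rough rule of thumb: weight memory is approximately the parameter count times the bits per weight, divided by 8. The sketch below applies it with an assumed 20% overhead for the KV cache and runtime; actual usage varies with the runtime, context length, and quantization format, so treat the results as estimates.

```python
def weight_memory_gb(params_billion: float, bits_per_weight: int) -> float:
    """Approximate memory for the weights alone, in GB (1e9 bytes).

    params_billion * 1e9 parameters * (bits / 8) bytes each / 1e9 bytes per GB
    simplifies to params_billion * bits / 8.
    """
    return params_billion * bits_per_weight / 8


def fits_in_ram(params_billion: float, bits_per_weight: int, ram_gb: float,
                overhead: float = 1.2) -> bool:
    """Check whether the weights plus an assumed overhead factor fit in RAM."""
    return weight_memory_gb(params_billion, bits_per_weight) * overhead <= ram_gb


if __name__ == "__main__":
    # A 7B model on an 8 GB machine at 16-, 8-, and 4-bit precision
    for bits in (16, 8, 4):
        print(bits, weight_memory_gb(7, bits), fits_in_ram(7, bits, ram_gb=8))
```

Under these assumptions the loop prints `16 14.0 False`, `8 7.0 False`, and `4 3.5 True`: only the 4-bit build of a 7B model fits comfortably on an 8 GB machine.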

This answer comes from the article "Lemon AI: A Locally Running Open Source AI Intelligence Body Framework".