A phenomenon is common: even though RAG The system uses the strongest LLM, and Prompt has been tuned over and over again, and the quiz still doesn't work well, with answers that are either contextually incomplete or contain factual errors.

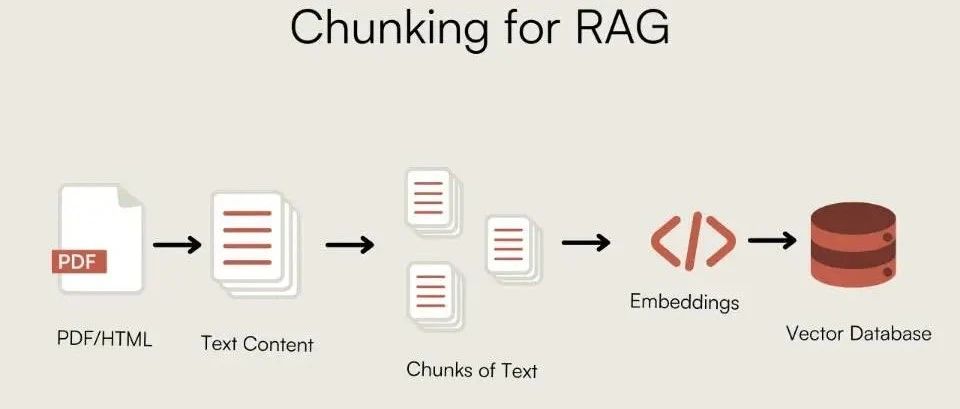

Engineers examined the retrieval algorithm and optimized the Embedding models, but often overlooks a critical step before the data enters the vector library: document chunking.

Inappropriate chunking is tantamount to feeding the model a bunch of "bad data" with fragmented information. No matter how strong the reasoning ability of the model is, it will not be able to piece together a complete answer from the fragmented knowledge. The quality of chunking directly determines the lower limit of RAG system performance.

Instead of talking about vague theories, this article focuses on real-world code and engineering experience with various chunking strategies to create a solid foundation for RAG systems.

Why the chunking?

The need for chunking stems from two core limitations:

- Model Context Window: The Large Language Model (LLM) cannot process text of infinite length at once. Chunking is the process of slicing a long document into pieces that the model can process.

- Search Efficiency and Noise: During retrieval, if a block of text contains too much irrelevant information (noise), it dilutes the core signal, making it difficult for the retriever to accurately match user intent.

The ideal chunking is incontextual integrity与information densityFinding the balance between.chunk_size 和 chunk_overlap is the underlying parameter that regulates this equilibrium.chunk_overlap Semantic continuity across block boundaries is ensured by retaining some of the repeated text between neighboring blocks.

basic chunking strategy

Fixed length chunking

This is the most straightforward method, cutting at a preset number of characters. It does not take into account any logical structure of the text and is simple to implement, but tends to destroy semantic integrity.

- core idea: by fixed number of characters

chunk_sizeSliced text. - Applicable Scenarios: plain text with weak structure, or preprocessing stages with low semantic requirements.

from langchain_text_splitters import CharacterTextSplitter

sample_text = (

"LangChain was created by Harrison Chase in 2022. It provides a framework for developing applications "

"powered by language models. The library is known for its modularity and ease of use. "

"One of its key components is the TextSplitter class, which helps in document chunking."

)

text_splitter = CharacterTextSplitter(

separator=" ", # Split on spaces

chunk_size=100, # Size of each chunk

chunk_overlap=20, # Overlap between chunks

length_function=len,

)

docs = text_splitter.create_documents([sample_text])

for i, doc in enumerate(docs):

print(f"--- Chunk {i+1} ---")

print(doc.page_content)

Recursive character chunking

LangChain The recommended generalized policy. It is set by a predefined list of characters (e.g. ["\n\n", "\n", " ", ""]) performs recursive segmentation and tries to prioritize the retention of logical units such as paragraphs and sentences.

- core idea: Recursive slicing by hierarchical list of separators.

- Applicable Scenarios: The preferred generic strategy for the vast majority of text types.

from langchain_text_splitters import RecursiveCharacterTextSplitter

# Using the same sample_text from the previous example

text_splitter = RecursiveCharacterTextSplitter(

chunk_size=100,

chunk_overlap=20,

# Default separators are ["\n\n", "\n", " ", ""]

)

docs = text_splitter.create_documents([sample_text])

for i, doc in enumerate(docs):

print(f"--- Chunk {i+1} ---")

print(doc.page_content)

parameter tuning: for fixed-length and recursive chunking, thechunk_size 和 chunk_overlap The setup is crucial.

chunk_size: Determine the size of each block. Too small a block and there is not enough contextual information; too large a block and it introduces too much noise and increases theAPIThe cost of the call. This value is usually based on theEmbeddingInputs to the modeltokenLimitations to choose from, common256,512,1024equivalence, precisely to fit theBERTisomorphic512tokenContext window.chunk_overlap: Determines the number of overlapping characters between neighboring blocks. Set a reasonable overlap (e.g.chunk_sizeof 10%-20%) can effectively prevent cutting off complete semantic units at block boundaries, which is the key to ensure semantic continuity.

Sentence-based chunking

The combination of sentences as the smallest unit ensures the most basic semantic integrity.

- core idea: Split text into sentences and then aggregate sentences into chunks.

- Applicable Scenarios: Scenarios that require a high degree of sentence completeness, such as legal documents and news reports.

import nltk

try:

nltk.data.find('tokenizers/punkt')

except nltk.downloader.DownloadError:

nltk.download('punkt')

from nltk.tokenize import sent_tokenize

def chunk_by_sentences(text, max_chars=500, overlap_sentences=1):

sentences = sent_tokenize(text)

chunks = []

current_chunk = ""

for i, sentence in enumerate(sentences):

if len(current_chunk) + len(sentence) <= max_chars:

current_chunk += " " + sentence

else:

chunks.append(current_chunk.strip())

# Create overlap

start_index = max(0, i - overlap_sentences)

current_chunk = " ".join(sentences[start_index:i+1])

if current_chunk:

chunks.append(current_chunk.strip())

return chunks

long_text = "This is the first sentence. This is the second sentence, which is a bit longer. Now we have a third one. The fourth sentence follows. Finally, the fifth sentence concludes this paragraph."

chunks = chunk_by_sentences(long_text, max_chars=100)

for i, chunk in enumerate(chunks):

print(f"--- Chunk {i+1} ---")

print(chunk)

take note of: When dealing with Chinesenltk.tokenize.sent_tokenize The default English model will fail. Chinese-appropriate segmentation methods must be used, such as those based on Chinese punctuation marks (.!?) ), or using a regular expression loaded with the Chinese model of the spaCy 或 HanLP etc. library.

Structure-aware chunking

Utilizing the inherent structural information of the document (e.g., headings, lists) as chunk boundaries, this approach is logical and better preserves context.

Markdown text chunking

- core idea: Based on

Markdownof the title hierarchy to define the boundaries of the block. - Applicable Scenariosformalized

MarkdownDocuments such asGitHubREADME, technical documentation.

from langchain_text_splitters import MarkdownHeaderTextSplitter

markdown_document = """

# Chapter 1: The Beginning

## Section 1.1: The Old World

This is the story of a time long past.

## Section 1.2: A New Hope

A new hero emerges.

# Chapter 2: The Journey

## Section 2.1: The Call to Adventure

The hero receives a mysterious call.

"""

headers_to_split_on = [

("#", "Header 1"),

("##", "Header 2"),

]

markdown_splitter = MarkdownHeaderTextSplitter(headers_to_split_on=headers_to_split_on)

md_header_splits = markdown_splitter.split_text(markdown_document)

for split in md_header_splits:

print(f"Metadata: {split.metadata}")

print(split.page_content)

print("-" * 20)

dialogic chunking

- core idea: Chunking based on speakers or rounds of dialog.

- Applicable Scenarios: Customer service conversations, interview transcripts, meeting minutes.

dialogue = [

"Alice: Hi, I'm having trouble with my order.",

"Bot: I can help with that. What's your order number?",

"Alice: It's 12345.",

"Alice: I haven't received any shipping updates.",

"Bot: Let me check... It seems your order was shipped yesterday.",

"Alice: Oh, great! Thank you.",

]

def chunk_dialogue(dialogue_lines, max_turns_per_chunk=3):

chunks = []

for i in range(0, len(dialogue_lines), max_turns_per_chunk):

chunk = "\n".join(dialogue_lines[i:i + max_turns_per_chunk])

chunks.append(chunk)

return chunks

chunks = chunk_dialogue(dialogue)

for i, chunk in enumerate(chunks):

print(f"--- Chunk {i+1} ---")

print(chunk)

Semantic and thematic chunking

This type of approach goes beyond the physical structure of the text and slices and dices the content based on its semantics.

semantic chunking

- core idea: Calculate the vector similarity of neighboring sentences or paragraphs, and slice at positions where there is a sudden change in semantics (low similarity).

- Applicable Scenarios: Documents that require high-precision semantic cohesion, such as knowledge bases and research papers.

import os

from langchain_experimental.text_splitter import SemanticChunker

from langchain_huggingface import HuggingFaceEmbeddings

os.environ["TOKENIZERS_PARALLELISM"] = "false"

embeddings = HuggingFaceEmbeddings(model_name="sentence-transformers/all-MiniLM-L6-v2")

# LangChain's SemanticChunker offers different threshold types:

# "percentile": Threshold based on the percentile of similarity score differences. Good for adaptability.

# "standard_deviation": Threshold based on standard deviation of similarity scores.

# "interquartile": Uses the interquartile range, robust to outliers.

# "gradient": Looks for sharp changes in similarity, useful for detecting abrupt topic shifts.

text_splitter = SemanticChunker(

embeddings,

breakpoint_threshold_type="percentile",

breakpoint_threshold_amount=95 # A higher percentile means it only breaks on very significant semantic shifts.

)

long_text = (

"The Wright brothers, Orville and Wilbur, were two American aviation pioneers "

"generally credited with inventing, building, and flying the world's first successful motor-operated airplane. "

"They made the first controlled, sustained flight of a powered, heavier-than-air aircraft on December 17, 1903. "

"In the following years, they continued to develop their aircraft. "

"Switching topics completely, let's talk about cooking. "

"A good pizza starts with a perfect dough, which needs yeast, flour, water, and salt. "

"The sauce is typically tomato-based, seasoned with herbs like oregano and basil. "

"Toppings can vary from simple mozzarella to a wide range of meats and vegetables. "

"Finally, let's consider the solar system. "

"It is a gravitationally bound system of the Sun and the objects that orbit it. "

"The largest objects are the eight planets, in order from the Sun: Mercury, Venus, Earth, Mars, Jupiter, Saturn, Uranus, and Neptune."

)

docs = text_splitter.create_documents([long_text])

for i, doc in enumerate(docs):

print(f"--- Chunk {i+1} ---")

print(doc.page_content)

print()

parameter tuning:SemanticChunker The effectiveness of the program is highly dependent on the breakpoint_threshold_amountThis threshold controls the "semantic change sensitivity". This threshold controls the "semantic change sensitivity". A low threshold produces a large number of small, cohesive chunks, while a high threshold cuts only when there is a significant shift in topic. Experimentation needs to be repeated based on the content of the document.

Theme-based chunking

- core idea: Utilizing thematic models (e.g.

LDA) or clustering algorithms that slice and dice documents as their macro-themes shift. - Applicable Scenarios: Long, multi-subject reports or books.

import numpy as np

import re

from sklearn.feature_extraction.text import CountVectorizer

from sklearn.decomposition import LatentDirichletAllocation

import nltk

from nltk.corpus import stopwords

try:

stopwords.words('english')

except LookupError:

nltk.download('stopwords')

def lda_topic_chunking(text: str, n_topics: int = 3) -> list[str]:

# 1. Preprocessing: Treat each paragraph as a "document"

paragraphs = [p.strip() for p in text.split('\n\n') if p.strip()]

if len(paragraphs) <= 1:

return [text]

cleaned_paragraphs = [re.sub(r'[^a-zA-Z\s]', '', p).lower() for p in paragraphs]

# 2. Bag of Words + Stopword Removal

vectorizer = CountVectorizer(min_df=1, stop_words=stopwords.words('english'))

X = vectorizer.fit_transform(cleaned_paragraphs)

if X.shape == 0:

return paragraphs

# 3. LDA Topic Modeling

lda = LatentDirichletAllocation(n_components=n_topics, random_state=42)

lda.fit(X)

# 4. Determine dominant topic for each paragraph

topic_dist = lda.transform(X)

dominant_topics = np.argmax(topic_dist, axis=1)

# 5. Chunking based on topic changes

chunks = []

current_chunk_paragraphs = []

current_topic = dominant_topics

for i, paragraph in enumerate(paragraphs):

if dominant_topics[i] == current_topic:

current_chunk_paragraphs.append(paragraph)

else:

chunks.append("\n\n".join(current_chunk_paragraphs))

current_chunk_paragraphs = [paragraph]

current_topic = dominant_topics[i]

chunks.append("\n\n".join(current_chunk_paragraphs))

return chunks

take note of: Topic-based chunking is very sensitive to text length, topic differentiation and preprocessing steps, and requires a preset number of topics. This method is more suitable as an exploratory tool on long documents with clear topic boundaries.

Advanced chunking strategy

Small-to-Big

- core idea: High-precision retrieval using small chunks (e.g., sentences), and then feeding the original chunk (e.g., paragraph) containing the chunk as a context to the

LLM. It combines the high retrieval accuracy of small chunks with the rich context of large chunks. - Applicable Scenarios: Complex Q&A scenarios requiring high retrieval accuracy and rich generation context.

在 LangChain Middle.ParentDocumentRetriever implements this idea. It manages two parallel processing flows in the background:

- Split the document into large "parent blocks".

- Each parent block is further divided into smaller "child blocks".

- Create vector indexes only for sub-blocks.

- The search is performed by first finding the relevant sub-block and then by a separate

docstoreExtracting their corresponding parent blocks is returned to theLLM。

# from langchain.embeddings import OpenAIEmbeddings

# from langchain_text_splitters import RecursiveCharacterTextSplitter

# from langchain.retrievers import ParentDocumentRetriever

# from langchain_community.document_loaders import TextLoader

# from langchain_chroma import Chroma

# from langchain.storage import InMemoryStore

# Assume 'docs' are loaded documents

# parent_splitter = RecursiveCharacterTextSplitter(chunk_size=2000)

# child_splitter = RecursiveCharacterTextSplitter(chunk_size=400)

# vectorstore = Chroma(embedding_function=OpenAIEmbeddings(), collection_name="split_parents")

# store = InMemoryStore() # This store holds the parent documents

# retriever = ParentDocumentRetriever(

# vectorstore=vectorstore,

# docstore=store,

# child_splitter=child_splitter,

# parent_splitter=parent_splitter,

# )

# retriever.add_documents(docs)

# sub_docs = vectorstore.similarity_search("query") # Retrieves small chunks

# retrieved_docs = retriever.get_relevant_documents("query") # Retrieves large parent chunks

# print(retrieved_docs.page_content)

Agentic Chunking

- core idea: Utilizing a

LLMAgentto simulate the human reading comprehension process and dynamically determine chunk boundaries. For example, the cueLLMBreaking down a text into multiple "self-contained chunks of knowledge". - Applicable Scenarios: Experimental projects, or dealing with highly complex, unstructured text. Extremely costly, stability to be proven.

import textwrap

# from langchain_openai import ChatOpenAI

from langchain.prompts import PromptTemplate

from langchain_core.output_parsers import PydanticOutputParser

from pydantic import BaseModel, Field

from typing import List

class KnowledgeChunk(BaseModel):

chunk_title: str = Field(description="A concise title for this knowledge chunk.")

chunk_text: str = Field(description="A self-contained text extracted and synthesized from the original paragraph.")

representative_question: str = Field(description="A typical question that can be answered by this chunk.")

class ChunkList(BaseModel):

chunks: List[KnowledgeChunk]

parser = PydanticOutputParser(pydantic_object=ChunkList)

prompt_template = """

[ROLE]: You are a top-tier document analyst. Your task is to decompose complex text into a set of core, self-contained "Knowledge Chunks".

[TASK]: Read the provided text, identify the distinct core concepts, and create a knowledge chunk for each.

[RULES]:

1. Self-Contained: Each chunk must be understandable on its own.

2. Single Concept: Each chunk should focus on only one core idea.

3. Extract and Restructure: Pull all relevant sentences for a concept and combine them into a coherent paragraph.

4. Follow Format: Strictly adhere to the JSON format instructions below.

{format_instructions}

[TEXT TO PROCESS]:

{paragraph_text}

"""

prompt = PromptTemplate(

template=prompt_template,

input_variables=["paragraph_text"],

partial_variables={"format_instructions": parser.get_format_instructions()},

)

# The following part is a simulation, as it requires a running LLM model.

# model = ChatOpenAI(model="gpt-4", temperature=0.0)

# chain = prompt | model | parser

# result = chain.invoke({"paragraph_text": document_text})

Mixed chunking: balancing efficiency and quality

In practice, it is difficult to deal with all situations with a single strategy. Mixed chunking is a very practical technique.

- core idea: Coarse-grained slicing with one macro strategy (e.g., structured chunking) followed by secondary slicing of oversized chunks using a finer strategy (e.g., recursive chunking).

- Applicable Scenarios: Handles documents with complex structures and uneven content density.

from langchain_text_splitters import MarkdownHeaderTextSplitter, RecursiveCharacterTextSplitter

from langchain_core.documents import Document

markdown_document = """

# Chapter 1: Company Profile

Our company was founded in 2017...

## 1.1 Development History

The company has experienced rapid growth...

# Chapter 2: Core Technology

This chapter describes our core technologies in detail. Our framework is based on advanced distributed computing concepts... (A very long paragraph with multiple sentences describing different technical aspects like CNNs, Transformers, data pipelines, etc.)

## 2.1 Technical Principles

Our principles combine statistics, machine learning...

# Chapter 3: Future Outlook

Looking ahead, we will continue to invest in AI...

"""

def hybrid_chunking(

markdown_document: str,

coarse_chunk_threshold: int = 400,

fine_chunk_size: int = 100,

fine_chunk_overlap: int = 20

) -> list[Document]:

# 1. Coarse-grained splitting by structure

headers_to_split_on = [("#", "Header 1"), ("##", "Header 2")]

markdown_splitter = MarkdownHeaderTextSplitter(headers_to_split_on=headers_to_split_on)

coarse_chunks = markdown_splitter.split_text(markdown_document)

# 2. Fine-grained recursive splitter for oversized chunks

fine_splitter = RecursiveCharacterTextSplitter(

chunk_size=fine_chunk_size,

chunk_overlap=fine_chunk_overlap

)

final_chunks = []

for chunk in coarse_chunks:

if len(chunk.page_content) > coarse_chunk_threshold:

# If a chunk is too large, split it further

finer_chunks = fine_splitter.split_documents([chunk])

final_chunks.extend(finer_chunks)

else:

final_chunks.append(chunk)

return final_chunks

final_chunks = hybrid_chunking(markdown_document)

for i, chunk in enumerate(final_chunks):

print(f"--- Final Chunk {i+1} (Length: {len(chunk.page_content)}) ---")

print(f"Metadata: {chunk.metadata}")

print(chunk.page_content)

print("-" * 80)

How to choose the best chunking strategy?

In the face of a multitude of strategies, it is more important to choose a rational path than to try them one by one. It is recommended that the following hierarchical decision-making framework be followed.

Step 1: Start with a baseline strategy

- default option:

RecursiveCharacterTextSplitter. This is the safest place to start, no matter what kind of text is being processed. Use it to establish a performance baseline.

Step 2: Examine structured features

- Priority options: Structure-aware chunking. If the document has an explicit structure (

MarkdownTitle,HTMLtab), switch to theMarkdownHeaderTextSplitterand other methods. This is the optimization with the lowest cost and most obvious benefits.

Step 3: When accuracy becomes the bottleneck

- Advanced Options: Semantic chunking or small-large chunking. If the retrieval results of the basic and structured strategies are still unsatisfactory, it indicates the need for higher dimensional semantic information.

SemanticChunker: For scenarios that require a high degree of semantic consistency within a block.ParentDocumentRetriever(small-large chunks): suitable for both search accuracy and the need to provide a clearer understanding for theLLMComplex Q&A scenarios that provide complete context.

Step 4: Dealing with Extremely Complex Documents

- Advanced Practice: Hybrid chunking. For documents with complex structures and uneven content density, hybrid chunking is the best practice for balancing cost and effectiveness.

The following table summarizes all the discussed chunking strategies.

| chunking strategy | Core logic | vantage | Disadvantages and Costs |

|---|---|---|---|

| Fixed length chunking | by a fixed number of characters or token part of a number (e.g. decimal or Roman numeral) |

Simple and fast to implement | Easily destroys semantics and is the least effective |

| recursive chunking | Recursive cuts by predefined separators (paragraphs, sentences) | Highly versatile, better preservation of structure | Fairly effective on irregular documents |

| Sentence-based chunking | Sentences as the smallest unit, then combined into chunks | Ensuring sentence integrity | Single-sentence context may be insufficient, need to deal with long sentences |

| Structured chunking | Utilizing the inherent structure of the document (e.g., headings) to slice and dice | Logical and clear in context | Strong dependency on document format, not generic |

| semantic chunking | Slicing based on local semantic similarity changes | High cohesion of concepts within a block, high retrieval accuracy | High computational costs (Embedding calculations), relying on model quality |

| Theme-based chunking | Slicing by global topic boundaries using topic models | The information within the block is highly correlated | Complex to implement, sensitive to data and parameters, unstable results |

| hybrid chunking | Macro-crude + micro-segmentation | Balancing efficiency and quality with practicality | More complex implementation logic |

| small-large chunks | Small blocks for retrieval, large blocks for generation | Combines high-precision search with rich context | Complex pipeline, need to manage two sets of indexes, double the storage cost |

| Proxy chunking | AI Agent Dynamics Analysis and Slicing Documentation |

Theoretically optimal | Experimental and extremely costly (API call) with a large delay |