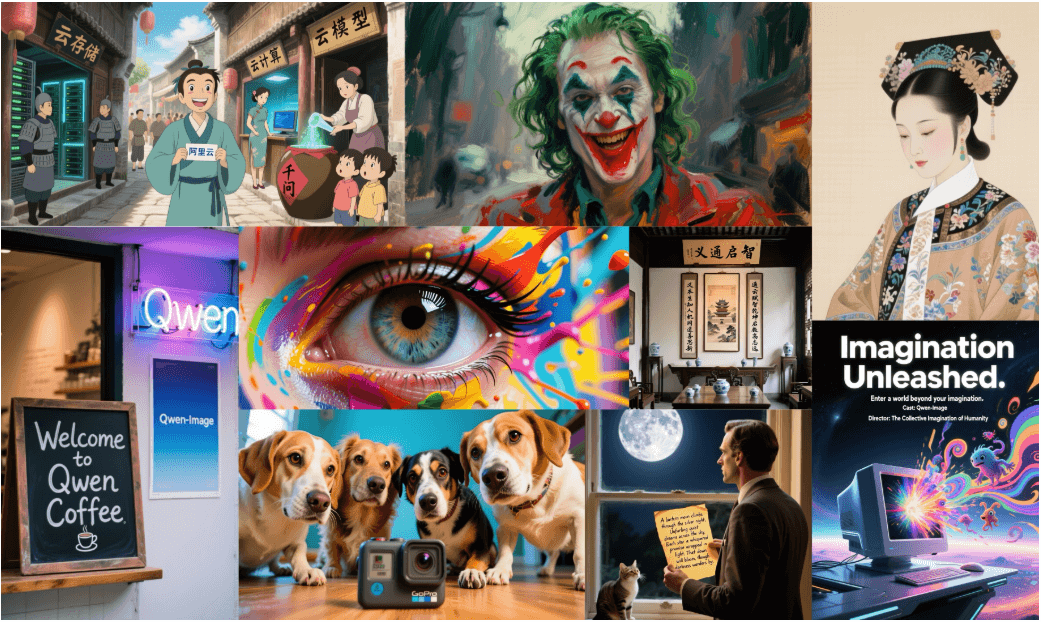

Qwen-Image is a 20-billion-parameter multimodal diffusion transformer (MMDiT) developed by the Qwen team. It specializes in high-fidelity image generation and accurate text rendering, and it performs particularly well on complex text (especially Chinese and English) and image editing. The model supports a wide range of art styles, such as realistic, anime, and high-definition posters, and can handle multilingual typography and layout-sensitive scenes. ComfyUI integrates Qwen-Image natively, so users can run it in a local workflow to generate content such as advertising posters, magazine covers, or pixel art. The model is released under the Apache 2.0 license and is open to artists, designers, and developers.

Features

- High-fidelity image generation: Supports realistic, anime, pixel art, and other styles, and generates high-resolution images.

- Complex text rendering: Accurately renders multilingual text, including English and Chinese, while maintaining typographic consistency and visual harmony.

- Image editing: Supports style transfer, object addition and removal, text modification, and detail enhancement.

- Image understanding: Includes object detection, semantic segmentation, depth estimation, and super-resolution.

- Multiple aspect ratios: Provides 1:1, 16:9, 9:16, 4:3, and 3:4 aspect ratios.

- ComfyUI integration: Runs in a native workflow and supports modular operations and custom workflows.

- Prompt optimization: Enhances multilingual prompts with Qwen-Plus to improve generation quality.

- Multi-platform support: Available through Hugging Face, ModelScope, WaveSpeedAI, and LiblibAI.

Usage Guide

Installation

To use Qwen-Image in ComfyUI, complete the following installation steps:

- Download or update ComfyUI:

Visit the ComfyUI website (https://www.comfy.org/download) to download the latest version, or update an existing installation. Make sure that Python 3.8 or later is installed on your system.

- Install dependencies:

Install the necessary Python libraries, including `diffusers` and PyTorch:

`pip install git+https://github.com/huggingface/diffusers`

`pip install torch torchvision`

If you are using a GPU, install PyTorch with CUDA support to improve performance.

- Get the Qwen-Image model:

After you select the Qwen-Image workflow in ComfyUI, you are automatically prompted to download the model weights (Qwen/Qwen-Image). The weights can also be downloaded manually from Hugging Face or ModelScope.

- Configure the environment:

A high-performance GPU such as the RTX 4090D (24 GB of VRAM) is recommended. CPU operation is possible but slower. VRAM usage reference: the first run uses about 86% of the 24 GB of VRAM and takes 94 seconds; the second run takes about 71 seconds.
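The environment requirements above can be checked with a short script before launching ComfyUI. The following is a minimal sketch and not part of ComfyUI or Qwen-Image: the function names `check_python` and `pick_device` are hypothetical, and the only facts taken from the text are the Python 3.8 minimum and the preference for a CUDA-enabled PyTorch build.

```python
import importlib.util
import sys

MIN_PYTHON = (3, 8)  # minimum version stated in the installation steps


def check_python(version=None):
    """Return True if the given (or running) interpreter meets the minimum."""
    version = tuple(version or sys.version_info[:2])
    return version >= MIN_PYTHON


def pick_device():
    """Return "cuda" when a CUDA-enabled PyTorch is usable, else "cpu".

    PyTorch is imported lazily so the check also runs (and reports "cpu")
    on machines where it has not been installed yet.
    """
    if importlib.util.find_spec("torch") is None:
        return "cpu"
    import torch

    return "cuda" if torch.cuda.is_available() else "cpu"


if not check_python():
    print("Python 3.8 or later is required")
print("inference device:", pick_device())
```

If the script reports `cpu` on a machine with an NVIDIA GPU, the installed PyTorch build probably lacks CUDA support and should be reinstalled with it.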

Using Qwen-Image in ComfyUI

ComfyUI provides a modular workflow that is well suited to running Qwen-Image locally. The procedure is as follows:

- Start ComfyUI:

Run the ComfyUI application to open the main screen.

- Load the Qwen-Image workflow:

Select the Qwen-Image workflow in ComfyUI. As noted in the installation steps, you are prompted to download the model weights at this point.

- Set the prompt:

Enter a detailed text prompt, for example:

A realistic vintage TV news broadcast scene from the 1980s, displayed on an old CRT television with rounded screen edges, static noise, and scanlines. The screen shows a breaking news segment with a lower-third banner that reads: "Breaking: ComfyUI just supported Qwen-Image".

Appending quality keywords to the prompt is recommended to improve results:

Ultra HD, 4K, cinematic composition

- Adjust the generation parameters:

  - Resolution: Select 16:9 (1664 x 928) or another supported aspect ratio.

  - Inference steps: 50 steps are recommended to balance quality and speed.

  - CFG scale: Set to 4.0 so that the image follows the prompt closely.

  - Random seed: Set a fixed seed (e.g. 42) to get reproducible results.

- Generate the image:

Click the Run button. ComfyUI calls Qwen-Image to generate the image, and the result can be saved as a PNG file.
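The recommended settings can be collected in one place so that every run uses the same values. The sketch below is illustrative only: `build_settings`, `ASPECT_RATIOS`, and `QUALITY_SUFFIX` are hypothetical names, the dictionary keys are generic labels rather than the field names of any specific ComfyUI node, and only the 16:9 size comes from the text. The other sizes are assumptions that should be checked against the model card.

```python
# Only the 16:9 entry comes from the text above; the other sizes are
# assumptions and should be verified against the model card before use.
ASPECT_RATIOS = {
    "1:1": (1328, 1328),
    "16:9": (1664, 928),
    "9:16": (928, 1664),
    "4:3": (1472, 1140),
    "3:4": (1140, 1472),
}

QUALITY_SUFFIX = "Ultra HD, 4K, cinematic composition"


def build_settings(prompt, ratio="16:9", steps=50, cfg=4.0, seed=42):
    """Bundle the recommended generation settings into one dictionary."""
    if ratio not in ASPECT_RATIOS:
        raise ValueError(f"unsupported aspect ratio: {ratio!r}")
    width, height = ASPECT_RATIOS[ratio]
    # Append the quality keywords once, separated from the prompt by a space.
    full_prompt = prompt.strip()
    if not full_prompt.endswith(QUALITY_SUFFIX):
        full_prompt = f"{full_prompt} {QUALITY_SUFFIX}"
    return {
        "prompt": full_prompt,
        "width": width,
        "height": height,
        "steps": steps,
        "cfg": cfg,
        "seed": seed,
    }


settings = build_settings(
    "A 1980s CRT news broadcast with a banner that reads: "
    '"Breaking: ComfyUI just supported Qwen-Image".'
)
print(settings["width"], settings["height"], settings["steps"])  # 1664 928 50
```

Keeping the seed in the same dictionary as the other values makes it easy to log the exact configuration that produced a given image.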

Prompt Optimization

To improve generation quality, you can use the Qwen-Plus prompt enhancement tool:

- Code integration:

`from tools.prompt_utils import rewrite`

`prompt = rewrite(prompt)  # optimize the prompt`

- Command-line operation:

Set `DASHSCOPE_API_KEY`, then run:

`cd src`

`DASHSCOPE_API_KEY=sk-xxxxxxxxxxxxxxxxxxxx python examples/generate_w_prompt_enhance.py`
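Because the enhancement step depends on an API key, it can be useful to fall back to the original prompt when the key is absent. The sketch below assumes only what the text shows, namely that `rewrite` takes a prompt string and returns a string. The wrapper name `enhance_prompt` and its `rewrite_fn` and `env` parameters are hypothetical, and a stand-in function replaces the real call so the example runs without the Qwen-Image repository.

```python
import os


def enhance_prompt(prompt, rewrite_fn, env=None):
    """Rewrite the prompt when DASHSCOPE_API_KEY is set, else return it as is.

    rewrite_fn stands in for tools.prompt_utils.rewrite from the repository.
    env defaults to os.environ; a plain dict can be passed for testing.
    """
    env = os.environ if env is None else env
    if not env.get("DASHSCOPE_API_KEY"):
        return prompt  # no key: skip enhancement instead of failing
    return rewrite_fn(prompt)


def fake_rewrite(prompt):
    """Stand-in for the real rewrite call; it only appends a marker."""
    return prompt + " [enhanced]"


print(enhance_prompt("a red bicycle", fake_rewrite, env={}))
print(enhance_prompt("a red bicycle", fake_rewrite, env={"DASHSCOPE_API_KEY": "sk-test"}))
```

Inside the repository, the imported `rewrite` function would be passed as `rewrite_fn` in place of `fake_rewrite`.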

Advanced Features

- Text rendering:

Qwen-Image excels at multilingual text rendering and is suitable for generating posters, magazine covers, and similar material. For example, to generate a fashion magazine cover:

A high-fashion magazine cover inspired by Vogue. Stylish model in avant-garde outfit, dramatic pose, soft studio lighting. Elegant layout with English headlines: "THE BOLD ISSUE — Confidence is the New Couture", "100 LOOKS THAT DEFINE TOMORROW".

The model aims to make fonts and typography blend naturally with the background.

- Image editing:

Upcoming editing features will support style transfer, object addition and removal, and text modification. For example, a photo background can be replaced with a pixel art style, or new objects can be added to an image.

- Image understanding:

Qwen-Image supports tasks such as object detection and semantic segmentation. For example, it can be used to locate an object or a segmented region in an image. Specific usage instructions are not yet available; wait for the official documentation to be updated.
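The magazine-cover prompt above follows a pattern: a scene description followed by the exact strings to render, each in double quotes. A small helper can assemble such prompts consistently. This is a sketch under stated assumptions: `build_text_prompt` is a hypothetical name, and the quoting convention is inferred from the example prompts in this article rather than from documented model behavior.

```python
def build_text_prompt(scene, headlines, label="English headlines"):
    """Join a scene description with the literal strings to render."""
    if not headlines:
        raise ValueError("at least one headline is required")
    for text in headlines:
        # A double quote inside a headline would break the quoting pattern.
        if '"' in text:
            raise ValueError(f"headline contains a double quote: {text!r}")
    quoted = ", ".join(f'"{text}"' for text in headlines)
    # Strip trailing periods and spaces so the scene ends with one period.
    return f"{scene.rstrip('. ')}. Elegant layout with {label}: {quoted}."


prompt = build_text_prompt(
    "A high-fashion magazine cover inspired by Vogue",
    ["THE BOLD ISSUE", "100 LOOKS THAT DEFINE TOMORROW"],
)
print(prompt)
```

Rejecting headlines that contain double quotes keeps the boundary between scene description and rendered text unambiguous.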

Deployment and Optimization

If you need to deploy a multi-GPU service to support high concurrency:

- Configure environment variables:

`export NUM_GPUS_TO_USE=4`

`export TASK_QUEUE_SIZE=100`

`export TASK_TIMEOUT=300`

- Start the Gradio server:

`cd src`

`DASHSCOPE_API_KEY=sk-xxxxxxxxxxxxxxxxx python examples/demo.py`

The Gradio web interface is then accessible through your browser.
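A launcher script might read these variables and validate them before starting the server. The following sketch is not the repository's `demo.py`: the `ServerConfig` class and the `load_config` function are hypothetical, the defaults simply mirror the example values above, and treating `TASK_TIMEOUT` as a number of seconds is an assumption.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class ServerConfig:
    num_gpus: int
    queue_size: int
    timeout: int  # assumed to be seconds


def _positive_int(env, name, default):
    """Read an integer variable and reject zero or negative values."""
    raw = env.get(name, str(default))
    value = int(raw)  # raises ValueError for non-numeric input
    if value <= 0:
        raise ValueError(f"{name} must be positive, got {value}")
    return value


def load_config(env=None):
    """Build a ServerConfig from the environment (or a dict for testing)."""
    env = os.environ if env is None else env
    return ServerConfig(
        num_gpus=_positive_int(env, "NUM_GPUS_TO_USE", 4),
        queue_size=_positive_int(env, "TASK_QUEUE_SIZE", 100),
        timeout=_positive_int(env, "TASK_TIMEOUT", 300),
    )


config = load_config({"NUM_GPUS_TO_USE": "2"})
print(config)  # ServerConfig(num_gpus=2, queue_size=100, timeout=300)
```

Failing early on a malformed value is usually cheaper than discovering it after the model has been loaded onto several GPUs.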

Community Support

- Hugging Face: Supports the `diffusers` workflow; LoRA and fine-tuning features are coming soon.

- ModelScope: Supports low-memory inference (4 GB of VRAM), FP8 quantization, and LoRA training.

- WaveSpeedAI and LiblibAI: Provide an online experience; visit their official websites for details.

- ComfyUI documentation: See https://docs.comfy.org/tutorials/image/qwen/qwen-image for more tutorials.

Application Scenarios

- Advertising design

Generate posters or billboards containing branded text, with text and images seamlessly blended.

For example, generate a billboard on a city rooftop that says "ComfyUI is built with love".

- Art

Artists can generate images in diverse styles, such as pixel art or high-fashion covers, for illustration or digital art.

For example, create a 16-bit pixel-style game interface.

- Education and research

Use the image understanding functions for object detection or semantic segmentation to support academic research.

For example, analyze the layout of merchandise in a retail scene.

- Marketing content

Generate professional marketing slides or video advertisement material with multilingual text and elegant typography.

For example, create a branding slideshow for a milk brand with headlines and visual elements.

FAQ

- How does Qwen-Image work in ComfyUI?

The Qwen-Image model is loaded through ComfyUI's modular workflow. You set the prompt and parameters, and the image is generated.

- What text languages are supported?

Chinese, English, and other languages are supported. Chinese rendering is particularly accurate, which makes the model suitable for complex typesetting.

- What are the hardware requirements?

An RTX 4090D (24 GB of VRAM) is recommended. CPUs can run the model, but more slowly. ModelScope supports low-VRAM inference.

- How can I participate in the model evaluation?

Visit the official AI Arena website to take part in the image generation comparison, or contact weiyue.wy@alibaba-inc.com about deploying a model.