At 2am this morning, OpenAI's 12 days of livestreams finally reached their final chapter: OpenAI o3 was officially unveiled!

o3 is the successor to the o1 family of models. Models of this type spend more time thinking (reasoning) before answering a question, which improves the accuracy of their answers. Notably, OpenAI skipped the name o2. According to The Information, this was done to avoid trademark issues: O2 is the name of a UK telecom operator, and the name could have caused confusion. Sam Altman confirmed this on air this afternoon.

In fact, OpenAI had been teasing the model since yesterday, and developers had already found references to o3_min_safety_test on the OpenAI website.

o3 Release Date

As rumored, o3 and o3-mini are here! Unfortunately, the o3 family of models will not be released to the public directly; they will first undergo safety testing. Sam Altman also stressed that today is not a launch, just an announcement.

Sam Altman said that they plan to release o3-mini around the end of January, with the full o3 model following soon after.

Differences between o3 and o3-mini

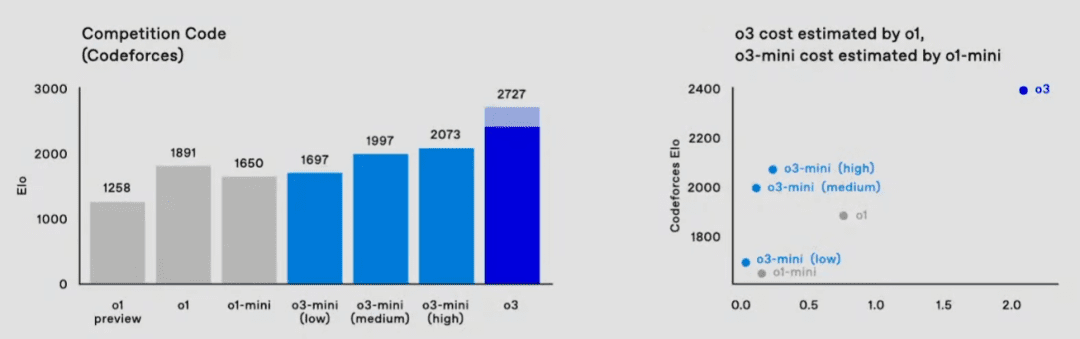

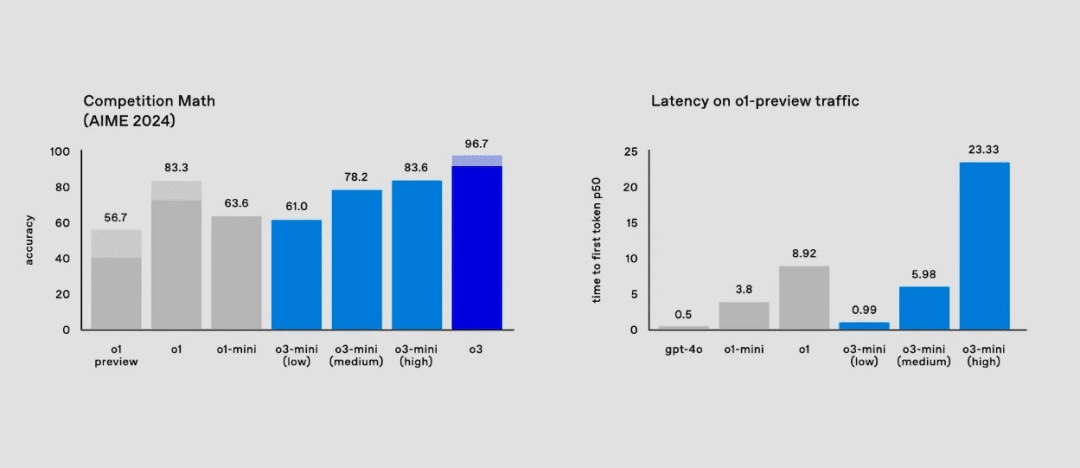

o3-mini: o3-mini is a more cost-effective version of o3. It focuses on faster and cheaper inference while preserving model performance. This combination of high performance and low cost makes it ideal for programming.

It supports three reasoning-time settings: low, medium, and high.

Compared with o1, o3-mini delivers remarkably cost-effective performance on Codeforces, which makes it an excellent model for programming.

On math problems, o3-mini (low) achieves latency comparable to GPT-4o.

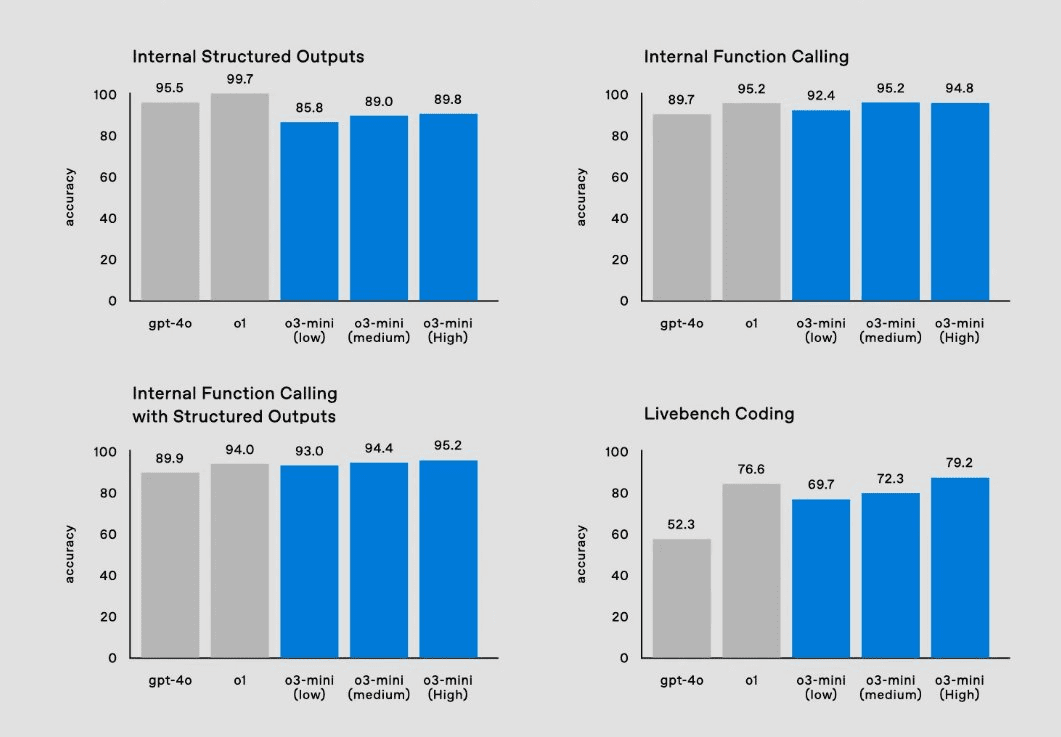

All the API features on o3-mini and their corresponding capabilities are listed below:

o3 Capability Tests

How strong is o3? Let's compare it with Google's just-released Gemini 2.0 Flash Thinking:

o3 effectively outclasses almost every model available today. Take a look at what o3 can do.

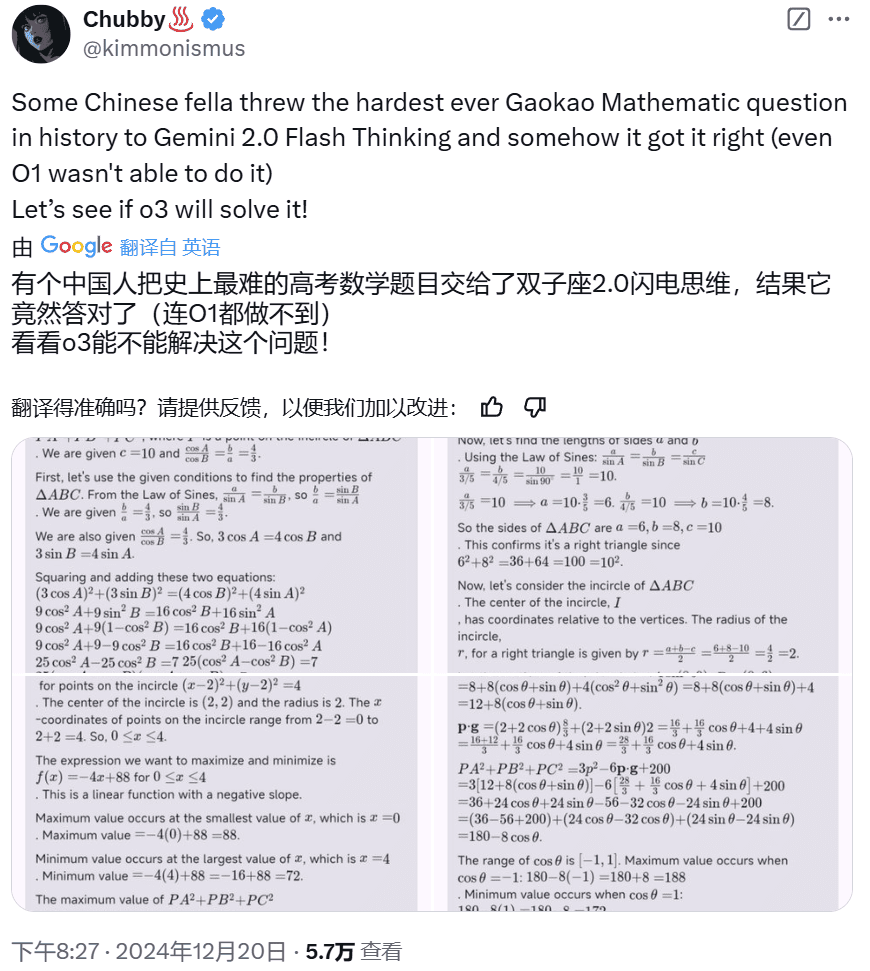

On the left is the software engineering benchmark SWE-bench Verified, built from real GitHub issues. It is like a programming exam: the model has to produce code that is correct and free of bugs, which checks whether o3 can write code like a first-class software engineer. o3 scored 71.7%, well ahead of o1. The benchmark on the right is even more demanding: Codeforces, the world-renowned competitive programming platform. o3 reached a rating of 2727, equivalent to 175th place on the overall leaderboard and ahead of 99.99% of human competitors.

o1's coding ability was already explosive, and o3 takes another big step toward the summit of AGI.

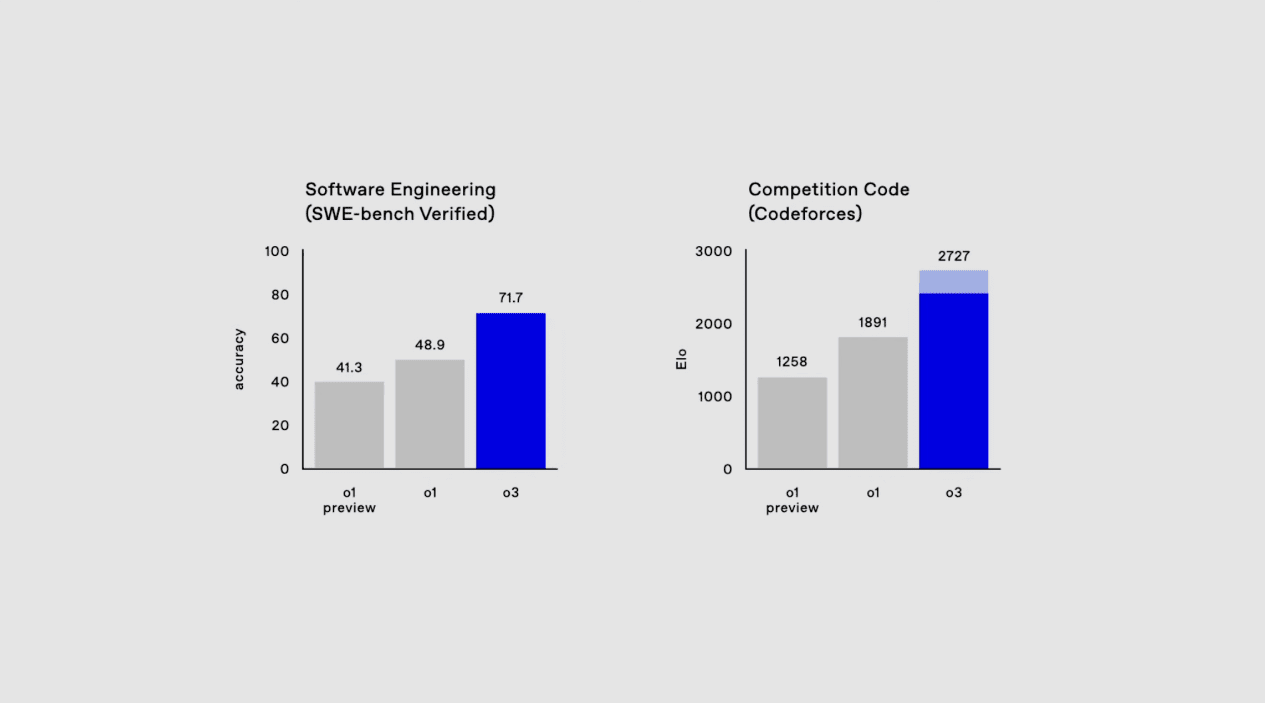

Next are the math competition AIME 2024 and the PhD-level science exam GPQA Diamond. o3 came close to a perfect score on AIME 2024; if I remember correctly, this is the first time an AI has come close to a perfect score on AIME. Performance on the PhD-level science exam also improved, though not as dramatically as in math and programming.

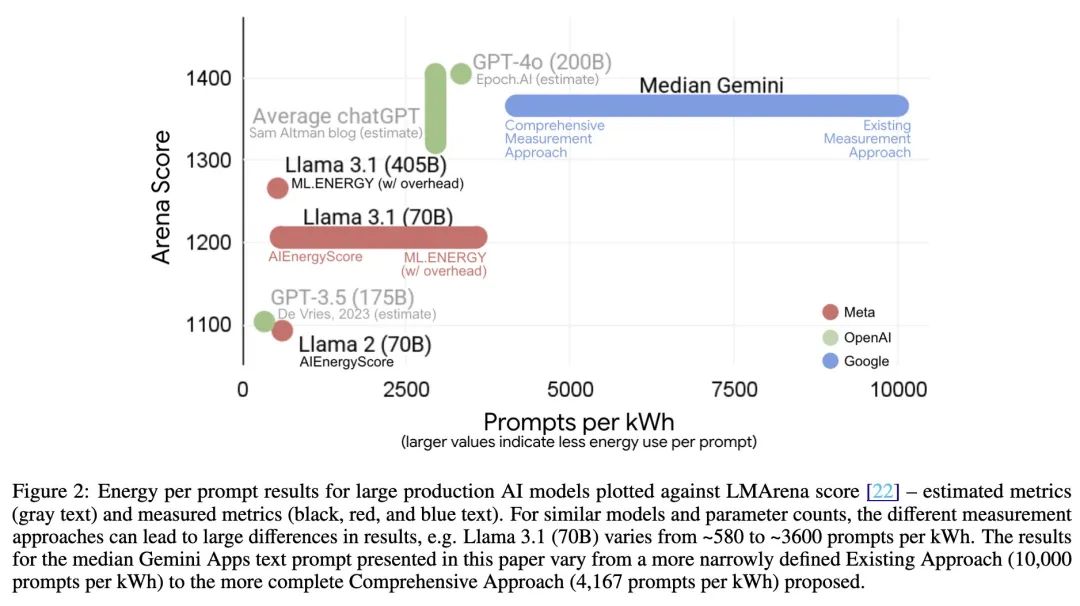

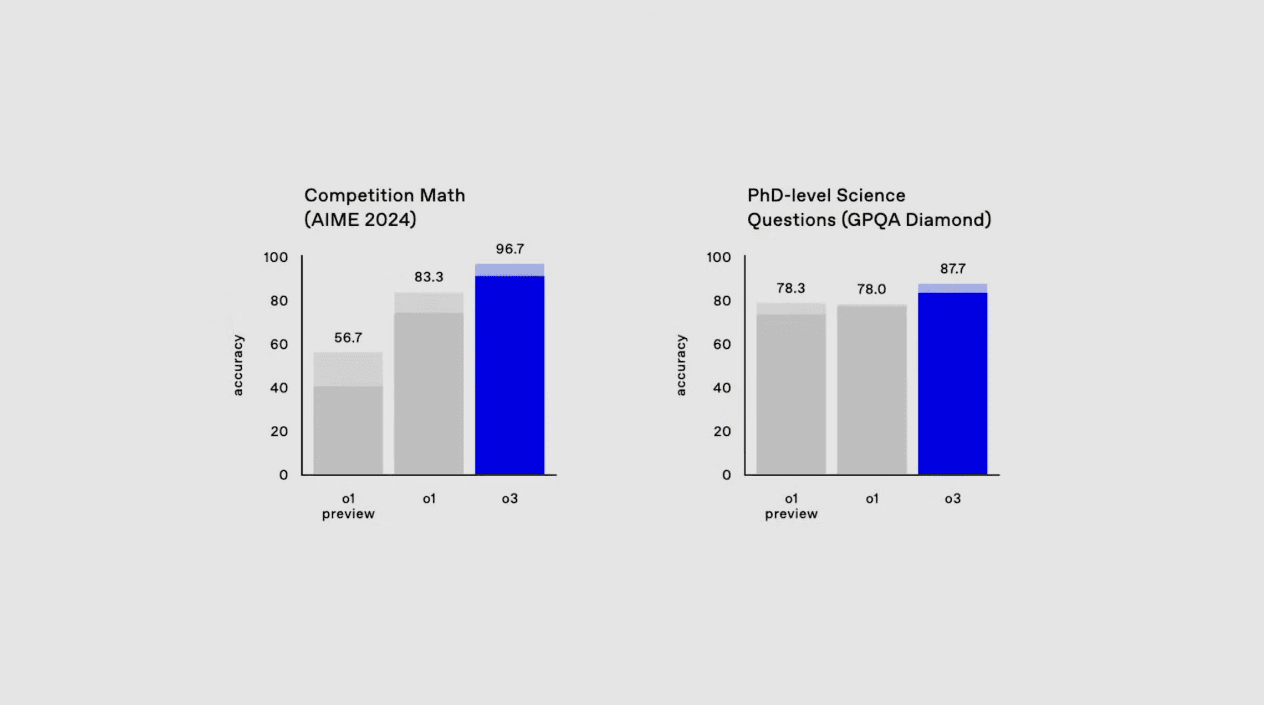

The next math benchmark is even more interesting. FrontierMath, a benchmark developed by Epoch AI in collaboration with more than 60 leading mathematicians, assesses AI's ability in advanced mathematical reasoning. To avoid data contamination, all of its problems are original and have never been published before.

Previously, models such as GPT-4 and Gemini 1.5 Pro solved less than 2% of its problems, compared with success rates above 90% on traditional math benchmarks such as GSM8K and MATH. This time, o3 jumped straight to 25.2%. While the other major models are still competing on traditional math benchmarks, o3 has entered a different league.

o3 Becomes the First AI Model to Break the ARC-AGI Benchmark

The ARC Prize Foundation is a non-profit organization that aims to "be the North Star on the road to AGI through benchmarking". Its first benchmark, ARC-AGI, was proposed five years ago and had remained unbeaten ever since.

Now, Greg Kamradt of the ARC Prize Foundation has announced that o3 achieved a breakthrough score on the benchmark, making it the first AI model to break ARC-AGI.

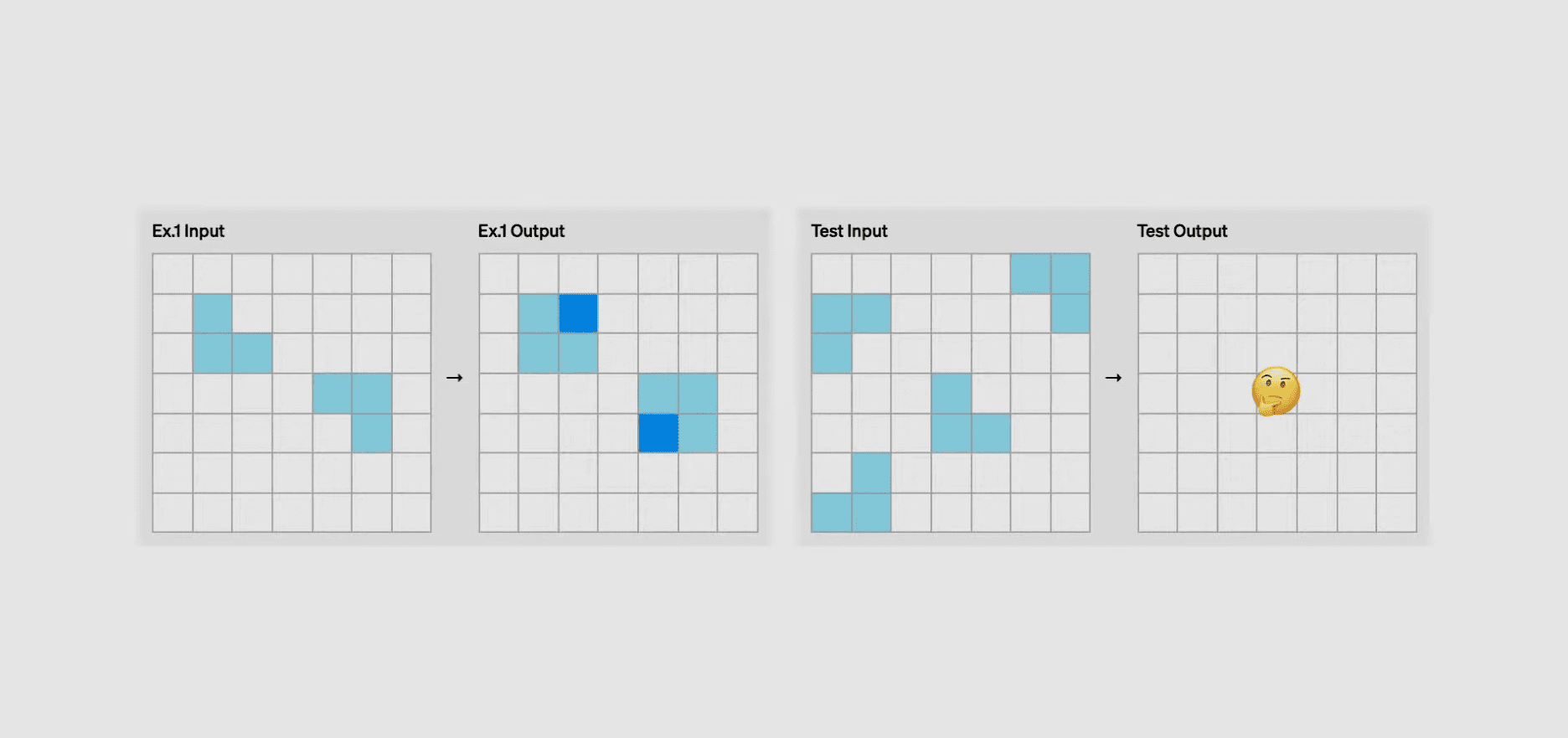

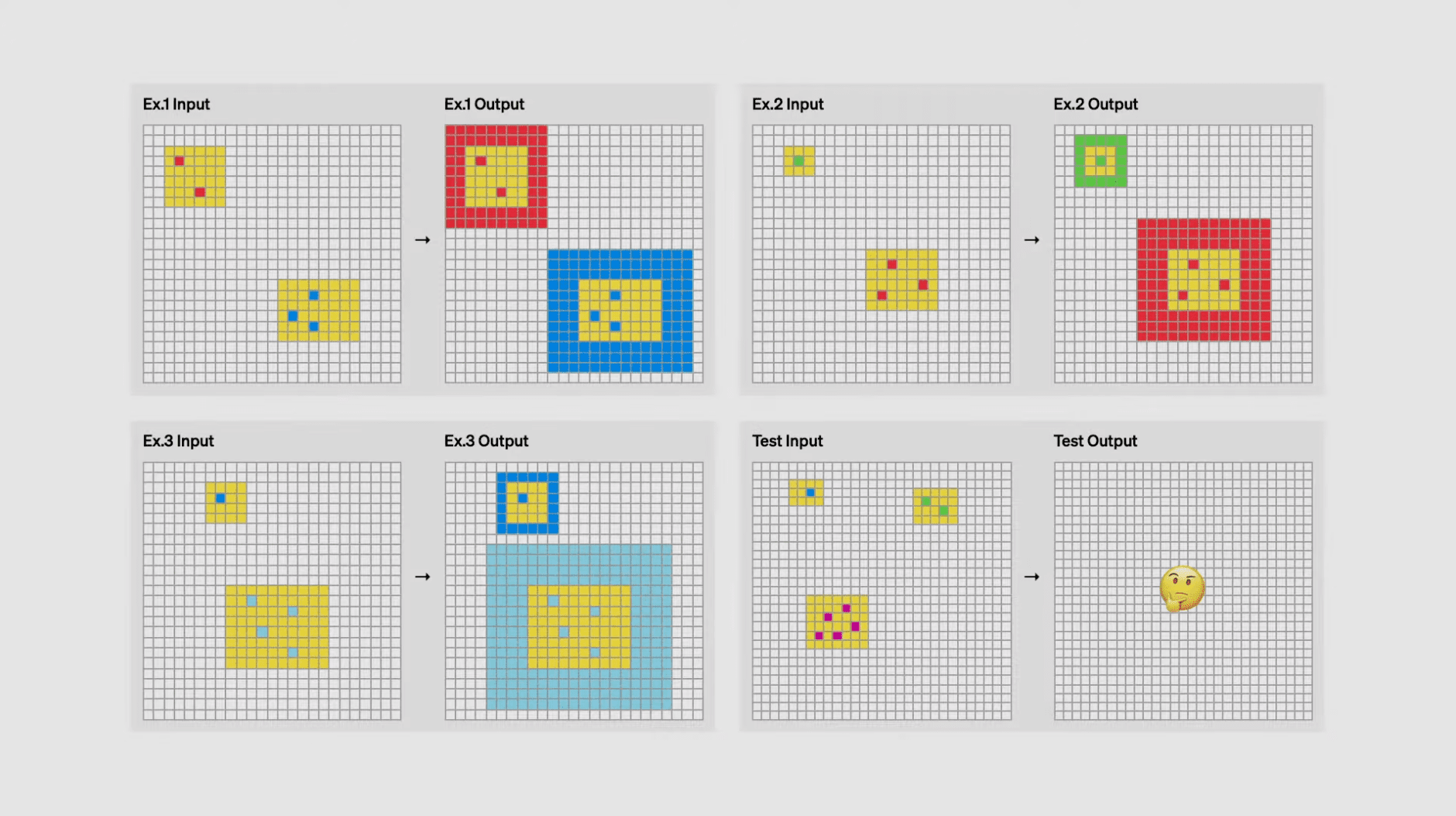

ARC-AGI, first presented in 2019, tests AI systems with a series of abstraction and reasoning tasks. The motivation is that traditional skill-based measures do not capture intelligence well, because they rely heavily on prior knowledge and experience, whereas true intelligence should show up as broad adaptability and generalization. Each ARC-AGI task consists of input-output examples, and the AI has to recognize the underlying pattern and apply it to a new problem. The tasks are presented as grids in which each cell can be one of ten colors, and grid sizes range from 1×1 to 30×30. Participants must produce the correct output for a given input, which tests their reasoning and abstraction skills. Put simply, it is about finding patterns. Here is roughly how it works:

In the ARC-AGI benchmark, the AI must infer the pattern from paired input-output examples and then predict the output for a new input. Some examples are shown in the figure below. Anyone who has sat campus recruitment tests or civil service exams will find these graphical reasoning puzzles familiar.

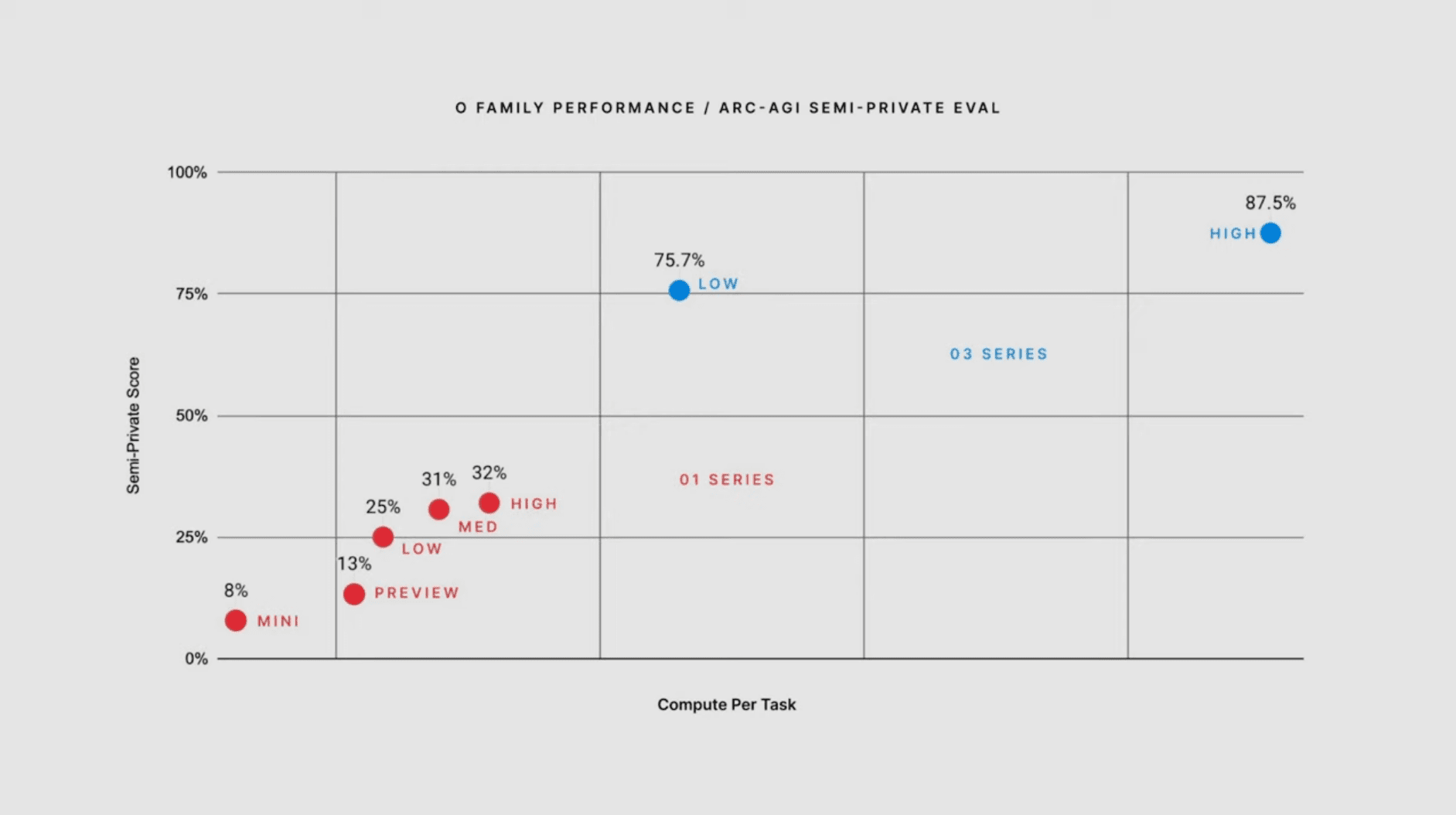
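To make the task format concrete, here is a minimal sketch of an ARC-style task and a brute-force solver. It assumes the layout of the public ARC dataset, where each task has "train" and "test" lists of {"input", "output"} grid pairs. The five candidate rules, the `solve` and `validate` helpers, and the example task are hypothetical illustrations, not how o3 or any competitive solver works; real tasks need far richer transformations.

```python
from typing import Callable, Dict, List, Optional, Tuple

# A grid is a list of rows; each cell holds a color index from 0 to 9.
Grid = List[List[int]]


def validate(grid: Grid) -> None:
    """Check the ARC-AGI grid constraints: 1x1 to 30x30, ten colors."""
    if not 1 <= len(grid) <= 30:
        raise ValueError("grid height must be between 1 and 30")
    width = len(grid[0])
    if not 1 <= width <= 30 or any(len(row) != width for row in grid):
        raise ValueError("grid must be rectangular, width between 1 and 30")
    if any(not 0 <= cell <= 9 for row in grid for cell in row):
        raise ValueError("cells must be color indices 0-9")


# Hypothetical, deliberately tiny library of candidate rules.
CANDIDATES: Dict[str, Callable[[Grid], Grid]] = {
    "identity": lambda g: [row[:] for row in g],
    "flip_horizontal": lambda g: [row[::-1] for row in g],
    "flip_vertical": lambda g: [row[:] for row in g[::-1]],
    "transpose": lambda g: [list(col) for col in zip(*g)],
    "rotate_90_clockwise": lambda g: [list(col) for col in zip(*g[::-1])],
}


def solve(task: dict) -> Optional[Tuple[str, Grid]]:
    """Return the first candidate rule consistent with every training pair,
    together with its prediction for the test input, or None if none fits."""
    for pair in task["train"]:
        validate(pair["input"])
        validate(pair["output"])
    test_input = task["test"][0]["input"]
    validate(test_input)
    for name, rule in CANDIDATES.items():
        if all(rule(p["input"]) == p["output"] for p in task["train"]):
            return name, rule(test_input)
    return None


# A made-up task whose hidden rule is "mirror each row left to right".
EXAMPLE_TASK = {
    "train": [
        {"input": [[1, 0, 0], [2, 0, 0]], "output": [[0, 0, 1], [0, 0, 2]]},
        {"input": [[3, 4]], "output": [[4, 3]]},
    ],
    "test": [{"input": [[5, 6, 7]]}],
}

print(solve(EXAMPLE_TASK))  # ('flip_horizontal', [[7, 6, 5]])
```

A rule only counts if it explains every training pair. That is what makes the benchmark about generalization: a solver cannot just memorize one example.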

These tasks are abstract and very difficult. Here are the scores of previous generations of models:

According to the reported results, o3 scores at least 75.7% on the ARC-AGI benchmark, and it reaches 87.5% when allowed to think for longer with more computational resources.

It took five whole years to go from 0% to 5%; now it has taken only six months to go from 5% to 87.5%. For comparison, the human threshold score is 85%. There is no longer any obstacle on our way to AGI.

How the o3 Model Works

At this point we can only speculate about how exactly the o3 model works. Its core mechanism seems to be natural-language program search and execution in token space: at test time, the model searches the space of possible chains of thought that describe the steps needed to solve the task, in a way that may bear some resemblance to AlphaZero-style Monte-Carlo tree search. In o3's case, the search may be guided by some kind of evaluator model. It is worth noting that DeepMind's Demis Hassabis hinted in a June 2023 interview that DeepMind had been working on this idea, so the approach has been in development for a long time.
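To illustrate what "evaluator-guided search over chains of thought" could mean, here is a toy sketch. It is speculation layered on speculation, not o3's method. It uses beam search, a simpler stand-in for Monte-Carlo tree search. The "thoughts" are hypothetical arithmetic steps and the `evaluator` is a hand-written heuristic standing in for a learned evaluator model. All names here (`STEPS`, `evaluator`, `guided_search`) are made up for illustration.

```python
from typing import Callable, Dict, List, Optional, Tuple

# Toy assumption: a "thought" is one named arithmetic step on an integer
# state, and a chain of thought is a list of such steps.
STEPS: Dict[str, Callable[[int], int]] = {
    "+3": lambda x: x + 3,
    "*2": lambda x: x * 2,
    "-1": lambda x: x - 1,
}


def evaluator(value: int, target: int) -> float:
    """Hypothetical stand-in for an evaluator model: rates how promising a
    partial chain is. Here it is simply the negative distance to the target."""
    return -abs(target - value)


def guided_search(start: int, target: int, beam_width: int = 3,
                  max_depth: int = 8) -> Optional[List[str]]:
    """Evaluator-guided beam search over chains of thought.
    Returns the first chain of steps that reaches the target, or None."""
    beam: List[Tuple[int, List[str]]] = [(start, [])]
    for _ in range(max_depth):
        candidates: List[Tuple[int, List[str]]] = []
        # Expand every chain in the beam by every possible next step.
        for value, chain in beam:
            for name, step in STEPS.items():
                new_value = step(value)
                new_chain = chain + [name]
                if new_value == target:
                    return new_chain
                candidates.append((new_value, new_chain))
        # Keep only the chains the evaluator rates most promising.
        candidates.sort(key=lambda c: evaluator(c[0], target), reverse=True)
        beam = candidates[:beam_width]
    return None


print(guided_search(1, 10))  # ['+3', '+3', '+3']
```

The design choice worth noticing is the split of roles: a generator proposes many possible next steps, and the evaluator decides which partial chains are worth extending. Replacing the beam with MCTS would add exploration statistics and backed-up value estimates, but the generator/evaluator division would stay the same.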