On August 5, 2025, OpenAI officially released two open source language models, gpt-oss-120b and gpt-oss-20b. This is the first time since GPT-2 that OpenAI has returned to open source for a large language model. The move is widely seen as a response to an increasingly competitive market, and in particular to the rise of open source players such as Meta's Llama series and Mistral AI.

Both models are released under the Apache 2.0 license, which allows developers and enterprises to freely experiment with, customize, and even commercially deploy them without worrying about copyright or patent risks.

Model Features and Deep Customization

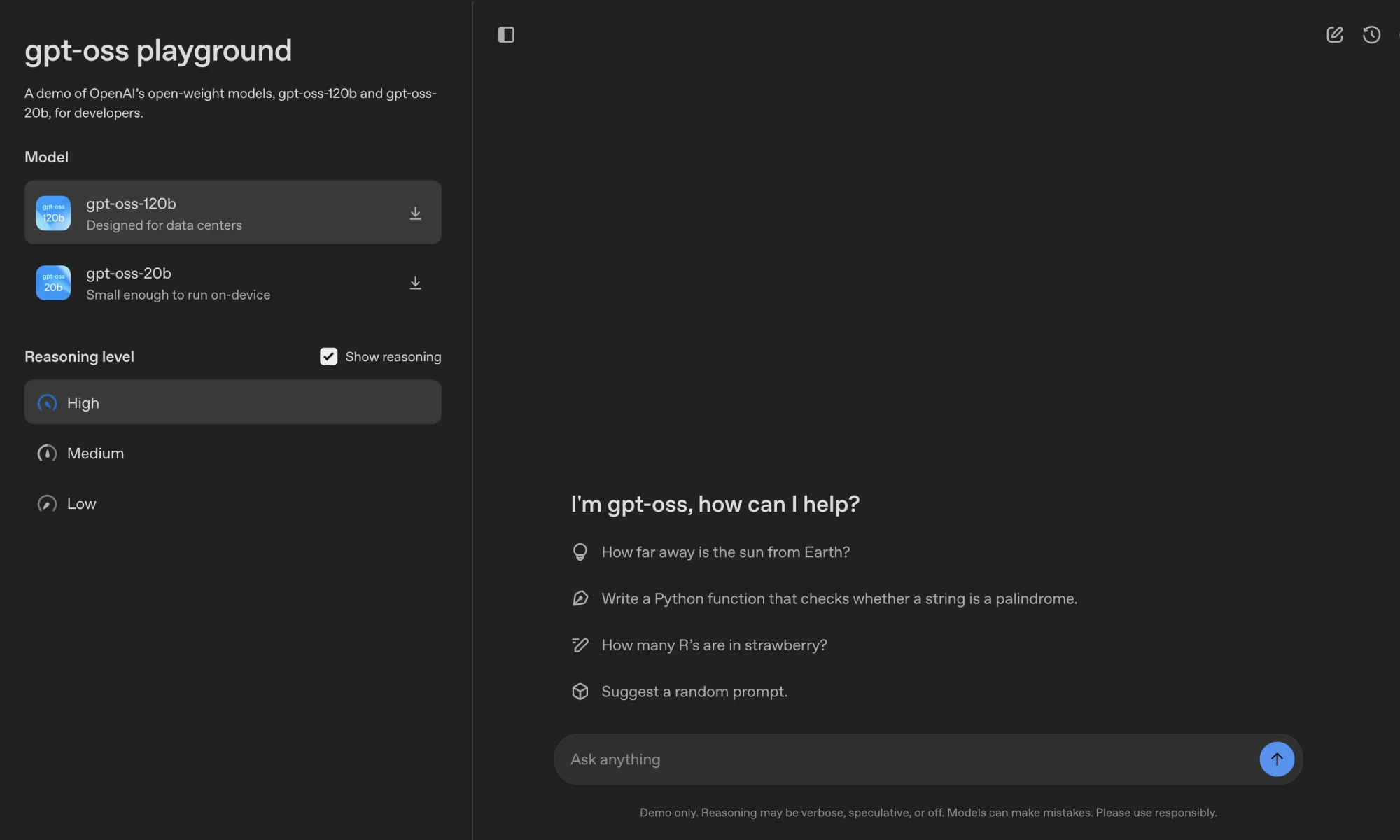

OpenAI emphasizes that the gpt-oss models are designed for agentic workflows, with strong instruction following, tool use, and reasoning capabilities. Their core features include:

- Designed for agentic tasks: The models have built-in support for tools such as web search and Python code execution, which makes them promising for building complex automated task flows.

- Deeply customizable: Users can set the models' reasoning effort to low, medium, or high according to the application scenario. The models also support full-parameter fine-tuning, leaving maximum room for customization.

- Chain of thought: OpenAI chose not to directly supervise the chain of thought (CoT) of these models, and developers can access the complete reasoning process. This facilitates debugging and improves trust in the models' outputs, and it also gives the research community a valuable opportunity to monitor and study model behavior.
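As a rough illustration of the low/medium/high setting, the sketch below builds a chat-style message list that carries the reasoning level in the system message. The helper name `build_messages` is hypothetical, and the exact `Reasoning: <level>` wording is an assumption that should be checked against the model documentation for the runtime in use.

```python
# Hypothetical helper: attaches a reasoning-level hint to a chat request.
# The "Reasoning: <level>" system-message wording is an assumption, not
# something stated in this article.
VALID_LEVELS = ("low", "medium", "high")


def build_messages(user_prompt: str, reasoning: str = "medium") -> list:
    """Return a chat-style message list with a reasoning-level hint."""
    if reasoning not in VALID_LEVELS:
        raise ValueError(f"reasoning must be one of {VALID_LEVELS}, got {reasoning!r}")
    return [
        {"role": "system", "content": f"Reasoning: {reasoning}"},
        {"role": "user", "content": user_prompt},
    ]


messages = build_messages("Explain MoE routing in two sentences.", reasoning="high")
print(messages[0]["content"])
```

The resulting list can be passed to whichever chat interface serves the model. A higher level generally trades latency for a longer reasoning trace.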

OpenAI also provides a simple online Playground where developers can try both models interactively in the browser.

Technical Architecture and Performance

The gpt-oss series uses a Transformer architecture similar to GPT-3 and adds Mixture-of-Experts (MoE) to improve efficiency. An MoE architecture reduces computational cost significantly by activating only the subset of parameters needed to process each token.
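The idea of activating only some parameters can be sketched with a generic top-k router. This is a toy illustration of MoE routing in general, not the gpt-oss implementation. The function names, the softmax-over-selected-experts gating, and the scaling "experts" are all assumptions made for the example.

```python
import math
import random


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def make_expert(scale):
    """Toy expert: multiplies every component of the token vector by `scale`."""
    return lambda vec: [scale * v for v in vec]


def moe_forward(x, router_weights, experts, top_k):
    """Route one token vector through its top_k experts only."""
    # One router logit per expert: dot product of the token with that expert's row.
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in router_weights]
    # Indices of the top_k highest-scoring experts.
    chosen = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:top_k]
    # Gate weights are normalised over the chosen experts only (an assumption).
    gates = softmax([logits[i] for i in chosen])
    out = [0.0] * len(x)
    for gate, idx in zip(gates, chosen):
        y = experts[idx](x)  # only the selected experts are evaluated
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out, chosen


random.seed(0)
dim, num_experts, top_k = 4, 8, 2
router_weights = [[random.gauss(0, 1) for _ in range(dim)] for _ in range(num_experts)]
experts = [make_expert(1.0 + i) for i in range(num_experts)]
output, chosen = moe_forward([0.5, -1.0, 0.25, 2.0], router_weights, experts, top_k)
print(f"experts evaluated: {sorted(chosen)} out of {num_experts}")
```

Only `top_k` of the expert functions run for a given token, so per-token compute scales with the number of active experts rather than the total.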

gpt-oss-120b has 117 billion total parameters, but only 5.1 billion are activated for each token. gpt-oss-20b has 21 billion total parameters and 3.6 billion active parameters.
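To put those counts in proportion, the following short calculation derives the share of parameters active per token from the figures quoted above. The dictionary layout and the `active_fraction` helper are just illustrative names.

```python
# Parameter counts in billions, as quoted in the article.
models = {
    "gpt-oss-120b": {"total_b": 117.0, "active_b": 5.1},
    "gpt-oss-20b": {"total_b": 21.0, "active_b": 3.6},
}


def active_fraction(total_b, active_b):
    """Share of parameters that are active for a single token."""
    return active_b / total_b


for name, p in models.items():
    frac = active_fraction(p["total_b"], p["active_b"])
    print(f"{name}: {frac:.1%} of parameters active per token")
# gpt-oss-120b: 4.4% of parameters active per token
# gpt-oss-20b: 17.1% of parameters active per token
```

The larger model is therefore considerably sparser per token than the smaller one.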

Both models support context lengths of up to 128k tokens and use grouped multi-query attention and rotary position embedding (RoPE) to improve inference and memory efficiency.
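For readers unfamiliar with RoPE, here is a minimal sketch of the general technique: each consecutive pair of vector components is rotated by an angle proportional to the token position. The base of 10000 and the interleaved pairing are common conventions assumed for illustration. The article does not say which variant or settings gpt-oss uses.

```python
import math


def rope(vec, position, base=10000.0):
    """Rotate consecutive pairs of `vec` by position-dependent angles."""
    d = len(vec)
    if d % 2 != 0:
        raise ValueError("vector length must be even")
    out = []
    for i in range(0, d, 2):
        # Pair i/2 rotates with frequency base^(-i/d); later pairs rotate more slowly.
        theta = position * base ** (-i / d)
        c, s = math.cos(theta), math.sin(theta)
        x0, x1 = vec[i], vec[i + 1]
        out.extend([x0 * c - x1 * s, x0 * s + x1 * c])
    return out


def dot(a, b):
    """Plain dot product of two equal-length lists."""
    return sum(x * y for x, y in zip(a, b))


q = [0.3, -1.2, 0.8, 0.5]
k = [1.0, 0.4, -0.7, 0.2]

# The attention score depends only on the relative offset between positions.
score_a = dot(rope(q, 3), rope(k, 1))    # offset 2
score_b = dot(rope(q, 10), rope(k, 8))   # offset 2 again
print(abs(score_a - score_b) < 1e-9)
```

Because the rotation encodes position directly in the query and key vectors, no separate positional embedding table is needed.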

Model Architecture Details

| Model | Layers | Total parameters | Active parameters per token | Total experts | Active experts per token | Context length |
|---|---|---|---|---|---|---|
| gpt-oss-120b | 36 | 117B | 5.1B | 128 | 4 | 128k |
| gpt-oss-20b | 24 | 21B | 3.6B | 32 | 4 | 128k |

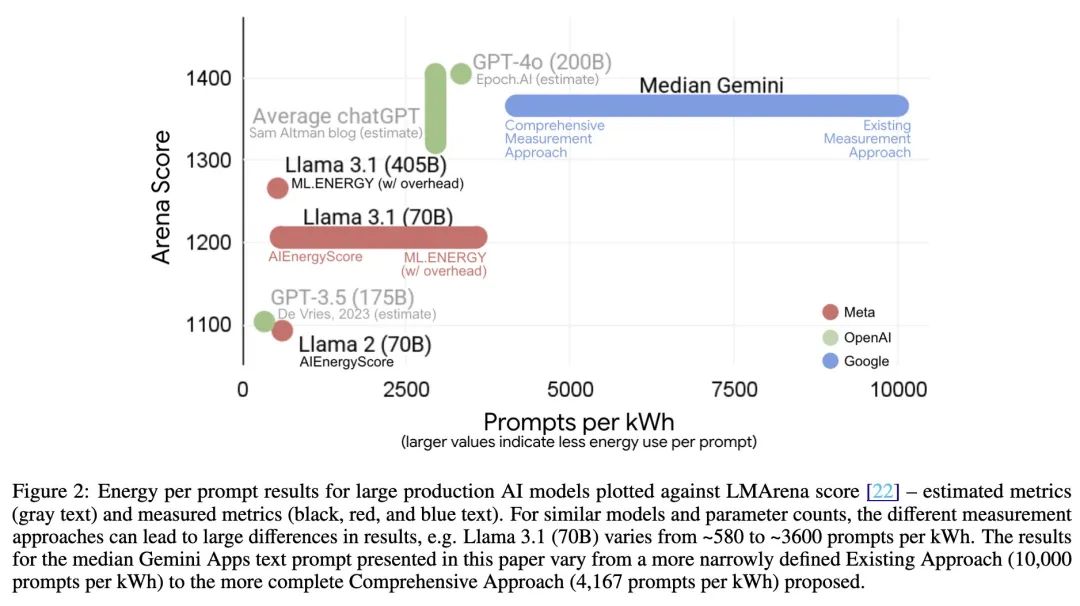

In terms of performance, benchmark data published by OpenAI shows that gpt-oss is highly competitive.

Model Performance Comparison

| Benchmark | gpt-oss-120b | gpt-oss-20b | OpenAI o3 | OpenAI o4-mini |
|---|---|---|---|---|
| **Reasoning and knowledge** | | | | |
| MMLU | 90.0 | 85.3 | 93.4 | 93.0 |
| GPQA Diamond | 80.9 | 74.2 | 77.0 | 81.4 |
| Humanity's Last Exam | 19.0 | 17.3 | 24.9 | 17.7 |
| **Competition math** | | | | |
| AIME 2024 | 96.6 | 96.0 | 91.6 | 93.4 |
| AIME 2025 | 97.9 | 98.7 | 88.9 | 92.7 |

The data shows that the flagship gpt-oss-120b holds its own against OpenAI's closed-source o4-mini on several core reasoning benchmarks and even surpasses it in specific areas such as competition math. The lightweight gpt-oss-20b performs comparably to or better than o3-mini. This makes the models more than a strong result on paper: they have the potential to challenge top closed-source models in practical applications.

Safety Standards and Ecosystem

Faced with the risk that open source models may be exploited for malicious purposes, OpenAI emphasized that safety was placed at the core of the release.

Using its internal Preparedness Framework, OpenAI rigorously tested an adversarially fine-tuned version of gpt-oss-120b and concluded that the model did not reach the "High capability" risk level. In addition, OpenAI launched a Red Teaming Challenge with a prize pool of $500,000 to encourage the community to help uncover potential safety issues.

In terms of availability, OpenAI is working with deployment platforms such as Hugging Face, Azure, and AWS, and with hardware vendors such as NVIDIA and AMD, to ensure that the models can be used widely and efficiently. gpt-oss-120b has been quantized to run on a single GPU with 80 GB of memory, while gpt-oss-20b requires only 16 GB of memory, which dramatically lowers the barrier for developers to deploy and experiment locally on consumer-grade hardware.
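A back-of-the-envelope estimate shows why quantization matters for those memory figures. The calculation below covers weight storage only. The value of 4.25 bits per parameter is an assumed figure for a 4-bit format with per-block scaling, applied uniformly to all parameters. The article does not specify the quantization scheme, and activations and the KV cache are ignored.

```python
def weight_memory_gb(total_params_billion, bits_per_param):
    """Approximate weight storage in GB (1 GB = 1e9 bytes)."""
    # billions of parameters * bits each / 8 bits per byte = billions of bytes
    return total_params_billion * bits_per_param / 8


# Total parameter counts (in billions) from the article.
totals = {"gpt-oss-120b": 117.0, "gpt-oss-20b": 21.0}

ASSUMED_QUANT_BITS = 4.25  # assumption: roughly 4-bit weights plus scaling overhead
FP16_BITS = 16.0           # unquantized half-precision baseline for comparison

for name, total_b in totals.items():
    quantized = weight_memory_gb(total_b, ASSUMED_QUANT_BITS)
    baseline = weight_memory_gb(total_b, FP16_BITS)
    print(f"{name}: about {quantized:.0f} GB quantized vs {baseline:.0f} GB at 16-bit")
```

Under these assumptions the weights come to roughly 62 GB and 11 GB, which is consistent with the 80 GB and 16 GB targets while leaving headroom for runtime state. At 16 bits per parameter, neither model would fit in its stated budget.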

Strategic Significance: Openness as a New Moat?

OpenAI's high-profile embrace of open source reflects a clear reading of the current AI landscape and a strategic adjustment to it. In the past, OpenAI relied on the performance advantage of its closed-source GPT series to achieve great commercial success. However, Meta's Llama series has shown that a strong open source model can spawn a large and vibrant developer ecosystem, which is a powerful moat in its own right.

By releasing competitive gpt-oss models, OpenAI can slow the flow of developers to competitors' open source ecosystems and can also promote its own technology stack, such as the Harmony prompt format, as an industry standard. The move is both defensive and offensive. It lets OpenAI compete on both the closed-source and open source fronts: it earns revenue by offering top-tier closed-source models through its API, while open source models let it build community, attract talent, and explore new business possibilities.

For the industry as a whole, OpenAI's entry is set to intensify competition in the open source large-model space. Developers will have more high-quality, customizable options, and standards for model performance and safety will be pushed higher. Whether this is a one-off tactical defense or a fundamental shift in OpenAI's long-term strategy will depend on its subsequent investment and on community feedback. In any case, the era of openness in AI has truly arrived.