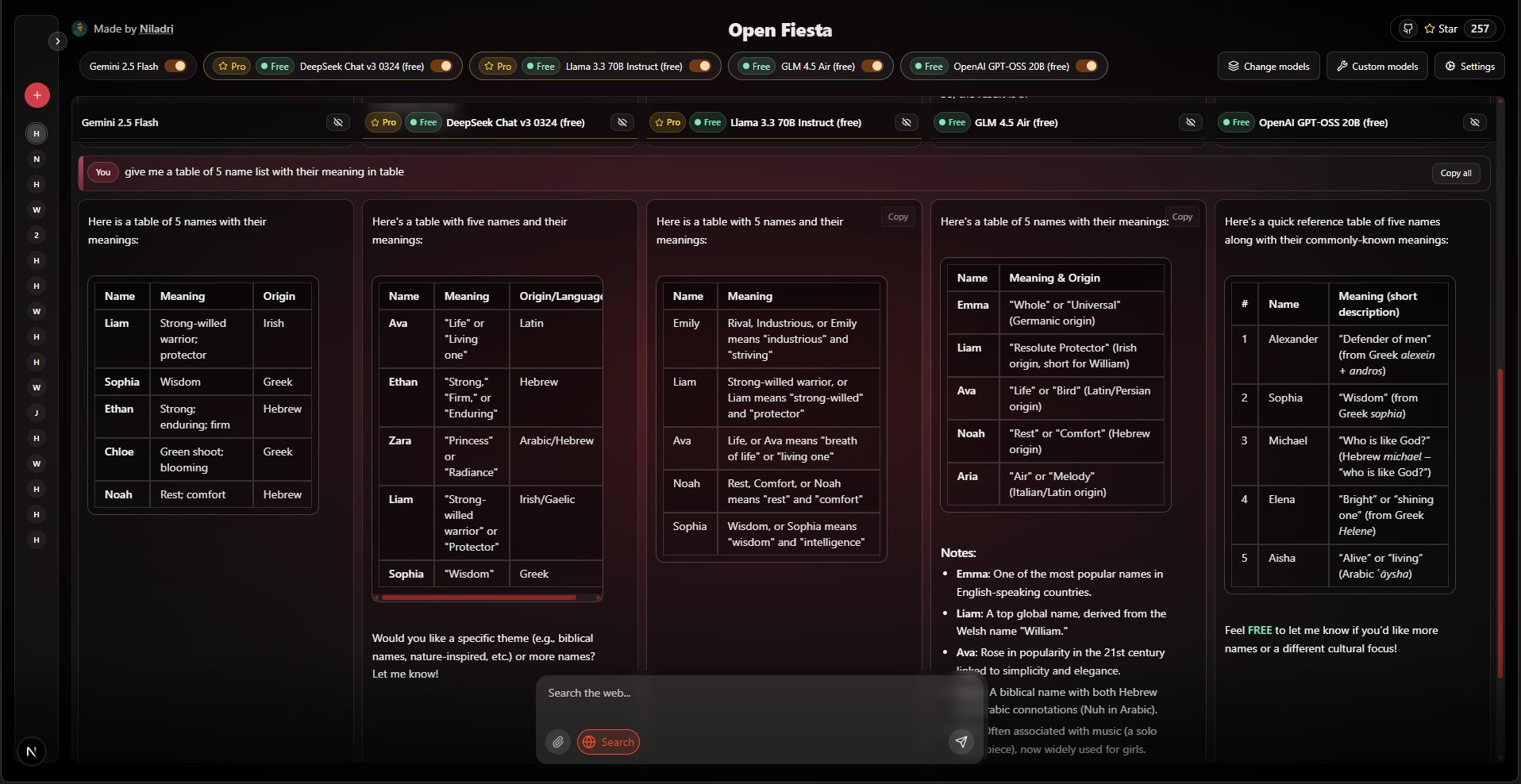

Open-Fiesta is an open-source AI chat tool that lets users talk to several large language models at once in a single interface. Users can select up to five models, send a question once, and see each model's answer side by side for direct comparison. Through the Gemini and OpenRouter platforms it supports a wide range of models, such as Llama 3.3, Qwen and Mistral. Beyond text chat, it offers web search and image upload (Gemini models only) to extend what the models can answer. The project is built with Next.js; users can deploy it themselves and start using it by entering their API keys in the settings.

Feature List

- Multi-model chat: Supports selecting up to 5 AI models in the same interface for simultaneous dialog and comparison.

- Rich model support: Through Gemini and OpenRouter, supports many mainstream and open-source models, including DeepSeek R1, Llama 3.3, Qwen, Mistral, Moonshot, Reka and Sarvam.

- Web Search: Web search can be switched on or off for each message, letting the model draw on up-to-date information from the Internet when answering.

- Image Input: When using a Gemini model, users can upload an image and ask the model questions about its content.

- Simple user interface: The interface is clean and uncluttered, lets you submit questions directly from the keyboard, and displays the models' answers smoothly as they are generated.

- API Key Management: Users can provide or modify their API key at any time in the "Settings" panel of the GUI, or configure it via the environment variable file.

- Response Formatting: Output from the DeepSeek R1 model gets special handling: reasoning tags are removed automatically and Markdown formatting is converted to plain text, making the content easier to read.

- Open source and self-deployment: The project is open source under the MIT license; users can download the source code and run it locally or on a server.
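The response-formatting step can be pictured with a small TypeScript sketch. It assumes, as is common for DeepSeek R1, that the model's reasoning arrives wrapped in `<think>...</think>` tags. The function name `cleanR1Output` and the handful of Markdown markers it strips (headings, bullets, bold, inline code) are illustrative choices, not Open-Fiesta's actual code.

```typescript
// Illustrative sketch; not taken from the Open-Fiesta source.
// Assumes DeepSeek R1 wraps its reasoning in <think>...</think> tags.
function cleanR1Output(raw: string): string {
  return (
    raw
      // Drop reasoning blocks; [\s\S] lets the match span newlines.
      .replace(/<think>[\s\S]*?<\/think>/g, "")
      // Strip heading markers such as "## ".
      .replace(/^#{1,6}[ \t]+/gm, "")
      // Strip list bullets such as "- " or "* " at the start of a line.
      .replace(/^[ \t]*[-*+][ \t]+/gm, "")
      // Unwrap **bold** text.
      .replace(/\*\*(.+?)\*\*/g, "$1")
      // Unwrap `inline code`.
      .replace(/`([^`]+)`/g, "$1")
      // Collapse runs of blank lines left behind by the removals.
      .replace(/\n{3,}/g, "\n\n")
      .trim()
  );
}

const raw = "<think>\nThe user wants a capital city.\n</think>\n## Answer\n**Paris** is the capital of France.";
console.log(cleanR1Output(raw));
// Answer
// Paris is the capital of France.
```

Single-asterisk italics and underscores are deliberately left alone here, since stripping them naively would also mangle arithmetic like `2 * 3 * 4` or identifiers like `snake_case`.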

How to Use

Open-Fiesta is a web application that you can use directly through the URL provided by the developer, or you can download the source code and deploy it to your own computer or server. Below is the detailed usage and deployment process.

Local Deployment Process

If you want to run Open-Fiesta on your own computer, you need to install the Node.js environment first and then follow these steps:

- Download the code

Open a terminal (command-line tool) and use the `git` command to clone the project to your computer:

`git clone https://github.com/NiladriHazra/Open-Fiesta.git`

Then change into the project directory:

`cd Open-Fiesta`

- Install dependencies

In the project root directory, run the following command to install all the packages the project needs:

`npm i`

- Configure the API keys

The project needs an API key to connect to the large-model services. Store your keys in a local environment-variable file: in the project root directory, create a file named `.env.local`, then add the lines below depending on which models you want to use.

If you want to use models from the OpenRouter platform (recommended, as it offers a wide range of free and paid models), get an API key from https://openrouter.ai and add it to the file:

`OPENROUTER_API_KEY=your_OpenRouter_key`

If you want to use Google's Gemini models (which support image input), get an API key from Google AI Studio and add it to the file:

`GOOGLE_GENERATIVE_AI_API_KEY=your_Gemini_key`

You can configure both keys or just one.

- Start the development server

After completing the steps above, start the project with:

`npm run dev`

The terminal will report that the application has started and show a local URL, usually `http://localhost:3000`.

- Start using it

Open `http://localhost:3000` in your browser to start using your own deployment of Open-Fiesta.
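The two variables in `.env.local` decide which model families are available. The TypeScript sketch below illustrates that idea; `parseEnvFile` and `configuredProviders` are hypothetical helpers, not part of Open-Fiesta. In a real Next.js app you would not parse the file yourself, because Next.js loads `.env.local` into `process.env` on the server, so `process.env` could be passed to `configuredProviders` directly.

```typescript
// Hypothetical helpers for illustration; not part of the Open-Fiesta source.
type Env = Record<string, string | undefined>;

// Parses simple KEY=VALUE lines, skipping blank lines and # comments.
// Quoted values and variable expansion are not handled in this sketch.
function parseEnvFile(text: string): Env {
  const env: Env = {};
  for (const rawLine of text.split(/\r?\n/)) {
    const line = rawLine.trim();
    if (line === "" || line.startsWith("#")) continue;
    const eq = line.indexOf("=");
    if (eq <= 0) continue; // not a KEY=VALUE line
    env[line.slice(0, eq).trim()] = line.slice(eq + 1).trim();
  }
  return env;
}

// Reports which providers have a non-empty key; either or both may be set.
function configuredProviders(env: Env): Array<"openrouter" | "gemini"> {
  const providers: Array<"openrouter" | "gemini"> = [];
  if (env.OPENROUTER_API_KEY?.trim()) providers.push("openrouter");
  if (env.GOOGLE_GENERATIVE_AI_API_KEY?.trim()) providers.push("gemini");
  return providers;
}

const sampleEnv = parseEnvFile("OPENROUTER_API_KEY=sk-or-example\n# Gemini not configured\n");
console.log(configuredProviders(sampleEnv)); // [ 'openrouter' ]
```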

Feature Guide

When you open the Open-Fiesta interface, you will see a very clean chat window.

- Select models

At the top of the chat interface is the model selection area. Click it to expand a list of the models supported through OpenRouter and Gemini, and check up to 5 models you want to talk to at the same time.

- Send a message

Type your question or instruction in the input box at the bottom, then press Enter to send it.

- Use web search

Next to the input box is a "Web Search" switch. If you want the models to search the web before answering, turn it on before sending your question. This is especially useful for questions that need up-to-date information or fact-checking.

- Upload images (Gemini only)

If your selection includes a Gemini model, an image upload button also appears next to the input box. Click it to choose an image from your computer; after it uploads, send your question and the Gemini model will answer based on the image. For example, you could upload a photo of a dish and ask "What is this dish?".

- View and compare answers

After you send a question, all the models you selected start generating answers at the same time. Each answer appears in its own card, side by side, so you can directly compare how the models differ and where each is stronger or weaker.

- Configure keys at runtime

If you deployed without a `.env.local` file, or want to use a different API key temporarily, click the "Settings" button in the interface and enter your OpenRouter or Gemini API key in the panel that opens. A key entered here is valid only for the current browser session; you will need to enter it again after closing the page.
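Sending one question to several models and filling one card per model amounts to a concurrent fan-out. The TypeScript sketch below assumes a pluggable `SendFn` (a hypothetical name) so the logic can be exercised without network access; `openRouterSender` shows one way to back it with OpenRouter's OpenAI-compatible `/api/v1/chat/completions` endpoint. It is non-streaming for brevity and is not Open-Fiesta's actual implementation.

```typescript
// Hypothetical sketch of the multi-model fan-out; not Open-Fiesta's code.
const MAX_MODELS = 5;

type SendFn = (model: string, prompt: string) => Promise<string>;

interface ModelAnswer {
  model: string;
  ok: boolean;
  text: string; // the answer, or the error message if the call failed
}

// Sends the same prompt to every selected model concurrently.
async function askAll(models: string[], prompt: string, send: SendFn): Promise<ModelAnswer[]> {
  if (models.length === 0 || models.length > MAX_MODELS) {
    throw new Error(`Select between 1 and ${MAX_MODELS} models`);
  }
  const settled = await Promise.allSettled(models.map((m) => send(m, prompt)));
  return settled.map((r, i): ModelAnswer =>
    r.status === "fulfilled"
      ? { model: models[i], ok: true, text: r.value }
      : { model: models[i], ok: false, text: String(r.reason) },
  );
}

// A SendFn backed by OpenRouter's chat completions endpoint (non-streaming).
function openRouterSender(apiKey: string): SendFn {
  return async (model, prompt) => {
    const res = await fetch("https://openrouter.ai/api/v1/chat/completions", {
      method: "POST",
      headers: {
        Authorization: `Bearer ${apiKey}`,
        "Content-Type": "application/json",
      },
      body: JSON.stringify({ model, messages: [{ role: "user", content: prompt }] }),
    });
    if (!res.ok) throw new Error(`${model}: HTTP ${res.status}`);
    const data = (await res.json()) as { choices: { message: { content: string } }[] };
    return data.choices[0].message.content;
  };
}

// Usage (needs a real key and network access):
// askAll(["deepseek/deepseek-r1", "meta-llama/llama-3.3-70b-instruct"], "What is a CRDT?", openRouterSender(key))
//   .then((answers) => answers.forEach((a) => console.log(a.model, a.text)));
```

`Promise.allSettled` is used rather than `Promise.all` so that one model failing (for example, a rate-limited free model) still leaves the other cards populated.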

Application Scenarios

- Content creators

Content creators such as bloggers or marketers can give the same topic or instructions to several AI models at once and quickly get drafts in multiple styles and from different perspectives. This helps them compare the models' writing abilities and pick or combine the best material, greatly improving creative efficiency.

- Developers and researchers

Developers writing code or solving technical problems can put the same question to several models and compare the code samples, solutions or debugging suggestions they return. Researchers can use the tool to compare different models' depth of knowledge and reasoning ability in specific fields to inform their work.

- Students and educators

Students learning complex concepts or writing papers can get different explanations and perspectives from several AI "tutors" to deepen their understanding. They can ask the models to explain a scientific principle, give context for a historical event, or sketch an outline for a paper, then compare the results for a fuller picture.

- Curious everyday users

For anyone interested in AI, this tool is an excellent playground. Users can ask anything they like, from everyday tasks ("Help me plan a weekend trip") to imaginative prompts ("Write a short story about a space adventure"), getting an intuitive feel for each model's "personality" and abilities and satisfying their curiosity.

Q&A

- Is this program free?

The project itself is open source and free to download and deploy. However, it calls third-party AI model services (such as OpenRouter or Google Gemini), which may charge fees. That said, OpenRouter also offers many free models, with an allowance sufficient for everyday use.

- Is my API key secure?

If you deploy locally, your API key is stored only on your own computer (in the `.env.local` file), so it stays secure. If you enter the key in the settings of the web version, it is likewise stored only in your browser and is not uploaded to the server.

- Why are some models' answers of low quality?

Open-Fiesta is only an interface for calling different models; answer quality depends entirely on the capabilities of the model you choose. Each model has its own strengths, weaknesses and areas of specialization, so try several to find the one that best suits your needs.

- Is it possible to add more models?

Yes. If you are a developer, you can edit the model configuration file in the `lib/` directory and add any new model supported by OpenRouter, following the existing format.
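The exact format of the model list in `lib/` is not described here, so the TypeScript sketch below invents a plausible shape to show the idea; `ModelOption`, `MODELS` and `addModel` are all hypothetical. Each entry pairs a provider model ID with a display label and capability flags, and adding a model means appending one more entry. The IDs are shown for illustration; check the real file and OpenRouter's model list for the actual format and current IDs.

```typescript
// Hypothetical model-list shape; the real configuration file in lib/ may differ.
interface ModelOption {
  id: string; // provider model ID, e.g. an OpenRouter slug "vendor/model"
  label: string; // name shown in the model picker
  provider: "openrouter" | "gemini";
  supportsImages: boolean; // in Open-Fiesta's UI, image upload is offered for Gemini only
}

const MODELS: ModelOption[] = [
  { id: "deepseek/deepseek-r1", label: "DeepSeek R1", provider: "openrouter", supportsImages: false },
  { id: "meta-llama/llama-3.3-70b-instruct", label: "Llama 3.3 70B", provider: "openrouter", supportsImages: false },
];

// Returns a new list with the entry appended, refusing duplicate IDs.
function addModel(list: readonly ModelOption[], entry: ModelOption): ModelOption[] {
  if (list.some((m) => m.id === entry.id)) {
    throw new Error(`Model ${entry.id} is already configured`);
  }
  return [...list, entry];
}

// Example: add another OpenRouter model (ID shown for illustration).
const updatedModels = addModel(MODELS, {
  id: "mistralai/mistral-7b-instruct",
  label: "Mistral 7B Instruct",
  provider: "openrouter",
  supportsImages: false,
});
console.log(updatedModels.length); // 3
```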