One Balance is an open source tool built on Cloudflare AI Gateway that helps developers manage multiple AI API keys efficiently. It distributes API requests across keys through intelligent rotation and health checks, makes the most of each key's quota, and reduces the risk of keys being banned. It deploys quickly to Cloudflare Workers and supports Google AI Studio, OpenAI, and other AI providers. The project is open source on GitHub and simple to configure, making it suitable for individual developers and teams.

Feature List

- Forwards API requests through Cloudflare AI Gateway, keeping the underlying keys protected.

- Rotates intelligently across multiple API keys, automatically distributing requests to make full use of the available quota.

- Supports model-level rate limiting: it identifies exactly which model has exceeded its limit and temporarily blocks that model.

- Automatically creates and manages a D1 database to store key status.

- Provides a unified API endpoint compatible with a variety of AI providers.

- Deploys to Cloudflare Workers with one click, generating a dedicated Worker URL.

- Handles errors intelligently, distinguishing per-minute from per-day quota errors and applying the matching cooldown automatically.
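The rotation and model-level rate limiting listed above can be pictured with a small in-memory model. This is a hypothetical sketch, not One Balance's code. The `ApiKey`/`KeyPool` names, the per-model cooldown map, and round-robin selection are assumptions chosen to illustrate the idea, and the real tool stores key state in D1 rather than in memory.

```typescript
// Hypothetical in-memory model of a key pool; One Balance itself keeps key state in D1.
type KeyStatus = "active" | "blocked";

interface ApiKey {
  value: string;
  status: KeyStatus;
  // model name -> epoch milliseconds until which that model is cooling down on this key
  modelCoolingUntil: Map<string, number>;
}

// Helper that creates a fresh, active key with no cooldowns
function makeKey(value: string): ApiKey {
  return { value, status: "active", modelCoolingUntil: new Map<string, number>() };
}

class KeyPool {
  private readonly keys: ApiKey[];
  private cursor = 0; // index where the next round-robin scan starts

  constructor(keys: ApiKey[]) {
    this.keys = keys;
  }

  // Round-robin: scan from the cursor and return the first key that is active
  // and not cooling down for this model; undefined if no key qualifies.
  pick(model: string, now: number = Date.now()): ApiKey | undefined {
    for (let i = 0; i < this.keys.length; i++) {
      const idx = (this.cursor + i) % this.keys.length;
      const key = this.keys[idx];
      const coolingUntil = key.modelCoolingUntil.get(model) ?? 0;
      if (key.status === "active" && coolingUntil <= now) {
        this.cursor = (idx + 1) % this.keys.length; // next scan starts after this key
        return key;
      }
    }
    return undefined;
  }

  // Model-level limiting: pause only this (key, model) pair, so the key
  // keeps serving requests for other models.
  coolDown(key: ApiKey, model: string, ms: number, now: number = Date.now()): void {
    key.modelCoolingUntil.set(model, now + ms);
  }
}
```

With three fresh keys `k1`, `k2`, `k3`, successive `pool.pick("gemini-2.5-pro")` calls return them in turn. After `coolDown` on one key for that model, the key is skipped for that model until the cooldown expires, but it still serves other models.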

Usage Guide

Installation Process

One Balance is deployed as a Cloudflare Worker. The process is simple but requires basic technical knowledge. The detailed steps are below:

- Clone the project repository

Open a terminal and run the following commands to clone the One Balance repository:

git clone https://github.com/glidea/one-balance.git
cd one-balance

- Install dependencies

Use pnpm to install the project's dependencies:

pnpm install

- Configure the authorization key

Set the environment variable AUTH_KEY, which is used to validate API requests, when running the deploy command.

  - Mac/Linux: run the following command:

AUTH_KEY=your-super-secret-auth-key pnpm run deploycf

  - Windows (PowerShell): run the following command:

$env:AUTH_KEY = "your-super-secret-auth-key"; pnpm run deploycf

- Deploy to Cloudflare Workers

After running the deploy command, you need to log in with Cloudflare's wrangler tool (which must be installed in advance). If you are not logged in, follow the prompts to complete the login. The deployment automatically creates the D1 database and generates the Worker URL, for example:

https://one-balance-backend.<your-subdomain>.workers.dev

- Validate the deployment

After a successful deployment, open the generated Worker URL (e.g. https://one-balance-backend.<your-subdomain>.workers.dev). Users in mainland China may need a VPN. Use a curl command to test whether the API responds properly.
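AUTH_KEY is what the Worker uses to validate incoming API requests. Below is a minimal sketch of such a check. It assumes the key arrives as a Bearer token, as in the curl example in the Usage section. The `isAuthorized` and `constantTimeEqual` names are hypothetical, and One Balance may parse or compare the header differently.

```typescript
// Hypothetical check of an "Authorization: Bearer <token>" header against AUTH_KEY.
// `authorizationHeader` has the shape returned by Headers.get() (string or null).
function isAuthorized(authorizationHeader: string | null, authKey: string): boolean {
  if (!authorizationHeader || authKey.length === 0) return false;
  const prefix = "Bearer ";
  if (!authorizationHeader.startsWith(prefix)) return false;
  const token = authorizationHeader.slice(prefix.length).trim();
  return constantTimeEqual(token, authKey);
}

// Compares every character instead of stopping at the first mismatch, so timing
// does not reveal how much of the key was guessed. The length is still compared first.
function constantTimeEqual(a: string, b: string): boolean {
  if (a.length !== b.length) return false;
  let diff = 0;
  for (let i = 0; i < a.length; i++) {
    diff |= a.charCodeAt(i) ^ b.charCodeAt(i);
  }
  return diff === 0;
}
```

Rejecting an empty AUTH_KEY up front means a deployment that forgot to set the variable refuses all requests instead of accepting a bare `Bearer ` header.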

Usage

One Balance proxies API requests through Cloudflare AI Gateway to services such as Google AI Studio and OpenAI. Here's how to use it:

- Send API requests

Send requests to the generated Worker URL. For example, the request URL for the Google Gemini 2.5 Pro model is:

https://one-balance-backend.<your-subdomain>.workers.dev/api/google-ai-studio/v1beta/models/gemini-2.5-pro:generateContent

The request must carry the AUTH_KEY in its Authorization header, for example:

curl -X POST \
  -H "Authorization: Bearer your-super-secret-auth-key" \
  -H "Content-Type: application/json" \
  -d '{"contents": [{"parts": [{"text": "Hello"}]}]}' \
  "https://one-balance-backend.<your-subdomain>.workers.dev/api/google-ai-studio/v1beta/models/gemini-2.5-pro:generateContent"

- Manage key status

One Balance stores key status in the D1 database. The possible states are:

- Active: the key is available.

- Cooling Down: a model has hit its rate limit and is temporarily paused.

- Blocked: the key has been banned and is no longer used.

The system automatically sets the cooldown time based on per-minute or per-day quotas (such as Google AI Studio's quotas). For example, a model that hits a day-level quota is cooled down for 24 hours.

- View request logs

View the AI Gateway request logs in the Cloudflare dashboard, including success rates, error types, and more. One Balance automatically handles 429 (rate-limit) errors by switching to an available key.
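The request in the curl example can also be assembled from code. The sketch below only builds the request so that it can be inspected or handed to `fetch`. `buildGeminiRequest` and `ProxyRequest` are hypothetical names, and the Worker base URL and key are placeholders. The Bearer header follows this article's example, and the JSON body uses the public Gemini `generateContent` format.

```typescript
// Hypothetical helper: assembles a Gemini generateContent call routed through a One Balance Worker.
interface ProxyRequest {
  url: string;
  method: "POST";
  headers: Record<string, string>;
  body: string;
}

function buildGeminiRequest(
  workerBase: string, // e.g. "https://one-balance-backend.<your-subdomain>.workers.dev"
  authKey: string, // the AUTH_KEY set at deploy time
  model: string, // e.g. "gemini-2.5-pro"
  prompt: string,
): ProxyRequest {
  const base = workerBase.replace(/\/+$/, ""); // tolerate a trailing slash
  return {
    url: `${base}/api/google-ai-studio/v1beta/models/${model}:generateContent`,
    method: "POST",
    headers: {
      "Authorization": `Bearer ${authKey}`,
      "Content-Type": "application/json",
    },
    // Gemini REST request body: a list of contents, each made of text parts
    body: JSON.stringify({ contents: [{ parts: [{ text: prompt }] }] }),
  };
}
```

In a Worker or in Node 18+, `const r = buildGeminiRequest(base, key, "gemini-2.5-pro", "Hello")` followed by `await fetch(r.url, { method: r.method, headers: r.headers, body: r.body })` sends it.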

Featured Functions

- Cloudflare AI Gateway forwarding: requests are proxied through the Gateway, hiding the original keys and reducing the risk of bans.

- Intelligent rotation: an available key is selected automatically for each request, with no manual management needed.

- Model-level rate limiting: when a model hits its rate limit, the system pauses requests to that model and switches to other available models or keys.

- Rapid deployment: deploy to Cloudflare Workers with a single click, get the Worker URL, and you're ready to go.

- Error handling: quota errors are recognized, and the affected key is cooled down and swapped out automatically to keep the service running.
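The error handling listed above amounts to classifying each upstream failure and choosing an action. The hypothetical classifier below illustrates this, but its rules are assumptions rather than One Balance's actual logic:

- A 429 whose body mentions `PerDay` is treated as a day-level quota and cooled for the 24 hours given in the Usage section.
- Any other 429 is treated as a per-minute limit, with an assumed 60-second cooldown.
- A 401/403 marks the key as blocked.
- Anything else simply moves on to another key.

```typescript
// Hypothetical classifier from an upstream response to an action on the key/model.
type KeyAction =
  | { kind: "ok" }
  | { kind: "cool-model"; ms: number } // pause this model on this key
  | { kind: "block-key" } // stop using the key entirely
  | { kind: "retry-other-key" }; // not the key's fault; try another

const MINUTE_COOLDOWN_MS = 60 * 1000; // assumed per-minute cooldown
const DAY_COOLDOWN_MS = 24 * 60 * 60 * 1000; // 24 hours for day-level quotas

function classify(status: number, body: string): KeyAction {
  if (status >= 200 && status < 300) return { kind: "ok" };
  // Assumption: authentication failures mean the key itself is unusable
  if (status === 401 || status === 403) return { kind: "block-key" };
  if (status === 429) {
    // Assumption: day-level quota errors name the window (e.g. "...PerDay...")
    if (/PerDay/i.test(body)) return { kind: "cool-model", ms: DAY_COOLDOWN_MS };
    return { kind: "cool-model", ms: MINUTE_COOLDOWN_MS };
  }
  // Other failures (e.g. 5xx) are not blamed on the key
  return { kind: "retry-other-key" };
}
```

Keeping the decision in a pure function makes the rules easy to test and to adjust when a provider changes its error format.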

Caveats

- Make sure you have network access to Cloudflare services; users in mainland China may need a VPN.

- Don't share your AUTH_KEY; shared use can confuse request tracking and trigger rate limiting.

- Log in to the Cloudflare dashboard periodically to update keys or adjust quota policies.

Application Scenarios

- Efficient key management for AI developers

Developers who hold multiple AI API keys need to make the most of their quotas. One Balance simplifies management by distributing requests intelligently through Cloudflare AI Gateway.

- High availability for enterprise AI applications

Enterprises building chatbots or content-generation tools need stable API calls. One Balance's key rotation and error handling help prevent service outages.

- Open source community collaboration

Open source project teams can share a key pool through One Balance, reducing the risk of bans and improving development efficiency.

FAQ

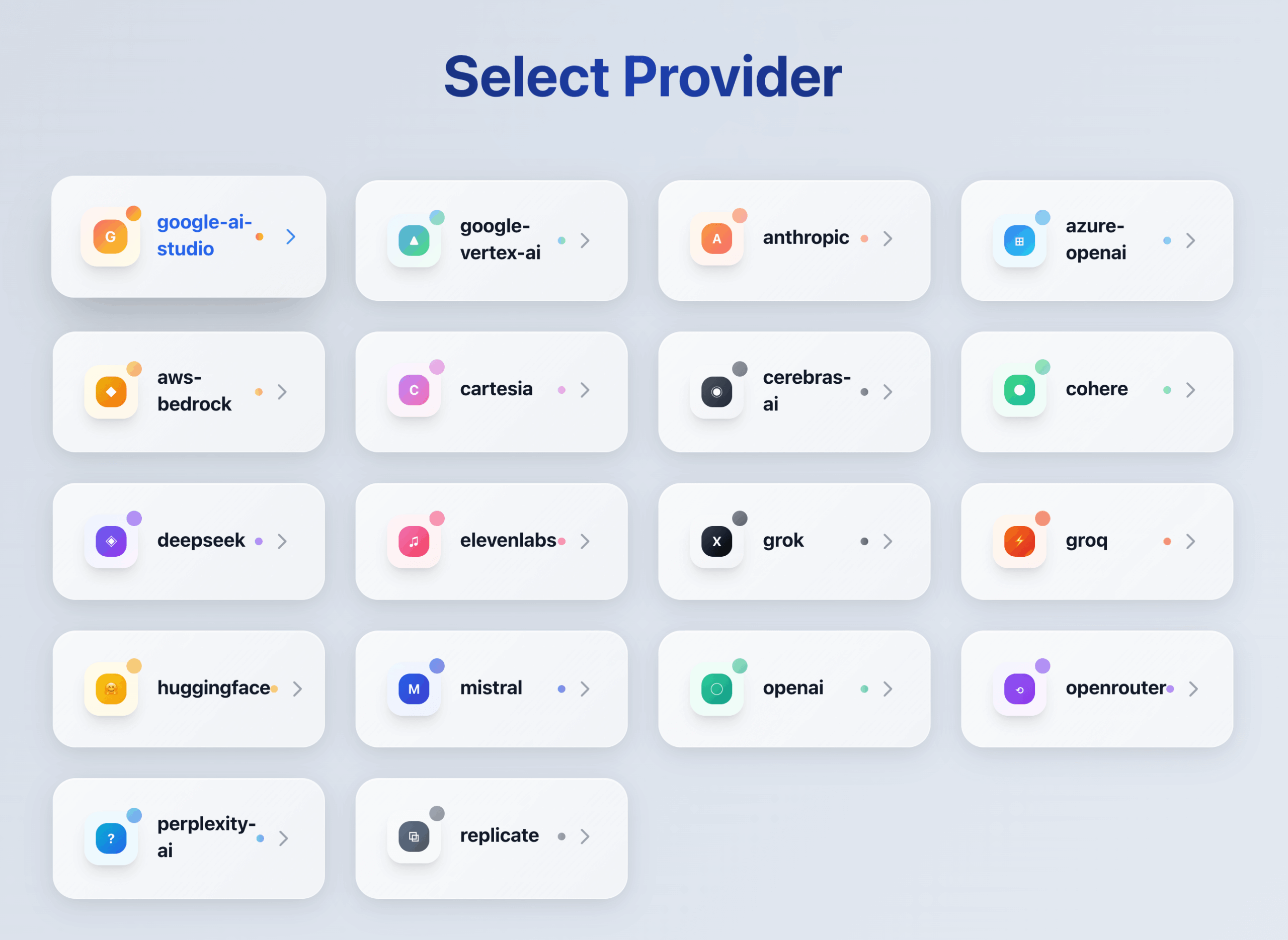

- What AI providers does One Balance support?

It supports Google AI Studio, OpenAI, and others; see the Cloudflare AI Gateway documentation (https://developers.cloudflare.com/ai-gateway/providers).

- How can I reduce the risk of keys being banned?

Proxy requests through Cloudflare AI Gateway to hide key information, avoid sharing AUTH_KEY, and check quotas regularly.

- How do I handle a failed deployment?

Confirm that wrangler is logged in and check the AUTH_KEY setting. Check the terminal logs or GitHub Issues for help.

- How do I add support for other AI providers?

Modify the request-forwarding logic, referring to the Cloudflare AI Gateway documentation for the new provider's API format.
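Cloudflare AI Gateway exposes each provider under `https://gateway.ai.cloudflare.com/v1/<account_id>/<gateway_id>/<provider>/...`. This sketch assumes that One Balance's `/api/<provider>/...` routes (as in the Worker URL shown in Usage) map onto that pattern. Under that assumption, adding a provider could mean extending an allow-list like the one below. `toGatewayUrl` and `SUPPORTED_PROVIDERS` are hypothetical names, the account and gateway IDs are placeholders, and the real forwarding code may differ.

```typescript
// Hypothetical allow-list of AI Gateway provider slugs; extend it to add a provider.
const SUPPORTED_PROVIDERS = new Set<string>(["google-ai-studio", "openai"]);

// Rewrites a Worker path "/api/<provider>/<rest>" to the AI Gateway endpoint,
// or returns null if the path is not an API route or the provider is unknown.
function toGatewayUrl(path: string, accountId: string, gatewayId: string): string | null {
  const match = /^\/api\/([^/]+)\/(.+)$/.exec(path);
  if (!match) return null;
  const provider = match[1] ?? "";
  const rest = match[2] ?? "";
  if (!SUPPORTED_PROVIDERS.has(provider)) return null;
  return `https://gateway.ai.cloudflare.com/v1/${accountId}/${gatewayId}/${provider}/${rest}`;
}
```

Because the provider slug is passed through unchanged, the new provider's own path format (for example OpenAI's `chat/completions`) only needs to match what the AI Gateway documentation lists for it.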