On July 30th, local time, Ollama, a popular runtime framework that lets developers run large language models (LLMs) locally, officially unveiled new desktop applications for macOS and Windows. The move marks a key step in Ollama's evolution from a command-line tool for developers into a graphical platform that ordinary users can pick up easily, dramatically lowering the barrier to deploying and using LLMs on a personal computer.

For users who value data privacy, offline access, and low cost, running an LLM locally has long been the ideal choice. However, the complexity of configuring the environment and working on the command line has often been prohibitive. Ollama's new desktop app is designed to address this pain point.

Simplified model interaction experience

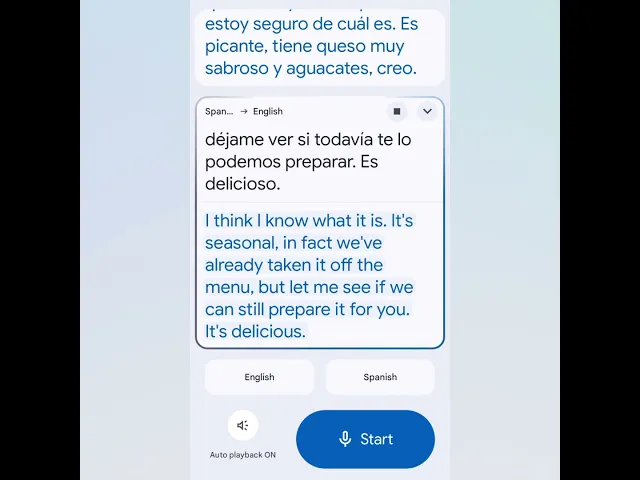

The new application provides an intuitive chat interface that lets users talk to a variety of locally running open-source models much as they would with an online chatbot. Everything from downloading new models to switching between them can be done in the graphical interface, with no need for cumbersome terminal commands.

Powerful document and multimodal processing

One highlight of the new app is its file handling. Users can now drag and drop text files, PDFs, or even code files directly into the chat window and have the model analyze or summarize them, or answer questions based on their contents. This is especially convenient for working through long reports, reading papers, or understanding complex codebases.

To process documents that contain a lot of information, users can increase the model's context length in the settings. Note that a longer context length consumes more of the computer's memory.
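Why a longer context costs more memory can be sketched with the standard key-value (KV) cache estimate for transformer models: during generation, every layer keeps a key vector and a value vector for each token in the context, so the cache grows linearly with context length. The Python sketch below is illustrative only. The model dimensions (32 layers, 8 key-value heads, head size 128, 16-bit values) are hypothetical and not taken from any particular model, and the estimate ignores model weights, runtime overhead, and any KV-cache quantization a runtime such as Ollama may apply.

```python
def kv_cache_bytes(num_layers: int, num_kv_heads: int, head_dim: int,
                   context_length: int, bytes_per_value: int = 2) -> int:
    """Rough size of a transformer's KV cache for one sequence.

    The leading factor 2 counts one key tensor and one value tensor per layer.
    bytes_per_value=2 assumes 16-bit (fp16/bf16) cache entries.
    """
    return 2 * num_layers * num_kv_heads * head_dim * context_length * bytes_per_value


# Hypothetical model dimensions, chosen only for illustration.
LAYERS, KV_HEADS, HEAD_DIM = 32, 8, 128

for ctx in (4096, 32768):
    gib = kv_cache_bytes(LAYERS, KV_HEADS, HEAD_DIM, ctx) / 2**30
    print(f"{ctx} tokens -> {gib:.1f} GiB")
# prints:
# 4096 tokens -> 0.5 GiB
# 32768 tokens -> 4.0 GiB
```

Under these assumptions, going from 4,096 to 32,768 tokens grows the cache from 0.5 GiB to 4 GiB, which is why raising the context length should be weighed against the RAM or VRAM actually available.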

In addition, the app integrates Ollama's latest multimodal engine, which enables image input. Users can send pictures to a model that supports vision (e.g., Google DeepMind's Gemma 3) and ask questions about or discuss their content. This opens up new scenarios for local LLMs, from recognizing objects in photos to interpreting charts and other graphical data, all in a completely offline environment.
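The desktop app handles image input through its chat window, but the same capability can also be reached programmatically. The sketch below assumes the Ollama service is running locally on its default port (11434) and that a vision-capable model such as `gemma3` has already been downloaded; it sends one base64-encoded image to Ollama's `/api/chat` REST endpoint with streaming disabled. The helper names `build_image_chat_request` and `ask_about_image` are hypothetical, and error handling is omitted for brevity.

```python
import base64
import json
import urllib.request

# Assumption: Ollama is serving on its default local address.
OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"


def build_image_chat_request(model: str, question: str, image_bytes: bytes) -> dict:
    """Build a non-streaming /api/chat body carrying one base64-encoded image."""
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                "content": question,
                # Images are passed as base64 strings alongside the message text.
                "images": [base64.b64encode(image_bytes).decode("ascii")],
            }
        ],
        "stream": False,  # ask for a single JSON reply instead of a stream
    }


def ask_about_image(model: str, question: str, image_path: str,
                    url: str = OLLAMA_CHAT_URL) -> str:
    """Send an image plus a question to a local model and return its answer."""
    with open(image_path, "rb") as f:
        body = build_image_chat_request(model, question, f.read())
    req = urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        reply = json.loads(resp.read().decode("utf-8"))
    # A non-streaming chat reply carries the answer in message.content.
    return reply["message"]["content"]
```

For example, `ask_about_image("gemma3", "What objects are in this picture?", "photo.jpg")` would return the model's answer as a string, where `photo.jpg` stands in for any local image file. Building the request body in a separate function keeps the payload easy to inspect and test without a running server.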

Code assistance for developers

Software developers get equally strong support. By giving code files to the model, developers can quickly get code explanations, documentation suggestions, or refactoring proposals, which can significantly improve productivity.

How to get started

The new app for macOS and Windows is available now from the official Ollama website. For experienced users who still prefer the command line, Ollama continues to offer a standalone, command-line-only version on its GitHub releases page.