For any developer who wants to run open-source large language models locally, Ollama has become an indispensable tool. It greatly simplifies downloading, deploying, and managing models. However, model parameter counts have grown explosively. Even professional workstations cannot supply the hundreds of gigabytes of video memory (VRAM) and system memory that the largest models require, so many cutting-edge models are out of reach for most developers.

To address this core pain point, Ollama recently launched a preview of Cloud Models. The new service lets developers run these giant models on datacenter-grade hardware while interacting with them exactly as they would with a local model.

Core features: breaking through hardware limits while keeping the local experience

Ollama Cloud's design philosophy is not simply to offer a cloud API, but to integrate cloud compute seamlessly into the local workflows developers already know.

- Breaking through hardware limits: Users can now run giant models such as deepseek-v3.1:671b (671 billion parameters) or qwen3-coder:480b (480 billion parameters). The former is a powerful hybrid thinking model; the latter, developed by Alibaba, specializes in code generation and agent tasks. These models need computational resources far beyond any personal device, and Ollama Cloud removes that obstacle.

- A seamless local experience: This is Ollama Cloud's most attractive feature. Users do not need to change their existing toolchain; everything still goes through the local Ollama client. Whether you use ollama run for an interactive chat or ollama ls to list models, the experience is exactly the same as managing local models. A cloud model appears locally as a lightweight reference, or "shortcut", that takes up no disk space.

- Privacy and security first: Data privacy is a key consideration in AI applications. Ollama explicitly states that its cloud servers do not retain any user query data, so conversations and code snippets stay private.

- OpenAI API compatibility: Ollama's local service is popular for its compatibility with the OpenAI API format. Ollama Cloud inherits this feature, so existing applications and workflows that support the OpenAI API can switch seamlessly to these large cloud-hosted models.
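Because the local service accepts OpenAI-format requests, an OpenAI-style client mostly needs a different base URL. The sketch below uses only the Python standard library and assumes Ollama's OpenAI-compatible endpoint is served under http://localhost:11434/v1; the helper name build_openai_chat_request is hypothetical. It builds the request without sending it:

```python
import json
import urllib.request

# Assumption: the local Ollama service exposes its OpenAI-compatible API under /v1.
LOCAL_BASE_URL = "http://localhost:11434/v1"


def build_openai_chat_request(base_url: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-format chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = build_openai_chat_request(LOCAL_BASE_URL, "qwen3-coder:480b-cloud", "Write a haiku about GPUs.")
print(req.full_url)  # http://localhost:11434/v1/chat/completions
```

With the service running, urllib.request.urlopen(req) would send it; only the base URL ties the request to Ollama, which is what makes switching existing OpenAI-based code cheap.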

Currently available cloud models

The Ollama Cloud preview currently offers the following very large models, each distinguished by a -cloud suffix on the model name:

- qwen3-coder:480b-cloud: Alibaba's flagship model focused on code generation and agent tasks.

- deepseek-v3.1:671b-cloud: An ultra-large general-purpose model that supports hybrid thinking and non-thinking modes and excels at reasoning and coding.

- gpt-oss:120b-cloud

- gpt-oss:20b-cloud
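Since the -cloud suffix is the only thing that marks a model as cloud-hosted, a script can tell the two kinds apart from the name alone. A minimal sketch, assuming plain name:tag strings (no registry host or port) and treating a missing tag as latest; both helper names are hypothetical:

```python
from typing import Tuple


def split_model_name(model: str) -> Tuple[str, str]:
    """Split 'name:tag' into (name, tag); a missing tag defaults to 'latest'."""
    name, sep, tag = model.partition(":")
    return name, (tag if sep else "latest")


def is_cloud_model(model: str) -> bool:
    """Heuristic: a model is cloud-hosted when its tag ends in '-cloud'."""
    _, tag = split_model_name(model)
    return tag.endswith("-cloud")


for name in ["qwen3-coder:480b-cloud", "deepseek-v3.1:671b-cloud", "gpt-oss:20b"]:
    print(name, is_cloud_model(name))
```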

Quick Start Guide

Trying Ollama Cloud yourself takes only a few simple steps.

Step 1: Update Ollama

Make sure your local Ollama installation is v0.12 or later. You can download the latest version from the official website or update it with your system package manager.
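To check the v0.12 minimum from a script, you can compare version tuples. This is a sketch under assumptions: it expects `ollama --version` to print a dotted version somewhere in its output (for example "ollama version is 0.12.3"), and the helper names are hypothetical:

```python
import re
import shutil
import subprocess
from typing import Optional, Tuple

MIN_VERSION = (0, 12)  # minimum version required for cloud models


def parse_version(text: str) -> Tuple[int, ...]:
    """Extract the first dotted version number (e.g. '0.12.3') from text."""
    match = re.search(r"(\d+)\.(\d+)(?:\.(\d+))?", text)
    if match is None:
        raise ValueError(f"no version number found in {text!r}")
    return tuple(int(part) for part in match.groups() if part is not None)


def meets_minimum(version: Tuple[int, ...], minimum: Tuple[int, ...] = MIN_VERSION) -> bool:
    """Tuple comparison: (0, 12, 3) >= (0, 12) is True, (0, 11, 9) is not."""
    return version >= minimum


def installed_version() -> Optional[Tuple[int, ...]]:
    """Run `ollama --version` if the CLI is on PATH; return None otherwise."""
    if shutil.which("ollama") is None:
        return None
    result = subprocess.run(["ollama", "--version"], capture_output=True, text=True)
    # Assumption: the version may be printed on stdout or stderr, so search both.
    return parse_version(result.stdout + result.stderr)


print(meets_minimum(parse_version("ollama version is 0.12.3")))
```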

Step 2: Log in to your Ollama account

Because cloud models run on ollama.com's compute resources, you must sign in to your Ollama account to authenticate. Run the following command in a terminal:

```shell
ollama signin
```

The command guides you through completing the sign-in in your browser.

Step 3: Run the cloud model

Once you are signed in, you can run a cloud model exactly as you would a local one. For example, to start the 480-billion-parameter Qwen3-Coder model, run:

```shell
ollama run qwen3-coder:480b-cloud
```

The Ollama client automatically routes every request to the cloud; you simply wait for the model to respond.

Step 4: Manage the cloud model

Use the ollama ls command to list the models pulled locally. Note that the SIZE column for cloud models shows -, a clear sign that each one is only a reference that takes up no local storage.

```shell
% ollama ls
NAME                      ID              SIZE    MODIFIED
gpt-oss:120b-cloud        569662207105    -       5 seconds ago
qwen3-coder:480b-cloud    11483b8f8765    -       2 days ago
```
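The "-" in the SIZE column also makes cloud references easy to pick out programmatically. A minimal sketch that parses the table shown above; it assumes the four-column layout in that output and that local models print their size as two tokens (for example "4.7 GB"). The class and function names are hypothetical:

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ListedModel:
    name: str
    model_id: str
    size: str      # "-" for cloud references; assumed "<number> <unit>" for local weights
    modified: str


def parse_ollama_ls(output: str) -> List[ListedModel]:
    """Parse the table printed by `ollama ls` (assumed column layout)."""
    models = []
    for line in output.splitlines():
        tokens = line.split()
        # Skip blank lines, the shell prompt line (fewer than 4 tokens), and the header row.
        if len(tokens) < 4 or tokens[0] == "NAME":
            continue
        if tokens[2] == "-":
            size, rest = "-", tokens[3:]
        else:
            size, rest = " ".join(tokens[2:4]), tokens[4:]
        models.append(ListedModel(tokens[0], tokens[1], size, " ".join(rest)))
    return models


def cloud_references(models: List[ListedModel]) -> List[str]:
    """Names of models that are cloud references (no local size)."""
    return [m.name for m in models if m.size == "-"]


SAMPLE = """% ollama ls
NAME                      ID              SIZE    MODIFIED
gpt-oss:120b-cloud        569662207105    -       5 seconds ago
qwen3-coder:480b-cloud    11483b8f8765    -       2 days ago
"""
print(cloud_references(parse_ollama_ls(SAMPLE)))
```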

API Integration and Calls

For developers, API calls are the heart of integration. Ollama Cloud supports two ways of calling its models: through the local Ollama service acting as a proxy, or by accessing the cloud endpoint directly.

Option 1: Through the local Ollama service (proxy)

This is the simplest approach and the one recommended for existing workflows.

First, use the pull command to fetch the model reference locally:

```shell
ollama pull gpt-oss:120b-cloud
```

Then send requests to the local Ollama service (http://localhost:11434), just as you would for any local model.

Python Example

```python
import ollama

response = ollama.chat(
    model='gpt-oss:120b-cloud',
    messages=[{
        'role': 'user',
        'content': 'Why is the sky blue?',
    }],
)

print(response['message']['content'])
```

JavaScript (Node.js) Example

```javascript
import ollama from "ollama";

const response = await ollama.chat({
  model: "gpt-oss:120b-cloud",
  messages: [{ role: "user", content: "Why is the sky blue?" }],
});

console.log(response.message.content);
```

cURL Example

```shell
curl http://localhost:11434/api/chat -d '{
  "model": "gpt-oss:120b-cloud",
  "messages": [{
    "role": "user",
    "content": "Why is the sky blue?"
  }],
  "stream": false
}'
```
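The cURL call sets "stream": false to get a single JSON reply. If streaming is left on, /api/chat instead returns newline-delimited JSON objects, each carrying a fragment in message.content, with the last one marked "done": true. A minimal sketch that reassembles such a stream; the helper name collect_stream is hypothetical and the sample lines are illustrative, not captured output:

```python
import json
from typing import Iterable, Union


def collect_stream(lines: Iterable[Union[str, bytes]]) -> str:
    """Join the message fragments from a streamed /api/chat response."""
    parts = []
    for raw in lines:
        line = raw.decode("utf-8") if isinstance(raw, bytes) else raw
        line = line.strip()
        if not line:
            continue  # ignore keep-alive blank lines
        chunk = json.loads(line)
        parts.append(chunk.get("message", {}).get("content", ""))
        if chunk.get("done"):
            break  # the final object ends the reply
    return "".join(parts)


# Illustrative stream in the newline-delimited JSON shape described above.
SAMPLE_STREAM = [
    '{"model": "gpt-oss:120b-cloud", "message": {"role": "assistant", "content": "Rayleigh"}, "done": false}',
    '{"model": "gpt-oss:120b-cloud", "message": {"role": "assistant", "content": " scattering."}, "done": false}',
    '{"model": "gpt-oss:120b-cloud", "message": {"role": "assistant", "content": ""}, "done": true}',
]
print(collect_stream(SAMPLE_STREAM))
```

Against a live server, the response object returned by urllib.request.urlopen can be passed to collect_stream directly, because iterating it yields one line (as bytes) at a time.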

Option 2: Direct access to the cloud API

In some scenarios, such as in a server or cloud function, it is more convenient to call the cloud API directly.

- API endpoints:

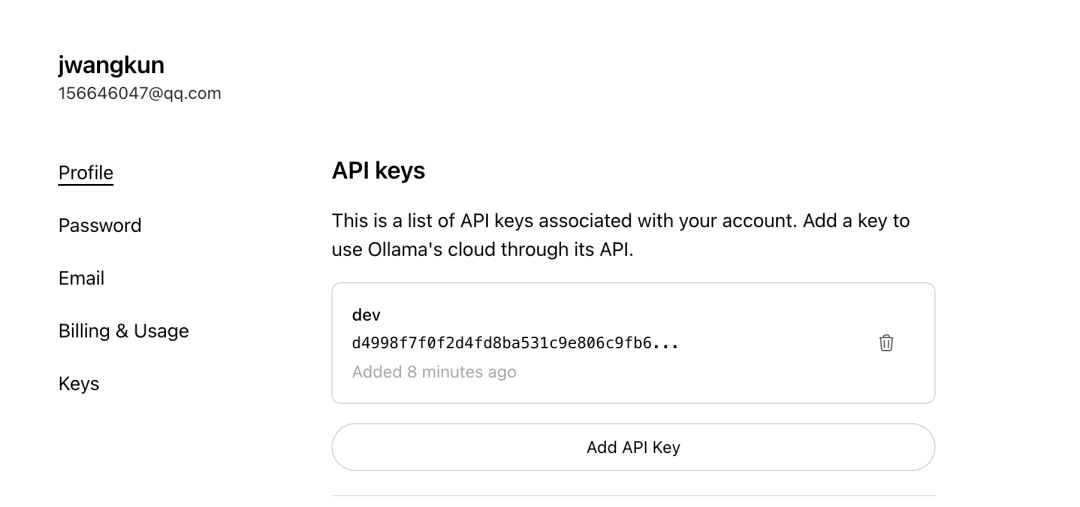

https://ollama.com/v1/chat/completions - API Key Application: A dedicated API Key is required to access this endpoint directly. users can find the API key in the

OllamaAfter logging in on the official website, go to the Keys page to generate your own key.

Direct calls to the cloud API follow the standard OpenAI format; you only need to pass your key in the Authorization header.
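A minimal standard-library sketch of a direct call follows. It assumes OpenAI-style "Authorization: Bearer <key>" authentication and an OpenAI-shaped response (choices[0].message.content). The environment variable name OLLAMA_API_KEY and the model identifier the cloud endpoint expects (shown here as gpt-oss:120b) are assumptions to check against Ollama's documentation. The helper names are hypothetical:

```python
import json
import os
import urllib.request

CLOUD_URL = "https://ollama.com/v1/chat/completions"
MODEL = "gpt-oss:120b"  # assumption: confirm the exact model name the cloud endpoint accepts


def build_cloud_request(api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) an authenticated OpenAI-format chat request."""
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        CLOUD_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",  # OpenAI-style bearer token
        },
        method="POST",
    )


def extract_reply(response_json: dict) -> str:
    """Pull the assistant text out of an OpenAI-format chat completion."""
    return response_json["choices"][0]["message"]["content"]


# Hypothetical variable name holding the key generated on the Keys page.
api_key = os.environ.get("OLLAMA_API_KEY", "")
req = build_cloud_request(api_key, MODEL, "Why is the sky blue?")
# To send it:
# with urllib.request.urlopen(req) as resp:
#     print(extract_reply(json.load(resp)))
```

Reading the key from the environment keeps it out of source code, which matters most in the server and cloud-function scenarios this option targets.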

Integration with third-party tools

The strength of Ollama Cloud's design lies in its seamless ecosystem compatibility. Any third-party client that connects through the local Ollama API endpoint, such as Open WebUI, LobeChat, or Cherry Studio, can use the cloud models directly without modification.

Taking the Cherry Studio configuration as an example:

- Make sure the API endpoint points to your local Ollama instance, for example http://localhost:11434.

- In the model list, enter the name of the cloud model you pulled, for example gpt-oss:120b-cloud.

- The API Key field can usually be left blank, because authentication was already completed in the local client with ollama signin.

Once configured, any call these tools make to a cloud model is automatically proxied to the cloud by the local Ollama client, and the whole process is transparent to the application.
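If a third-party tool does not show the cloud model, one quick check is to ask the local service which models it exposes, since these clients read the same list. A sketch assuming the local /api/tags endpoint returns a JSON body of the form {"models": [{"name": ...}, ...]}; the helper names are hypothetical:

```python
import json
import urllib.request
from typing import List

OLLAMA_HOST = "http://localhost:11434"


def model_names(tags_response: dict) -> List[str]:
    """Return the model names listed in an /api/tags response body."""
    return [m["name"] for m in tags_response.get("models", [])]


def fetch_local_tags(host: str = OLLAMA_HOST) -> dict:
    """Query the local Ollama service for its model list (requires a running server)."""
    with urllib.request.urlopen(f"{host}/api/tags", timeout=5) as resp:
        return json.load(resp)


# Usage against a running server:
# print("gpt-oss:120b-cloud" in model_names(fetch_local_tags()))
```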

Strategic significance and outlook

The launch of Ollama Cloud marks a significant step forward in the usability of open-source AI models. It opens the door to top-tier large models for individual developers and enthusiasts. More importantly, it keeps the interaction experience local, which lowers the cost of learning and migration for developers.

The service is currently in preview. Ollama has said that temporary rate limits are in place to keep the service stable and that it plans to introduce usage-based billing in the future. The move positions Ollama as a bridge between local development environments and powerful cloud compute, giving it a distinctive developer-experience advantage over cloud-only inference services such as Groq and Replicate.