Executive Summary

The Nexa native inference framework makes on-device deployment of generative AI models seamless and efficient. It supports a wide range of chipsets, including AMD, Qualcomm, Intel, NVIDIA, and custom in-house chips, and is compatible with all major operating systems. We provide benchmark data for generative AI models on a variety of common tasks, each tested on several device types that span different TOPS performance levels.

Core strengths:

- Multimodal capability - Supports generative AI tasks across text, audio, video, and vision

- Wide range of hardware compatibility - Runs AI models on PCs, laptops, mobile devices, and embedded systems

- Leading performance - With our edge inference framework, NexaQuant, models run 2.5x faster, and storage and memory requirements are reduced by 4x, while maintaining high accuracy

Why on-device AI?

Deploying AI models directly on the device has several advantages over relying on cloud APIs:

- Privacy and security - Data stays on the device, which keeps it confidential

- Lower cost - No need to pay for expensive cloud-based inference

- Speed and responsiveness - Low-latency inference that does not depend on the network

- Offline capability - AI applications remain usable in areas with poor connectivity

With Nexa edge inference technology, developers can efficiently run generative AI models on a wide range of devices while minimizing resource consumption.

New Trends in Multimodal AI Applications

Nexa AI on-device deployment supports multimodal AI, enabling applications to handle and integrate multiple data types:

- Text AI - Chatbots, document summarization, programming assistants

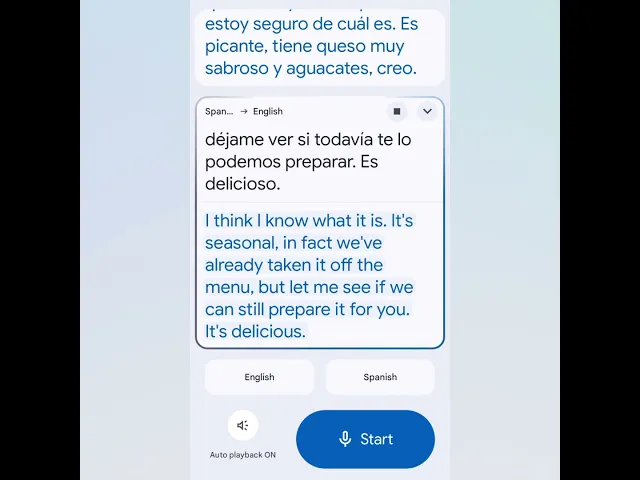

- Speech-to-speech AI - Real-time voice translation, AI voice assistants

- Visual AI - Object detection, image captioning, document OCR

With NexaQuant, our multimodal models achieve excellent compression and acceleration while maintaining top performance.

Cross-Device Generative AI Task Performance Benchmarks

We provide benchmark data for generative AI models on a variety of common tasks, each tested on several device types that span different TOPS performance levels. If you have a specific device and target use case, you can refer to a device with similar performance to estimate what yours can handle.

Generative AI tasks covered:

- Speech to speech

- Text to text

- Visual to text

Covered device types:

- Modern notebook chips - Optimized for on-device AI processing on desktops and laptops

- Flagship mobile chips - AI models running on smartphones and tablets

- Embedded systems (~4 TOPS) - Low-power devices for edge computing applications

Speech-to-speech benchmarking

Evaluates real-time speech interaction with language models: audio input is processed to generate audio output.

| Device type | Chip and device | Initial latency (TTFT) | Decoding speed | Average peak memory |
|---|---|---|---|---|
| Modern Notebook Chips (GPU) | Apple M3 Pro GPU | 0.67 seconds | 20.46 tokens/second | ~990MB |
| Modern Notebook Chips (iGPU) | AMD Ryzen AI 9 HX 370 iGPU (Radeon 890M) | 1.01 seconds | 19.28 tokens/second | ~990MB |
| Modern Notebook Chips (CPU) | Intel Core Ultra 7 268V | 1.89 seconds | 11.88 tokens/second | ~990MB |
| Flagship Mobile Chip CPU | Qualcomm Snapdragon 8 Gen 3 (Samsung S24) | 1.45 seconds | 9.13 tokens/second | ~990MB |
| Embedded IoT System CPU | Raspberry Pi 4 Model B | 6.9 seconds | 4.5 tokens/second | ~990MB |

Speech-to-Speech Benchmarking Using Moshi with NexaQuant
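The two timing columns can be combined into a rough end-to-end figure: time to first token plus output length divided by decoding speed. The sketch below applies that to the speech-to-speech rows above. The helper name `estimate_response_seconds`, the 100-token reply length, and the assumption that decoding speed stays constant over the whole reply are illustrative and not part of the benchmark.

```python
# Rough end-to-end latency estimate from the speech-to-speech table.
# Assumption: total time ~= TTFT + output_tokens / decoding speed,
# with decoding speed constant over the whole reply.

SPEECH_TO_SPEECH = {
    # device: (TTFT in seconds, decoding speed in tokens/second)
    "Apple M3 Pro GPU": (0.67, 20.46),
    "AMD Ryzen AI 9 HX 370 iGPU": (1.01, 19.28),
    "Intel Core Ultra 7 268V": (1.89, 11.88),
    "Qualcomm Snapdragon 8 Gen 3": (1.45, 9.13),
    "Raspberry Pi 4 Model B": (6.9, 4.5),
}


def estimate_response_seconds(ttft_s, tokens_per_s, output_tokens):
    """Estimated seconds to produce `output_tokens` tokens (hypothetical helper)."""
    if tokens_per_s <= 0:
        raise ValueError("tokens_per_s must be positive")
    return ttft_s + output_tokens / tokens_per_s


if __name__ == "__main__":
    for device, (ttft, speed) in SPEECH_TO_SPEECH.items():
        total = estimate_response_seconds(ttft, speed, output_tokens=100)
        print(f"{device}: ~{total:.1f} s for 100 tokens")
```

Real replies vary in length, so treat the result as an order-of-magnitude guide when comparing a device against the rows above.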

Text-to-text benchmarking

Evaluates AI model performance when generating text from text input.

| Device type | Chip and device | Initial latency (TTFT) | Decoding speed | Average peak memory |
|---|---|---|---|---|
| Modern Notebook Chips (GPU) | Apple M3 Pro GPU | 0.12 seconds | 49.01 tokens/second | ~2580MB |
| Modern Notebook Chips (iGPU) | AMD Ryzen AI 9 HX 370 iGPU (Radeon 890M) | 0.19 seconds | 30.54 tokens/second | ~2580MB |
| Modern Notebook Chips (CPU) | Intel Core Ultra 7 268V | 0.63 seconds | 14.35 tokens/second | ~2580MB |
| Flagship Mobile Chip CPU | Qualcomm Snapdragon 8 Gen 3 (Samsung S24) | 0.27 seconds | 10.89 tokens/second | ~2580MB |
| Embedded IoT System CPU | Raspberry Pi 4 Model B | 1.27 seconds | 5.31 tokens/second | ~2580MB |

Text-to-text benchmarking using llama-3.2 with NexaQuant
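When the use case has a fixed latency budget, the same figures can be read in the other direction: how many tokens can a device produce before the budget runs out? The sketch below works this out for the text-to-text rows above. The helper name `max_tokens_within` and the 5-second budget are assumptions chosen for illustration, and the calculation assumes decoding speed is constant.

```python
import math

# Text-to-text rows from the table above.
TEXT_TO_TEXT = {
    # device: (TTFT in seconds, decoding speed in tokens/second)
    "Apple M3 Pro GPU": (0.12, 49.01),
    "AMD Ryzen AI 9 HX 370 iGPU": (0.19, 30.54),
    "Intel Core Ultra 7 268V": (0.63, 14.35),
    "Qualcomm Snapdragon 8 Gen 3": (0.27, 10.89),
    "Raspberry Pi 4 Model B": (1.27, 5.31),
}


def max_tokens_within(budget_s, ttft_s, tokens_per_s):
    """Whole tokens that fit in `budget_s` seconds (hypothetical helper).

    Assumption: nothing is produced before TTFT, then tokens arrive
    at a constant `tokens_per_s`.
    """
    remaining_s = budget_s - ttft_s
    if remaining_s <= 0:
        return 0  # the budget is used up before the first token
    return math.floor(remaining_s * tokens_per_s)


if __name__ == "__main__":
    budget_s = 5.0  # illustrative budget, not from the benchmark
    for device, (ttft, speed) in TEXT_TO_TEXT.items():
        print(f"{device}: up to {max_tokens_within(budget_s, ttft, speed)} tokens in {budget_s} s")
```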

Visual-to-text benchmarking

Evaluates the ability of AI to analyze visual inputs, generate responses, extract key visual information, and dynamically guide tools: visual input, text output.

| Device type | Chip and device | Initial latency (TTFT) | Decoding speed | Average peak memory |
|---|---|---|---|---|
| Modern Notebook Chips (GPU) | Apple M3 Pro GPU | 2.62 seconds | 86.77 tokens/second | ~1093MB |
| Modern Notebook Chips (iGPU) | AMD Ryzen AI 9 HX 370 iGPU (Radeon 890M) | 2.14 seconds | 83.41 tokens/second | ~1093MB |
| Modern Notebook Chips (CPU) | Intel Core Ultra 7 268V | 9.43 seconds | 45.65 tokens/second | ~1093MB |
| Flagship Mobile Chip CPU | Qualcomm Snapdragon 8 Gen 3 (Samsung S24) | 7.26 seconds | 27.66 tokens/second | ~1093MB |
| Embedded IoT System CPU | Raspberry Pi 4 Model B | 22.32 seconds | 6.15 tokens/second | ~1093MB |

Visual-to-Text Benchmarking Using OmniVLM with NexaQuant
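In the visual-to-text rows, initial latency is large relative to decoding speed, so for short outputs most of the wait happens before the first token. The sketch below estimates what share of the total response time is spent in TTFT. The helper name `ttft_share` and the 50-token caption length are assumptions for illustration, and decoding speed is again assumed constant.

```python
# Visual-to-text rows from the table above.
VISUAL_TO_TEXT = {
    # device: (TTFT in seconds, decoding speed in tokens/second)
    "Apple M3 Pro GPU": (2.62, 86.77),
    "AMD Ryzen AI 9 HX 370 iGPU": (2.14, 83.41),
    "Intel Core Ultra 7 268V": (9.43, 45.65),
    "Qualcomm Snapdragon 8 Gen 3": (7.26, 27.66),
    "Raspberry Pi 4 Model B": (22.32, 6.15),
}


def ttft_share(ttft_s, tokens_per_s, output_tokens):
    """Fraction of estimated total time spent before the first token.

    Hypothetical helper; total time is modeled as
    TTFT + output_tokens / tokens_per_s.
    """
    decode_s = output_tokens / tokens_per_s
    return ttft_s / (ttft_s + decode_s)


if __name__ == "__main__":
    caption_tokens = 50  # illustrative output length, not from the benchmark
    for device, (ttft, speed) in VISUAL_TO_TEXT.items():
        share = ttft_share(ttft, speed, caption_tokens)
        print(f"{device}: {share:.0%} of the wait is initial latency")
```

A high share suggests that, for short outputs, reducing initial latency would do more for perceived responsiveness than raising decoding speed.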