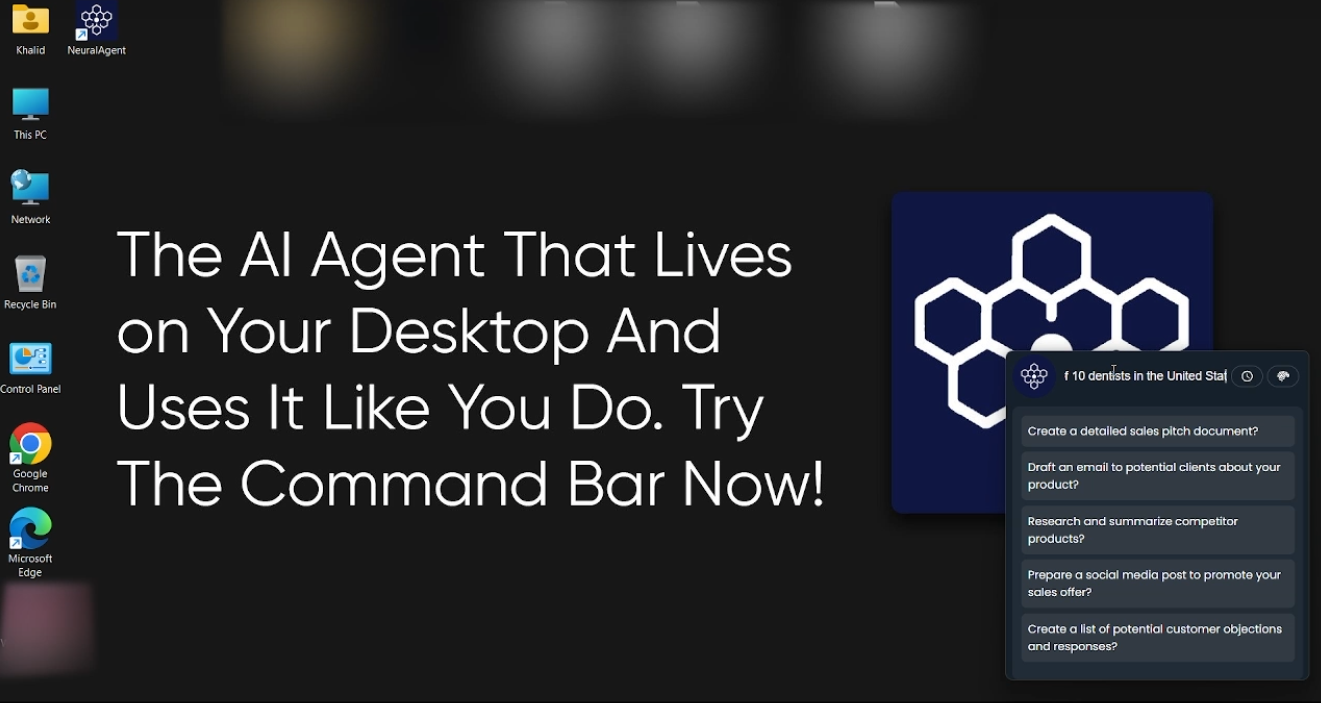

NeuralAgent is an open-source AI agent that runs on the user's local computer. It carries out tasks by simulating human actions such as clicking, typing, scrolling, and navigating applications. Users give instructions in natural language, such as filling out a form, sending an email, or searching for information, and NeuralAgent executes them automatically. It supports Windows, macOS, and Linux and emphasizes local operation for privacy: the agent itself runs on your machine, and no cloud service is needed when a local model is used. Built with FastAPI and ElectronJS, the project supports a range of large language models (e.g., GPT-4, Claude) and can also run local models through Ollama. Its architecture is designed to be fast and extensible for developers who want to customize it. The source code is available on GitHub, where users can contribute, and support is offered through Discord.

Feature List

- Task automation: Automate actions on the computer, such as opening applications, filling out forms, and sending emails, through natural language commands.

- Cross-platform support: Compatible with Windows, macOS, and Linux; some features (such as background browser control) are currently Windows-only.

- Multi-model support: Supports multiple large language model providers, including OpenAI, Anthropic, Azure OpenAI, and Bedrock, as well as local models through Ollama.

- Command bar: A resident command bar is always ready to accept instructions, so tasks can be started quickly.

- Open source and extensible: Released under the MIT license, so developers can clone the code, modify it, or contribute new features.

- Local operation: The agent runs and performs its operations on the user's own machine; with a local Ollama model, no cloud service is required, which helps protect privacy.

- Background automation: Supports tasks that run in the background, such as automatically searching or operating applications through the browser.

User Guide

Installation Process

To run NeuralAgent locally, you need to follow the steps below to install it. The entire process requires basic programming knowledge and familiarity with terminal commands.

- Cloning the codebase

Clone the NeuralAgent code locally by running the following command in a terminal:

`git clone https://github.com/withneural/neuralagent.git`

Once cloning is complete, change into the project directory:

`cd neuralagent`

- Setting up a virtual environment

It is recommended to create a Python virtual environment to avoid dependency conflicts. From the project root, change into the `backend` directory and create the environment:

`cd backend`

`python -m venv venv`

Then activate the virtual environment:

- Windows: `venv\Scripts\activate`

- macOS/Linux: `source venv/bin/activate`
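The same step can be scripted with Python's standard-library `venv` module, which is what `python -m venv` runs. This is a minimal sketch: `create_backend_venv` and `activation_script` are hypothetical helper names, and the only detail it encodes beyond the commands above is where each platform keeps the activation script.

```python
import sys
import venv
from pathlib import Path


def activation_script(env_dir: Path, platform: str = sys.platform) -> Path:
    """Return the activation script path inside a virtual environment."""
    # Windows venvs keep their scripts in Scripts\ (activate, activate.bat,
    # Activate.ps1); macOS and Linux venvs keep them in bin/.
    if platform.startswith("win"):
        return env_dir / "Scripts" / "activate"
    return env_dir / "bin" / "activate"


def create_backend_venv(backend_dir: str, with_pip: bool = True) -> Path:
    """Create <backend_dir>/venv (like `python -m venv venv`) and return
    the path of its activation script for the current platform."""
    env_dir = Path(backend_dir) / "venv"
    venv.create(env_dir, with_pip=with_pip)
    return activation_script(env_dir)
```

Activation itself still has to happen in your shell, since a Python process cannot change the environment of the terminal that started it.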

- Installing dependencies

Install the backend dependencies inside the activated virtual environment:

`pip install -r requirements.txt`

Then change to the `desktop/neuralagent-app` directory and install the front-end dependencies:

`cd ../desktop/neuralagent-app`

`npm install`

- Configuring the PostgreSQL database

NeuralAgent requires a local PostgreSQL database. Install PostgreSQL first (see the official PostgreSQL website for details). After creating the database, set the following environment variables:

`export DB_HOST=localhost`

`export DB_PORT=5432`

`export DB_DATABASE=neuralagent`

`export DB_USERNAME=your_username`

`export DB_PASSWORD=your_password`

`export DB_CONNECTION_STRING=postgresql://your_username:your_password@localhost:5432/neuralagent`

`export JWT_ISS=NeuralAgentBackend`

`export JWT_SECRET=your_random_string`
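Because `DB_CONNECTION_STRING` repeats the individual `DB_*` values, the two can drift apart if one is edited by hand. A minimal Python sketch, assuming only the variable names listed above, assembles the URL from its parts and produces a random secret the same way `openssl rand -hex 32` does. `build_connection_string` and `generate_jwt_secret` are hypothetical helpers, not part of NeuralAgent.

```python
import os
import secrets
from typing import Mapping
from urllib.parse import quote


def build_connection_string(env: Mapping[str, str] = os.environ) -> str:
    """Assemble a PostgreSQL URL from the individual DB_* variables."""
    # Percent-encode credentials so characters such as '@' or ':' in a
    # password do not break the URL.
    user = quote(env["DB_USERNAME"], safe="")
    password = quote(env["DB_PASSWORD"], safe="")
    host = env.get("DB_HOST", "localhost")
    port = env.get("DB_PORT", "5432")
    database = env["DB_DATABASE"]
    return f"postgresql://{user}:{password}@{host}:{port}/{database}"


def generate_jwt_secret(num_bytes: int = 32) -> str:
    """Return 2 * num_bytes random hex characters, like `openssl rand -hex 32`."""
    return secrets.token_hex(num_bytes)
```

For example, `print(f"export JWT_SECRET={generate_jwt_secret()}")` prints a line that can be pasted into the terminal.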

Replace `your_username`, `your_password`, and `your_random_string` with your actual values. `JWT_SECRET` must be a random string, which you can generate with `openssl rand -hex 32`.

- Configuring AI models

NeuralAgent supports a variety of large language models; configure API keys or a local model according to your needs. For example, to use OpenAI:

`export OPENAI_API_KEY=your_openai_key`

If you use a local Ollama model, make sure the Ollama service is running, then set:

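`export OLLAMA_URL=http://127.0.0.1:11434`

`export CLASSIFIER_AGENT_MODEL_TYPE=ollama`

`export CLASSIFIER_AGENT_MODEL_ID=your_ollama_model`

Here `your_ollama_model` stands for the name of a model already pulled into Ollama; an OpenAI model ID such as `gpt-4.1` cannot be served by Ollama. Anthropic, Azure OpenAI, and the other supported providers are configured in the same way.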
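To confirm that the service at `OLLAMA_URL` is actually running, and to see which names are valid for `CLASSIFIER_AGENT_MODEL_ID`, one option is to query Ollama's `GET /api/tags` endpoint, which lists locally available models. This is a sketch using only the standard library; `ollama_models` is a hypothetical helper, not something NeuralAgent ships.

```python
import json
import os
import urllib.request
from typing import List, Optional


def ollama_models(base_url: Optional[str] = None, timeout: float = 3.0) -> Optional[List[str]]:
    """Return the names of models available in the local Ollama service,
    or None if the service cannot be reached."""
    url = (base_url or os.environ.get("OLLAMA_URL", "http://127.0.0.1:11434")).rstrip("/")
    # Talk to the local service directly rather than through any configured HTTP proxy.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    try:
        with opener.open(f"{url}/api/tags", timeout=timeout) as resp:
            payload = json.load(resp)
    except (OSError, ValueError):
        # OSError covers refused connections, timeouts and HTTP errors (URLError);
        # ValueError covers a response body that is not valid JSON.
        return None
    return [model["name"] for model in payload.get("models", [])]
```

A `None` result means nothing answered at that URL; otherwise, check that the configured model ID appears in the returned list (Ollama names usually carry a tag such as `:latest`).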

- Starting services

Open two terminal windows and start the backend and the front end separately:

- Backend (in the `backend` directory, with the virtual environment activated and the environment variables above exported): `uvicorn main:app --reload`

- Front end (in the `desktop/neuralagent-app` directory): `npm start`

- Verify Installation

Upon startup, the NeuralAgent interface is displayed on the desktop. Users can test whether it is working properly by typing commands into the command bar. For example, type "Open Notepad and write 'Hello World'".
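If the backend fails to start, one thing worth checking is whether the variables from the PostgreSQL step are set in the terminal that runs it, since `export` only applies to the shell session where it was run. A minimal sketch of such a check follows; `preflight` and the placeholder list are hypothetical, and provider keys such as `OPENAI_API_KEY` are deliberately left out because they depend on the model you chose.

```python
import os
from typing import List, Mapping

# Variables from the PostgreSQL/JWT step of this guide.
REQUIRED_VARS = (
    "DB_HOST", "DB_PORT", "DB_DATABASE", "DB_USERNAME", "DB_PASSWORD",
    "DB_CONNECTION_STRING", "JWT_ISS", "JWT_SECRET",
)
# Placeholder values from this guide that must be replaced before use.
PLACEHOLDERS = ("your_username", "your_password", "your_random_string")


def preflight(env: Mapping[str, str] = os.environ) -> List[str]:
    """Return a list of problems with the backend environment (empty if none)."""
    problems = []
    for name in REQUIRED_VARS:
        value = env.get(name, "")
        if not value:
            problems.append(f"{name} is not set")
        elif any(placeholder in value for placeholder in PLACEHOLDERS):
            problems.append(f"{name} still contains a placeholder value")
    return problems
```

Calling `preflight()` in the backend's terminal and printing each returned message gives a quick checklist before running `uvicorn` again.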

Usage

At its core, NeuralAgent carries out computer operations from natural language instructions. The main features work as follows:

- Task automation

Enter a natural language command in the command bar, for example: "Find 5 trending GitHub repositories, then record them in Notepad and save the file to the desktop". NeuralAgent opens a browser, searches the GitHub Trending page, extracts the information, opens Notepad, enters the content, and saves it. The user does not need to do anything manually; the AI handles the whole task.

- Command bar operation

NeuralAgent's command bar stays resident and is always ready to accept instructions. For example, the user can type: "Open Gmail and send an email to test@example.com with the subject 'Test' and the body 'This is an email sent by NeuralAgent'". NeuralAgent opens a browser, logs into Gmail (using previously configured login information), fills in the email, and sends it.

- Using Local Models

If Ollama is configured, local models can be used for task processing. Make sure the computer is powerful enough to run a large language model. Once setup is complete, instructions are processed on the local machine and the model needs no network connection. For example: "Run 'ls -l' in the terminal and record the output to a file". NeuralAgent executes the terminal command and saves the result.
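The outcome of the `ls -l` example can be pictured with a few lines of standard-library Python. This sketch only shows what the task accomplishes, not how NeuralAgent performs it (the agent may, for instance, type into a real terminal window); `run_and_record` is a hypothetical helper name.

```python
import subprocess
from pathlib import Path
from typing import Sequence


def run_and_record(cmd: Sequence[str], out_file: str) -> int:
    """Run cmd (without a shell), save its stdout and stderr to out_file,
    and return the command's exit code."""
    result = subprocess.run(list(cmd), capture_output=True, text=True)
    Path(out_file).write_text(result.stdout + result.stderr, encoding="utf-8")
    return result.returncode
```

On macOS or Linux, `run_and_record(["ls", "-l"], "ls_output.txt")` reproduces the example task. Passing the command as a list avoids shell quoting issues.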

- Developer Customization

Developers can modify the Python code in the `backend` directory or the React front-end code in the `desktop/neuralagent-app` directory. For example, adding a new feature involves adjusting the `pyautogui` scripts in the `aiagent` directory. The community welcomes Pull Requests submitted via GitHub.
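As an illustration only, the sketch below shows how a list of simple UI steps could be replayed through `pyautogui`'s `click`, `write`, `hotkey`, and `scroll` functions, the kind of primitives behind clicking, typing, and scrolling. `Action` and `execute` are invented names, not NeuralAgent's internal API. The driver is injectable so the logic can be tried without moving the real mouse.

```python
from dataclasses import dataclass
from typing import Any, List, Tuple


@dataclass
class Action:
    kind: str                      # "click", "type", "hotkey" or "scroll"
    args: Tuple[Any, ...] = ()


def execute(actions: List[Action], driver=None) -> None:
    """Replay simple UI actions through a pyautogui-compatible driver."""
    if driver is None:
        import pyautogui as driver  # imported lazily: needs a real display
    for action in actions:
        if action.kind == "click":
            driver.click(*action.args)    # args: (x, y) screen coordinates
        elif action.kind == "type":
            driver.write(*action.args)    # args: (text,)
        elif action.kind == "hotkey":
            driver.hotkey(*action.args)   # args: key names, e.g. ("ctrl", "s")
        elif action.kind == "scroll":
            driver.scroll(*action.args)   # args: (clicks,), negative scrolls down
        else:
            raise ValueError(f"unknown action kind: {action.kind!r}")
```

With a text editor focused, `execute([Action("type", ("Hello World",)), Action("hotkey", ("ctrl", "s"))])` types text and triggers the save shortcut, so try it only in a window you don't mind being edited.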

Caveats

- An internet connection is required during the first setup to download dependencies.

- Background automation features such as browser control may have limitations on macOS and Linux.

- Local models run best on high-performance hardware (e.g., a GPU); without it they may run slowly.

- Use caution when testing, as NeuralAgent operates the mouse and keyboard directly.

Application Scenarios

- Daily office automation

Users often need to handle repetitive tasks quickly, such as sending emails in bulk or organizing files. NeuralAgent can do this with a single command, such as "Open Excel, organize the table data, and save it".

- Developer debugging tools

Developers can use NeuralAgent to run test scripts or execute terminal commands automatically. For example: "Run the Python script and save the error log to the desktop".

- Education and learning

Students can learn command-line operations with NeuralAgent. For example, type "Run the git command in the terminal and explain the output", and NeuralAgent runs the command and generates an explanation.

- Content creation support

Creators can have NeuralAgent automatically search for material and organize it. For example, "Search for 10 landscape images and save them to a folder."

Q&A

- Does NeuralAgent require cloud services?

No. NeuralAgent runs on the local computer, and its operations are performed locally. If you choose a hosted model such as GPT-4 or Claude, requests go to that provider's API; with a local Ollama model, model inference stays on your machine.

- Does it support local large language models?

Yes. Local models can be configured through Ollama, provided the hardware is powerful enough.

- How long does installation take?

Usually 10 to 30 minutes, depending on network and hardware. Cloning the code and installing dependencies take most of that time.

- Can it run on a low-performance computer?

Yes, but if you use a local model, a high-performance CPU or GPU is recommended; otherwise it may be slow.

- How can I participate in development?

Clone the GitHub repository, make your changes, and submit a Pull Request. Join the Discord community for more support.