GLM-4.5V: A multimodal dialogue model that understands images and videos and generates code

GLM-4.5V is a new-generation large vision-language model (VLM) developed by Zhipu AI (Z.AI). It is built on the flagship text model GLM-4.5-Air and uses a Mixture-of-Experts (MoE) architecture with 106 billion total parameters, of which 12 billion are activated. GLM-4.5V not only processes images and text, but also understands visual...

ARC-Hunyuan-Video-7B: An Intelligent Model for Understanding Short Video Content

ARC-Hunyuan-Video-7B is an open-source multimodal model developed by Tencent's ARC Lab that focuses on understanding user-generated short videos. It provides in-depth, structured analysis by integrating a video's visual, audio, and textual information. The model can handle complex visual elements, dense audio information, and fast-paced short video...

GLM-4.1V-Thinking: an open-source visual reasoning model for complex multimodal tasks

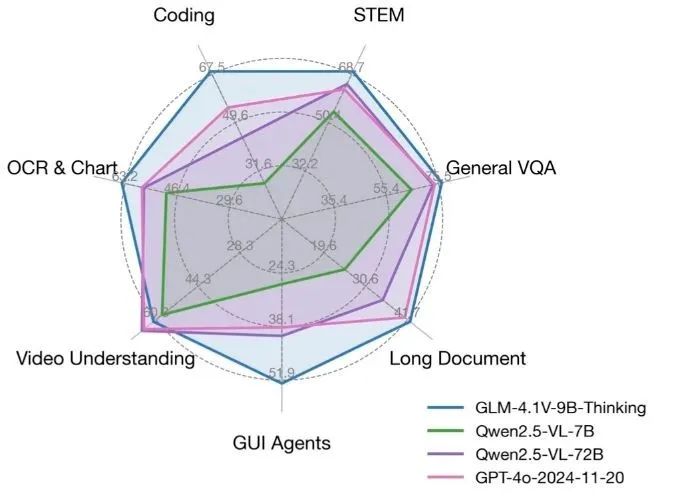

GLM-4.1V-Thinking is an open-source vision-language model developed by the KEG Lab at Tsinghua University (THUDM) that focuses on multimodal reasoning. Built on the GLM-4-9B-0414 base model, GLM-4.1V-Thinking uses reinforcement learning and chain-of-thought reasoning to...

VideoMind

VideoMind is an open-source multimodal AI tool focused on reasoning, question answering, and summary generation for long videos. It was developed by Ye Liu of The Hong Kong Polytechnic University together with a team from Show Lab at the National University of Singapore. The tool mimics the way humans understand video by breaking the task into steps such as planning, grounding, verifying, and answering, one by...

DeepSeek-VL2

DeepSeek-VL2 is a series of advanced Mixture-of-Experts (MoE) vision-language models that significantly improves on its predecessor, DeepSeek-VL. The models excel at tasks such as visual question answering, optical character recognition, document/table/chart understanding, and visual grounding. De...

Reka: multimodal AI models with multilingual processing, data analysis, and enhanced visual understanding

Reka is a company dedicated to providing a new generation of multimodal AI solutions. Its products include the Reka Core, Flash, Edge, and Spark models, which process text, code, image, video, and audio data. Reka's models offer strong reasoning capabilities and multilingual support for a wide range of deployment environments...
