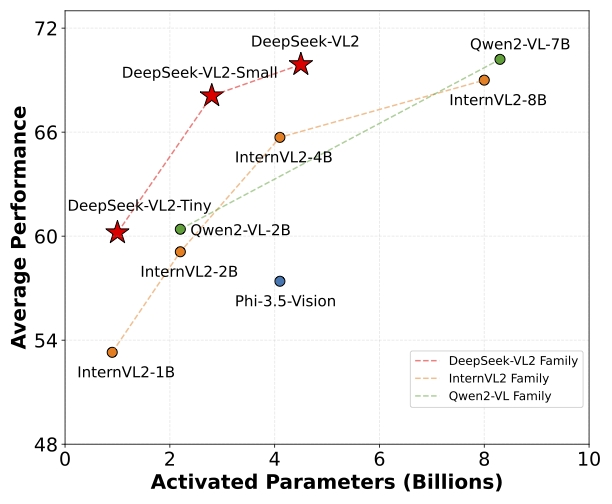

DeepSeek-VL2 is a series of advanced Mixture-of-Experts (MoE) vision-language models that significantly improve on the performance of their predecessor, DeepSeek-VL. The models excel in tasks such as visual question answering, optical character recognition, document/table/chart understanding, and visual grounding. The DeepSeek-VL2 family consists of three variants: DeepSeek-VL2-Tiny, DeepSeek-VL2-Small, and DeepSeek-VL2, with 1.0B, 2.8B, and 4.5B activated parameters, respectively. The models achieve comparable or superior performance to existing open-source dense and MoE models with a similar or smaller number of activated parameters.

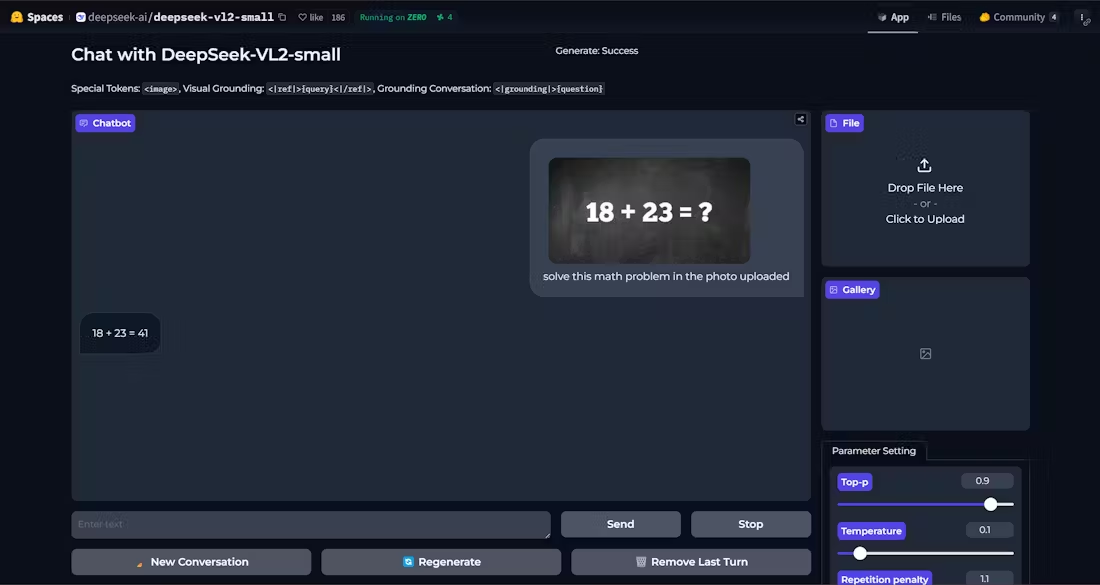

Demo: https://huggingface.co/spaces/deepseek-ai/deepseek-vl2-small

Features

- Visual Q&A: Accurately answers complex questions about image content.

- Optical Character Recognition (OCR): Efficient recognition of text content in images.

- Document Understanding: Parsing and understanding complex document structure and content.

- Table understanding: Identifies and processes tabular data to extract useful information.

- Chart understanding: Analyzes and interprets data and trends in graphs and charts.

- Visual grounding: Accurately locates the target object in the image.

- Multiple variants: The Tiny, Small, and standard models are available to meet different needs.

- High efficiency: Keeps the number of activated parameters low while maintaining high performance.

Usage guide

Installation

- Make sure Python version >= 3.8.

- Clone the DeepSeek-VL2 repository:

git clone https://github.com/deepseek-ai/DeepSeek-VL2.git

- Go to the project directory and install the necessary dependencies:

cd DeepSeek-VL2

pip install -e .
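To confirm that the interpreter meets the Python >= 3.8 requirement, a short check like the following can be run. This is a minimal sketch; `python_is_supported` is a hypothetical helper name, not part of the DeepSeek-VL2 package.

```python
import sys

# Minimum version from the DeepSeek-VL2 installation instructions.
MIN_PYTHON = (3, 8)


def python_is_supported(version_info=sys.version_info) -> bool:
    """Return True if (major, minor) of the given version meets the minimum."""
    return tuple(version_info[:2]) >= MIN_PYTHON


if __name__ == "__main__":
    if python_is_supported():
        print(f"Python {sys.version.split()[0]} meets the requirement.")
    else:
        sys.exit(f"Python >= {MIN_PYTHON[0]}.{MIN_PYTHON[1]} is required.")
```

Comparing tuples keeps the check correct for versions such as 3.10, where a string comparison against "3.8" would fail.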

Usage examples

Simple inference example

Below is sample code for simple inference using DeepSeek-VL2:

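import torch
from transformers import AutoModelForCausalLM
from deepseek_vl2.models import DeepseekVLV2Processor, DeepseekVLV2ForCausalLM
from deepseek_vl2.utils.io import load_pil_images

# Specify the model path and load the processor, tokenizer, and model (GPU, bfloat16)
model_path = "deepseek-ai/deepseek-vl2-tiny"
vl_chat_processor: DeepseekVLV2Processor = DeepseekVLV2Processor.from_pretrained(model_path)
tokenizer = vl_chat_processor.tokenizer
vl_model: DeepseekVLV2ForCausalLM = AutoModelForCausalLM.from_pretrained(model_path, trust_remote_code=True)
vl_model = vl_model.to(torch.bfloat16).cuda().eval()

# Build a single-image conversation (the question is only an example); <image> marks where the image goes
conversation = [
    {"role": "<|User|>", "content": "<image>\nDescribe this image.", "images": ["path_to_image.jpg"]},
    {"role": "<|Assistant|>", "content": ""},
]

# Load the images referenced in the conversation and prepare the model inputs
images = load_pil_images(conversation)
inputs = vl_chat_processor(conversations=conversation, images=images, force_batchify=True, system_prompt="").to(vl_model.device)

# Inference: encode the image into embeddings, then generate with the language model
inputs_embeds = vl_model.prepare_inputs_embeds(**inputs)
outputs = vl_model.language_model.generate(
    inputs_embeds=inputs_embeds,
    attention_mask=inputs.attention_mask,
    pad_token_id=tokenizer.eos_token_id,
    bos_token_id=tokenizer.bos_token_id,
    eos_token_id=tokenizer.eos_token_id,
    max_new_tokens=512,
    do_sample=False,
    use_cache=True,
)

# Decode the generated token IDs into text
print(tokenizer.decode(outputs[0].cpu().tolist(), skip_special_tokens=True))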
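In the DeepSeek-VL2 repository, `load_pil_images` and the processor work on a conversation list rather than a bare list of image paths: the README example uses the roles `<|User|>` and `<|Assistant|>`, an `<image>` placeholder inside the user content, and an `images` key holding file paths. The helper below is a minimal sketch that builds such a list for one or more images. `build_conversation` is a hypothetical name, and the format mirrors the README example, so check it against the version you install.

```python
from typing import Dict, List, Union

Message = Dict[str, Union[str, List[str]]]


def build_conversation(question: str, image_paths: List[str]) -> List[Message]:
    """Build a single-turn conversation in the README's format.

    Hypothetical helper for illustration; not part of the deepseek_vl2 package.
    """
    if not image_paths:
        raise ValueError("at least one image path is required")
    # One <image> placeholder per image, each followed by a newline, then the question.
    placeholders = "<image>\n" * len(image_paths)
    return [
        {
            "role": "<|User|>",
            "content": placeholders + question,
            "images": list(image_paths),
        },
        # Empty assistant turn: the model generates this reply.
        {"role": "<|Assistant|>", "content": ""},
    ]


conversation = build_conversation("What is shown in this image?", ["path_to_image.jpg"])
print(conversation[0]["content"])
```

In the repository's implementation, `load_pil_images` opens every path listed under the `images` key of each message, so the returned list can be passed both to it and to the processor's `conversations` argument.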

Step-by-step workflow for each feature

- Visual Q&A:

- Load the model and processor.

- Provide an image and a question; the model returns the answer.

- Optical Character Recognition (OCR):

- Load the image with DeepseekVLV2Processor.

- Call the model for inference to extract the text in the image.

- Document understanding:

- Load an input containing the document image.

- The model parses the document structure and returns the parsing result.

- Table understanding:

- Provide an image containing a table.

- The model recognizes the structure and content of the table and extracts key information.

- Chart understanding:

- Load the chart image.

- The model analyzes chart data, providing interpretation and trend analysis.

- Visual grounding:

- Provide an image and a description of the target object.

- The model locates the target object in the image and returns the position coordinates.
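For visual grounding, the repository README's example wraps the object description in `<|ref|>` and `<|/ref|>` tags. The sketch below builds such a prompt and converts returned boxes into pixel coordinates. It assumes, without confirmation from this page, that the reply contains boxes as `<|det|>[[x1, y1, x2, y2], ...]<|/det|>` with coordinates normalized to a 0 to 999 grid; verify this convention against real model output before relying on it. `grounding_prompt`, `parse_boxes`, and the sample reply string are hypothetical and made up for illustration.

```python
import ast
import re
from typing import List, Tuple

# ASSUMPTION: boxes appear as <|det|>[[x1, y1, x2, y2], ...]<|/det|>
# on a 0-999 normalized grid. Verify against actual model output.
DET_PATTERN = re.compile(r"<\|det\|>(.*?)<\|/det\|>", re.DOTALL)
GRID_MAX = 999


def grounding_prompt(description: str) -> str:
    """Wrap an object description in <|ref|> tags, following the README example."""
    return f"<image>\n<|ref|>{description}<|/ref|>."


def parse_boxes(response: str, width: int, height: int) -> List[Tuple[int, int, int, int]]:
    """Extract all boxes from a response and rescale them to pixel coordinates."""
    boxes = []
    for match in DET_PATTERN.findall(response):
        # Each <|det|> span is assumed to hold a nested list of 4-number boxes.
        for x1, y1, x2, y2 in ast.literal_eval(match.strip()):
            boxes.append((
                round(x1 / GRID_MAX * width),
                round(y1 / GRID_MAX * height),
                round(x2 / GRID_MAX * width),
                round(y2 / GRID_MAX * height),
            ))
    return boxes


# Made-up reply string for illustration, not real model output.
reply = "<|ref|>The giraffe at the back.<|/ref|><|det|>[[580, 270, 999, 900]]<|/det|>"
print(parse_boxes(reply, width=1000, height=500))
```

`ast.literal_eval` is used instead of `eval` so that only literal lists of numbers are parsed from the model's text.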

By following these steps, users can apply DeepSeek-VL2 to a wide range of complex vision-language tasks.