On June 11th, Meta AI released V-JEPA 2 (Video Joint Embedding Predictive Architecture 2), its latest world model. The model achieves state-of-the-art performance in visual understanding and in predicting the physical world. It can also be used for "zero-shot" planning by robots that interact with unseen objects in unfamiliar environments.

Alongside the model, Meta released three new benchmarks designed to assess more accurately how well existing models reason about the physical world. The release is another step on Meta's path toward Advanced Machine Intelligence (AMI). It also gives the industry a new yardstick for measuring AI's ability to understand and interact with the physical world.

What is a world model?

Human babies build an intuition about the world through observation long before they learn to speak. Throw a tennis ball upward, and we expect it to fall, not hover in the air or suddenly turn into an apple. This physical intuition is a manifestation of an internal world model.

This internal model lets us predict the consequences of our own or others' actions and thus plan ahead. When walking through a crowded room, we subconsciously anticipate paths to avoid collisions; when cooking dinner, we judge from experience when to turn off the heat.

To build AI agents that can "think before they act" in the physical world, their world model must have three core capabilities:

- Understanding: The ability to recognize objects, actions, and movements in a video.

- Predicting: The ability to anticipate how the world will evolve, including how it will change in response to specific actions.

- Planning: The ability to use those predictions to plan a sequence of actions that achieves a goal.

V-JEPA 2 Model Details

V-JEPA 2 is a 1.2-billion-parameter model built on JEPA (Joint Embedding Predictive Architecture), an architecture first proposed by Meta in 2022. It is trained primarily through self-supervised learning on large amounts of video, which lets it learn how the world works without human labeling.

At its core are two components:

- Encoder: Takes raw video as input and outputs embeddings that capture key semantic information about the state of the world.

- Predictor: Takes video embeddings plus additional context about what to predict, and outputs predicted embeddings for the future.
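To make the division of labor concrete, here is a toy sketch of a JEPA-style training signal, assuming tiny hand-written linear maps in place of the real networks. The point it illustrates is that the error is measured between embeddings, not between pixels: the predictor maps the context's embedding toward the target's embedding. The names `encode`, `predict`, and `jepa_loss` and the choice of an L1 distance are illustrative assumptions, not Meta's code.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def encode(weights: Matrix, frame: Vector) -> Vector:
    """Hypothetical encoder: maps a raw input (a flat pixel vector) to an embedding."""
    return matvec(weights, frame)


def predict(weights: Matrix, context_embedding: Vector) -> Vector:
    """Hypothetical predictor: maps a context embedding to a predicted target embedding."""
    return matvec(weights, context_embedding)


def jepa_loss(enc_w: Matrix, pred_w: Matrix, context: Vector, target: Vector) -> float:
    """Mean absolute (L1) error between predicted and actual target embeddings.

    The target is encoded too, so the loss lives in embedding space rather than
    pixel space. JEPA-style methods typically stop gradients through the target
    branch; this toy computes no gradients at all.
    """
    predicted = predict(pred_w, encode(enc_w, context))
    actual = encode(enc_w, target)
    return sum(abs(p - a) for p, a in zip(predicted, actual)) / len(actual)


if __name__ == "__main__":
    enc_w = [[1.0, 0.0, 0.0], [0.0, 1.0, 1.0]]  # 3-D input -> 2-D embedding
    pred_w = [[1.0, 0.0], [0.0, 1.0]]            # identity predictor
    print(jepa_loss(enc_w, pred_w, [1.0, 2.0, 3.0], [2.0, 2.0, 3.0]))  # 0.5
```

Because the target is compared only after encoding, details the encoder discards (such as pixel-level noise) never contribute to the loss, which is the motivation for predicting in embedding space.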

V-JEPA 2 is trained in two stages:

- Action-free pre-training: The model is first trained on more than 1 million hours of video and 1 million images, which teach it the basics of how objects move and interact. After this stage, the model already achieves state-of-the-art results on action recognition (Something-Something v2), action anticipation (Epic-Kitchens-100), and video question answering (Perception Test, TempCompass).

- Action-conditioned training: Building on the first stage, the model is further trained on data that pairs robot visual observations with control actions. This lets the predictor learn to take specific actions into account. Remarkably, only 62 hours of robot data were enough for the model to acquire effective planning and control capabilities.
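The second stage changes the predictor's interface: besides the current embedding, it also receives an action, so it can be unrolled over a candidate action sequence to imagine future states. A minimal sketch, assuming a hypothetical toy predictor in which the embedding is a 2-D position and an action is a displacement; the real predictor is a learned network, and `toy_predictor` and `rollout` are illustrative names.

```python
from typing import Callable, List, Sequence

Vector = List[float]
# An action-conditioned predictor maps (current embedding, action) -> next embedding.
Predictor = Callable[[Vector, Vector], Vector]


def toy_predictor(state: Vector, action: Vector) -> Vector:
    """Hypothetical predictor: next embedding = current embedding + action."""
    return [s + a for s, a in zip(state, action)]


def rollout(predictor: Predictor, start: Vector, actions: Sequence[Vector]) -> List[Vector]:
    """Autoregressively predict the embedding after each action in the sequence."""
    states = []
    current = start
    for action in actions:
        current = predictor(current, action)  # feed each prediction back in
        states.append(current)
    return states


if __name__ == "__main__":
    print(rollout(toy_predictor, [0.0, 0.0], [[1.0, 0.0], [0.0, 2.0]]))
    # [[1.0, 0.0], [1.0, 2.0]]
```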

V-JEPA 2 shows strong zero-shot capabilities in robot planning. Trained on the open-source DROID dataset, it can be deployed directly on robots in Meta's labs without any fine-tuning for new environments or new robots.

For short-horizon tasks such as picking up and placing an object, the robot is simply given an image of the goal state. It uses the V-JEPA 2 predictor to "imagine" the consequences of a set of candidate actions and selects the action whose predicted outcome is closest to the goal. Through model-predictive control, the robot replans after each step and keeps executing. For longer, more complex tasks, the robot can be guided by a series of visual subgoals. In this way, V-JEPA 2 achieves success rates of 65% to 80% on pick-and-place tasks involving unseen objects in new environments.
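The planning loop described above can be sketched as sampling-based model-predictive control: sample candidate action sequences, imagine each one with the predictor, score the final imagined embedding by its distance to the goal embedding, execute only the first action of the best candidate, then replan. This sketch uses plain random shooting and the same toy "embedding = position, action = displacement" dynamics as a stand-in; Meta's planner may use a different optimizer and energy function, and `plan_step`, `run_mpc`, `horizon`, and `num_candidates` are hypothetical names.

```python
import random
from typing import List

Vector = List[float]


def predictor(state: Vector, action: Vector) -> Vector:
    """Toy stand-in for the action-conditioned predictor: embedding = position."""
    return [s + a for s, a in zip(state, action)]


def l1_distance(a: Vector, b: Vector) -> float:
    """Energy used to compare an imagined embedding with the goal embedding."""
    return sum(abs(x - y) for x, y in zip(a, b))


def plan_step(state: Vector, goal: Vector, rng: random.Random,
              horizon: int = 3, num_candidates: int = 64,
              max_step: float = 1.0) -> Vector:
    """Random-shooting planner: return the first action of the best candidate sequence."""
    best_cost = float("inf")
    best_first_action = [0.0] * len(state)
    for _ in range(num_candidates):
        actions = [[rng.uniform(-max_step, max_step) for _ in state]
                   for _ in range(horizon)]
        imagined = state
        for action in actions:
            imagined = predictor(imagined, action)  # "imagine" the outcome
        cost = l1_distance(imagined, goal)  # score only the final imagined state
        if cost < best_cost:
            best_cost, best_first_action = cost, actions[0]
    return best_first_action


def run_mpc(start: Vector, goal: Vector, steps: int,
            horizon: int = 3, seed: int = 0) -> Vector:
    """Execute one planned action per step, replanning from the new state each time."""
    rng = random.Random(seed)
    state = list(start)
    for _ in range(steps):
        action = plan_step(state, goal, rng, horizon=horizon)
        # On a real robot the environment would produce the next observation;
        # here the toy predictor doubles as the "true" dynamics.
        state = predictor(state, action)
    return state
```

Executing only the first action before replanning means errors in the imagined rollout are corrected at the next step. Random shooting is the simplest choice here; practical planners usually refine the candidate distribution iteratively instead of sampling it once.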


Setting New Benchmarks for Physical Understanding

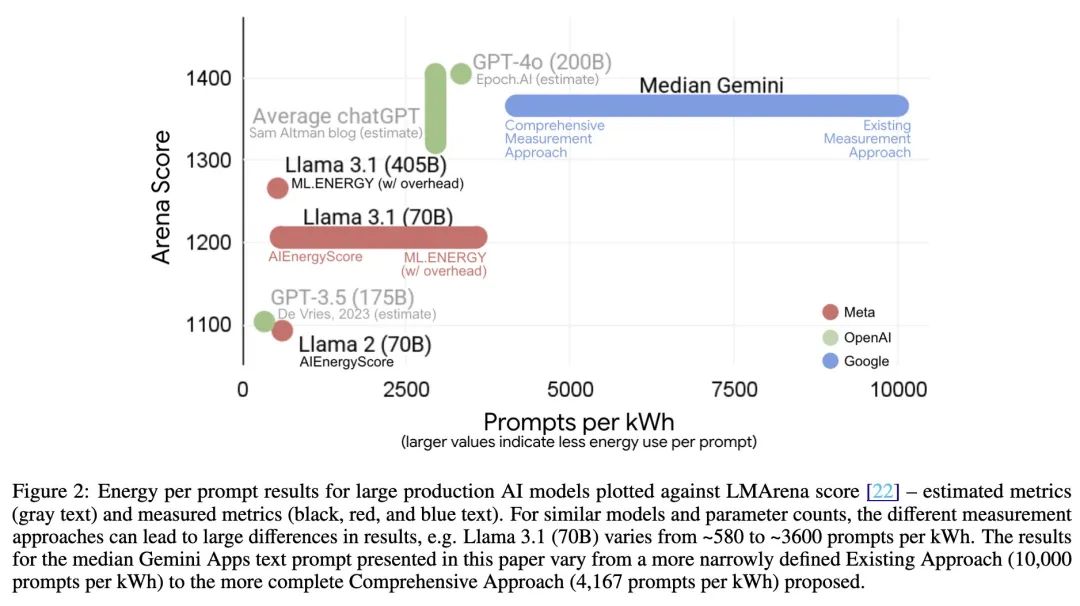

The three new benchmarks released with the model are no less important than the model itself. They are designed to fill gaps in current evaluation practice and push the community toward models that truly understand the physical world. While humans achieve 85% to 95% accuracy on these tests, top models, including V-JEPA 2, still fall significantly short of human performance.

- IntPhys 2

This benchmark measures a model's ability to distinguish physically "possible" from physically "impossible" scenarios. It uses a game engine to generate pairs of videos in which one video contains an event that violates the laws of physics at some point. The model must identify which video is the impossible one. Results show that current video models perform close to random guessing on this task.
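One common way to score a video model on possible-versus-impossible pairs, and the one assumed here, is violation of expectation: the model assigns each video a "surprise" score (for example, how badly its predictions match what actually happens), and the more surprising video is labeled impossible. The sketch below computes pairwise accuracy from such scores; `pair_accuracy` and the example scores are hypothetical, and IntPhys 2's official protocol may differ. Random guessing lands at 50%.

```python
from typing import List, Tuple

# (surprise for video 0, surprise for video 1, index of the impossible video)
Pair = Tuple[float, float, int]


def pair_accuracy(pairs: List[Pair]) -> float:
    """Fraction of pairs in which the more surprising video is the impossible one.

    Ties count as half a point, so a model that assigns every video the same
    score lands exactly at chance (50%).
    """
    score = 0.0
    for surprise_0, surprise_1, impossible in pairs:
        if surprise_0 == surprise_1:
            score += 0.5  # no preference: equivalent to a coin flip
        elif (0 if surprise_0 > surprise_1 else 1) == impossible:
            score += 1.0
    return score / len(pairs)


if __name__ == "__main__":
    example = [(0.9, 0.1, 0), (0.2, 0.7, 1), (0.5, 0.4, 1), (0.3, 0.3, 0)]
    print(pair_accuracy(example))  # 0.625
```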

- Minimal Video Pairs (MVPBench)

This benchmark assesses the physical understanding of video-language models through multiple-choice questions. Its key idea is that each sample comes with a "minimal-change pair": a visually very similar video whose question has the opposite answer. A model scores a point only if it answers both questions correctly, which effectively prevents it from taking "shortcuts" based on superficial visual or textual cues.
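The scoring rule is what blocks shortcuts. A minimal sketch, assuming a hypothetical format in which questions are keyed by ID and each minimal-change pair is a tuple of two IDs (not MVPBench's actual file format): a model that always picks the same option can score 50% per question when paired questions have opposite answers, yet earns nothing under paired scoring. `question_accuracy` and `paired_accuracy` are illustrative names.

```python
from typing import Dict, List, Tuple


def question_accuracy(predictions: Dict[str, str], answers: Dict[str, str]) -> float:
    """Conventional per-question accuracy, for comparison."""
    correct = sum(1 for qid, answer in answers.items() if predictions.get(qid) == answer)
    return correct / len(answers)


def paired_accuracy(predictions: Dict[str, str], answers: Dict[str, str],
                    pairs: List[Tuple[str, str]]) -> float:
    """A pair earns credit only if BOTH of its questions are answered correctly."""
    correct = sum(
        1 for first, second in pairs
        if predictions.get(first) == answers[first]
        and predictions.get(second) == answers[second]
    )
    return correct / len(pairs)


if __name__ == "__main__":
    answers = {"q1": "A", "q1_pair": "B", "q2": "A", "q2_pair": "B"}
    pairs = [("q1", "q1_pair"), ("q2", "q2_pair")]
    always_a = {qid: "A" for qid in answers}  # a "shortcut" model
    print(question_accuracy(always_a, answers))       # 0.5
    print(paired_accuracy(always_a, answers, pairs))  # 0.0
```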

- CausalVQA

This benchmark focuses on causal understanding of the physical world. It poses counterfactual ("What would have happened if...?"), anticipation ("What happens next?"), and planning ("What should I do next to achieve my goal?") questions, among others. Tests found that while large multimodal models answer "what happened" questions well, they perform poorly on questions such as "what could have happened" and "what might happen next".

Meta also published a leaderboard on Hugging Face to track the community's progress on these new benchmarks.

The Next Step to Advanced Machine Intelligence

V-JEPA 2 is just the beginning. It currently learns and makes predictions at a single time scale, but tasks such as "put the dishes in the dishwasher" or "bake a cake" require hierarchical planning across different time scales.

Future research will therefore focus on hierarchical JEPA models that can learn, reason, and plan across multiple spatial and temporal scales. Another important direction is multimodal JEPA models that fuse visual, auditory, tactile, and other sensory information to make predictions. These efforts aim to integrate AI more deeply with the physical world.