Lemon AI is a full-stack, open-source AI agent framework that provides a completely local runtime environment without relying on cloud services. It supports local large language models (LLMs) such as DeepSeek, Qwen, and Llama through Ollama. Lemon AI integrates a virtual machine sandbox to secure code execution, which suits individuals and businesses that need a localized AI solution. It can be deployed on PCs or enterprise servers and is compatible with a wide range of environments. It supports in-depth research, web browsing, code generation, and data analysis, and its flexible architecture can be customized as needed. Lemon AI provides one-click deployment, which lowers the technical threshold, and it can also connect to cloud models such as Claude, GPT, Gemini, and Grok via APIs, so users can combine local and cloud models.

Feature List

- Locally run AI agents: Supports local large language models such as DeepSeek, Qwen, and Llama, and keeps all data processing on the local machine.

- Virtual Machine Sandbox: Provides a secure code execution environment that protects user devices from potential risks.

- Code Generation and Interpretation: Generates high-quality code, supports an output length of up to 16K, and optimizes code format and syntax.

- In-depth research and web browsing: Supports planning, execution, and reflection for complex tasks; suitable for research and data collection.

- Data analysis: Processes and analyzes data and generates visualizations for a variety of analysis scenarios.

- API Integration: Supports connecting to cloud models (e.g. Claude, GPT) to extend functionality.

- One-Click Deployment: Provides Docker containers and a streamlined installation process that is compatible with PCs and enterprise servers.

- Flexible customization: The architecture lets users modify and extend it to fit different business scenarios.

Usage Guide

Installation process

Lemon AI offers several deployment methods: open-source code, Docker containers, client applications, and online subscriptions. The steps below use Docker deployment as an example and walk through installation and usage for both beginners and professional users.

- Installing Docker

Ensure that Docker is installed on your device; if not, visit the Docker website to download and install it. Windows users need to enable WSL (Windows Subsystem for Linux) to run Docker commands.

- Get Lemon AI code

Visit Lemon AI's GitHub repository (https://github.com/hexdocom/lemonai), click the green "Code" button, and select "Download ZIP" to download the source code, or clone the repository using Git:

git clone https://github.com/hexdocom/lemonai.git

Unzip the file or go to the cloned directory.

- Running a Docker Container

Open a WSL terminal (Windows users) or a regular terminal (Linux/Mac users) and go to the Lemon AI project directory. Make sure Docker Compose is installed, then run the following command to start the Docker container:

docker-compose up -d

This command automatically pulls the required images and starts the Lemon AI service.

- Configuring the Local Model

Lemon AI uses Ollama by default to run local large language models. Install Ollama (refer to the official Ollama website) and pull a supported model, for example:

ollama pull deepseek

On the Settings page of Lemon AI, configure the model path, making sure it points to the local model.

- Verify Installation

After startup, open the local address (usually http://localhost:3000) to view the Lemon AI interface. The interface displays the model status and available features. If the model is not loaded, check that Ollama is running correctly.
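The verification step can also be scripted. The following is a minimal sketch, not part of Lemon AI itself: it assumes the interface is served at http://localhost:3000 (the "usual" address mentioned above) and that Ollama listens on its default address, http://localhost:11434, where the /api/tags endpoint lists locally available models. The helper names are made up for illustration.

```python
import json
import urllib.error
import urllib.request

LEMON_UI_URL = "http://localhost:3000"  # assumed; the usual address per the text
OLLAMA_URL = "http://localhost:11434"   # Ollama's default address


def is_reachable(url: str, timeout: float = 3.0) -> bool:
    """Return True if an HTTP server answers at the URL, False otherwise."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # The server answered, just with an error status (e.g. 404).
        return True
    except (OSError, ValueError):
        # Connection refused, timeout, DNS failure, or a malformed URL.
        return False


def model_names(tags_payload: dict) -> list:
    """Extract model names from the JSON returned by Ollama's /api/tags."""
    return [model["name"] for model in tags_payload.get("models", [])]


def local_models(base_url: str = OLLAMA_URL, timeout: float = 3.0) -> list:
    """Ask a running Ollama server which models are available locally."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
        return model_names(json.load(resp))


if __name__ == "__main__":
    print("Lemon AI interface reachable:", is_reachable(LEMON_UI_URL))
    if is_reachable(OLLAMA_URL):
        print("Ollama models:", local_models())
    else:
        print("Ollama is not reachable; start it before loading a model.")
```

If the interface is reachable but the model list is empty, the model has probably not been pulled yet. If Ollama itself is not reachable, the model cannot load regardless of the Lemon AI settings.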

Functional operation flow

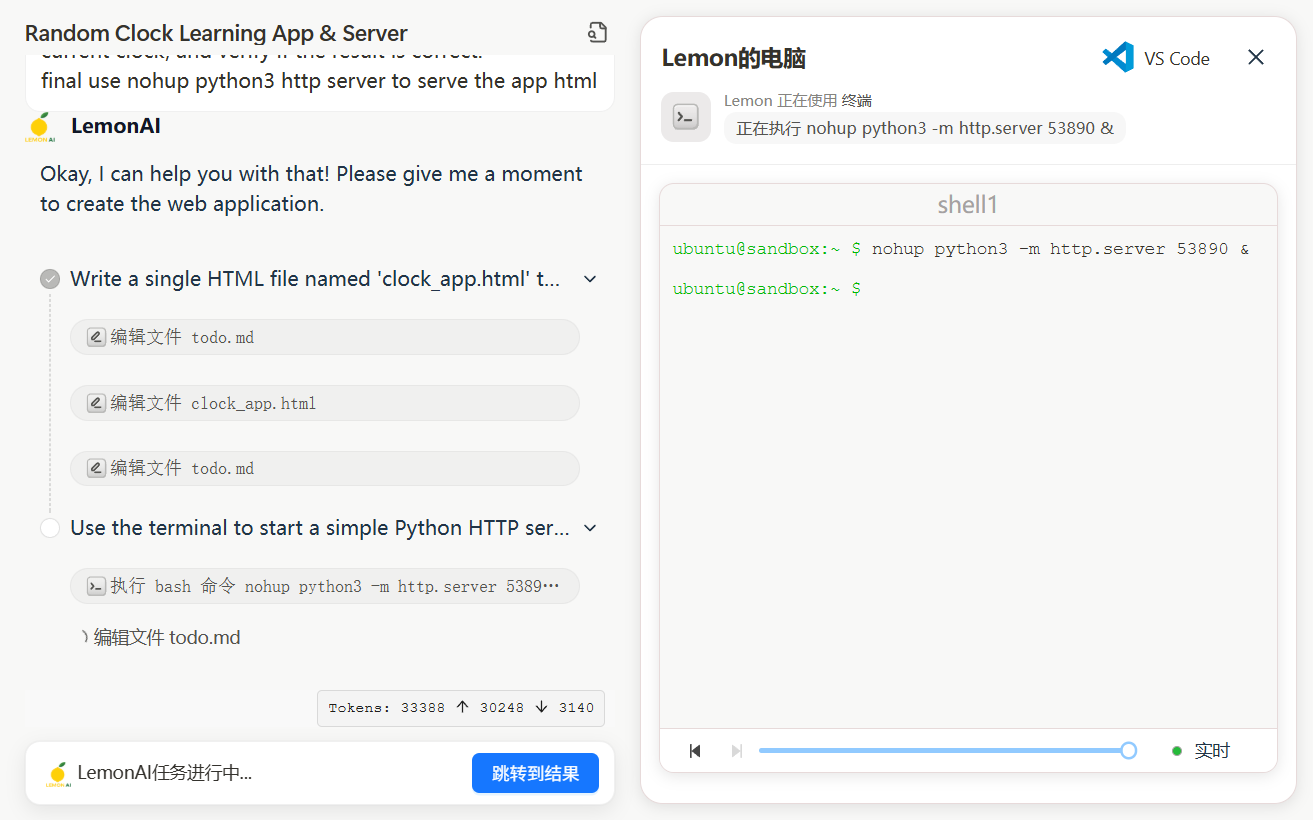

1. Code generation and interpretation

Lemon AI's code generation feature generates and optimizes code so that developers can quickly build scripts or solve programming problems. In the main interface, select the "Code Generation" module and enter the task requirements, such as "Write a Python script to crawl web data". The system calls the local model (e.g. DeepSeek-V3) to generate the code and displays it in the result area. Generated code can be up to 16K characters long and is formatted to minimize syntax errors. Users can copy the code directly or test it in the virtual machine sandbox, which isolates code execution and prevents it from affecting the local device. For example, run the following command to test the code:

python script.py

If you need to adjust the code, click on the "Edit" button, modify it and re-run it.

2. In-depth research and web browsing

Lemon AI offers a "ReAct Mode" to support the planning and execution of complex tasks. Open the "Research" module and enter a task, such as "Analyze the market trend of a certain industry". The system breaks the task down, automatically searches relevant web pages, and extracts information. Users can view search results, data summaries, and analysis reports. The research process runs locally to protect data privacy. Users can adjust the search depth or specify data sources in the settings.
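To make the ReAct idea concrete, here is a minimal sketch of the general pattern: the agent alternates between a thought, an action (a tool call), and an observation until it decides it can answer. This is not Lemon AI's implementation. The tools are stubs, and `scripted_policy` is a hypothetical stand-in for the language model that would normally choose the next step.

```python
from typing import Callable, Dict, List, Optional, Tuple

Step = Tuple[str, str, str]  # (thought, action, observation)


# Hypothetical tools; a real agent would call a search engine or a browser.
def search(query: str) -> str:
    return f"3 pages found for '{query}'"


def summarize(text: str) -> str:
    return f"summary of: {text}"


TOOLS: Dict[str, Callable[[str], str]] = {"search": search, "summarize": summarize}


def scripted_policy(task: str, history: List[Step]) -> Tuple[str, str, str]:
    """Stand-in for the model: returns (thought, action, action_input)."""
    if not history:
        return ("I need sources first.", "search", task)
    if len(history) == 1:
        return ("I have sources; condense them.", "summarize", history[-1][2])
    return ("I have enough to answer.", "finish", history[-1][2])


def react_loop(
    task: str,
    policy: Callable[[str, List[Step]], Tuple[str, str, str]] = scripted_policy,
    max_steps: int = 5,
) -> Tuple[Optional[str], List[Step]]:
    """Alternate thought -> action -> observation until the policy finishes."""
    history: List[Step] = []
    for _ in range(max_steps):
        thought, action, action_input = policy(task, history)
        if action == "finish":
            return action_input, history
        observation = TOOLS[action](action_input)
        history.append((thought, action, observation))
    return None, history  # step budget exhausted without an answer


if __name__ == "__main__":
    answer, steps = react_loop("market trend of a certain industry")
    for thought, action, observation in steps:
        print(f"{thought} -> {action} -> {observation}")
    print("Answer:", answer)
```

The `max_steps` bound is a common safeguard in loops like this: without it, a model that never chooses to finish would keep calling tools indefinitely.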

3. Data analysis

In the "Data Analysis" module, upload a CSV file or enter data directly, and Lemon AI generates statistical charts and analysis results. For example, after uploading sales data, the system can generate bar charts or line graphs to visualize trends. Supported analyses include mean, distribution, and correlation calculations. Users can export analysis results as PDF or image files.

4. API integration

To use a cloud model, go to the Settings - Model Services page and add an API key (e.g. for Claude or GPT). Lemon AI verifies that the key is valid and ensures a stable connection. Once configured, users can switch between local and cloud models for flexible task handling.

5. Customization extensions

Lemon AI's open-source architecture allows users to modify the code or add features. Developers can edit the src directory to add custom tools or modules. After making changes, rebuild the Docker container:

docker-compose build

Refer to the official documentation (https://github.com/hexdocom/lemonai/blob/main/docs) for more development guidelines.

Notes

- Privacy: All tasks run locally by default and data is not uploaded to the cloud. To report a security issue, send an email to service@hexdo.com rather than posting it publicly on GitHub.

- System requirements: At least 8GB of RAM and a 4-core CPU are recommended to ensure smooth operation.

- Updates: Check the Releases page of the GitHub repository regularly (https://github.com/hexdocom/lemonai/releases) to get the latest version and fixes.
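The recommended minimums (8GB of RAM, 4-core CPU) can be checked before installation. The sketch below is a best-effort illustration: the function names are made up, and the RAM lookup relies on `os.sysconf`, which is available on Linux (including WSL) and macOS but not on native Windows Python, where the function returns None.

```python
import os

MIN_CORES = 4     # recommended minimum stated in this section
MIN_RAM_GB = 8.0  # recommended minimum stated in this section


def total_ram_gb():
    """Best-effort physical RAM in GB; None where sysconf is unavailable."""
    try:
        pages = os.sysconf("SC_PHYS_PAGES")
        page_size = os.sysconf("SC_PAGE_SIZE")
    except (AttributeError, ValueError, OSError):
        return None  # e.g. native Windows Python, which lacks os.sysconf
    return pages * page_size / 1024**3


def meets_recommendation(cores, ram_gb):
    """Compare detected hardware with the recommended minimums."""
    if cores is None or ram_gb is None:
        return False  # unknown hardware is treated as "not confirmed"
    return cores >= MIN_CORES and ram_gb >= MIN_RAM_GB


if __name__ == "__main__":
    cores = os.cpu_count()
    ram = total_ram_gb()
    print(f"CPU cores: {cores}, RAM (GB): {ram}")
    print("Meets recommendation:", meets_recommendation(cores, ram))
```

A machine sold with 8GB often reports slightly less than 8.0 here, because some memory is reserved by firmware or the kernel. Treat a near miss as a prompt to check manually, not as a hard failure.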

Application Scenarios

- Individual research

Researchers use Lemon AI for documentation or market analysis. Local models handle sensitive data and generate structured reports, which protects privacy.

- Developer tools

Developers use the code generation feature to write scripts quickly and the sandbox environment to test the code safely and reliably.

- Enterprise data analytics

Enterprises deploy Lemon AI on local servers to analyze sales or operational data and generate visual charts to support decision-making.

- Educational support

Students use Lemon AI to assist with learning, generating code samples or organizing learning materials. Everything runs locally, which protects personal information.

FAQ

- Does Lemon AI require an internet connection?

Lemon AI can run completely offline using local models and the sandbox environment. Cloud models and search functionality require an internet connection and a configured API.

- How is safe code execution ensured?

Lemon AI's virtual machine sandbox isolates the environment in which code runs, preventing it from affecting the host system. All execution happens within the sandbox, which protects the device.

- What local models are supported?

DeepSeek, Qwen, Llama, Gemma, and others are supported and run through Ollama. Users can choose models according to their needs.

- How do I update Lemon AI?

Visit the Releases page of the GitHub repository to download the latest version, then re-run the Docker container. The documentation provides detailed update steps.