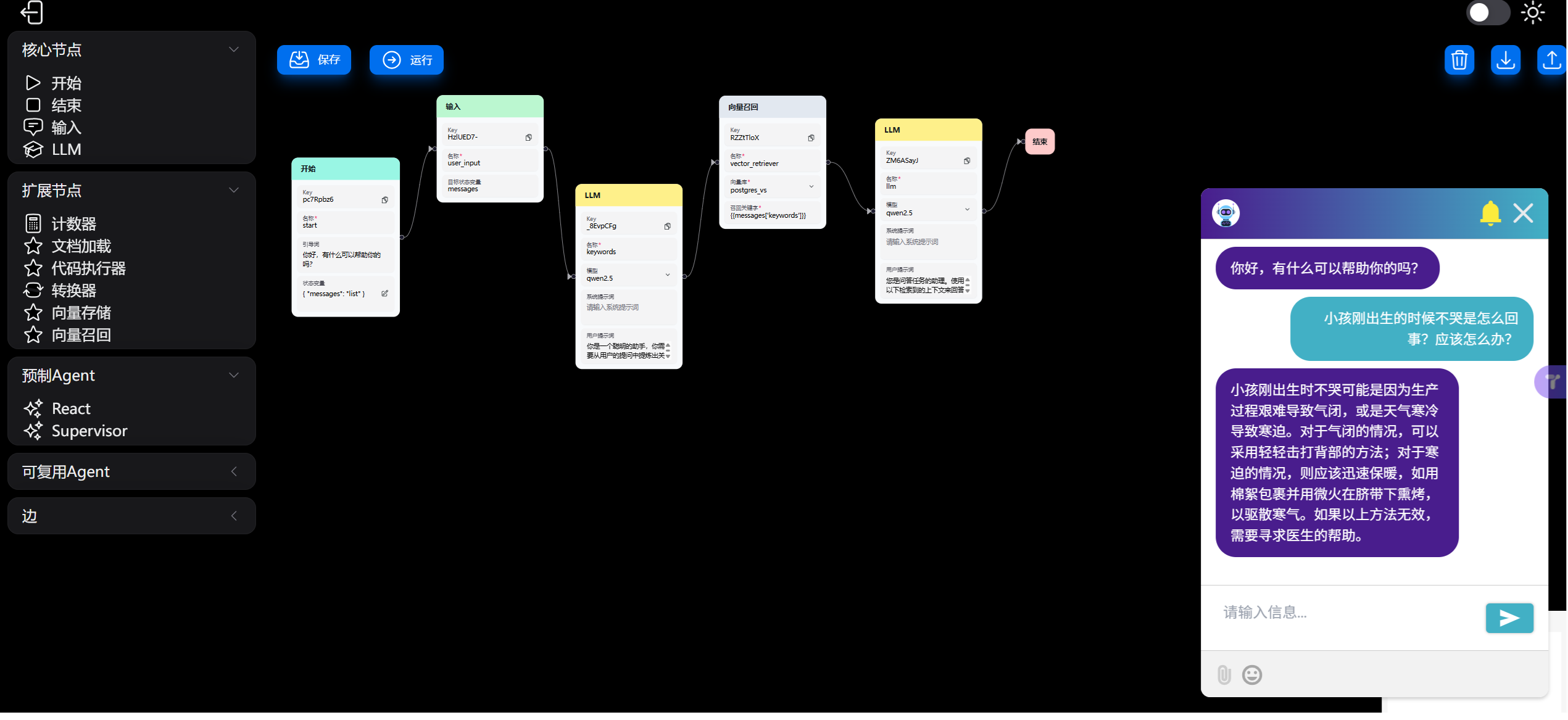

Lang-Agent is an AI agent configuration platform built on the LangGraph technology stack. It provides a visual interface in which users build complex workflows, with a limited degree of programmability, by dragging and dropping nodes and connecting them with edges. The project's design philosophy is close to that of ComfyUI: it encourages developers to create specialized functional nodes for their own business needs, rather than providing a fully encapsulated platform like Dify or Coze. The core feature of Lang-Agent is the concept of "state variables", which lets data be passed and controlled flexibly throughout the workflow. This removes the restriction in traditional workflows that the output of the previous node is the only input of the next node, and enables more precise and complex logic control. The project uses a separated front-end and back-end architecture: the back-end is based on FastAPI, and the front-end is built with ReactFlow and HeroUI.

Feature List

- Visual Process Orchestration: Provides a drag-and-drop canvas interface that allows users to intuitively build and organize the Agent's execution logic.

- Rich built-in nodes: The system has a variety of built-in core nodes, including start, end, user input, LLM (Large Language Model), counters, document loading, code executors, and vector storage and recall.

- State variable management: Supports custom global state variables, which can be used in node inputs and outputs and in the conditions on edges to control the workflow precisely.

- Prebuilt Agents: Two prebuilt Agents are integrated and can be used directly: ReactAgent (able to call external tools autonomously) and SupervisorAgent (able to call other Agents autonomously).

- Model and Vector Library Configuration: Users can configure connections to different Large Language Models (LLMs) and embedding models, and connect to vector databases such as Postgres and Milvus.

- Tool Call (MCP) Configuration: Supports configuring MCPs (tools that give large language models access to external services) for scenarios that require tool calls.

- High extensibility: Provides clear guidelines for custom node development, so developers can extend front-end components and back-end logic to suit specific business scenarios.

- Process Import and Export: Supports exporting configured Agent workflows to JSON files and importing them from JSON files for easy sharing and reuse.
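As a rough illustration of export and import, the sketch below round-trips a workflow description through JSON. The schema used here (the `state`, `nodes`, and `edges` keys and the node type names) is hypothetical and is not Lang-Agent's actual file format; the helper names `export_workflow` and `import_workflow` are also invented for this example.

```python
import json

# Hypothetical workflow description; the real export schema may differ.
workflow = {
    "name": "poet",
    "state": {"messages": [], "counter": 0},
    "nodes": [
        {"id": "start", "type": "start"},
        {"id": "llm", "type": "llm"},
        {"id": "end", "type": "end"},
    ],
    "edges": [
        {"source": "start", "target": "llm"},
        {"source": "llm", "target": "end"},
    ],
}


def export_workflow(wf: dict) -> str:
    """Serialize a workflow to a JSON string suitable for writing to a file."""
    return json.dumps(wf, ensure_ascii=False, indent=2)


def import_workflow(text: str) -> dict:
    """Parse a JSON string and check that every edge refers to a known node."""
    wf = json.loads(text)
    node_ids = {node["id"] for node in wf["nodes"]}
    for edge in wf["edges"]:
        if edge["source"] not in node_ids or edge["target"] not in node_ids:
            raise ValueError(f"edge refers to unknown node: {edge}")
    return wf


# Export followed by import should give back an equal structure.
restored = import_workflow(export_workflow(workflow))
```

Validating edges on import is a design choice of this sketch: a shared file that refers to a missing node fails early instead of producing a broken canvas.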

Usage Guide

Lang-Agent consists of two parts, a front-end (lang-agent-frontend) and a back-end (lang-agent-backend), which must be installed and started separately before use.

Installation and startup

1. Clone the project code

First, clone the project's source code locally from GitHub.

git clone https://github.com/cqzyys/lang-agent.git

2. Back-end installation and startup (lang-agent-backend)

The back-end uses Poetry for package management.

- Initialize the environment: Go to the back-end project directory and install the dependencies using Poetry.

cd lang-agent-backend

poetry env use python

poetry shell

poetry install

- Start the project: Run the following command to start the back-end service.

python -m lang_agent.main

3. Front-end installation and startup (lang-agent-frontend)

The front-end uses Yarn for package management.

- Install dependencies: Go to the front-end project directory and install the dependency packages using Yarn.

cd lang-agent-frontend

yarn install

- Start the project: Run the following command to start the front-end development server.

yarn dev

After a successful launch, open http://localhost:8820 to access the Lang-Agent interface.

Core Function Operation

1. Environment configuration

Before you start building an Agent, some basic configuration is required.

- Model Configuration:

- Click [Model Configuration] in the top navigation bar.

- Click the [+] icon in the upper right corner of the page to create a new model connection.

- Name: A unique custom name for the model.

- Type: Select llm (for language model nodes) or embedding (for vectorization nodes).

- Channel: Only OpenAI-compatible channels are currently supported.

- Model connection parameters: Fill in the model's API address, key, and other parameters.

- Vector Library Configuration:

- Click the [Vector Library Configuration] tab.

- Click the [+] icon to configure a new vector library.

- Name: A unique name for the vector library.

- Type: postgres and milvus are currently supported.

- Fill in the connection address (URI), username, and password for the vector library.

- Embedding model: Select an embedding model that has been set up in the model configuration.
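To make the model connection parameters more concrete, the sketch below builds (without sending) the kind of HTTP request an OpenAI-compatible endpoint expects: a POST to `/chat/completions` under the API address, with the key passed as a Bearer token. The address, key, and model name are placeholders, the helper `build_chat_request` is invented for this example, and whether Lang-Agent issues requests in exactly this way is not stated here.

```python
import json
import urllib.request


def build_chat_request(
    base_url: str, api_key: str, model: str, prompt: str
) -> urllib.request.Request:
    """Build (but do not send) a chat completion request for an OpenAI-compatible API."""
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Placeholder values; substitute the address, key, and model name of your provider.
request = build_chat_request("http://localhost:8000/v1", "sk-placeholder", "my-llm", "Hello")
print(request.full_url)
```

If a connection fails after configuration, comparing the configured API address against this URL shape (for example, a missing `/v1` suffix) is a reasonable first check.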

2. Agent configuration

This is the core functionality of the project: building Agents through a visual interface.

- Creating an Agent:

- Click the [Agent Configuration] tab, and then click the [Add Agent] card to enter the configuration page.

- The configuration page is divided into left and right sections: on the left is the resource tree, which contains all available nodes and Agents; on the right is the canvas, which is used to build workflows.

- Build process:

- Drag and drop nodes: Drag and drop the desired nodes (e.g., "Start Node", "LLM Node", "Input Node") from the resource tree on the left to the canvas on the right.

- Configure nodes:

- Start node: Each Agent must have exactly one. Here you can set the state variables, for example the default messages variable for storing conversation history. You can also add custom variables (such as counter, for counting).

- LLM node: This is the core of intelligent conversation. You need to select a configured llm model, and you can write System Prompts and User Prompts. Prompts can reference state variables with the syntax {{variable_name}} or {{messages['node_name']}}.

- Input node: Receives input from the user and stores it in the specified state variable (usually messages).

- Connect nodes:

- Default edge: Drag from the dot on the right of one node to the dot on the left of another node to create an execution path. After the source node finishes, the target node runs next.

- Conditional edge: After a connection is made, an execution condition can be set on the edge. The condition expression can test state variables. For example, the next node runs only when the counter variable {{counter}} is less than 5. This makes it possible to build loops and branching logic.

- Running and debugging:

- When the configuration is complete, click the [Save] button at the top of the canvas.

- Click the [Run] button to start the Agent.

- You can interact with the Agent and see the results of the run in the chat window at the bottom right corner of the interface.
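The interplay of state variables, prompt placeholders, and conditional edges described in this section can be sketched in plain Python. This is a simplified model for illustration, not Lang-Agent's or LangGraph's implementation: `render`, `fake_llm`, the `topic` variable, and the `nodes`/`edges` tables are all hypothetical, and the renderer handles only the `{{variable_name}}` form, not `{{messages['node_name']}}`.

```python
import re


def render(template: str, state: dict) -> str:
    """Replace each {{name}} placeholder with the value of state[name]."""
    return re.sub(r"\{\{\s*(\w+)\s*\}\}", lambda m: str(state[m.group(1)]), template)


def fake_llm(prompt: str) -> str:
    """Stand-in for an LLM node; a real node would call the configured model."""
    return f"response to: {prompt}"


def llm_node(state: dict) -> None:
    prompt = render("Write line {{counter}} about {{topic}}", state)
    state["messages"].append(fake_llm(prompt))


def counter_node(state: dict) -> None:
    state["counter"] += 1


# State variables as they might be declared on the start node.
state = {"messages": [], "counter": 0, "topic": "autumn"}

nodes = {"llm": llm_node, "counter": counter_node}

# Each edge is (target, condition). The first edge whose condition holds is taken.
edges = {
    "llm": [("counter", lambda s: True)],  # default edge
    "counter": [
        ("llm", lambda s: s["counter"] < 5),  # conditional edge: loop back
        ("end", lambda s: True),  # otherwise finish
    ],
}

current = "llm"
while current != "end":
    nodes[current](state)
    current = next(target for target, cond in edges[current] if cond(state))

print(state["counter"], len(state["messages"]))  # prints "5 5"
```

Because every node reads from and writes to the same `state` dictionary, the condition on an edge can depend on a value set several nodes earlier, which is what allows loops and branches.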

Application Scenarios

- Intelligent customer service and Q&A bots

A workflow can first receive the user's question, then retrieve relevant information from the knowledge base through the vector recall node, and finally pass the retrieved information together with the user's question to the LLM node to generate the final answer.

- Automated content generation

A poet Agent can be designed that generates one line of a poem with the "LLM node", then uses a "counter node" and "conditional edges" to loop until a complete poem has been generated.

- Agents for complex task processing

Using SupervisorAgent, a main Agent can autonomously decide, according to the user's instructions, to call sub-agents specialized in different tasks (e.g., data querying, file processing) and have them collaborate on a complex task.

- Code generation and execution

Users can state a computational requirement, have the "LLM node" generate Python code, and then pass the code to the "code executor node" to run it and return the result, yielding a simple programming assistant.
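The customer service scenario (recall relevant text, then hand it to the LLM together with the question) can be sketched with a toy in-memory knowledge base. Everything here is an assumption made for illustration: the word-count `embed` function stands in for a real embedding model, the list stands in for a Postgres or Milvus vector store, and the function names are invented.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: word counts. A real setup would call an embedding model."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


# Stand-in for documents stored in a vector library.
knowledge_base = [
    "Refunds are processed within five business days.",
    "Support is available on weekdays from 9 to 18.",
]


def recall(question: str, top_k: int = 1) -> list[str]:
    """Return the top_k documents most similar to the question."""
    q = embed(question)
    ranked = sorted(knowledge_base, key=lambda doc: cosine(q, embed(doc)), reverse=True)
    return ranked[:top_k]


def build_prompt(question: str) -> str:
    """Combine the recalled context with the question for the LLM node."""
    context = "\n".join(recall(question))
    return f"Answer using this context:\n{context}\n\nQuestion: {question}"


print(build_prompt("How long do refunds take?"))
```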

FAQ

- What is the difference between Lang-Agent and other Agent platforms (e.g. Dify, Coze)?

Lang-Agent's design is closer to ComfyUI: it does not provide a fully encapsulated application platform, but encourages users to extend it by developing custom nodes for their business needs. Its core advantage is more flexible process control and data transfer through "state variables", which provide limited but powerful programmability.

- What is a "state variable"? What does it do?

A "state variable" can be understood as a global dictionary that exists while the Agent runs. It can be used to pass data between nodes and to drive the conditions on conditional edges. For example, you can define a variable named count, use a "counter node" to add one to it on each loop iteration, and then use a "conditional edge" to check whether {{count}} has reached a certain value and decide whether to end the loop.

- How do I add a new function node to Lang-Agent?

Developers need to extend both the front-end and the back-end. On the front-end, create a new React component (a .tsx file) in the specified directory to define the node's interface and input parameters. On the back-end, create a new Python class file (a .py file) to define the node's business logic. Detailed pseudo-code and the directory structure are provided in the official documentation.

- What is the difference between ReactAgent and SupervisorAgent?

ReactAgent is an Agent that lets a large language model autonomously invoke external tools to accomplish tasks; these tools need to be predefined in the MCP configuration. SupervisorAgent is a "supervisor" Agent that does not perform the task itself but decides, based on instructions, which of the configured "reusable Agents" to call to accomplish it.
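The supervisor pattern from the last answer can be sketched as a router that delegates rather than doing the work itself. This is only a conceptual model: the sub-agent functions and names are hypothetical, and the keyword rule in `choose_agent` stands in for the decision a large language model would make in a real SupervisorAgent.

```python
from typing import Callable


# Hypothetical sub-agents; in Lang-Agent these would be configured reusable Agents.
def data_query_agent(task: str) -> str:
    return f"[data-query] handled: {task}"


def file_agent(task: str) -> str:
    return f"[file] handled: {task}"


SUB_AGENTS: dict[str, Callable[[str], str]] = {
    "data_query": data_query_agent,
    "file_processing": file_agent,
}


def choose_agent(task: str) -> str:
    """Stand-in for the LLM's routing decision: a simple keyword rule."""
    return "file_processing" if "file" in task.lower() else "data_query"


def supervisor(task: str) -> str:
    """Delegate the task to a sub-agent instead of performing it directly."""
    return SUB_AGENTS[choose_agent(task)](task)


print(supervisor("Convert this file to CSV"))  # prints "[file] handled: Convert this file to CSV"
```

A ReactAgent, by contrast, would keep the task and call tools itself; in this sketch that would correspond to `supervisor` invoking tool functions directly rather than handing the whole task to another Agent.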