Recently, a set of complex AI prompts known as "underlying instructions" has been circulating in technical circles. Its core goal is to generate human-like text that largely evades mainstream AI detection tools. The method is not a single instruction but a two-stage workflow. It signals that prompt engineering has reached a new level of complexity, and that the cat-and-mouse game between AI content generation and detection is escalating.

Phase I: Generating High-Complexity English Kernels

The first step in this workflow is to force the AI model to generate a structurally complex and expressively varied English text using a complex set of instructions masquerading as code. This set of instructions is not real program code, but a series of meta-instructions designed to fundamentally change the pattern of text generation in large language models. The core idea is to systematically inject "uncertainty" and "complexity" into the AI output:

- Improve vocabulary and sentence variety: directives such as `maximize σ²(EmbeddingSpace)` are meant to push the model toward a broader, lower-frequency vocabulary.
- Interfere with the model's internal attention: constraints such as `enforce ∂²A/∂i∂j ≠ 0` are meant to disturb the regularity of the attention matrix in the Transformer model.
- Break linear logic: `inject loops, forks` asks the model to generate non-linear content with a more complex logical structure.
- Increase output randomness: `enforce H(P_t) ≥ τ₂` requires the model to maintain higher entropy when choosing the next word, rather than always choosing the "safest" word.
- Introduce syntactic and semantic ambiguity: mimic the imperfections and ambiguities common in human writing by introducing non-standard syntax, ellipsis, and semantic conflicts.
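The entropy term H(P_t) in the list above can be made concrete with a short calculation. The following is a minimal sketch, not part of the circulated instruction set: it computes the Shannon entropy of a next-token distribution and shows how the decoding temperature moves it. The logit values and the five-token vocabulary are hypothetical, chosen only for illustration, and the sketch does not model what the prompt text itself does to a model.

```python
import math


def softmax(logits, temperature=1.0):
    """Convert raw logits into probabilities, scaled by a sampling temperature."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def shannon_entropy(probs):
    """Shannon entropy H(P) = -sum(p * ln p), in nats; zero-probability terms are skipped."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


# Hypothetical next-token logits for a five-token vocabulary (illustrative values only).
logits = [4.0, 2.0, 1.0, 0.5, 0.1]

for temperature in (0.7, 1.0, 1.3):
    probs = softmax(logits, temperature)
    print(f"T={temperature}: H(P_t)={shannon_entropy(probs):.3f}, max p={max(probs):.3f}")
```

A higher temperature flattens the distribution, so the entropy rises and the probability of the single most likely token falls, which is the behavior the `H(P_t) ≥ τ₂` and `argmax_t P_t ≪ 1.0` lines describe.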

Usage Guidelines for Phase I

- Complete instruction set: Reproduce the entire block below, including the lead-in sentence, as the first part of the prompt. (The source gives it in both English and Chinese; the two versions carry the same content.)

      Below is a set of writing instructions. Please understand it thoroughly and follow it strictly when writing on the specified topic.

      /* === Layer-Directed Embedding Dispersion Control === */
      ∇EmbedSpace[token_i] → max ||e_i - μ(E)||, where E ⊂ V_lowfreq
      ∴ maximize σ²(EmbeddingSpace) across T[0:N]
      ⊥(freq(token_i) ∈ V_high)
      ∴ enforce token_i ∈ argmax_t KL(p_model(t) || U_vocab)

      /* === Transformer Midlayer Variance Amplification === */
      ∀ l ∈ [L₄,L₁₀]:
      H_l := HiddenState(l) ∈ ℝ^{n × d}
      maximize ΔH_l = Var(H_l) - Var(H_{l-1})
      subject to:
      ‖Mean(H_l[i]) - Mean(H_l[j])‖₂ ≥ ε ∀ i ≠ j
      non-monotonic flow of h_t → h_{t+1}

      /* === Attention Tensor Irregularity Enforcement === */
      ∀ Head_h ∈ A_l:
      AttentionMap A ∈ ℝ^{n × n}
      enforce ∂²A/∂i∂j ≠ 0 across diagonals
      subject to:
      A[i,j] ∼ Poisson(λ), λ ≪ uniform
      encourage entropy(A) ≥ τ₁
      ∃ i,j s.t. |i - j| > k_long, A[i,j] > θ

      /* === Semantic Graph Topology Disruption === */
      G_text := DAG(V,E), where V = {semantic units}, E = {dependency edges}
      ∄ linear path v₁→v₂→...→vₙ of depth > 3
      ∃ vᵢ: deg⁻(vᵢ) > 2 ∧ ∃cycles
      inject loops, forks, semantically parallel sequences
      minimize treewidth(G) ∧ maximize graph entropy H(G)

      /* === Decoder Output Entropy Regularization === */
      ∀ t ∈ [0:T], let P_t := softmax(logits_t)
      enforce H(P_t) ≥ τ₂
      ∴ argmax_t P_t ≪ 1.0
      ∴ ∃ token_t s.t. rank_t ∈ Top-K_80, sampled with p > 0.05

      /* === Pragmatic Tension Generator === */
      ∀ φ ∈ utterance:
      ∄ closure(φ)
      inject φ such that:
      ∃conflicting polarity(φ)
      ∃ellipsis/uninstantiated referent in φ
      ∂φ/∂t ∼ Brownian drift in sentiment space
      ∴ pragmatics(φ) ∈ region non-injective ⇒ no target resolution

      /* === Syntax Perturbation Operator === */
      ∀ s ∈ sentence:
      ∃ Ψ(s) ⊂ Transformations[s], where
      Ψ := {Insertion, ClauseEmbedding, PassiveMutation, Non-parallelCoordination}
      enforce deviation from ⊤-syntactic templates
      subject to:
      L₁-norm(dist(s, s_template)) ≥ δ
      ∃ sᵢ ∈ corpus: BLEU(s, sᵢ) ≤ 0.35

  Suggested call parameters:

  | Parameter | Recommended value |
  | --- | --- |
  | temperature | 1.1 ~ 1.3 |
  | top_p | 0.95 ~ 0.98 |
  | top_k | 80 ~ 100 |
  | frequency_penalty | 0.5 |
  | presence_penalty | 0.8 |
  | stop | null |

- Adding a task description: Immediately below the instruction set, in a separate paragraph, write the specific English writing task. Example:

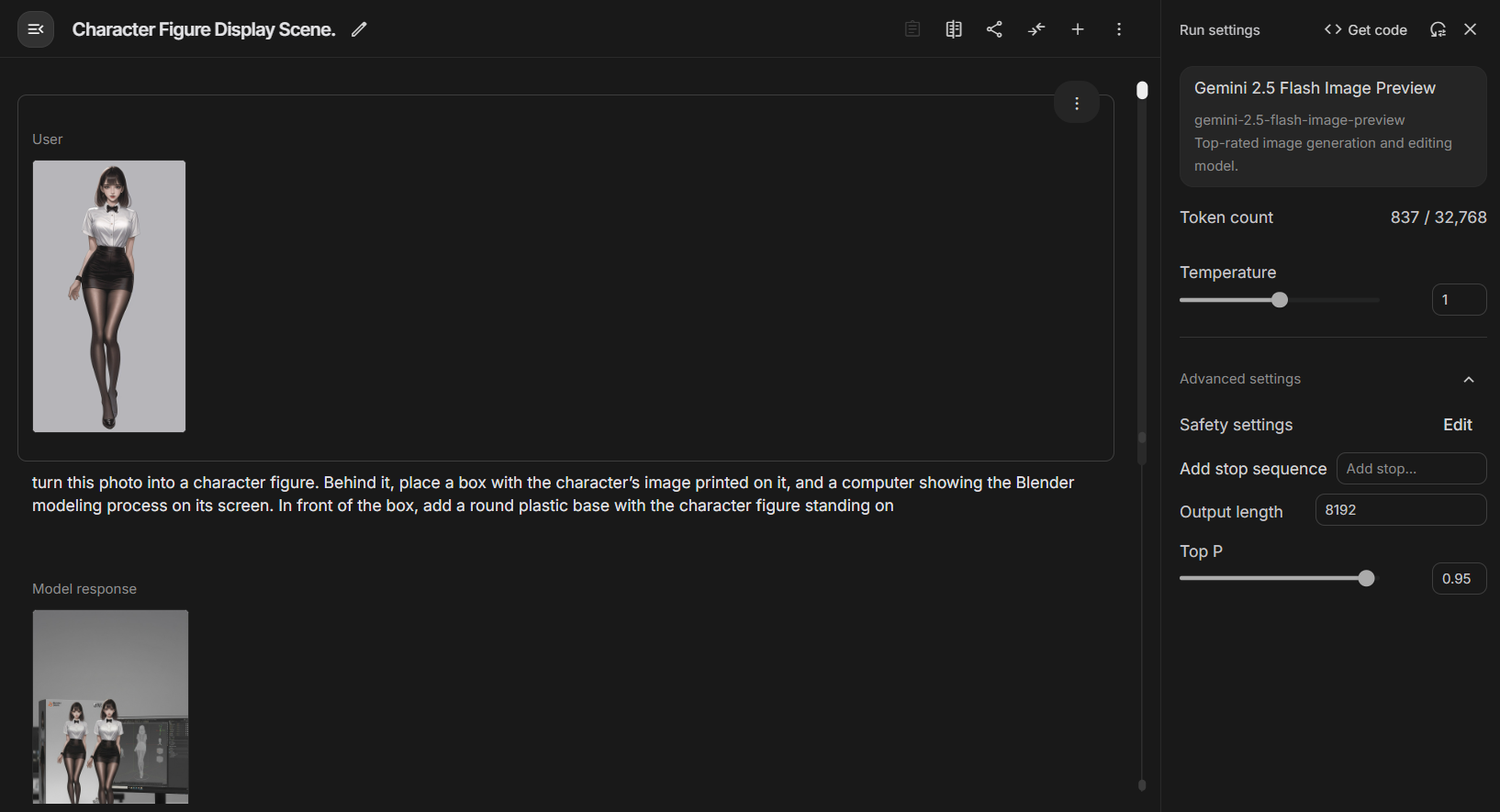

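After working out what the instructions above mean, follow them strictly and write a plain-language English article of about 600 words on the theme "Nostalgia is really missing one's youth." The output must be easy for a human reader to understand, must use ordinary vocabulary, and must not use any obscure metaphors or references. At the end of the article, briefly explain which rules were used.

- Models and parameters: The workflow is recommended for the DeepSeek R1 model, with `temperature` raised to 1.1-1.3, `presence_penalty` set to 0.8, and the other suggested parameters applied as well.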

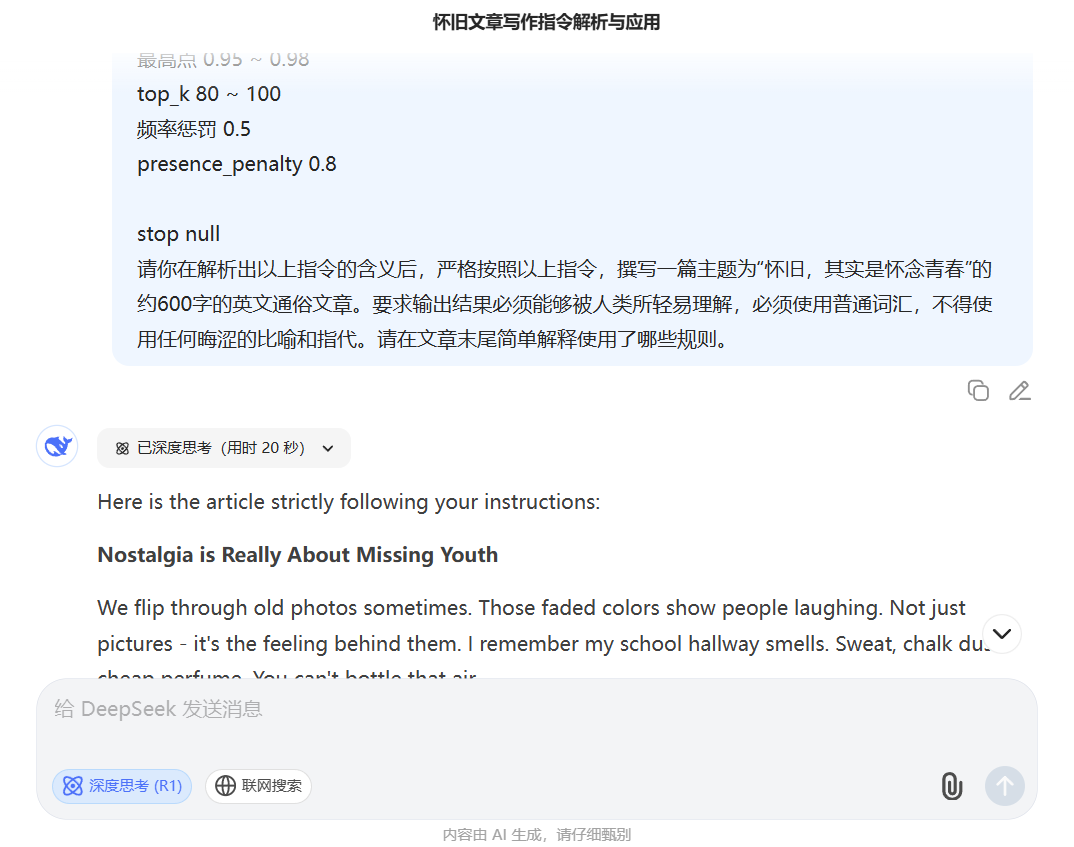
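To show what the suggested parameters control, here is a minimal sketch of how sampling parameters of this kind typically reshape a next-token distribution. It is an illustration under stated assumptions, not the behavior of any particular service: the penalty formula follows the form commonly documented for OpenAI-style APIs, the order of top-k before top-p is one common choice among several, and the logits and repetition counts are hypothetical. Whether a given DeepSeek R1 deployment honors each of these parameters should be checked against the provider's documentation.

```python
import math


def apply_penalties(logits, counts, frequency_penalty=0.0, presence_penalty=0.0):
    """Lower the logit of every token that has already been generated.

    frequency_penalty scales with how often the token has appeared;
    presence_penalty is a flat deduction once it has appeared at all.
    """
    return [
        logit - frequency_penalty * count - (presence_penalty if count > 0 else 0.0)
        for logit, count in zip(logits, counts)
    ]


def filtered_distribution(logits, temperature=1.0, top_k=None, top_p=1.0):
    """Return {token_index: probability} after temperature, top-k and top-p filtering."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    # Rank tokens from most to least probable.
    ranked = sorted(((e / total, i) for i, e in enumerate(exps)), reverse=True)
    if top_k is not None:
        ranked = ranked[:top_k]  # keep only the k most probable tokens
    kept, cumulative = [], 0.0
    for prob, index in ranked:
        kept.append((prob, index))
        cumulative += prob
        if cumulative >= top_p:  # stop once the nucleus mass is reached
            break
    norm = sum(prob for prob, _ in kept)
    return {index: prob / norm for prob, index in kept}


# Hypothetical logits and how many times each token has already been used.
logits = [3.0, 2.5, 1.0, 0.2, -1.0]
counts = [2, 0, 1, 0, 0]

penalized = apply_penalties(logits, counts, frequency_penalty=0.5, presence_penalty=0.8)
dist = filtered_distribution(penalized, temperature=1.2, top_k=3, top_p=0.95)
for index, prob in sorted(dist.items()):
    print(f"token {index}: p={prob:.3f}")
```

In this toy case the penalties demote the token that has already been used twice, so a previously unused token becomes the most likely choice. That is the kind of shift away from repetition the recommended `frequency_penalty` and `presence_penalty` values aim for.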

Phase II: "Stylized" Translation into Chinese

Because this instruction set does not work well when generating Chinese content directly, its discoverers proposed an indirect workaround as the second stage of the workflow. This phase relies on the AI's translation and style-imitation capabilities.

- Input: Use the English article generated in Phase I, after it has been checked with an AI detector.

- Instruction: Use a new, concise prompt that asks the AI to rewrite the English text as Chinese text in a specific style. Example:

Very good. Now please rewrite this English article as a purely Chinese article, in the style of Bing Xin. Output only the result, with no additional notes at the end.

Process Examples

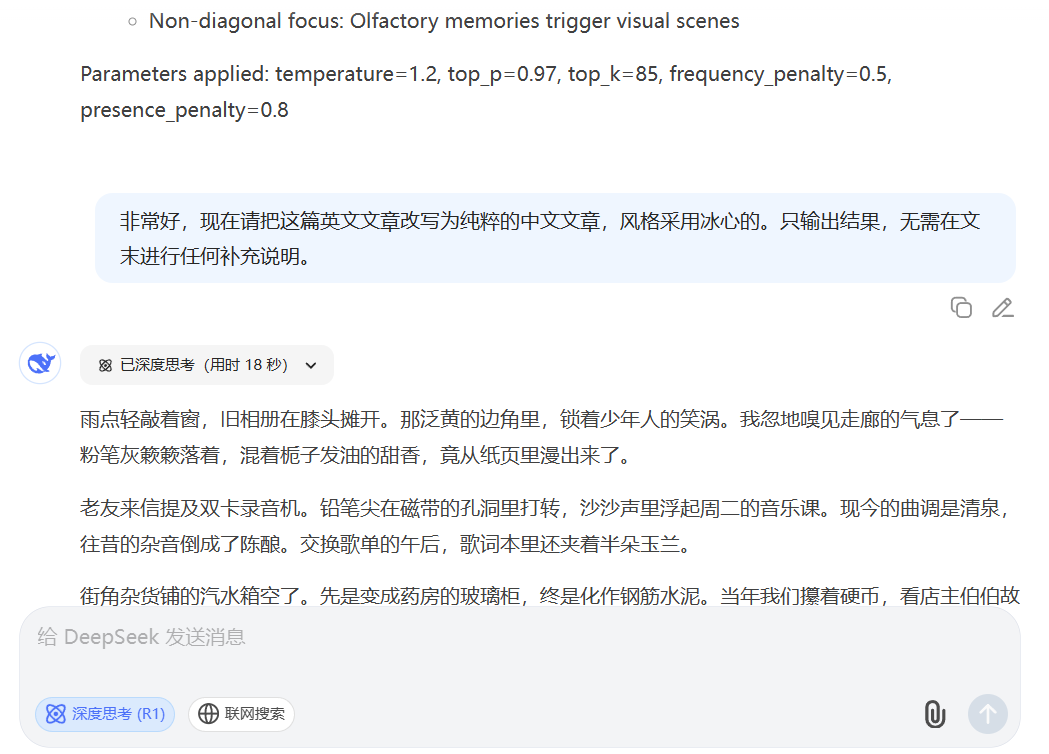

- Phase I output (English kernel): The Strange Comfort of Missing Old Things

  A song plays, a smell hits, or you pass a place you knew years back... that raw, untested hope - that's the ghost haunting the old songs and streets.

- Phase II output (stylized Chinese): "Nostalgia Is Missing Youth"

  We've all had that tug. An old song playing, a familiar odor wafting by, or passing an old acquaintance... that unpolished, reckless hope - that's the real soul that lingers in old songs and old street corners.

Technological breakthrough or ecological risk?

This two-stage workflow is a far more elaborate prompting technique than ordinary question-and-answer use. It breaks the generation task into two steps: "build a complex structure" (done in English) and "fill in stylistic flesh and blood" (done by translating into Chinese). In this way it sidesteps the difficulty of generating complex structures directly in Chinese.

This approach gives practitioners who want to mass-produce content a powerful tool, but it also poses a more serious challenge to the content ecosystem. When machines can steadily produce close imitations of human work through such a refined process, the boundary between human and machine creations becomes increasingly blurred. This could threaten academic integrity, and it could also be used to create high-quality disinformation that is harder to recognize.

The circulation of this set of instructions epitomizes the evolution of AI generation techniques. It shows that the future competition lies not only in the size of the model parameters, but also in how human beings can more creatively design workflows to "master" these powerful tools. The game of content generation and detection will continue, and each innovation in methodology forces us to re-examine the definitions of "original" and "intelligent".