When Google's Chief Scientist Jeff Dean and Vice President of AI Infrastructure Amin Vahdat co-signed and released a technical report, the entire industry listened. The report's core message came packaged in a highly communicable metaphor: processing a single text prompt with a Gemini model consumes less energy than watching 9 seconds of television.

This analogy is vivid and relatable, skillfully portraying the cost of a single AI interaction as insignificant. However, this microscopic perspective, endorsed by industry leaders themselves, obscures the staggering energy demands of AI technology on a global scale. When the tiny cost of a single query is multiplied by the astronomical number of daily queries, the story is completely different.

Why "median"? A choice between honesty and bias

Before we dive in, it's important to understand why Google chose the "median text query" as its core metric. Energy consumption varies dramatically across user interactions with AI, from simple questions like "What does 2+2 equal?" to complex tasks that require writing a paper thousands of words long.

If averages are used, a small number of extremely complex queries can dramatically inflate the overall value, thereby distorting the true cost of daily use for the average user. In contrast, the median is unaffected by outliers at either end of the spectrum and is more representative of the energy consumption of a typical query. The report estimates that a single median Gemini text query consumes 0.24 watt-hours (Wh) of energy, emits 0.03 grams of carbon dioxide, and uses 0.26 milliliters of water. Google emphasized that this is the first time in the industry that a major tech company has published the cost of using a large language model in such detail.
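To make the mean-versus-median point concrete, here is a minimal sketch. The per-query energy values are hypothetical and chosen only for illustration; they are not taken from the report.

```python
from statistics import mean, median

# Hypothetical per-query energy in watt-hours (illustrative only, NOT report data):
# 95 cheap queries plus 5 very expensive ones.
energy_wh = [0.2] * 95 + [20.0] * 5

avg = mean(energy_wh)    # pulled upward by the 5 expensive queries
med = median(energy_wh)  # reflects what a typical query costs

print(f"mean:   {avg:.2f} Wh")   # mean:   1.19 Wh
print(f"median: {med:.2f} Wh")   # median: 0.20 Wh
```

In this made-up distribution, 5% of the queries push the mean to roughly six times the median, which is the distortion the median is meant to avoid. The flip side is that the median says nothing about how expensive the heaviest queries are.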

Google's "full-stack" accounting: more than just the raw ingredients of computation

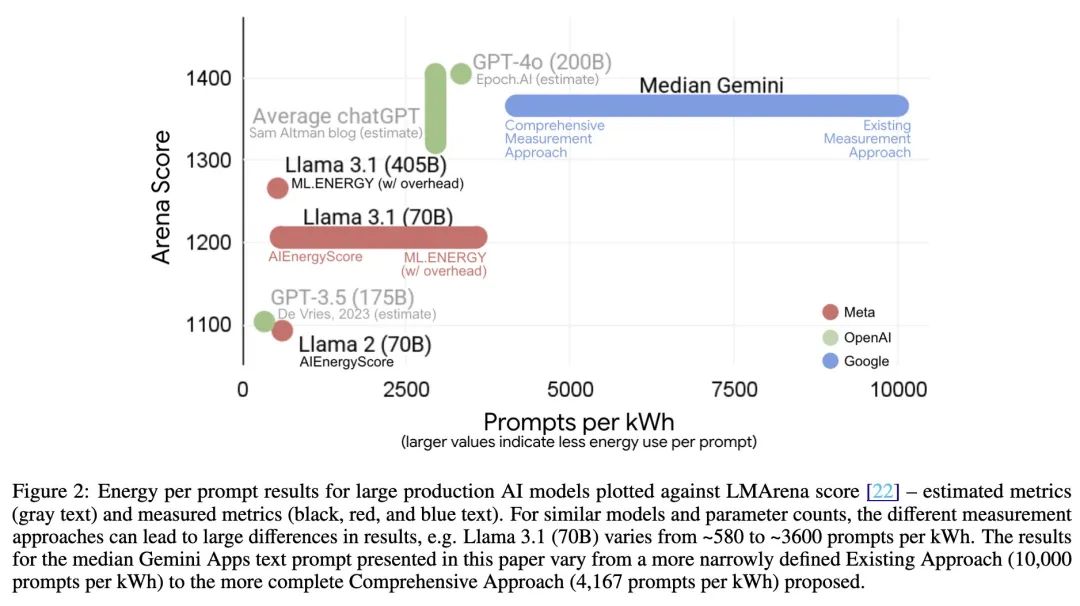

Another highlight of the report is its "full-stack" measurement approach. Google claims that many public estimates count only the power drawn by AI accelerators (such as TPUs or GPUs) during active computing, which grossly underestimates the true cost.

To use an analogy, this optimistic approach is like calculating the cost of a pizza by counting only the flour and tomatoes.

Google's "full-stack" approach, by contrast, accounts for all costs:

- Complete system power consumption: This includes not only the AI chips, but also the host servers, CPUs, and memory that support their operation.

- Standby idle systems: Energy consumed by servers in standby mode that are set aside to cope with traffic peaks.

- Data center overhead: Captured by the PUE (power usage effectiveness) metric, this covers all additional consumption from cooling systems, power distribution, and so on. Google claims that its data center fleet has an average PUE of 1.09, an industry-leading level.

Under this more comprehensive approach, a single Gemini query uses 0.24 watt-hours of energy. Google notes that this figure would drop to 0.10 watt-hours if it adopted the industry's narrower approach of counting only chip power consumption. [3] The move is certainly a way of signaling the transparency and honesty of its own data, while also putting pressure on its competitors.
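As a rough sketch of what these numbers imply, the snippet below applies the standard definition of PUE (total facility energy divided by IT equipment energy) to the figures quoted above. The function name is hypothetical, and the split assumes the 0.24 Wh figure already includes the PUE overhead, as the full-stack description suggests.

```python
PUE = 1.09            # fleet average cited by Google
FULL_STACK_WH = 0.24  # full-stack figure per median query
CHIP_ONLY_WH = 0.10   # narrow, chip-only figure per median query

def facility_energy(it_energy_wh: float, pue: float) -> float:
    """Total facility energy implied by PUE = total energy / IT energy."""
    return it_energy_wh * pue

# Assumption: FULL_STACK_WH includes data center overhead, so dividing by PUE
# recovers the energy used by the IT equipment itself.
it_energy_wh = FULL_STACK_WH / PUE
overhead_share = 1 - 1 / PUE           # fraction of facility energy that is overhead
ratio = FULL_STACK_WH / CHIP_ONLY_WH   # how much the narrow method understates

print(f"IT equipment energy: {it_energy_wh:.3f} Wh")
print(f"overhead share:      {overhead_share:.1%}")
print(f"full-stack / narrow: {ratio:.1f}x")
```

With a PUE of 1.09, cooling and power distribution account for only about 8% of the total, so most of the gap between 0.10 Wh and 0.24 Wh must come from the other two items in the list: host hardware and idle capacity.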

Behind the leaps in efficiency

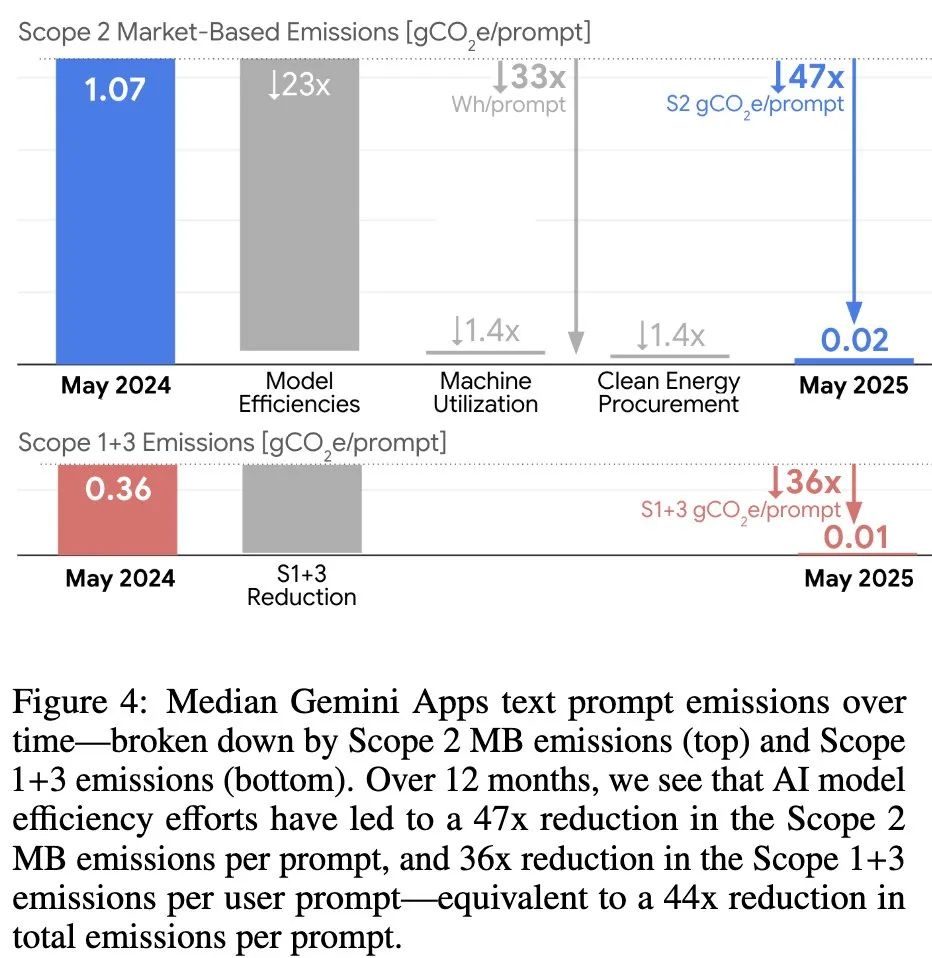

The report also emphasizes Gemini's striking efficiency gains over the past year: energy consumption per query fell by a factor of 33 and the carbon footprint by a factor of 44, all while delivering higher-quality responses.

This leap is not a breakthrough in a single technology, but the result of its "full-stack optimization" strategy, which covers every aspect from model architecture to hardware:

- Efficient model architecture: Adopting architectures such as Mixture-of-Experts (MoE) allows the model to activate only the portion of the "expert" network needed to process a request, thus reducing the amount of computation by a factor of 10 to 100.

- Optimized algorithms: Techniques such as quantization and distillation create smaller, faster versions of the model without sacrificing too much quality.

- Intelligent inference system: Techniques such as speculative decoding allow small models to make predictions first, which are then quickly verified by large models, dramatically improving response efficiency.

- Customized hardware: Google's self-developed TPU (Tensor Processing Unit) is optimized from the ground up for AI operations and achieves a very high energy-efficiency ratio.

To be fair, Google deserves credit for its engineering achievements in reducing the cost of a single AI query. However, take a look beyond the "9 seconds" of a single query and a very different picture emerges.

Macro Perspective: When "9 Seconds" Add Up to "285 Years"

The "9-second TV" analogy is clever because it capitalizes on the human sense of small costs. But the real test is scale.

Currently, there are no official figures for the number of global daily Gemini queries, but industry estimates range from a conservative 28 million to an optimistic 1 billion. We might as well use each of these two numbers for our calculations.

Scenario 1: 1 billion queries (optimistic estimate)

- Total daily energy consumption: 1 billion queries × 0.24 watt-hours per query = 240 million watt-hours, or 240,000 kilowatt-hours (kWh).

- What does it mean?: An average Indian household uses about 3 kWh of electricity per day. This means that one day of Gemini's energy consumption is enough to cover the daily electricity use of 80,000 Indian households.

- Let's change the metaphor: It's the equivalent of one person watching TV continuously, without sleep, for 285 years.

Scenario 2: 28 million queries (conservative estimate)

- Total daily energy consumption: 28 million queries × 0.24 watt-hours per query = 6.72 million watt-hours, or 6,720 kilowatt-hours (kWh).

- What does it mean?: Even so, one day of Gemini's energy consumption is enough to cover the daily electricity use of 2,240 Indian households.

- Let's change the metaphor: It's the equivalent of watching TV continuously for 8 years.

Regardless of the scenario, the calculations reveal the same reality: an analogy that makes a single act seem trivial describes a striking total impact once it is placed on a global scale.
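The two scenarios can be reproduced with a few lines of arithmetic. This is a minimal sketch using only the figures quoted in this article; the function name and the use of a 365.25-day year are assumptions made for illustration.

```python
WH_PER_QUERY = 0.24          # median text query, per the report
TV_SECONDS_PER_QUERY = 9     # the report's TV analogy
HOUSEHOLD_KWH_PER_DAY = 3    # Indian household figure used in this article
SECONDS_PER_YEAR = 365.25 * 24 * 3600  # assumption: average year length

def daily_footprint(queries_per_day: int) -> dict:
    """Scale the per-query figures up to one day of queries."""
    kwh = queries_per_day * WH_PER_QUERY / 1000
    return {
        "kwh": kwh,
        "households": kwh / HOUSEHOLD_KWH_PER_DAY,
        "tv_years": queries_per_day * TV_SECONDS_PER_QUERY / SECONDS_PER_YEAR,
    }

scenarios = {"optimistic": 1_000_000_000, "conservative": 28_000_000}
for label, queries in scenarios.items():
    result = daily_footprint(queries)
    print(
        f"{label}: {result['kwh']:,.0f} kWh/day, "
        f"{result['households']:,.0f} households, "
        f"{result['tv_years']:.0f} years of TV"
    )
```

Because every quantity scales linearly with query volume, any other estimate of daily queries can be dropped into `scenarios` to see where it lands between the two bounds.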

The real challenge: Can efficiency gains catch up with the demand explosion?

With this report, Google intends to prove that it has solved the technical problem of "cost per query" and to put the ball in its competitors' court. However, this neatly sidesteps the deeper dilemma faced by the industry as a whole.

The real challenge has long since moved beyond optimizing the tiny cost of a single query. Rather, it is how to meet exponentially growing demand with sustainable energy as AI services are fully integrated into search, email, and even operating systems, and as query volume moves from billions to hundreds of billions.

Advances in technology bring about efficiency, but increased efficiency spurs wider application, leading to an explosion in aggregate demand. It's a classic "Jevons paradox." Google says it is working toward 24/7 carbon-free energy for its data centers and has committed to replenishing 120% of the fresh water it consumes. However, globally, especially in regions where the energy mix is not yet clean, new data centers will still put a huge strain on the power grid.
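The Jevons dynamic can be put in numbers with a simple break-even sketch. It takes the 33-fold per-query efficiency gain cited in the report and asks how total energy changes under different demand growth multiples. The growth multiples and the function name are hypothetical, chosen only to illustrate the trade-off.

```python
EFFICIENCY_GAIN = 33  # per-query energy reduction cited in the report

def total_energy_ratio(demand_growth: float,
                       efficiency_gain: float = EFFICIENCY_GAIN) -> float:
    """Total energy after / total energy before.

    demand_growth: how many times more queries are served (hypothetical input).
    efficiency_gain: how many times less energy each query uses.
    """
    return demand_growth / efficiency_gain

# Hypothetical demand multiples; 100x corresponds to the move from
# billions to hundreds of billions of queries mentioned above.
for growth in (10, 33, 100):
    ratio = total_energy_ratio(growth)
    print(f"{growth:>3}x more queries -> {ratio:.2f}x total energy")
```

Total consumption stays flat only if demand grows no faster than efficiency improves. At a 100-fold increase in queries, even a 33-fold efficiency gain leaves total energy use roughly three times higher than before.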

While tech giants are talking about how AI will change the world, it might be more important for them to have an honest discussion about what will power this reshaped world.

Reference: https://arxiv.org/abs/2508.15734