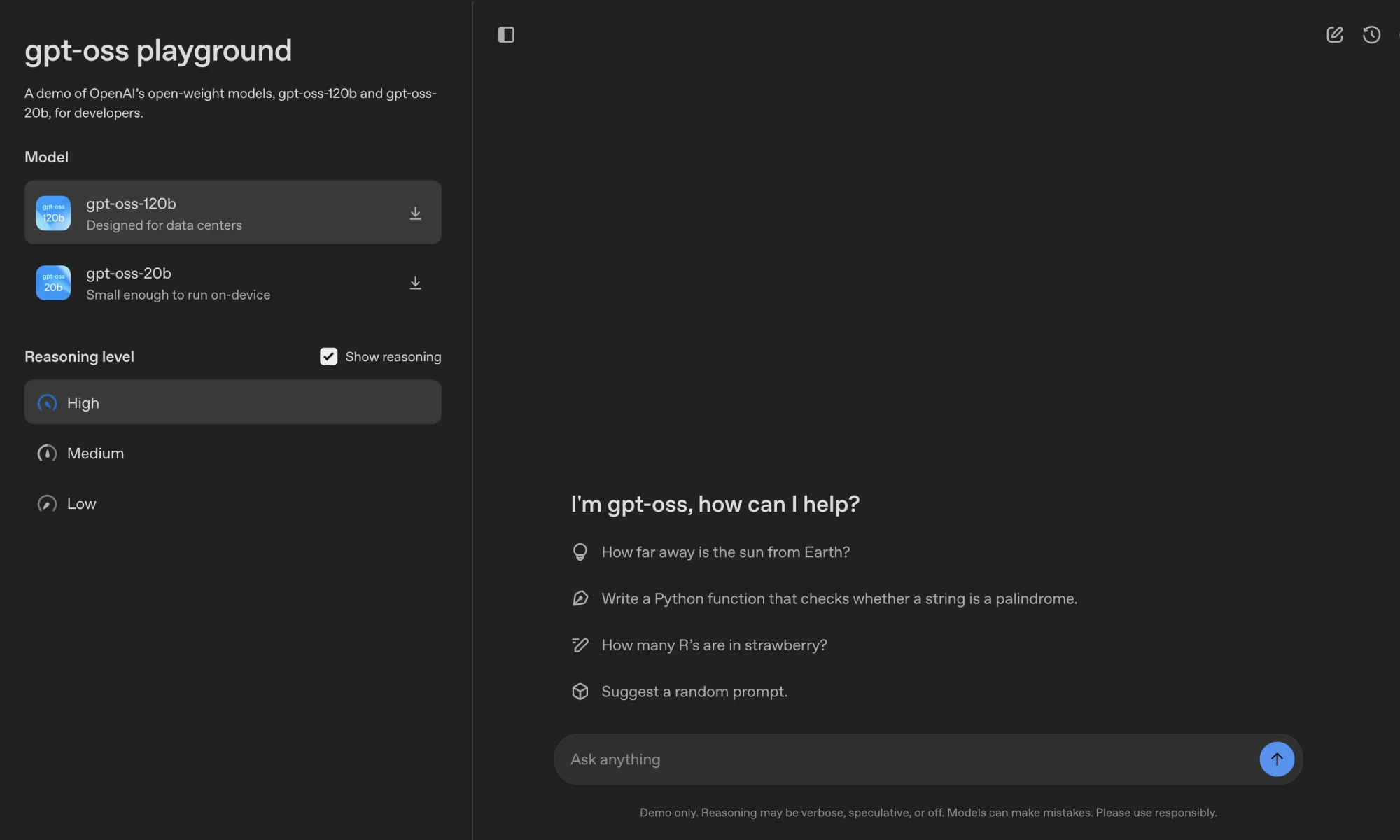

GPT-OSS is a family of open source language models from OpenAI, comprising gpt-oss-120b and gpt-oss-20b, with 117 billion and 21 billion parameters respectively. Under the Apache 2.0 license, developers can download, modify, and deploy them free of charge. gpt-oss-120b targets data centers and high-end hardware and runs on a single Nvidia H100 GPU. gpt-oss-20b targets low-latency scenarios and runs on devices with 16GB of memory. The models support chain-of-thought reasoning, tool calls, and structured outputs, which makes them suitable for agentic tasks and local applications. OpenAI has applied safety training and external review to the models, which are aimed at enterprises, researchers, and individual developers.

Feature List

- Open source model download: Model weights for gpt-oss-120b and gpt-oss-20b are available free of charge on Hugging Face.

- Efficient inference: With MXFP4 quantization, gpt-oss-120b runs on a single GPU and gpt-oss-20b runs on devices with 16GB of memory.

- Adjustable reasoning: Supports low, medium, and high reasoning effort, so developers can trade off performance against latency for each task.

- Tool calls: Built-in web search, Python code execution, and file manipulation tools make the models more interactive.

- Harmony format: Outputs use the dedicated Harmony response format, which keeps them structured and easy to debug.

- Multi-platform support: Compatible with Transformers, vLLM, Ollama, LM Studio, and other frameworks, and adapted to a wide range of hardware.

- Safety mechanisms: Deliberative alignment and an instruction hierarchy reduce risks such as prompt injection.

- Fine-tunable: Supports full-parameter fine-tuning to adapt the models to specific tasks.

- Long context support: Native support for a 128k context length for complex tasks.
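Here is a rough sketch of why MXFP4 lets the models fit on smaller hardware. In the OCP Microscaling (MX) format, each block of 32 FP4 (E2M1) values shares one 8-bit power-of-two (E8M0) scale. That works out to 4.25 bits per parameter. The helper names below are hypothetical and this is not gpt-oss source code. The memory estimate also assumes every parameter is stored in MXFP4. The released checkpoints quantize only the mixture-of-experts weights, so treat the result as a lower bound on weight storage that excludes KV cache and activations.

```python
# Minimal sketch of MXFP4 arithmetic (assumption: OCP MX block layout, not gpt-oss code).

# Magnitudes representable by a 3-bit E2M1 code; the 4th (top) bit is the sign.
E2M1_MAGNITUDES = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]
BLOCK_SIZE = 32  # number of FP4 elements that share one scale


def bits_per_param(block_size: int = BLOCK_SIZE) -> float:
    """4 bits per element plus one 8-bit shared scale amortized over the block."""
    return 4 + 8 / block_size


def weight_gb(num_params: float, bits: float) -> float:
    """Weight storage in gigabytes (1 GB = 1e9 bytes); ignores KV cache and activations."""
    return num_params * bits / 8 / 1e9


def dequantize_block(codes: list[int], scale_byte: int) -> list[float]:
    """Decode 4-bit codes that share an E8M0 scale of 2 ** (scale_byte - 127).

    The NaN encoding of the scale byte (0xFF) is not handled in this sketch.
    """
    scale = 2.0 ** (scale_byte - 127)
    values = []
    for code in codes:
        sign = -1.0 if code & 0b1000 else 1.0
        values.append(sign * E2M1_MAGNITUDES[code & 0b0111] * scale)
    return values


if __name__ == "__main__":
    bits = bits_per_param()
    for name, params in [("gpt-oss-120b", 117e9), ("gpt-oss-20b", 21e9)]:
        print(f"{name}: ~{weight_gb(params, bits):.1f} GB in MXFP4 vs "
              f"~{weight_gb(params, 16):.1f} GB in BF16")
```

The arithmetic is consistent with the hardware figures above. About 62 GB of MXFP4 weights for gpt-oss-120b fits within one 80GB GPU, while the same weights in BF16 (about 234 GB) would not.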

Usage Guide

Installation process

To use the GPT-OSS model, download the model weights and configure the environment. The following are the detailed steps:

- Download model weights

Install `huggingface-cli` (`pip install huggingface_hub`), then get the model weights from Hugging Face:

```bash
huggingface-cli download openai/gpt-oss-120b --include "original/*" --local-dir gpt-oss-120b/
huggingface-cli download openai/gpt-oss-20b --include "original/*" --local-dir gpt-oss-20b/
```

- Configure the Python environment

Create a virtual environment with Python 3.12 (`--seed` installs pip into the new environment, which `uv venv` omits by default):

```bash
uv venv gpt-oss --python 3.12 --seed
source gpt-oss/bin/activate
pip install --upgrade pip
```

Install the dependencies:

```bash
pip install transformers accelerate torch
pip install gpt-oss
```

The Triton implementation requires additional installation steps:

```bash
git clone https://github.com/triton-lang/triton
cd triton
pip install -r python/requirements.txt
pip install -e .
pip install "gpt-oss[triton]"
```

- Run the model

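- Transformers implementation: Load and run gpt-oss-20b:

```python
from transformers import pipeline

model_id = "openai/gpt-oss-20b"

# torch_dtype="auto" uses the checkpoint's dtype; device_map="auto" spreads layers across available devices
pipe = pipeline("text-generation", model=model_id, torch_dtype="auto", device_map="auto")

messages = [{"role": "user", "content": "What is quantum mechanics?"}]
outputs = pipe(messages, max_new_tokens=256)
# generated_text holds the whole conversation; the last entry is the model's reply
print(outputs[0]["generated_text"][-1])
```

The model must be prompted in the Harmony format or it will not work properly. When you pass a list of chat messages as above, the Transformers chat template applies this format automatically.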

- vLLM implementation: Start an OpenAI-compatible server:

```bash
uv pip install --pre vllm==0.10.1+gptoss --extra-index-url https://wheels.vllm.ai/gpt-oss/
vllm serve openai/gpt-oss-20b
```

- Ollama implementation (consumer-grade hardware):

```bash
ollama pull gpt-oss:20b
ollama run gpt-oss:20b
```

- LM Studio implementation:

```bash
lms get openai/gpt-oss-20b
```

- Apple Silicon implementation: Convert the weights to the Metal format and run them (from a clone of the gpt-oss repository):

```bash
pip install -e ".[metal]"
python gpt_oss/metal/scripts/create-local-model.py -s gpt-oss-20b/original/ -d gpt-oss-20b/metal/model.bin
python gpt_oss/metal/examples/generate.py gpt-oss-20b/metal/model.bin -p "Why did the chicken cross the road?"
```
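Once a server is running, any HTTP client can talk to it. The sketch below builds an OpenAI-style Chat Completions request using only the Python standard library. It assumes vLLM's default local address (`http://localhost:8000/v1`) and Ollama's OpenAI-compatible endpoint (`http://localhost:11434/v1`). The helper names `build_chat_request` and `send` are hypothetical, and the sampling values default to `temperature=1.0` and `top_p=1.0`.

```python
import json
import urllib.request

# Assumed default local endpoints; adjust host and port to match your setup.
VLLM_BASE_URL = "http://localhost:8000/v1"     # e.g. after `vllm serve openai/gpt-oss-20b`
OLLAMA_BASE_URL = "http://localhost:11434/v1"  # Ollama's OpenAI-compatible endpoint


def build_chat_request(base_url: str, model: str, prompt: str,
                       temperature: float = 1.0, top_p: float = 1.0) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style Chat Completions POST request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
        "top_p": top_p,
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request, timeout: float = 300.0) -> str:
    """Send the request to a running server and return the assistant's reply text."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

For example, `send(build_chat_request(VLLM_BASE_URL, "openai/gpt-oss-20b", "What is quantum mechanics?"))` queries the vLLM server. To query Ollama instead, use `OLLAMA_BASE_URL` with the model name `gpt-oss:20b`. Keeping request building separate from sending makes the payload easy to inspect without a live server.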


Using the Features

- Reasoning effort: The model supports three reasoning effort levels (low, medium, and high), which developers set in the system message. For example:

```python
from openai_harmony import ReasoningEffort, SystemContent

system_message_content = SystemContent.new().with_reasoning_effort(ReasoningEffort.HIGH)
```

High effort suits complex tasks such as mathematical reasoning. Low effort suits quick answers.

- Harmony format: The model's output is split into the `analysis` channel (the reasoning process) and the `final` channel (the final answer). Use the Harmony library to render prompts and parse outputs. For example, to render a conversation for completion:

```python
from openai_harmony import (
    Conversation,
    HarmonyEncodingName,
    Message,
    Role,
    load_harmony_encoding,
)

encoding = load_harmony_encoding(HarmonyEncodingName.HARMONY_GPT_OSS)
messages = [Message.from_role_and_content(Role.USER, "What is the weather like in San Francisco?")]
conversation = Conversation.from_messages(messages)
# Prompt token IDs, ending where the assistant's reply should begin
token_ids = encoding.render_conversation_for_completion(conversation, Role.ASSISTANT)
```

Show users only the content of the `final` channel.

- Tool calls:

- Web search: The `browser` tool searches for web pages, opens them, and finds content within them. To enable it:

```python
from openai_harmony import SystemContent
from gpt_oss.tools.simple_browser import SimpleBrowserTool, ExaBackend

backend = ExaBackend(source="web")  # requires the EXA_API_KEY environment variable
browser_tool = SimpleBrowserTool(backend=backend)
system_message_content = SystemContent.new().with_tools(browser_tool.tool_config)
```

Set the `EXA_API_KEY` environment variable before use.

- Python code execution: Run computational tasks, for example:

```python
from openai_harmony import SystemContent
from gpt_oss.tools.python_docker.docker_tool import PythonTool

python_tool = PythonTool()
system_message_content = SystemContent.new().with_tools(python_tool.tool_config)
```

Note: The Python tool runs code in a Docker container, so handle prompt-injection risks with care.

- File operations: The `apply_patch` tool creates, updates, or deletes files.


- Structured output: Support for the Responses API format keeps output consistent, which suits agentic workflows.
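The sketch below makes the channel structure concrete at the string level. It renders a prompt that sets the reasoning effort, then parses a completion into messages, extracting the `final` answer and any tool-call recipient. It is an illustration only, built on the special tokens `<|start|>`, `<|channel|>`, `<|message|>`, `<|end|>`, `<|return|>`, and `<|call|>`. The function names are hypothetical, and the system message omits fields the real renderer adds, such as dates. Production code should use `openai_harmony` (for example, `encoding.parse_messages_from_completion_tokens`) rather than regular expressions.

```python
import re
from typing import Optional

# Simplified, string-level sketch of the Harmony format (not the openai_harmony library).
VALID_EFFORTS = {"low", "medium", "high"}

# One message: <|start|>{header}<|message|>{content} followed by an end-of-message token.
MESSAGE_RE = re.compile(
    r"<\|start\|>(?P<header>.*?)<\|message\|>(?P<content>.*?)(?:<\|end\|>|<\|return\|>|<\|call\|>)",
    re.DOTALL,
)


def render_prompt(user: str, reasoning_effort: str = "medium",
                  identity: str = "You are a helpful assistant.") -> str:
    """Render a system + user turn and open the assistant turn for completion."""
    if reasoning_effort not in VALID_EFFORTS:
        raise ValueError(f"reasoning_effort must be one of {sorted(VALID_EFFORTS)}")
    system = (
        f"{identity}\n\nReasoning: {reasoning_effort}\n\n"
        "# Valid channels: analysis, commentary, final. "
        "Channel must be included for every message."
    )
    return (
        f"<|start|>system<|message|>{system}<|end|>"
        f"<|start|>user<|message|>{user}<|end|>"
        "<|start|>assistant"
    )


def parse_completion(completion: str) -> list[dict]:
    """Split the text generated after '<|start|>assistant' into messages."""
    text = "<|start|>assistant" + completion
    messages = []
    for match in MESSAGE_RE.finditer(text):
        header = match.group("header")
        role, _, after_channel = header.partition("<|channel|>")
        recipient = re.search(r"to=([^\s<]+)", header)  # tool calls name their target
        messages.append({
            "role": role.split()[0],
            "channel": after_channel.split()[0] if after_channel else None,
            "recipient": recipient.group(1) if recipient else None,
            "content": match.group("content"),
        })
    return messages


def final_answer(messages: list[dict]) -> Optional[str]:
    """Return the last 'final' channel message, the only part meant for end users."""
    finals = [m["content"] for m in messages if m["channel"] == "final"]
    return finals[-1] if finals else None
```

Consider a tool call such as `<|channel|>commentary to=functions.get_weather <|constrain|>json<|message|>{...}<|call|>`, where `get_weather` is a hypothetical function tool. For this input, `parse_completion` reports the recipient, so the calling code can run the tool and return its result to the model.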

Caveats

- Hardware requirements: gpt-oss-120b requires an 80GB GPU (e.g., Nvidia H100); gpt-oss-20b requires 16GB of memory. Apple Silicon requires weights in the Metal format.

- Context length: Contexts of up to 128k tokens are supported; adjust the `max_context_length` parameter as needed.

- Safe use: Do not show chain-of-thought content directly to users, to avoid leaking harmful information.

- Sampling parameters: `temperature=1.0` and `top_p=1.0` are recommended for best output.
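The following generic sketch shows what the recommended `temperature=1.0` and `top_p=1.0` mean, using temperature plus nucleus (top-p) sampling over a toy list of logits. It is a textbook illustration with hypothetical function names, not the sampler used by any particular runtime. At temperature 1.0 the logits are not rescaled, and at top_p 1.0 no tokens are cut from the distribution, so the model's own probabilities are used as-is.

```python
import math
import random
from typing import Optional, Sequence


def softmax(logits: Sequence[float], temperature: float = 1.0) -> list[float]:
    """Convert logits to probabilities; temperature < 1 sharpens, > 1 flattens."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def top_p_filter(probs: Sequence[float], top_p: float = 1.0) -> dict[int, float]:
    """Keep the smallest set of most likely tokens whose mass reaches top_p, renormalized."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in order:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    mass = sum(probs[i] for i in kept)
    return {i: probs[i] / mass for i in kept}


def sample(logits: Sequence[float], temperature: float = 1.0, top_p: float = 1.0,
           rng: Optional[random.Random] = None) -> int:
    """Draw one token index after applying temperature and top-p filtering."""
    rng = rng or random.Random()
    dist = top_p_filter(softmax(logits, temperature), top_p)
    tokens = list(dist)
    return rng.choices(tokens, weights=[dist[t] for t in tokens], k=1)[0]
```

Lowering either value (for example, `temperature=0.7` or `top_p=0.9`) would concentrate sampling on the most likely tokens. The recommended settings instead leave the distribution untouched.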

Application Scenarios

- Enterprise on-premises deployment

Organizations can run GPT-OSS on local servers to handle sensitive data, which suits customer service, internal knowledge bases, and compliance-critical scenarios.

- Developer customization

Under the Apache 2.0 license, developers can fine-tune the models for specific tasks such as legal document analysis or code generation.

- Academic research

Researchers can use the models to experiment with AI algorithms, analyze reasoning behavior, or develop safety monitoring systems.

- Consumer device applications

gpt-oss-20b fits on laptops and edge devices, making it suitable for personal assistants or offline writing tools.

Q&A

- What hardware does GPT-OSS support?

gpt-oss-120b requires an 80GB GPU (e.g., Nvidia H100). gpt-oss-20b runs on devices with 16GB of memory, such as high-end laptops or Apple Silicon Macs.

- How is model safety ensured?

The models are trained with deliberative alignment and an instruction hierarchy to resist prompt injection. OpenAI is also hosting a $500,000 Red Teaming Challenge to encourage the community to find safety vulnerabilities.

- Is multimodal input supported?

No. Only text input and output are supported; images and other modalities are not.

- How do I fine-tune the model?

Load the model weights with Transformers or another framework, then run full-parameter fine-tuning on a custom dataset.

- What does the Harmony format do?

The Harmony format gives the output a consistent structure, which makes it easier to debug and trust. It must be used, or the model will not function properly.
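Before full-parameter fine-tuning, a custom dataset usually has to be converted into chat-style records. The sketch below writes JSONL with one `messages` list of role/content pairs per example. Transformers chat templates accept this shape and, for these models, render it in the Harmony format. The file layout and the helper names `to_chat_record` and `write_jsonl` are assumptions, not a format prescribed by GPT-OSS.

```python
import io
import json
from typing import Iterable, Optional, TextIO


def to_chat_record(question: str, answer: str, system: Optional[str] = None) -> dict:
    """Wrap one (question, answer) pair as a list of role/content messages."""
    messages = []
    if system is not None:
        messages.append({"role": "system", "content": system})
    messages.append({"role": "user", "content": question})
    messages.append({"role": "assistant", "content": answer})
    return {"messages": messages}


def write_jsonl(records: Iterable[dict], out: TextIO) -> int:
    """Write one JSON object per line; return the number of records written."""
    count = 0
    for record in records:
        # ensure_ascii=False keeps non-English text readable in the file
        out.write(json.dumps(record, ensure_ascii=False) + "\n")
        count += 1
    return count


if __name__ == "__main__":
    # Placeholder pairs for illustration; replace with your own dataset.
    pairs = [("What is 2 + 2?", "4")]
    buffer = io.StringIO()
    written = write_jsonl((to_chat_record(q, a) for q, a in pairs), buffer)
    print(written, "record(s)")
    print(buffer.getvalue(), end="")
```

Writing to any text stream (a `StringIO` here, or an open file in practice) keeps the helper easy to test before pointing it at a real dataset.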