GPT-Load is an open-source, high-performance AI proxy that provides a unified interface and load balancing for a range of large-model services. It simplifies access to models such as OpenAI, Gemini, and Claude through an intelligent key polling mechanism, and its web interface lets users deploy quickly and manage configuration in real time. The project supports containerized deployment with Docker. It uses SQLite by default, which suits lightweight single-node setups, and also supports MySQL, PostgreSQL, and Redis for clustered deployments. GPT-Load is developed by tbphp and hosted on GitHub, and it is suitable for both enterprises and individual developers.

Feature List

- Intelligent Key Polling: Automatically manage multiple API keys, dynamically distribute requests, and improve interface invocation efficiency.

- Multi-model support: Works with major models such as OpenAI, Gemini, and Claude, providing a unified API entry point.

- Load balancing: Optimizes request allocation to ensure stability in high-concurrency scenarios.

- Web Management Interface: Supports real-time configuration changes that take effect without restarting the service.

- Database flexibility: Default SQLite, with support for MySQL, PostgreSQL and Redis.

- Docker Deployment: Provides containerized solutions to simplify installation and expansion.

- Cluster Support: Enables multi-node collaboration through shared databases and Redis.

- API Proxy Forwarding: Supports the API request formats of multiple models to simplify development.

Usage Guide

Installation process

GPT-Load supports both Docker and source build installation. Here are the detailed steps:

Method 1: Docker Deployment (recommended)

- Install Docker and Docker Compose

Ensure that Docker and Docker Compose are installed on your system. You can check with the following commands:

    docker --version
    docker compose version

- Create a project directory

Create a local directory and enter it:

    mkdir -p gpt-load && cd gpt-load

- Download the configuration files

Get the default configuration files from the GitHub repository:

    wget https://raw.githubusercontent.com/tbphp/gpt-load/main/docker-compose.yml
    wget -O .env https://raw.githubusercontent.com/tbphp/gpt-load/main/.env.example

- Configure environment variables

Edit the .env file to set the authentication key and other parameters. Example:

    AUTH_KEY=sk-123456

The default authentication key is sk-123456, which can be modified as needed.

- Start the service

Start the service using Docker Compose:

    docker compose up -d

The service will be running at http://localhost:3001.

- Access the management interface

Open your browser, visit http://localhost:3001, and log in with the AUTH_KEY set in .env.

- Manage the service

Check the status of the service:

    docker compose ps

View the logs:

    docker compose logs -f

Restart the service:

    docker compose down && docker compose up -d

Update to the latest version:

    docker compose pull && docker compose down && docker compose up -d
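After `docker compose up -d` returns, the container may still need a moment before it accepts connections. The following is a minimal sketch of a readiness check, assuming only that the service listens on the port mentioned above. The function name `wait_for_port` is hypothetical, and the check is a plain TCP connect, not a GPT-Load health endpoint.

```python
import socket
import time


def wait_for_port(host: str, port: int, timeout: float = 30.0, interval: float = 0.5) -> bool:
    """Return True once a TCP connection to host:port succeeds, False on timeout."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            # A successful connect only proves that something is listening on the port.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)


# Example usage against the default port from this guide:
# if not wait_for_port("localhost", 3001):
#     print("Timed out waiting for port 3001; check `docker compose logs -f`")
```

A TCP check cannot tell whether the web interface itself is healthy, so it is best treated as a first signal before opening the browser.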


Method 2: Source code build

- Clone the repository

Clone the project code from GitHub:

    git clone https://github.com/tbphp/gpt-load.git
    cd gpt-load

- Install the Go environment

Make sure Go 1.18 or later is installed on your system. Check the version:

    go version

- Install dependencies

Pull the project dependencies:

    go mod tidy

- Configure environment variables

Copy the sample configuration file and edit it:

    cp .env.example .env

In .env, set DATABASE_DSN (the database connection string) and REDIS_DSN (the Redis connection string, optional). Example:

    AUTH_KEY=sk-123456
    DATABASE_DSN=mysql://user:password@host:port/dbname

- Run the service

Start the service:

    make run

By default, the service runs at http://localhost:3001.
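Before starting the service, it can help to confirm that the .env values are what you expect. The sketch below parses simple KEY=VALUE lines and splits a URL-style DSN into its parts. It assumes the DSN shape shown in the example above; the helper names, host, and database name are hypothetical, and GPT-Load's own configuration loader may accept other syntax.

```python
from urllib.parse import urlsplit


def parse_env(text: str) -> dict[str, str]:
    """Parse simple KEY=VALUE lines, skipping blank lines and # comments."""
    env = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        env[key.strip()] = value.strip()
    return env


def describe_dsn(dsn: str) -> dict[str, object]:
    """Split a URL-style DSN into its parts (the password is deliberately omitted)."""
    parts = urlsplit(dsn)
    return {
        "scheme": parts.scheme,
        "user": parts.username,
        "host": parts.hostname,
        "port": parts.port,
        "database": parts.path.lstrip("/"),
    }


# Hypothetical values for illustration only.
sample = """
# local test settings
AUTH_KEY=sk-123456
DATABASE_DSN=mysql://user:password@db.example.internal:3306/gptload
"""
env = parse_env(sample)
info = describe_dsn(env["DATABASE_DSN"])
```

Printing `info` rather than the raw DSN keeps the password out of terminal output and logs.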

Database Configuration

- SQLite: Default database, suitable for standalone lightweight applications without additional configuration.

- MySQL/PostgreSQL: Edit docker-compose.yml, uncomment the relevant services, configure the environment variables, and restart the service.

- Redis: Must be configured for cluster deployments, where it is used for caching and node synchronization.

Main Functions

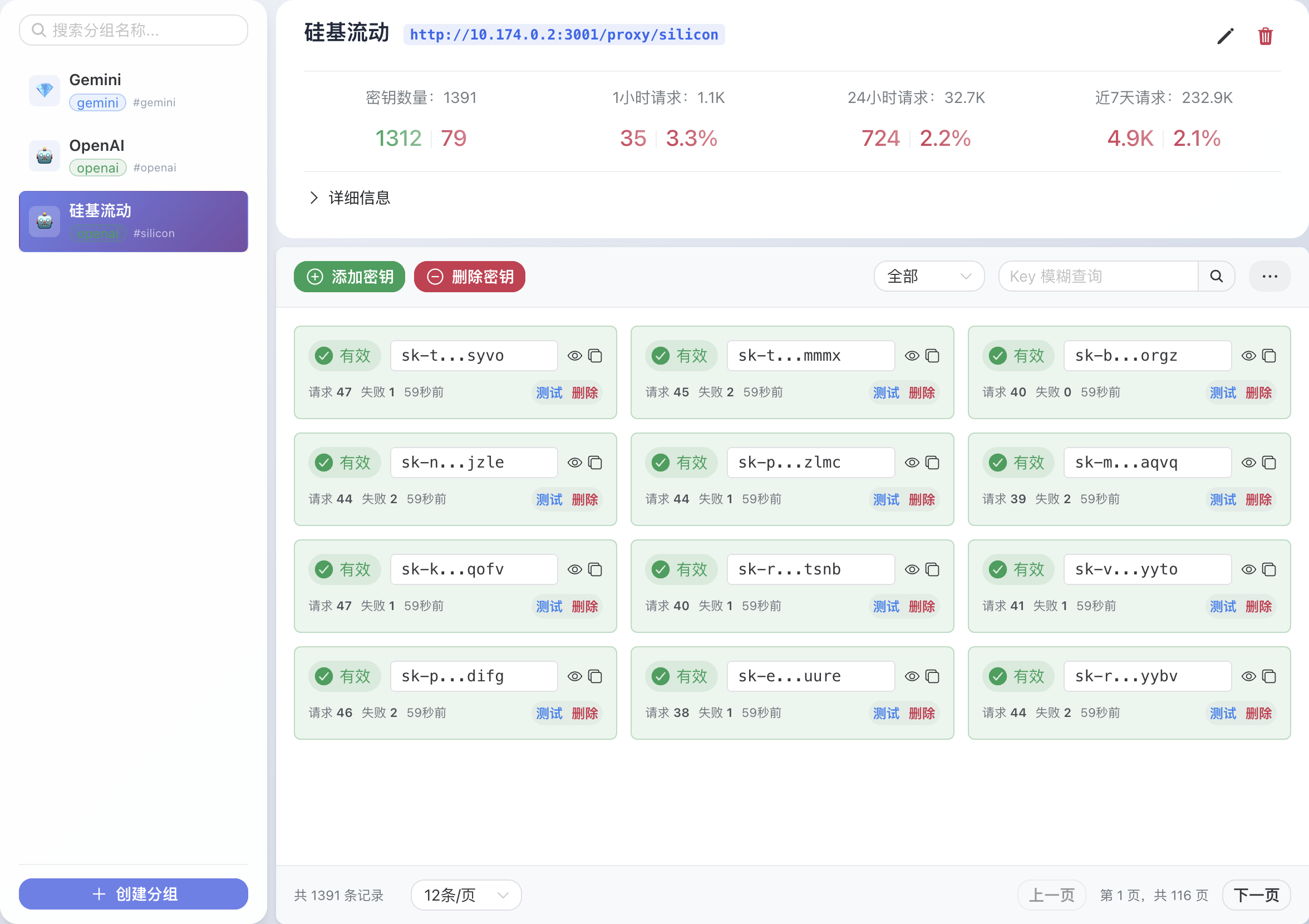

1. Key management

- After logging in to the web interface, go to the "Key Management" page.

- Add multiple API keys (e.g. for OpenAI, Gemini).

- The system automatically rotates through the available keys, giving priority to keys that have not exceeded their limits.
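To make the idea of key polling concrete, here is a minimal sketch of round-robin rotation that skips keys recently reported as exhausted. It illustrates the general technique only and is not GPT-Load's actual implementation; the class name `KeyRotator`, the cooldown value, and the method names are all assumptions.

```python
import itertools
import time


class KeyRotator:
    """Round-robin over API keys, skipping keys that are cooling down."""

    def __init__(self, keys, cooldown_seconds=60.0, clock=time.monotonic):
        if not keys:
            raise ValueError("at least one key is required")
        self._keys = list(keys)
        self._cycle = itertools.cycle(self._keys)
        self._blocked_until = {}  # key -> time at which it may be used again
        self._cooldown = cooldown_seconds
        self._clock = clock

    def next_key(self):
        """Return the next usable key, or raise if every key is cooling down."""
        now = self._clock()
        # Look at each key at most once per call.
        for _ in range(len(self._keys)):
            key = next(self._cycle)
            if self._blocked_until.get(key, 0.0) <= now:
                return key
        raise RuntimeError("no usable keys right now")

    def mark_exhausted(self, key):
        """Call this when the upstream reports a quota or rate-limit error."""
        self._blocked_until[key] = self._clock() + self._cooldown
```

Passing the clock in as a parameter keeps the cooldown logic testable without sleeping; a caller would request `next_key()` before each upstream call and invoke `mark_exhausted(key)` on a quota error.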

2. API proxy requests

- GPT-Load provides a unified proxy interface. For example, to call OpenAI's chat interface:

    curl -X POST http://localhost:3001/proxy/openai/v1/chat/completions \
      -H "Authorization: Bearer sk-123456" \
      -H "Content-Type: application/json" \
      -d '{"model": "gpt-4.1-mini", "messages": [{"role": "user", "content": "Hello"}]}'

- Proxy requests for other models, such as Gemini and Claude, use a similar format.
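The same proxy call can be made from application code. The sketch below builds an equivalent request with Python's standard library, reusing the URL path, headers, and JSON body from the curl example. The function name `build_chat_request` is hypothetical, and the request is only constructed here, not sent.

```python
import json
import urllib.request


def build_chat_request(base_url, auth_key, model, prompt):
    """Build (but do not send) a POST request mirroring the curl example."""
    url = f"{base_url.rstrip('/')}/proxy/openai/v1/chat/completions"
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {auth_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


req = build_chat_request("http://localhost:3001", "sk-123456", "gpt-4.1-mini", "Hello")

# To send it against a running instance:
# with urllib.request.urlopen(req, timeout=30) as resp:
#     print(json.load(resp))
```

Keeping request construction separate from sending makes it easy to inspect the URL and headers before pointing the code at a live deployment.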

3. Real-time configuration

- Modify the model parameters, key priority, or load balancing policy in the web interface.

- The configuration takes effect immediately after it is saved, and there is no need to restart the service.

4. Cluster deployment

- Ensure that all nodes are connected to the same MySQL/PostgreSQL and Redis.

- Configure the same database and Redis connections in .env on every node.

- Deploy multiple nodes using Docker Compose or Kubernetes.
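Since every node must point at the same database and Redis, a quick consistency check before rollout can catch a mismatched .env. This is a sketch under the assumption that each node's settings are already loaded into a dictionary; the function name and node names are hypothetical, and only DATABASE_DSN and REDIS_DSN (the variables named in this guide) are compared.

```python
SHARED_SETTINGS = ("DATABASE_DSN", "REDIS_DSN")


def check_cluster_env(nodes):
    """Return a list of problems found across per-node settings.

    `nodes` maps a node name to that node's .env values as a dict.
    """
    problems = []
    for name in SHARED_SETTINGS:
        values = {node: env.get(name, "") for node, env in nodes.items()}
        missing = sorted(node for node, value in values.items() if not value)
        if missing:
            problems.append(f"{name} is not set on: {', '.join(missing)}")
        if len(set(values.values())) > 1:
            problems.append(f"{name} differs between nodes")
    return problems
```

An empty list means the compared settings agree on every node; anything else is worth fixing before the nodes start serving traffic.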

Notes

- Ensure that the firewall has port 3001 open.

- Redis must be configured for cluster deployments; otherwise GPT-Load falls back to in-memory storage, which is not shared between nodes.

- Regularly check key usage to avoid service interruptions caused by exceeded limits.

Application Scenarios

- Enterprise AI Integration

When developing AI applications, organizations often need to call OpenAI, Gemini, and Claude at the same time. GPT-Load provides a unified entry point that simplifies development and reduces maintenance costs.

- Highly Concurrent AI Services

In chatbot or intelligent customer service scenarios, GPT-Load's load balancing and key polling keep highly concurrent requests stable under large-scale user access.

- Individual Developer Experiments

Developers can quickly deploy GPT-Load, test the performance of different large models, manage multiple free or paid keys, and reduce trial and error costs.

FAQ

- What models does GPT-Load support?

It supports OpenAI, Gemini, Claude, and other mainstream large models; specific compatibility depends on the API format.

- How do I switch databases?

Edit docker-compose.yml to uncomment MySQL or PostgreSQL, set DATABASE_DSN in .env, and then restart the service.

- What is required for cluster deployment?

All nodes must use the same MySQL/PostgreSQL and Redis connections; Redis is mandatory.

- How is the web interface secured?

Authentication uses the AUTH_KEY set in .env. Use a strong key and change it regularly.
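For the recommendation to use a strong key, one option is to generate AUTH_KEY from a cryptographically secure random source instead of typing one by hand. This is a minimal sketch: the function name is hypothetical, and the "sk-" prefix only mirrors the example value in this guide; it is an assumption that GPT-Load does not require any particular key format.

```python
import secrets


def generate_auth_key(prefix="sk-", nbytes=32):
    """Return a random URL-safe key; the prefix simply mirrors the example value."""
    return prefix + secrets.token_urlsafe(nbytes)


# Example usage: print a line that can be pasted into .env
# print(f"AUTH_KEY={generate_auth_key()}")
```

Generating a fresh value each time the key is changed avoids reusing or slightly modifying an old one.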