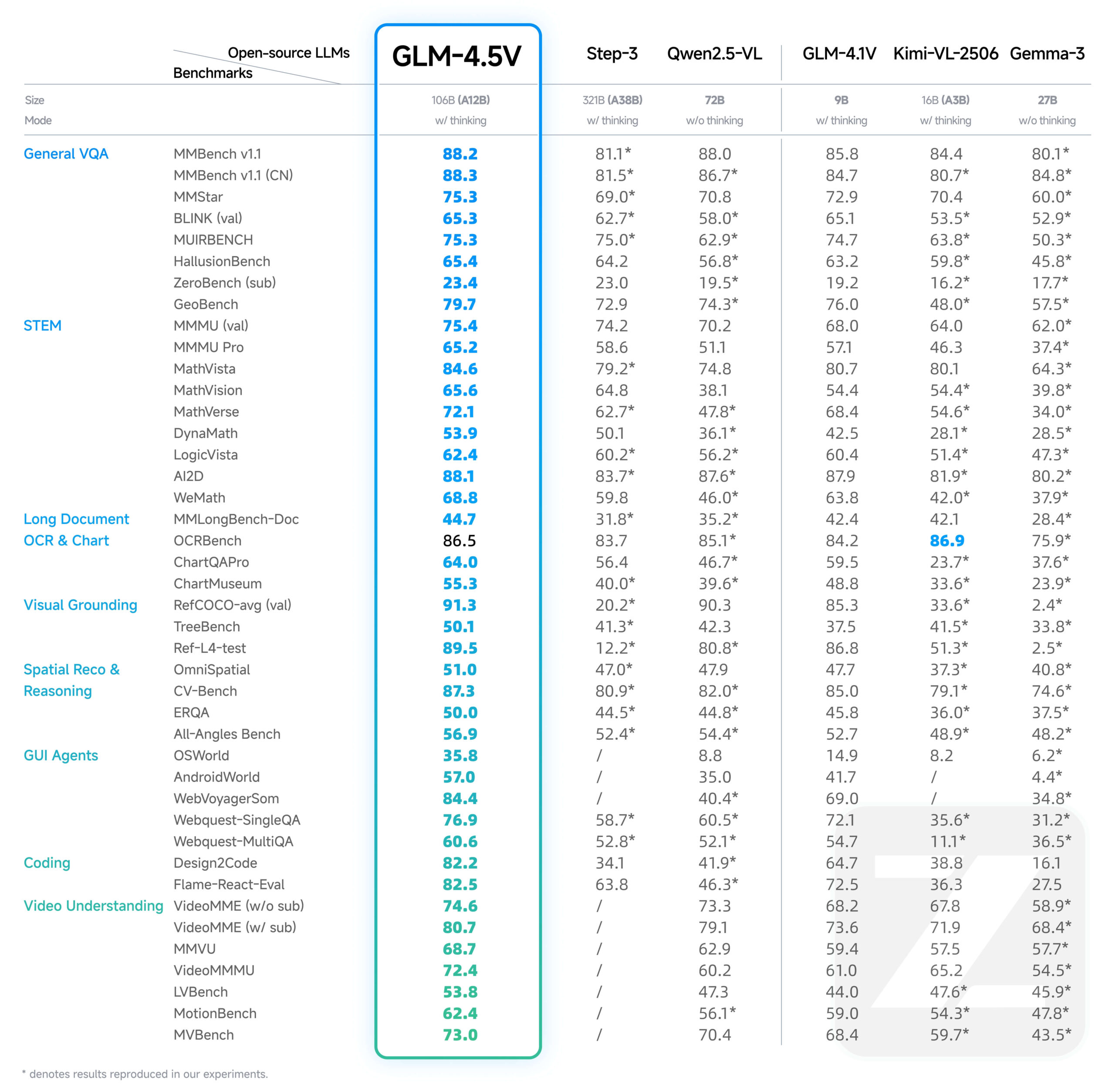

GLM-4.5V is a new-generation vision-language model (VLM) developed by Zhipu AI (Z.ai). It is built on the flagship text model GLM-4.5-Air, which uses a Mixture-of-Experts (MoE) architecture with 106 billion total parameters, of which 12 billion are active. GLM-4.5V processes images and text and also understands video; its core capabilities cover complex image reasoning, long-video comprehension, document parsing, and graphical user interface (GUI) operation, among other tasks. To balance efficiency and quality across scenarios, the model provides a "Thinking Mode" switch that lets users choose between fast responses and deep reasoning. The model supports a maximum output length of 64K tokens and is open-sourced on Hugging Face under the MIT license, which permits commercial use and derivative development.

Feature List

- Web Page Code Generation: Analyze screenshots or screen recordings of web pages to understand their layout and interaction logic, and directly generate fully usable HTML and CSS code.

- Grounding: Accurately locate specific objects in an image or video and return their positions as coordinates (e.g., [x1,y1,x2,y2]), for scenarios such as security, quality control, and content moderation.

- GUI Agent: Recognize and process screenshots, perform actions such as clicking, swiping, and editing content in response to instructions, and support agents that carry out automated tasks.

- Long and Complex Document Interpretation: Deeply analyze complex reports, dozens of pages long, that mix text and graphics, supporting summarization, translation, chart extraction, and insights based on the document content.

- Image Recognition and Reasoning: Strong scene understanding and logical reasoning capabilities, capable of inferring the contextual information behind an image based solely on its content, without relying on external search.

- Video comprehension: Parsing long video content to accurately identify time, people, events, and the logical relationships between them in the video.

- Subject Q&A: Solve complex problems that combine graphics and text; especially suited to solving and explaining exercises in K12 education scenarios.
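The Grounding feature above returns positions as [x1,y1,x2,y2] text, so client code usually has to pull the numbers out of the reply. The following is a minimal sketch of that step, not an official parser. The helper names `parse_boxes` and `to_pixels` are hypothetical, and the 0 to 1000 normalized coordinate grid used by `to_pixels` is an assumption that should be checked against the model documentation before relying on it.

```python
import re
from typing import List, Tuple

Box = Tuple[int, int, int, int]

# Matches four integers in square brackets, e.g. [120, 45, 380, 610]
_BOX_PATTERN = re.compile(r"\[\s*(\d+)\s*,\s*(\d+)\s*,\s*(\d+)\s*,\s*(\d+)\s*\]")


def parse_boxes(reply: str) -> List[Box]:
    """Extract every [x1,y1,x2,y2] box found in a model reply."""
    return [tuple(int(v) for v in m.groups()) for m in _BOX_PATTERN.finditer(reply)]


def to_pixels(box: Box, width: int, height: int, scale: int = 1000) -> Box:
    """Map a box from a 0..scale grid to pixel coordinates.

    ASSUMPTION: the model reports coordinates on a 0..1000 grid.
    Pass a different scale if the model uses another convention.
    """
    x1, y1, x2, y2 = box
    return (
        x1 * width // scale,
        y1 * height // scale,
        x2 * width // scale,
        y2 * height // scale,
    )


# Made-up reply text, used only to exercise the helpers
reply = "The second bottle from the right is at [[512, 300, 600, 720]]."
boxes = parse_boxes(reply)
print(boxes)
print(to_pixels(boxes[0], width=1920, height=1080))
```

The regular expression also matches the inner list of a doubly bracketed reply such as [[xmin,ymin,xmax,ymax]], so the same helper covers both notations.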

Usage Guide

GLM-4.5V provides developers with a variety of ways to access and use it, including rapid integration through the official API and local deployment through Hugging Face.

1. Use through the Zhipu AI open platform API (recommended)

Using the official API is the most convenient way for developers who want to quickly integrate models into their applications. This approach eliminates the need to manage complex hardware resources.

API Prices

- Input: ¥0.6 / million tokens

- Output: ¥1.8 / million tokens
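As a rough illustration of how these rates translate into a per-request cost, the sketch below multiplies token counts by the two listed prices. The function name `estimate_cost` and the sample token counts are hypothetical; actual billing may include rules not covered here.

```python
from decimal import Decimal

# Prices listed above, in RMB per million tokens
INPUT_PRICE_PER_MILLION = Decimal("0.6")
OUTPUT_PRICE_PER_MILLION = Decimal("1.8")
TOKENS_PER_MILLION = 1_000_000


def estimate_cost(input_tokens: int, output_tokens: int) -> Decimal:
    """Return the estimated cost in RMB for one request."""
    input_cost = INPUT_PRICE_PER_MILLION * input_tokens / TOKENS_PER_MILLION
    output_cost = OUTPUT_PRICE_PER_MILLION * output_tokens / TOKENS_PER_MILLION
    return input_cost + output_cost


# Hypothetical request: 20,000 input tokens and 2,000 output tokens
print(estimate_cost(20_000, 2_000))
```

`Decimal` is used instead of `float` so that small currency amounts do not pick up binary rounding noise.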

Invocation steps

- Get an API Key: Go to the Zhipu AI Open Platform, register an account, and create an API Key.

- Install the SDK:

    pip install zhipuai

- Write the calling code: The following example uses the Python SDK to send an image together with a text question.

    from zhipuai import ZhipuAI

    # Initialize the client with your API Key
    client = ZhipuAI(api_key="YOUR_API_KEY")  # Replace with your own API Key

    response = client.chat.completions.create(
        model="glm-4.5v",  # Use the GLM-4.5V model
        messages=[
            {
                "role": "user",
                "content": [
                    {
                        "type": "text",
                        "text": "What is in this picture? Please describe it in detail."
                    },
                    {
                        "type": "image_url",
                        "image_url": {
                            "url": "https://img.alicdn.com/imgextra/i3/O1CN01b22S451o81U5g251b_!!6000000005177-0-tps-1024-1024.jpg"
                        }
                    }
                ]
            }
        ]
    )

    print(response.choices[0].message.content)
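The `messages` structure in the call above is plain nested data, so it can be assembled by a small helper when an application sends many requests. This is a sketch only: `build_user_message` is a hypothetical name, the example URL is a placeholder, and passing more than one image in a single message is an assumption that the source example does not demonstrate.

```python
from typing import Any, Dict, List


def build_user_message(text: str, image_urls: List[str]) -> Dict[str, Any]:
    """Build one user message with a text part followed by image parts."""
    content: List[Dict[str, Any]] = [{"type": "text", "text": text}]
    for url in image_urls:
        # Same shape as the image_url entry in the SDK example
        content.append({"type": "image_url", "image_url": {"url": url}})
    return {"role": "user", "content": content}


# Placeholder URL for illustration
messages = [
    build_user_message(
        "What is in this picture? Please describe it in detail.",
        ["https://example.com/picture.jpg"],
    )
]
print(messages)
```

The resulting list can be passed as the `messages` argument of `client.chat.completions.create`.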

Enabling "Thinking Mode"

For complex tasks that require deeper reasoning (e.g., analyzing complex charts), add the thinking parameter to the request to enable "Thinking Mode".

The following official cURL example calls the API with Thinking Mode enabled:

    curl --location 'https://api.z.ai/api/paas/v4/chat/completions' \
    --header 'Authorization: Bearer YOUR_API_KEY' \
    --header 'Content-Type: application/json' \
    --data '{
        "model": "glm-4.5v",
        "messages": [
            {
                "role": "user",
                "content": [
                    {
                        "type": "image_url",
                        "image_url": {
                            "url": "https://cloudcovert-1305175928.cos.ap-guangzhou.myqcloud.com/%E5%9B%BE%E7%89%87grounding.PNG"
                        }
                    },
                    {
                        "type": "text",
                        "text": "Where is the second beer bottle from the right on the table? Please provide the coordinates in the format [[xmin,ymin,xmax,ymax]]."
                    }
                ]
            }
        ],
        "thinking": {
            "type": "enabled"
        }
    }'
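For readers who prefer Python over cURL, the same request can be sketched with only the standard library. The endpoint, headers, and payload shape are copied from the cURL example; the function names `build_request` and `send` are hypothetical, and the "disabled" value used when thinking is turned off is an assumption that should be confirmed in the API reference.

```python
import json
import urllib.request

# Endpoint taken from the cURL example
API_URL = "https://api.z.ai/api/paas/v4/chat/completions"


def build_request(api_key: str, image_url: str, question: str,
                  thinking: bool = True) -> urllib.request.Request:
    """Build (but do not send) a chat completion request for GLM-4.5V."""
    payload = {
        "model": "glm-4.5v",
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "image_url", "image_url": {"url": image_url}},
                    {"type": "text", "text": question},
                ],
            }
        ],
        # "enabled" comes from the cURL example;
        # "disabled" is an ASSUMED counterpart value
        "thinking": {"type": "enabled" if thinking else "disabled"},
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request: urllib.request.Request) -> dict:
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(request) as response:
        return json.load(response)


# Building the request needs no network access; call send(req) to execute it
req = build_request("YOUR_API_KEY", "https://example.com/table.png",
                    "Where is the second beer bottle from the right?")
print(req.full_url, req.get_method())
```

Separating request construction from sending makes the payload easy to inspect or log before any tokens are billed.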

2. Local deployment through Hugging Face Transformers

For researchers and developers who require secondary development or offline use, models can be downloaded from the Hugging Face Hub and deployed in their own environment.

- Environment requirements: Local deployment needs powerful hardware, typically high-performance NVIDIA GPUs with large memory (e.g., A100/H100).

- Install dependencies:

    pip install transformers torch accelerate Pillow

- Load and run the model:

    import torch
    from PIL import Image
    # Assumes a recent transformers release that includes GLM-4.5V support
    from transformers import AutoProcessor, Glm4vMoeForConditionalGeneration

    # Model path on Hugging Face
    model_path = "zai-org/GLM-4.5V"
    processor = AutoProcessor.from_pretrained(model_path)

    # Load the model; device_map="auto" spreads the weights across available GPUs
    model = Glm4vMoeForConditionalGeneration.from_pretrained(
        model_path,
        torch_dtype=torch.bfloat16,
        device_map="auto",
    ).eval()

    # Prepare the image and the text prompt
    image = Image.open("path_to_your_image.jpg").convert("RGB")
    prompt_text = "Using HTML and CSS, generate a high-quality UI based on this web page screenshot."
    prompt = [
        {
            "role": "user",
            "content": [
                {"type": "image", "image": image},
                {"type": "text", "text": prompt_text},
            ],
        }
    ]

    # Process the input and generate a reply
    inputs = processor.apply_chat_template(
        prompt,
        add_generation_prompt=True,
        tokenize=True,
        return_tensors="pt",
        return_dict=True,
    ).to(model.device)
    inputs.pop("token_type_ids", None)  # not accepted by generate()

    gen_kwargs = {"max_new_tokens": 4096, "do_sample": True, "top_p": 0.8, "temperature": 0.6}
    with torch.no_grad():
        outputs = model.generate(**inputs, **gen_kwargs)

    # Keep only the newly generated tokens, then decode them
    response_ids = outputs[:, inputs["input_ids"].shape[1]:]
    response_text = processor.decode(response_ids[0])
    print(response_text)
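To make the hardware requirement concrete, the following back-of-the-envelope sketch estimates the memory needed just to hold the weights. It uses the 106 billion total parameter count stated earlier and 2 bytes per parameter for bfloat16. The 80 GB per-GPU figure is an assumption (the larger A100/H100 variants), and the estimate ignores activations, the KV cache, and framework overhead, so real requirements are higher.

```python
import math


def weight_memory_gb(total_params: int, bytes_per_param: int = 2) -> float:
    """Memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return total_params * bytes_per_param / 1e9


def min_gpus(memory_gb: float, gpu_memory_gb: float = 80.0) -> int:
    """Smallest number of GPUs whose combined memory fits memory_gb."""
    return math.ceil(memory_gb / gpu_memory_gb)


# 106 billion parameters in bfloat16 (2 bytes each)
weights_gb = weight_memory_gb(106_000_000_000)
print(f"weights: {weights_gb:.0f} GB, GPUs needed (80 GB each): {min_gpus(weights_gb)}")
```

Although only 12 billion parameters are active at a time, all experts of an MoE model must be resident in memory, which is why the total parameter count drives this estimate.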

Application Scenarios

- Front-end development automation

Developers can provide a screenshot of a designed web page; GLM-4.5V analyzes the UI layout, color scheme, and component styles in the screenshot and generates the corresponding HTML and CSS code, which speeds up the path from design draft to static page.

- Intelligent security and surveillance

In security monitoring scenarios, the model can analyze real-time video streams and mark the location of a target in the frame according to instructions (e.g., "Please locate all the people wearing red in the picture"), which can be used for people tracking, abnormal behavior detection, and similar tasks.

- Office automation agent

In office software, users can complete complex operations by giving the model natural-language instructions. For example, the user can say "Please change the data in the first row of the table on the fourth page of the PPT to '89', '21', '900'", and the model will recognize the screen content and simulate mouse and keyboard operations to make the change.

- Research and financial document analysis

A researcher or analyst can upload a research or financial report of dozens of pages in PDF format and ask the model to "summarize the core ideas of this report and convert the key data in Chapter 3 into a Markdown table". The model reads the document in depth, extracts the information, and generates structured summaries and charts.

- K12 education tutoring

Students can take a picture of a math or physics word problem (containing diagrams and text) and ask the model about it. The model gives the answer and, like a teacher, explains step by step the ideas and formulas used, providing a detailed solution process.

Q&A

- What is the relationship between GLM-4.5V and GLM-4.1V?

GLM-4.5V continues and iterates on the GLM-4.1V-Thinking technology line. It is built on the more powerful text-only flagship model GLM-4.5-Air and, while inheriting its predecessor's capabilities, improves performance across visual multimodal tasks.

- What is the cost of using the GLM-4.5V API?

Through the official Zhipu AI API, billing is RMB 0.6 per million input tokens and RMB 1.8 per million output tokens.

- What exactly does "Thinking Mode" do?

Thinking Mode is designed for complex tasks that require deep reasoning. When enabled, the model spends more time reasoning and integrating information to produce higher-quality, more accurate answers, at the cost of longer response times. It suits scenarios such as analyzing complex charts, writing code, or interpreting long documents. For simple questions and answers, the faster non-thinking mode can be used.