FireRedTTS-2 is a text-to-speech (TTS) system designed for generating long conversations with multiple speakers. It uses streaming technology to achieve low-latency speech output, making the dialog generation process both fast and natural. The core advantage of the system is its ability to handle conversations of up to several minutes in length and to support smooth switching between multiple virtual speakers while maintaining contextual rhythm and emotional coherence. fireRedTTS-2 supports multiple languages including Chinese, English, and Japanese, and is equipped with zero-shot sound cloning, which allows users to easily clone the sounds of any language, even between languages. The system supports multiple languages, including Chinese, English, and Japanese, and has a zero-shot sound cloning feature that allows users to easily clone the sound of any language and even switch between languages. In addition, the system also provides a random tone generation function, which can be used to generate a large number of diverse speech data. For the convenience of users, the project provides a simple Web UI, which allows users who are not familiar with programming to get started quickly.

Function List

- Long dialog generation: Support for dialog generation up to 3 minutes long and containing 4 different speakers, with the ability to extend the training data to support longer dialogs and more speakers.

- Multi-language support: Support for speech synthesis in English, Chinese, Japanese, Korean, French, German and Russian.

- Zero sample sound cloning: Clone specified sounds without training, supporting sound cloning across languages and mixed language scenarios.

- ultra-low latency: Enables inter-sentence streaming generation with first response latency as low as 140 milliseconds, based on a new 12.5Hz streaming speech disambiguator and dual Transformer architecture.

- high stability: Demonstrated high voice similarity and low error rates in both single monologue and multi-person dialog tests.

- Random Tone Generation: Can be used to rapidly generate diverse speech data suitable for training speech recognition models (ASR) or speech interaction systems.

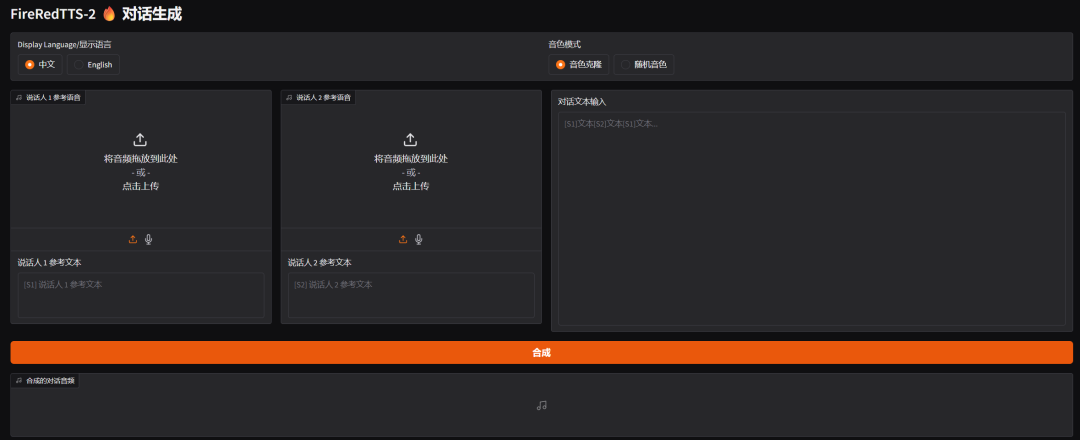

- Provide a web user interface: An easy-to-use web interface is built-in and supports both sound cloning and random tone modes, simplifying the operation process.

Using Help

The process of installing and using FireRedTTS-2 is relatively straightforward, and is divided into three main steps: environment configuration, model download, and execution of generation tasks. The following is a detailed operation guide.

1. Environmental installation

First you need to clone the project's codebase and create a separate Conda environment to manage the project's required dependencies to avoid conflicts with other Python projects on your system.

Step 1: Clone the code base

Open Terminal, go to the folder where you wish to store your project, and execute the following git command:

git clone https://github.com/FireRedTeam/FireRedTTS2.git

cd FireRedTTS2

Step 2: Create and activate the Conda environment

Python version 3.11 is recommended. Execute the following command to create a new Conda environment:

conda create --name fireredtts2 python=3.11

conda activate fireredtts2

Step 3: Install PyTorch

The project relies on a specific version of PyTorch and needs to match your version of CUDA. If your device supports CUDA 12.6, you can install it directly using the following command:

pip install torch==2.7.1 torchvision==0.22.1 torchaudio==2.7.1 --index-url https://download.pytorch.org/whl/cu126

If you have a different version of CUDA, go to the PyTorch website to find the corresponding installation command.

Step 4: Install other dependencies

Finally, install the other Python libraries defined by the project:

pip install -e .

pip install -r requirements.txt```

### 2. 模型下载

FireRedTTS-2 的预训练模型存放在 Hugging Face 上,需要使用 `git lfs` 工具来下载。

首先确保你已经安装了 `git lfs`,然后执行以下命令:

```bash

git lfs install

git clone https://huggingface.co/FireRedTeam/FireRedTTS2 pretrained_models/FireRedTTS2

This command downloads the model files to the project root directory in the pretrained_models/FireRedTTS2 Inside the folder.

3. Functional operations

FireRedTTS-2 offers two main ways to use it: through the web user interface (Web UI) or by writing Python scripts.

Option 1: Using the Web UI

It's the easiest and most intuitive way for quick experiences and generating conversations.

In the terminal, make sure you are in the fireredtts2 Conda environment and located in the project root directory, then execute the following command:

python gradio_demo.py --pretrained-dir "./pretrained_models/FireRedTTS2"

After the program runs successfully, the terminal displays a local URL (usually the http://127.0.0.1:7860). Open this URL in your browser to see the interface. In the interface, you can enter the text of different speakers, upload audio samples for voice cloning, or choose to randomly generate voices and click the Generate button to get the synthesized audio of the conversation.

Way 2: Using Python Scripts

This approach is more flexible and suitable for integration into other projects.

1. Generating multi-person dialogues

Below is a sample code for generating a two-person dialog. You will need to prepare the text for each speaker, as well as an audio file for sound cloning and the corresponding text.

import torch

import torchaudio

from fireredtts2.fireredtts2 import FireRedTTS2

# 设置运行设备

device = "cuda"

# 初始化模型

fireredtts2 = FireRedTTS2(

pretrained_dir="./pretrained_models/FireRedTTS2",

gen_type="dialogue",

device=device,

)

# 定义对话文本列表,[S1] 和 [S2] 代表不同的说话人

text_list = [

"[S1]那可能说对对,没有去过美国来说去去看到美国线下。巴斯曼也好,沃尔玛也好,他们线下不管说,因为深圳出去的还是电子周边的会表达,会发现哇对这个价格真的是很高呀。",

"[S2]对,没错,我每次都觉得不不可思议。我什么人会买三五十美金的手机壳?但是其实在在那个target啊,就塔吉特这种超级市场,大家都是这样的,定价也很多人买。",

"[S1]对对,那这样我们再去看说亚马逊上面卖卖卖手机壳也好啊,贴膜也好,还包括说车窗也好,各种线材也好,大概就是七块九九或者说啊八块九九,这个价格才是卖的最多的啊。",

"[S2]那比如说呃除了这个可能去到海外这个调查,然后这个调研考察那肯定是最直接的了。那平时我知道你是刚才建立了一个这个叫做呃rean的这样的一个一个播客,它是一个英文的。",

]

# 提供用于克隆声音的音频文件路径

prompt_wav_list = [

"examples/chat_prompt/zh/S1.flac",

"examples/chat_prompt/zh/S2.flac",

]

# 提供克隆音频对应的文本

prompt_text_list = [

"[S1]啊,可能说更适合美国市场应该是什么样子。那这这个可能说当然如果说有有机会能亲身的去考察去了解一下,那当然是有更好的帮助。",

"[S2]比如具体一点的,他觉得最大的一个跟他预想的不一样的是在什么地方。",

]

# 生成音频

all_audio = fireredtts2.generate_dialogue(

text_list=text_list,

prompt_wav_list=prompt_wav_list,

prompt_text_list=prompt_text_list,

temperature=0.9,

topk=30,

)

# 保存生成的音频文件

torchaudio.save("chat_clone.wav", all_audio, 24000)

2. Generation of monologues

You can also use it to generate single sentence or single paragraph monologues with support for random timbre or voice cloning.

import torch

import torchaudio

from fireredtts2.fireredtts2 import FireRedTTS2

device = "cuda"

# 待合成的文本列表(支持多语言)

lines = [

"Hello everyone, welcome to our newly launched FireRedTTS2.",

"如果你厌倦了千篇一律的AI音色,那么本项目将会成为你绝佳的工具。",

"ランダムな話者と言語を選択して合成できます",

]

# 初始化模型

fireredtts2 = FireRedTTS2(

pretrained_dir="./pretrained_models/FireRedTTS2",

gen_type="monologue",

device=device,

)

# 使用随机音色生成

for i, text in enumerate(lines):

audio = fireredtts2.generate_monologue(text=text.strip())

torchaudio.save(f"random_speaker_{i}.wav", audio.cpu(), 24000)

# 使用声音克隆生成(需要提供 prompt_wav 和 prompt_text)

# for i, text in enumerate(lines):

# audio = fireredtts2.generate_monologue(

# text=text.strip(),

# prompt_wav="<path_to_your_wav>",

# prompt_text="<text_of_your_wav>",

# )

# torchaudio.save(f"cloned_voice_{i}.wav", audio.cpu(), 24000)

application scenario

- Podcast and audiobook production

Conversational podcast shows or audiobooks containing multiple characters can be quickly generated, and with the voice cloning feature, it is also possible to mimic the voice of a specific character for creative purposes. - Intelligent customer service and virtual assistants

Giving chatbots or virtual assistants a more natural and humanized voice provides a real-time voice interaction experience through low-latency streaming generation. - Game and animation dubbing

Quickly generate voiceovers for large numbers of characters in game development or animation, or create temporary placeholder tracks for developers and designers to debug. - voice data enhancement

Using the random tone generation function, diverse speech data can be manufactured on a large scale for training and improving automatic speech recognition (ASR) models.

QA

- What languages does FireRedTTS-2 support?

English, Chinese, Japanese, Korean, French, German and Russian are currently supported. - Can I use my own voice to generate audio?

Can. The system supports zero-sample voice cloning, you only need to provide a small sample of your voice (audio file) and the corresponding text, and then you can clone your timbre to generate a new voice. - Is the latency of generating speech high?

Designed for low-latency scenarios, the FireRedTTS-2 has a first response latency as low as 140 milliseconds, making it ideal for applications that require real-time voice feedback. - Is the program commercially available for free?

The project is based on the Apache-2.0 license, but the official statement specifically states that the sound cloning feature is for academic research purposes only and is strictly forbidden to be used for any illegal activities. The developer is not responsible for any misuse of the model.