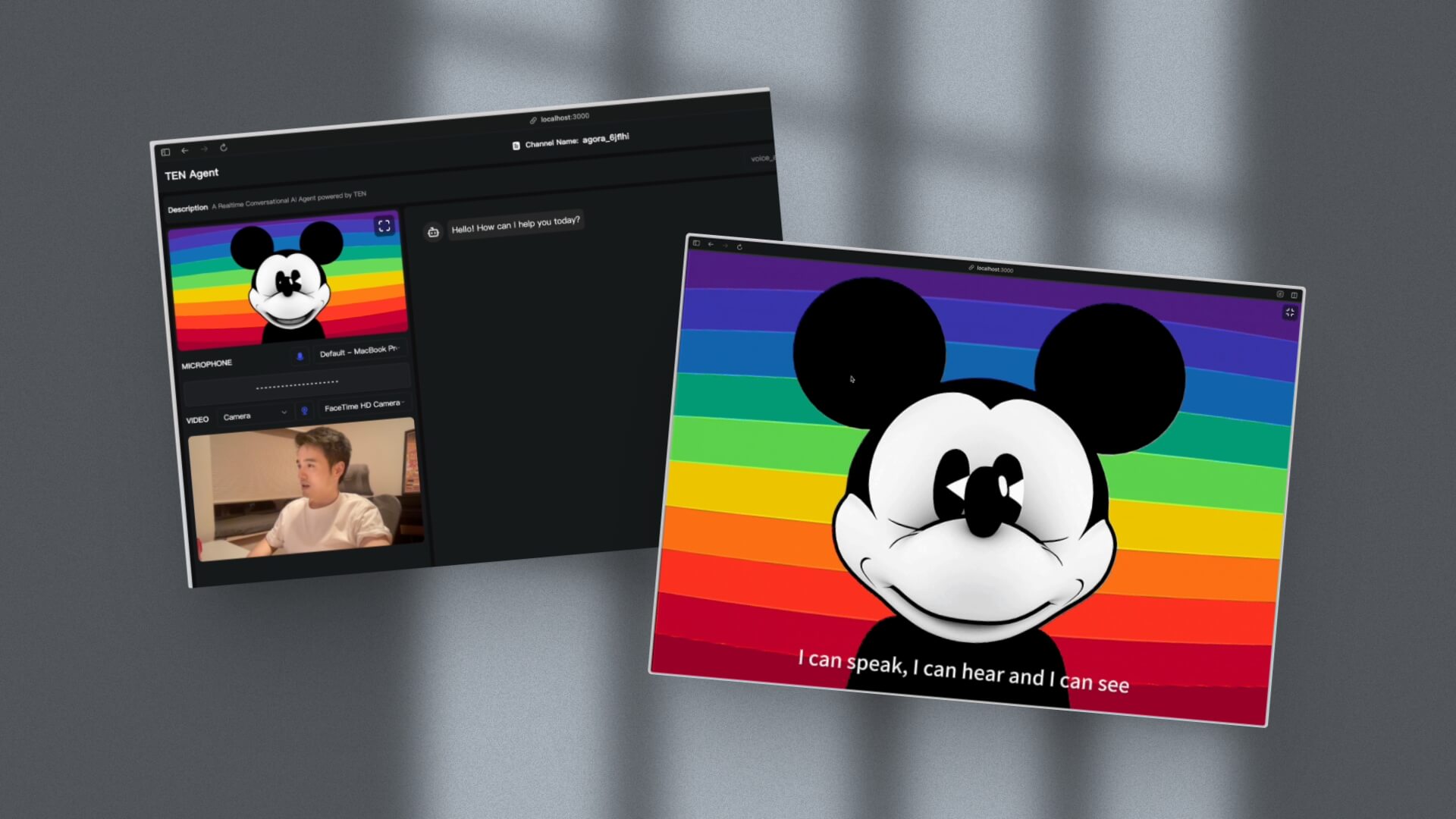

TEN Framework is an open source software platform that helps developers build real-time, multimodal, low-latency voice AI agents. It supports multiple programming languages, including C, C++, Go, Python, JavaScript, and TypeScript. Developers can use the TEN Framework to quickly create speech, visual, and text...

wukong-robot is an open source Chinese voice conversation robot and smart speaker project, designed to help developers quickly build personalized smart speakers. It supports Chinese speech recognition, speech synthesis, and multi-turn dialogue, and integrates with ChatGPT, Baidu, iFLYTEK, and other technologies. The project has a modular design in which plug-ins and features can be freely extended, suitable...

BAGEL is an open source multimodal foundation model developed by the ByteDance Seed team and hosted on GitHub. It integrates text comprehension, image generation, and image editing capabilities to support cross-modal tasks. The model has 7B active parameters (14B parameters in total) and uses Mixture-of-Tra...

RealtimeVoiceChat is an open source project that focuses on real-time, natural voice conversations with artificial intelligence. Users speak into a microphone; the system captures the audio through the browser, quickly converts it to text, generates a reply from a large language model (LLM), and then converts that reply to speech output. The whole process runs in close to real time. The project adopts ...

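Stepsailor is a tool built for developers, centered on an AI command bar. Developers can use it to make their software products understand what users say: for example, when a user says "add a new task", the software carries it out automatically. It integrates into SaaS products through a simple SDK and does not require developers to know AI technology. S...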

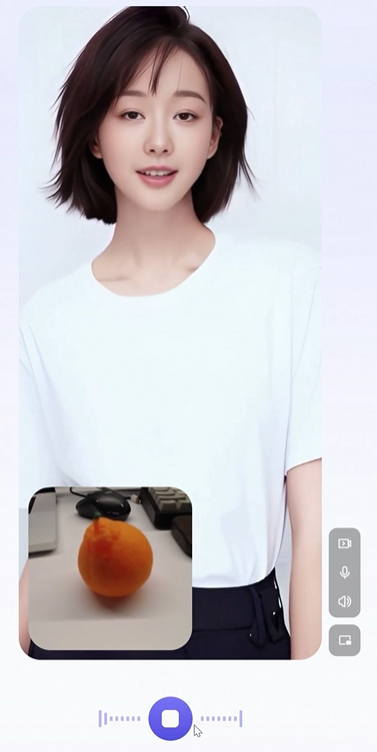

OpenAvatarChat is an open source project developed by the HumanAIGC-Engineering team and hosted on GitHub. It is a modular digital human conversation tool that allows users to run full functionality on a single PC. The project combines real-time video, speech recognition, and digital human technology...

VideoMind is an open source multimodal AI tool focused on reasoning, Q&A, and summary generation for long videos. It was developed by Ye Liu of the Hong Kong Polytechnic University and a team from Show Lab at the National University of Singapore. The tool mimics the way humans understand video by breaking down the task into steps such as planning, grounding, verifying, and answering, one by...

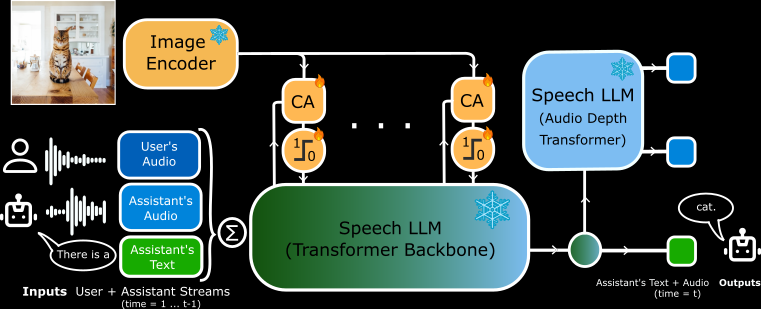

MoshiVis is an open source project developed by Kyutai Labs and hosted on GitHub. It is based on the Moshi speech-text model (7B parameters), with about 206 million new adaptation parameters and the frozen PaliGemma2 vision encoder (400M parameters), allowing the model...

Qwen2.5-Omni is an open source multimodal AI model developed by the Alibaba Cloud Qwen team. It can process multiple input types, such as text, images, audio, and video, and generate text or natural speech responses in real time. The model was released on March 26, 2025, and the code and model files are hosted on GitHu...

xiaozhi-esp32-server is a tool that provides backend services for the Xiaozhi AI chatbot (xiaozhi-esp32). It is written in Python and uses the WebSocket protocol to help users quickly build a server to control ESP32 devices. This project is suitable ...

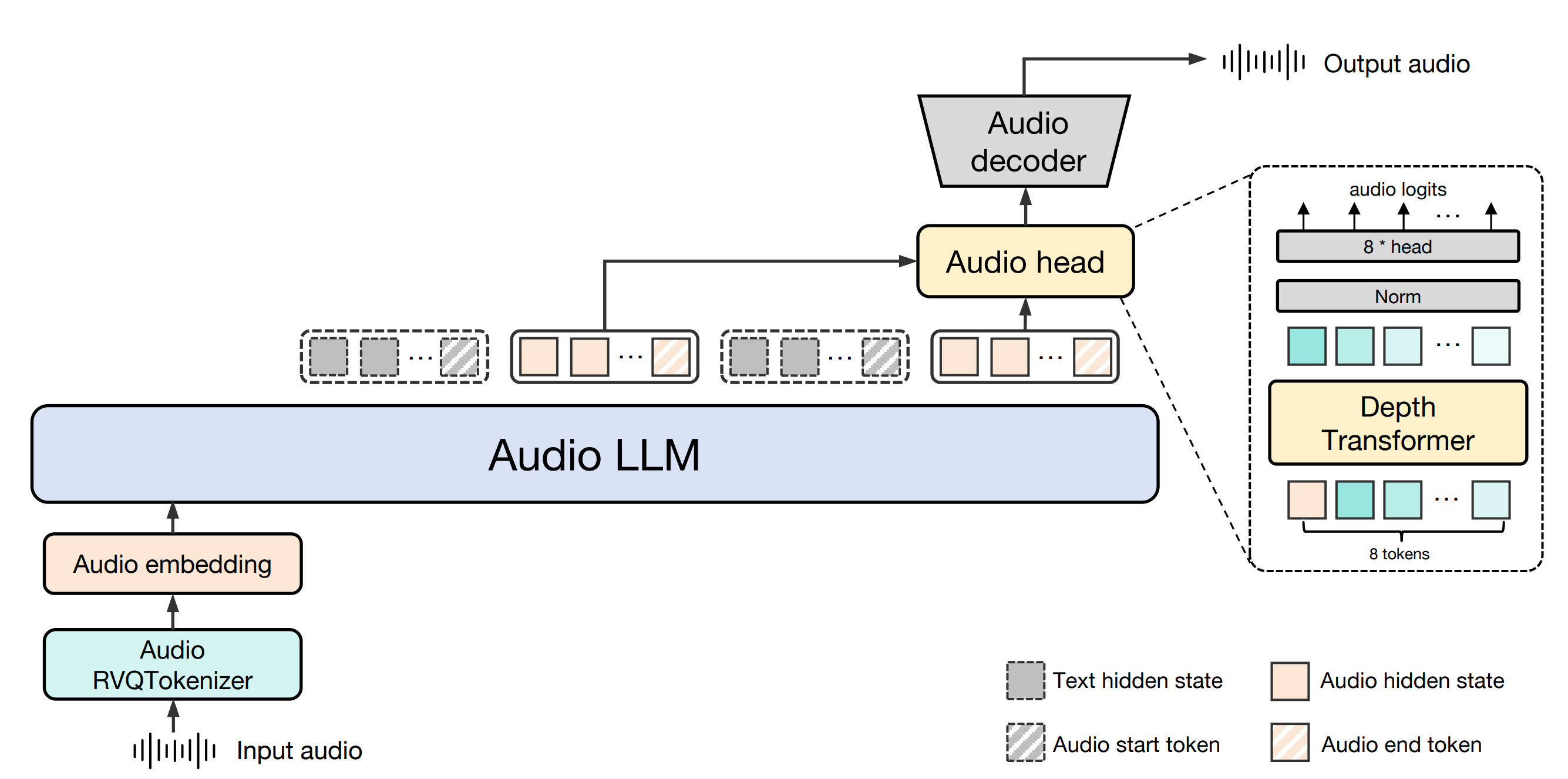

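Baichuan-Audio is an open source project developed by Baichuan Intelligence (baichuan-inc) and hosted on GitHub, focused on end-to-end voice interaction technology. The project provides a complete audio processing framework that converts speech input into discrete audio tokens and then uses a large model to generate the corresponding text...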

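PowerAgents is an AI agent platform focused on web automation tasks. Users can create and deploy AI agents that click, type, and extract data. The platform supports scheduling tasks to run automatically on an hourly, daily, or weekly basis, and users can watch the agents work in real time. It not only lets users build their own agents, but also has a...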

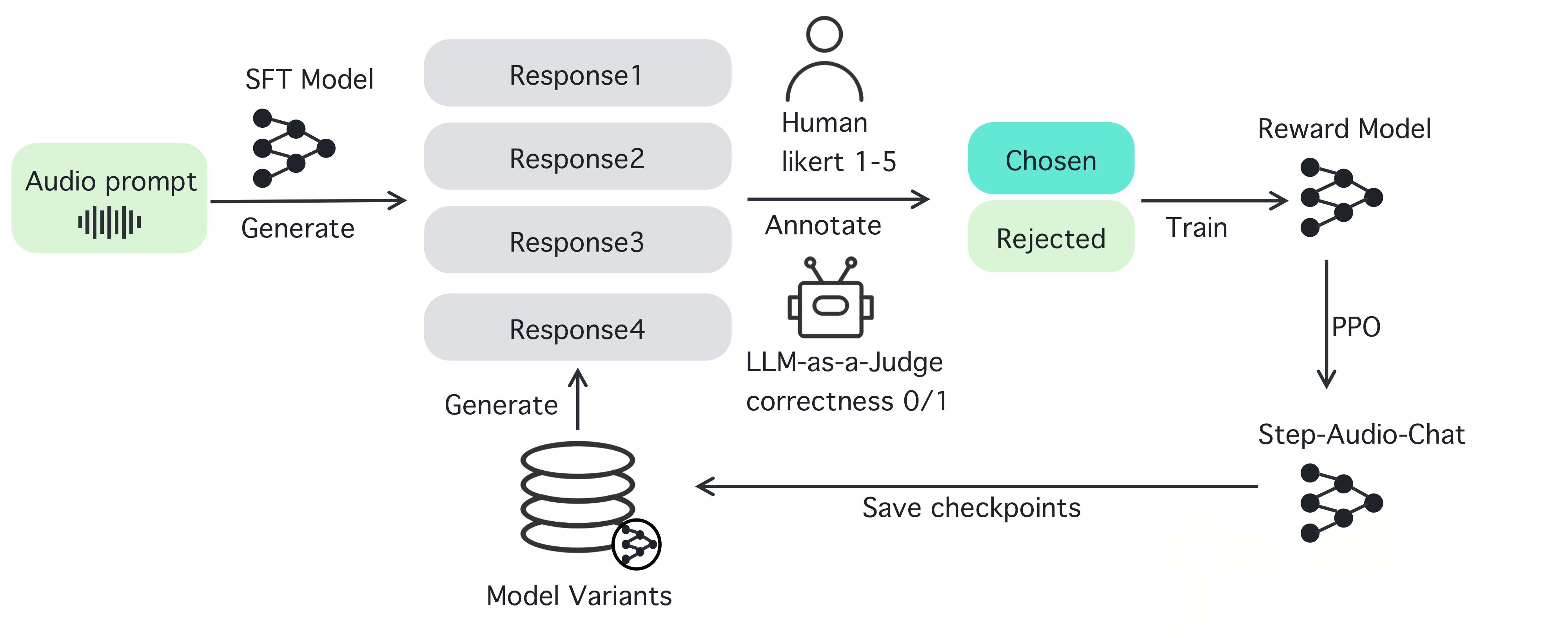

Step-Audio is an open source intelligent voice interaction framework designed to provide out-of-the-box speech understanding and generation capabilities for production environments. The framework supports multilingual dialogue (e.g., Chinese, English, Japanese), emotional speech (e.g., happy, sad), regional dialects (e.g., Cantonese, Sichuanese), and adjustable speech rate and prosodic style (e.g., rap). step-...

Gemini Cursor is a desktop intelligent assistant based on Google's Gemini 2.0 Flash (experimental) model. It enables visual, auditory, and voice interactions via a multimodal API, providing a real-time, low-latency user experience. The project, created by @13point5, aims to pass...

DeepSeek-VL2 is a series of advanced Mixture-of-Experts (MoE) vision-language models that significantly improve on their predecessor, DeepSeek-VL. The models excel in tasks such as visual question answering, optical character recognition, document/table/chart comprehension, and visual grounding. De...

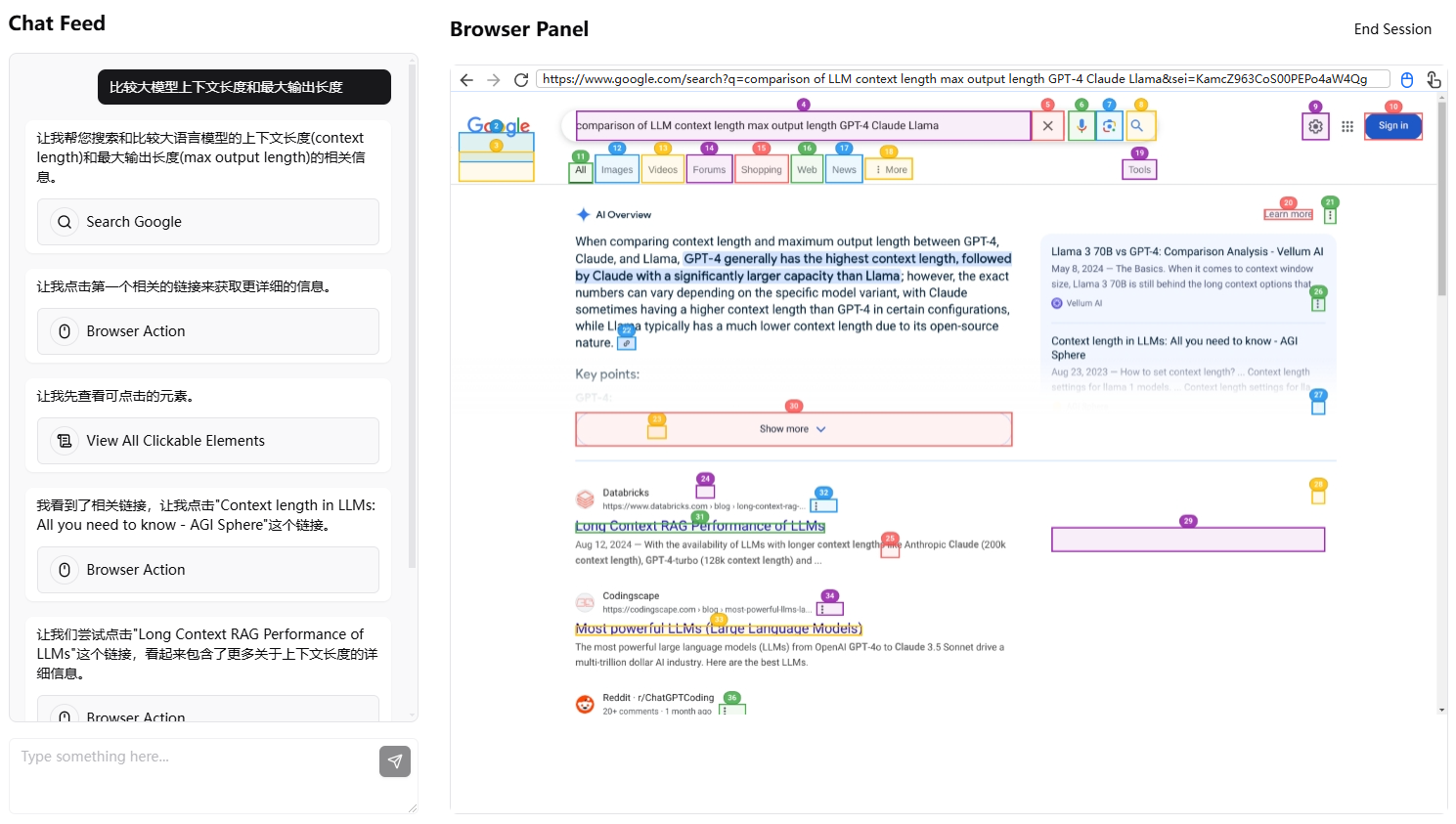

AI Web Operator is an open source AI browser operator tool designed to simplify the user experience in the browser by integrating multiple AI technologies and SDKs. Built on Browserbase and the Vercel AI SDK, the tool supports a variety of large language models (LLMs)...

SpeechGPT 2.0-preview is the first human-like real-time interaction system introduced by OpenMOSS, trained on millions of hours of speech data. SpeechGPT 2.0-previ...

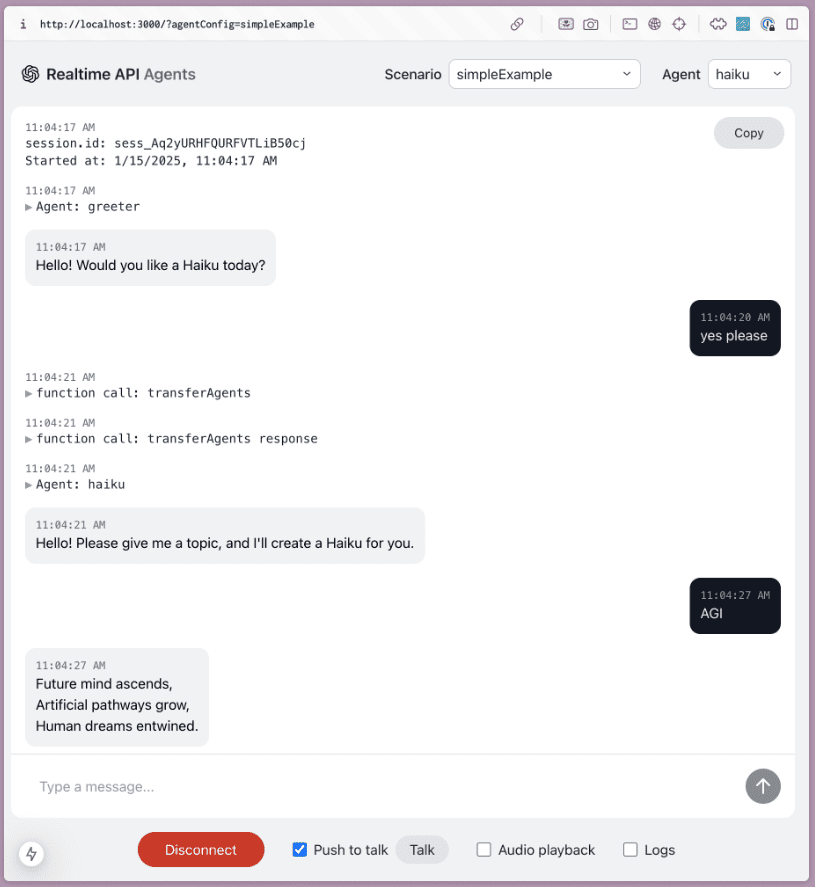

OpenAI Realtime Agents is an open source project that aims to show how OpenAI's Realtime API can be used to build multi-agent voice applications. It provides a high-level agent pattern (borrowed from OpenAI Swarm) that allows developers to build complex multi-agent voice systems in a short period of time. The project ...

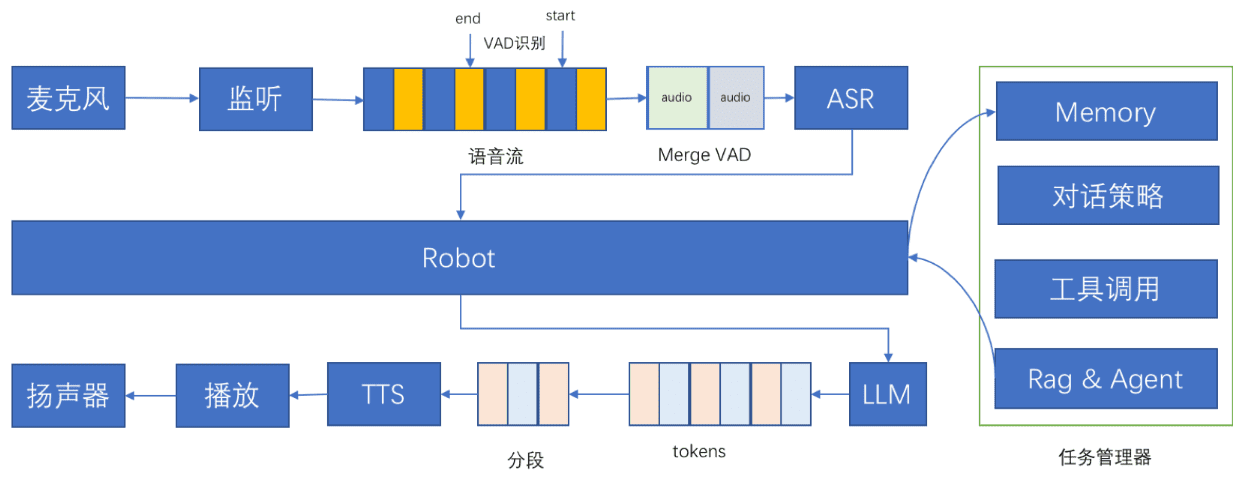

Bailing is an open source voice conversation assistant designed to engage in natural conversations with users through speech. The project combines automatic speech recognition (ASR), voice activity detection (VAD), a large language model (LLM), and text-to-speech synthesis (TTS) to implement a GPT-4o-like voice conversation bot. The end-to-end latency of Bailing's ...
