DeepInfra is a cloud platform that gives developers API access to AI models. Through a simple API, users can run a variety of mainstream open-source models, including Meta's Llama 3, models from Mistral AI, and Google's Gemma. The platform's defining feature is that it is "serverless": users do not need to deploy or maintain their own server hardware and simply pay for what they use. This greatly lowers the barrier to developing and operating AI applications. DeepInfra Chat is an intuitive demo page provided by the platform, where users can talk directly to the large language models deployed on DeepInfra and get a feel for the performance and characteristics of different models. It also shows developers what the API service delivers in practice, so they can quickly test and evaluate models before integrating them into their own applications.

Feature List

- Multi-model support: The platform offers a wide selection of open-source large language models, including the Llama, Mistral, and Gemma families.

- Online chat interface: A clean web chat window lets users interact directly with a selected AI model for testing, with no registration or configuration required.

- API service: The core offering is a stable, scalable API that lets developers easily integrate AI model capabilities into their own software or services.

- Pay-as-you-go: Billing is based on usage (usually the number of tokens processed), so users pay only for the compute they actually consume, which keeps costs down.

- Production-ready out of the box: The platform provides scalable, low-latency, production-grade infrastructure, so developers do not have to deal with the complexities of machine learning operations (MLOps).

- Developer tool integration: Supports mainstream AI development frameworks such as LangChain and LlamaIndex, simplifying the construction of complex AI applications.
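To make the per-token billing model concrete, the sketch below estimates the cost of a single request from its input and output token counts. It assumes prices are quoted in USD per million tokens with separate input and output rates; the rates used here are hypothetical placeholders, not DeepInfra's actual prices, which vary by model. In OpenAI-compatible responses the token counts are typically reported in a "usage" object.

```python
def estimate_cost_usd(
    prompt_tokens: int,
    completion_tokens: int,
    input_price_per_m: float,
    output_price_per_m: float,
) -> float:
    """Estimate one request's cost under per-token billing.

    Prices are in USD per million tokens. The figures used below are
    hypothetical placeholders, not DeepInfra's actual rates.
    """
    if prompt_tokens < 0 or completion_tokens < 0:
        raise ValueError("token counts must be non-negative")
    input_cost = prompt_tokens * input_price_per_m / 1_000_000
    output_cost = completion_tokens * output_price_per_m / 1_000_000
    return input_cost + output_cost


# Token counts would normally come from the response's "usage" object,
# e.g. result["usage"]["prompt_tokens"] and result["usage"]["completion_tokens"].
cost = estimate_cost_usd(1_200, 800, input_price_per_m=0.03, output_price_per_m=0.06)
print(f"Estimated cost: ${cost:.6f}")
```

Because cost scales linearly with tokens, shorter prompts and capped output lengths reduce spending directly.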

How to Use

DeepInfra Chat serves two types of users: general users who want to quickly try out and compare different large language models, and developers who need to integrate AI model capabilities into their products. The following sections cover each case.

1. Web chat (quick trial)

If you simply want to try the models' conversational abilities, usage is straightforward:

- Access to the website: Open the URL in the browser

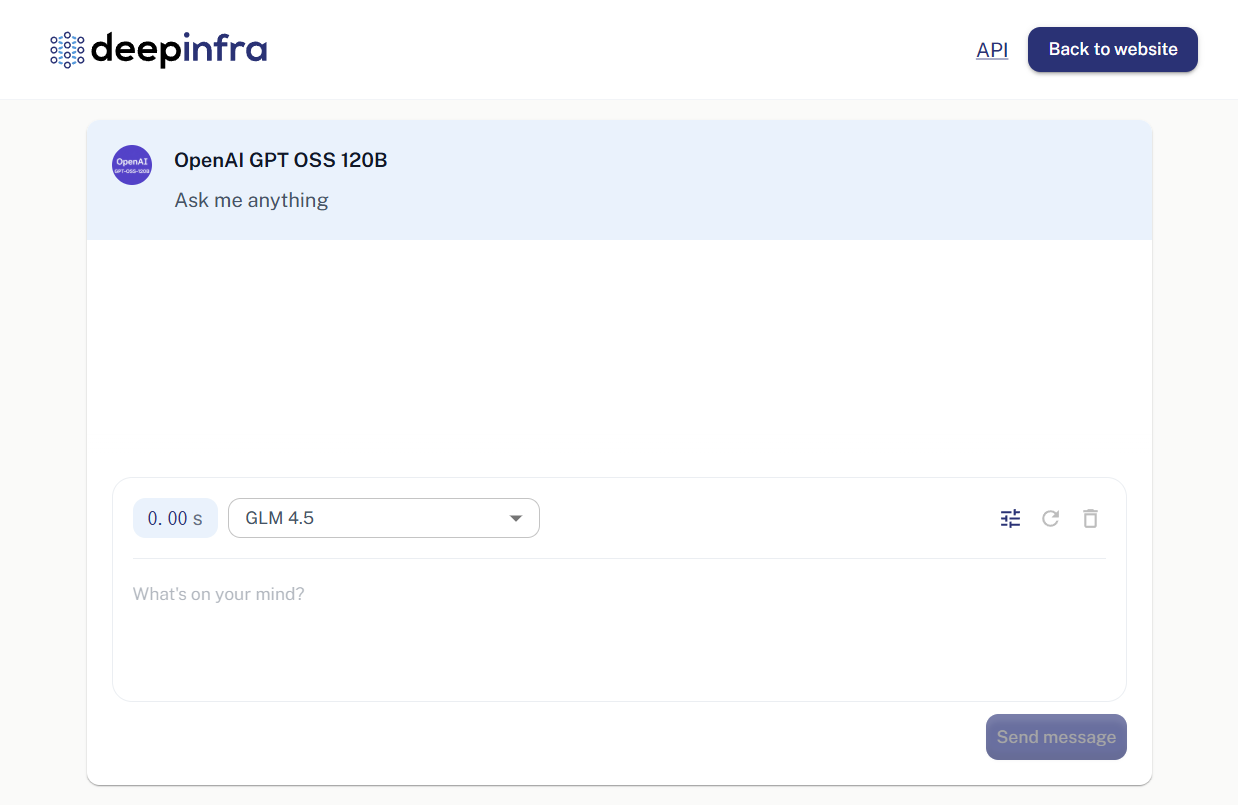

https://deepinfra.com/chat。 - Select Model: When the page loads, you will see a chat interface. At the top of the interface the name of the model currently being used is usually displayed (e.g. "OpenAI GPT OSS 120B"). You can click on the model name and switch by selecting another model you are interested in from the drop-down list, such as the Llama 3 or Mistral series.

- Start a conversation: Type your question or message in the input box at the bottom of the page, then press Enter or click the send button.

- View the reply: The model's response appears directly in the chat window. You can keep asking follow-up questions over multiple rounds to test the model's reasoning, language, and knowledge.

This process does not require a login or any configuration and is perfect for quickly reviewing models.

2. API service (for developers)

For developers, DeepInfra's core value is its API service, through which you can integrate these models' capabilities into your website, app, or backend service.

Step 1: Create an account and get an API key

- Register/log in: Visit the DeepInfra website at https://deepinfra.com/. You can usually log in directly with your GitHub account.

- Open the API key page: After logging in, go to your account dashboard and find the API Keys management page (usually at https://deepinfra.com/dash/api_keys).

- Create a new key: Click the "New API Key" button, give the key a name (e.g. "MyTestApp"), and click Generate.

- Save the key: The system generates a string beginning with "di-"; this is your API key. Copy it immediately and store it securely: for security reasons, the full key is shown only once.
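Since the key is shown only once and must stay secret, a common practice is to keep it out of source code and read it from an environment variable. The sketch below does that; the variable name DEEPINFRA_API_KEY is an assumption chosen for this example, not something the platform requires, and the Bearer header format matches the curl and Python examples in this guide.

```python
import os
from typing import Mapping


def load_api_key(
    var_name: str = "DEEPINFRA_API_KEY",
    environ: Mapping[str, str] = os.environ,
) -> str:
    """Read the API key from an environment variable instead of hard-coding it.

    DEEPINFRA_API_KEY is a variable name chosen for this example;
    the platform does not require any particular name.
    """
    key = environ.get(var_name, "").strip()
    if not key:
        raise RuntimeError(f"Set the {var_name} environment variable to your API key")
    return key


def auth_headers(api_key: str) -> dict:
    """Headers for the OpenAI-compatible endpoint (Bearer-token auth)."""
    return {
        "Content-Type": "application/json",
        "Authorization": f"Bearer {api_key}",
    }
```

After running export DEEPINFRA_API_KEY=... in your shell, auth_headers(load_api_key()) produces the headers for a request. The environ parameter exists only so the function can be tested without touching the real environment.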

Step 2: Call the API

DeepInfra's API is compatible with the OpenAI format, which makes migration and integration easy. Its core chat endpoint is:

https://api.deepinfra.com/v1/openai/chat/completions

You can call it using any tool or programming language that supports HTTP requests.

A simple example using curl:

Open a terminal, replace {YOUR_API_KEY} in the command below with the key you just generated, and replace {MODEL_ID} with the ID of the model you want to call (e.g. meta-llama/Meta-Llama-3-8B-Instruct).

```shell
curl -X POST https://api.deepinfra.com/v1/openai/chat/completions \
  -H "Content-Type: application/json" \
  -H "Authorization: Bearer {YOUR_API_KEY}" \
  -d '{
    "model": "{MODEL_ID}",
    "messages": [
      {"role": "user", "content": "Hello, please introduce yourself in Chinese."}
    ]
  }'
```
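If curl is unavailable and you would rather not install third-party packages, the same POST request can be built with Python's standard library alone. This is a minimal sketch under the assumption that the endpoint behaves exactly as in the curl command; the function names are hypothetical and the request is only sent when send_chat_request is called.

```python
import json
import urllib.request

API_URL = "https://api.deepinfra.com/v1/openai/chat/completions"


def build_chat_request(api_key: str, model_id: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) the same POST request as the curl command."""
    body = {
        "model": model_id,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def send_chat_request(req: urllib.request.Request, timeout: float = 60.0) -> str:
    """Send the request and return the first choice's message text.

    urlopen raises urllib.error.HTTPError for non-2xx responses.
    """
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        result = json.loads(resp.read().decode("utf-8"))
    return result["choices"][0]["message"]["content"]
```

Usage: send_chat_request(build_chat_request("{YOUR_API_KEY}", "meta-llama/Meta-Llama-3-8B-Instruct", "Hello")) returns the model's reply as a string. Separating construction from sending makes the request easy to inspect or test offline.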

Example using Python:

You need to install the requests library (pip install requests).

```python
import requests

# Replace with your API key
api_key = "{YOUR_API_KEY}"

# Replace with the ID of the model you want to use
model_id = "meta-llama/Meta-Llama-3-8B-Instruct"

url = "https://api.deepinfra.com/v1/openai/chat/completions"

headers = {
    "Content-Type": "application/json",
    "Authorization": f"Bearer {api_key}",
}

data = {
    "model": model_id,
    "messages": [
        {"role": "user", "content": "Hello, please introduce yourself in Chinese."}
    ],
    # True switches to streamed (server-sent event) output,
    # which needs different handling than response.json() below
    "stream": False,
}

# json= serializes the payload; timeout prevents the call from hanging forever
response = requests.post(url, headers=headers, json=data, timeout=60)

if response.status_code == 200:
    result = response.json()
    # Print the content returned by the model
    print(result["choices"][0]["message"]["content"])
else:
    print(f"Request failed, status code: {response.status_code}")
    print(response.text)
```
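When "stream" is set to True, the reply arrives in pieces rather than as one JSON body. The sketch below assumes DeepInfra follows OpenAI's chat-completions streaming format, as its OpenAI compatibility suggests: server-sent event lines of the form "data: {json}", each carrying a choices[0].delta.content fragment, terminated by "data: [DONE]". The function name is hypothetical.

```python
import json
from typing import Iterable, Iterator, Union


def iter_stream_text(lines: Iterable[Union[str, bytes]]) -> Iterator[str]:
    """Yield text fragments from an OpenAI-style streaming response.

    Assumes server-sent events where each data line is 'data: {json}'
    and the stream ends with 'data: [DONE]'.
    """
    for raw in lines:
        # requests' iter_lines() yields bytes by default; decode them as UTF-8
        line = raw.decode("utf-8") if isinstance(raw, bytes) else raw
        line = line.strip()
        if not line.startswith("data:"):
            continue  # blank separators, comments, keep-alives
        payload = line[len("data:"):].strip()
        if payload == "[DONE]":
            break
        chunk = json.loads(payload)
        choices = chunk.get("choices") or []
        if not choices:
            continue  # e.g. a chunk that carries only usage data
        delta = choices[0].get("delta") or {}
        text = delta.get("content")
        if text:  # the first chunk often carries only the role, no content
            yield text
```

With requests, set "stream": True in the payload, pass stream=True to requests.post, and feed response.iter_lines() into iter_stream_text, printing each fragment with print(piece, end="", flush=True) to display the reply as it is generated.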

The full list of supported model IDs is available in the official DeepInfra documentation.
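The multi-round conversations described for the web chat work differently over the API: in the OpenAI chat-completions format, each request carries the whole conversation in its "messages" list, so the client must keep the history itself. The sketch below assumes exactly that. The Conversation class and its send callable are hypothetical names; send could wrap the requests.post call shown earlier and return result["choices"][0]["message"]["content"].

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


class Conversation:
    """Track chat history so every request carries the earlier turns.

    `send` is any callable that takes the full message list and returns
    the assistant's reply text (a hypothetical wrapper, not a DeepInfra API).
    """

    def __init__(self, send: Callable[[List[Message]], str], system_prompt: str = "") -> None:
        self.send = send
        self.messages: List[Message] = []
        if system_prompt:
            # An optional "system" message sets the assistant's behaviour
            self.messages.append({"role": "system", "content": system_prompt})

    def ask(self, user_text: str) -> str:
        user_msg = {"role": "user", "content": user_text}
        # Send history plus the new message; commit both only once the call succeeds
        reply = self.send(self.messages + [user_msg])
        self.messages.append(user_msg)
        self.messages.append({"role": "assistant", "content": reply})
        return reply
```

Injecting send keeps the history logic testable offline with a stand-in function. Because the full history is resent on every turn, prompt token counts (and therefore cost) grow as the conversation gets longer.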

Application Scenarios

- AI chatbot development

Build customer service bots, intelligent assistants, or domain-specific Q&A bots for websites, apps, or internal systems. Developers can choose different models depending on their cost and performance requirements.

- Content creation assistance

Use the models' text generation capabilities to help write marketing copy, blog posts, code, and emails, or to polish existing text.

- Education and research

Researchers and students can easily call and test a variety of cutting-edge open-source AI models without the high cost of deploying their own hardware.

- Rapid prototyping

Product managers and developers can use DeepInfra early in product development to quickly build prototypes with AI features for validating functionality and demonstrating results.

Q&A

- Is DeepInfra Chat free?

The web chat experience is free but subject to usage limits; it is mainly intended for feature demonstrations and model evaluation. API calls are billed according to the chosen model and the number of tokens processed, and the platform provides a certain amount of free trial credit.

- Which AI models can I use?

DeepInfra supports a wide range of mainstream open-source large language models, including but not limited to Meta's Llama series (e.g. Llama 3), Mistral AI's models, Google's Gemma, and code-focused models such as CodeLlama. The full list of supported models is available on the official website.

- Who are the main users of the platform?

It is aimed primarily at AI application developers, technical researchers, and organizations that want to integrate AI capabilities into their existing business. Its web chat is also suitable for anyone interested in AI who wants to try out different models.

- Do I need to configure my own server to use the API?

No. DeepInfra is a serverless platform that hosts, runs, and elastically scales all the models. Developers only need to send requests with their API key and do not have to worry about the underlying hardware or operations.