Preface: Building Your Own AI Agent Studio

Coze Studio is a low-code AI agent development platform open-sourced by ByteDance. It provides a visual toolset that lets developers rapidly build, debug, and deploy AI agents, applications, and workflows with minimal code. This approach lowers the technical barrier and also provides a solid foundation for building highly customized AI products.

An AI agent is an intelligent program that understands user intent and autonomously plans and executes complex tasks. Deploying Coze Studio locally means that you have complete control over your data and models, which provides strong privacy and flexibility for development and experimentation.

The back end of the platform is written in Golang, and the front end uses React + TypeScript. The overall architecture is based on microservices and domain-driven design (DDD), which is intended to give the system high performance and scalability.

This article walks you through deploying the open-source edition of Coze Studio locally and configuring it to connect to model services from a local Ollama instance and from OpenRouter.

1. Installing Ollama: your own private large model

Ollama is a lightweight, extensible framework for running large language models locally. It greatly simplifies running models such as Llama 3, Qwen, and Gemma. With Ollama, you can use AI to process private data in a completely offline environment without relying on any third-party cloud service, balancing security and cost.

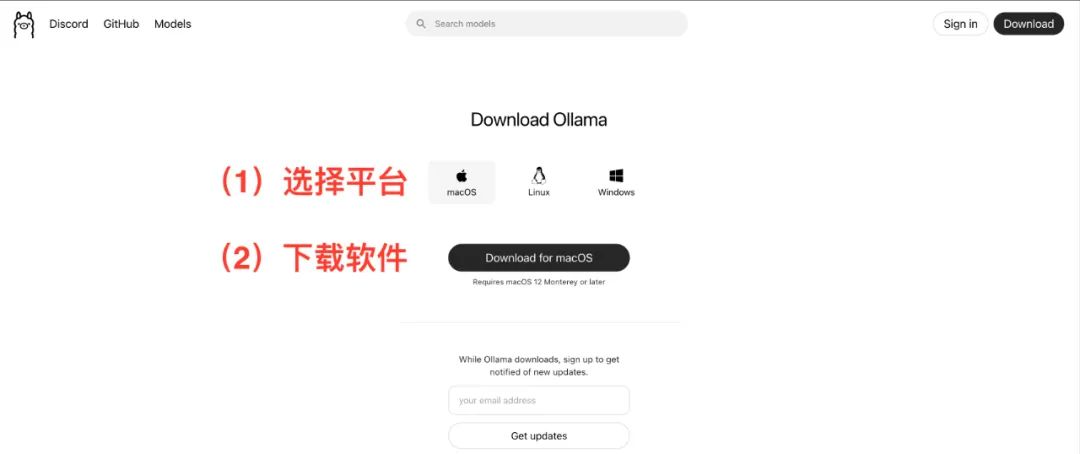

Visit https://ollama.com/ to download and install the client for your operating system (macOS, Linux, or Windows). The installation is straightforward: just follow the wizard.

Pulling a model

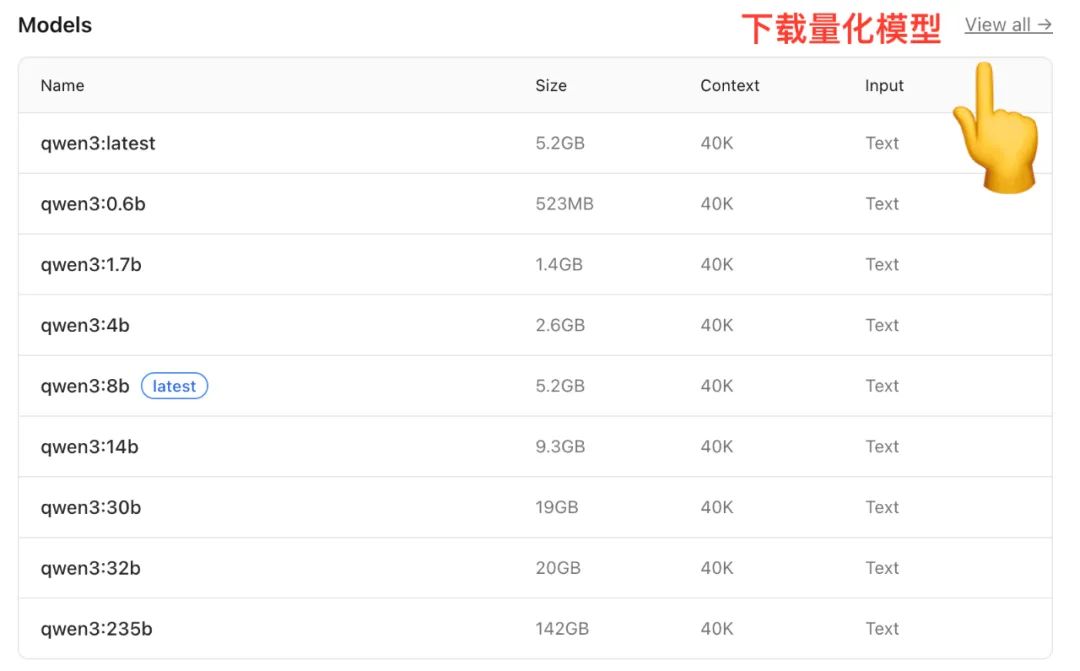

After the installation is complete, you need to download a model from the Ollama model library to your machine. Take the Qwen model as an example: its library page offers versions at different parameter scales.

(Image source: https://ollama.com/library/qwen)

Models of different sizes have different memory (RAM) requirements, and this is a factor to consider when making a selection:

- 7B Model: Recommended 16GB RAM

- 14B Model: Recommended 32GB RAM

- 72B Model: Recommended 64GB RAM

Many models also provide quantized versions. Quantization is a technique that reduces the size and memory footprint of a model by lowering the precision of its weights while largely preserving the model's quality, allowing the model to run more efficiently on consumer-grade hardware.
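To make the effect of quantization concrete, here is a rough back-of-the-envelope sketch. It assumes that weight memory is approximately the parameter count times the bits per weight divided by 8, and it counts 1 GB as 10^9 bytes. The function name estimate_weights_gb is made up for this illustration. The estimate covers weights only, so actual RAM usage will be higher once the context cache and runtime overhead are included.

```shell
# Rough weight-only estimate: GB = parameters (in billions) * bits_per_weight / 8.
# Assumption: ignores KV cache, activations, and runtime overhead.
estimate_weights_gb() {
  params_billions="$1"   # e.g. 14 for a 14B model
  bits_per_weight="$2"   # e.g. 16 for FP16, 4 for 4-bit quantization
  LC_ALL=C awk -v p="$params_billions" -v b="$bits_per_weight" \
    'BEGIN { printf "%.1f\n", p * b / 8 }'
}

estimate_weights_gb 14 16   # prints 28.0 (14B model at 16-bit precision)
estimate_weights_gb 14 4    # prints 7.0  (same model at 4-bit precision)
```

Under these assumptions, moving a 14B model from 16-bit to 4-bit weights cuts the weight footprint to a quarter, which is why quantized versions fit on machines with far less memory.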

Open a terminal (Terminal or Command Prompt) and enter the following command to pull a medium-sized model:

ollama run qwen:14b

This command downloads the specified model from the Ollama library if it is not already present locally, then starts an interactive session with it. The download time depends on your network conditions.
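Once the download finishes, you may want to confirm that the model is visible to other programs, not just to the interactive session. The sketch below assumes Ollama is serving its HTTP API on the default address http://localhost:11434 and queries the /api/tags endpoint, which lists local models. The helper name ollama_has_model is hypothetical, and the check is a plain text match on the response rather than a full JSON parse.

```shell
# Returns success if the local Ollama API reports the given model name.
# Assumption: Ollama listens on its default address unless a base URL is passed.
ollama_has_model() {
  model="$1"
  base_url="${2:-http://localhost:11434}"
  # /api/tags returns a JSON list of local models; look for the quoted name.
  curl -fsS --max-time 5 "$base_url/api/tags" | grep -qF "\"$model\""
}
```

For example, `ollama_has_model qwen:14b && echo "model is available"` prints the message only when the model has been pulled and the Ollama service is reachable.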

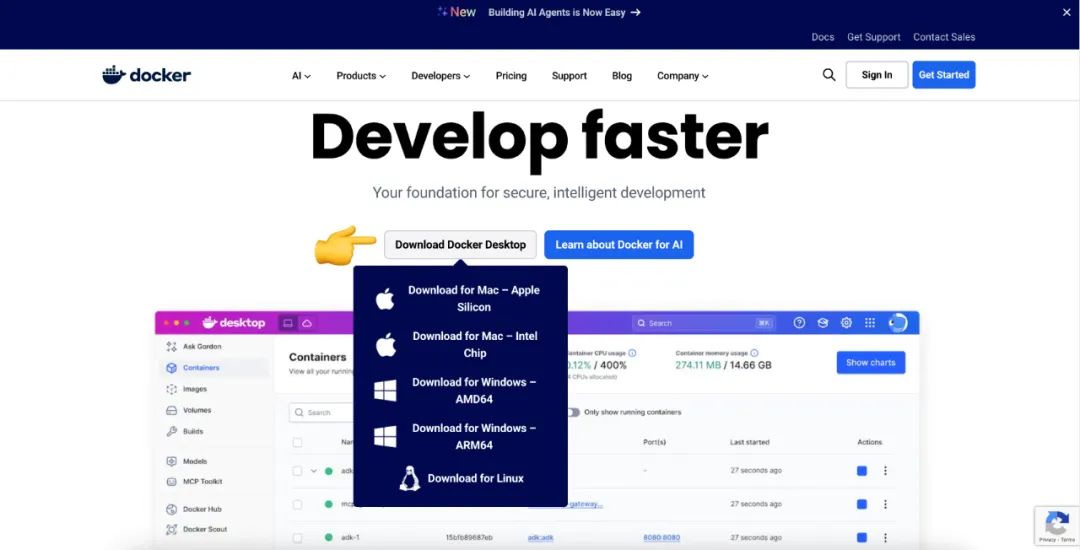

2. Installing Docker: a standardized tool for application deployment

Docker is a containerization technology that packages an application and all its dependencies into a standard, portable "container". In a nutshell, Docker is like a standardized shipping container, and Coze Studio is the cargo we are going to load. With Docker, we can easily run Coze Studio on any machine that supports Docker, without worrying about complex environment configuration or dependency conflicts.

Visit https://www.docker.com/ to download and install Docker Desktop. It provides a graphical installer for macOS, Linux, and Windows.

3. Local deployment of Coze Studio

3.1 Environment requirements

- Hardware: Make sure the machine has at least a 2-core CPU and 4 GB of RAM.

- Software: Install Docker and Docker Compose in advance, and make sure the Docker service is running.
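The software prerequisites can be checked from a terminal before going further. The following is a minimal sketch, assuming Docker Compose is installed as the `docker compose` plugin (as is the case with Docker Desktop). The function name check_docker and its messages are made up for this example.

```shell
# Verifies that the docker CLI, the compose plugin, and the daemon are available.
check_docker() {
  command -v docker >/dev/null 2>&1 \
    || { echo "docker CLI not found" >&2; return 1; }
  docker compose version >/dev/null 2>&1 \
    || { echo "Docker Compose plugin not found" >&2; return 1; }
  # 'docker info' talks to the daemon, so it fails if the service is not started.
  docker info >/dev/null 2>&1 \
    || { echo "Docker daemon is not running" >&2; return 1; }
  echo "docker ready"
}
```

Running `check_docker` prints "docker ready" when all three checks pass, and otherwise reports the first check that failed.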

3.2 Obtaining the Coze Studio Source Code

If Git is installed on the machine, running the git clone command is the most direct way to get the source code.

git clone https://github.com/coze-dev/coze-studio.git

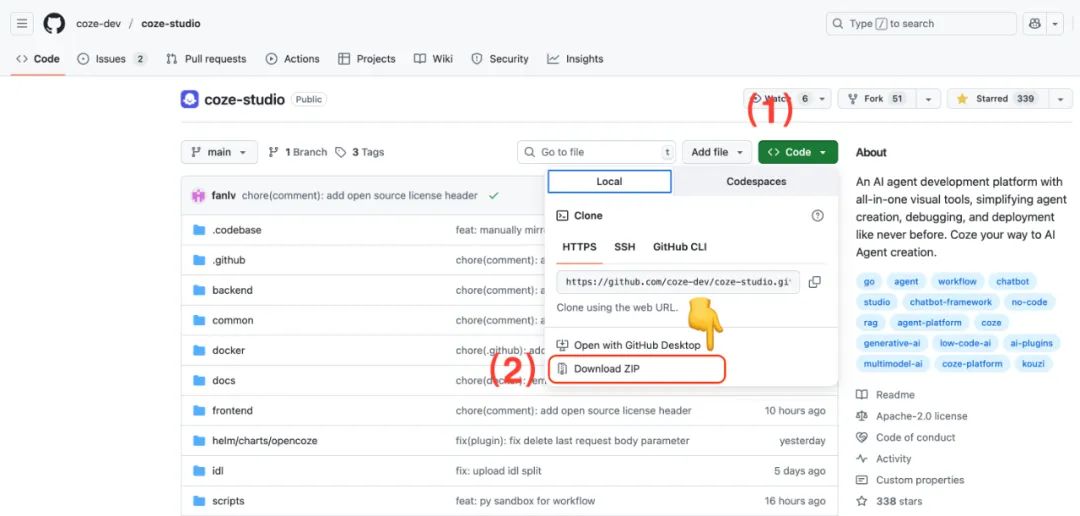

If Git is not installed, you can instead download the ZIP archive directly from the GitHub page.

3.3 Configuring Models for Coze Studio

Coze Studio supports multiple model services, including Ark (Volcengine Ark), OpenAI, DeepSeek, Claude, Ollama, Qwen, and Gemini.

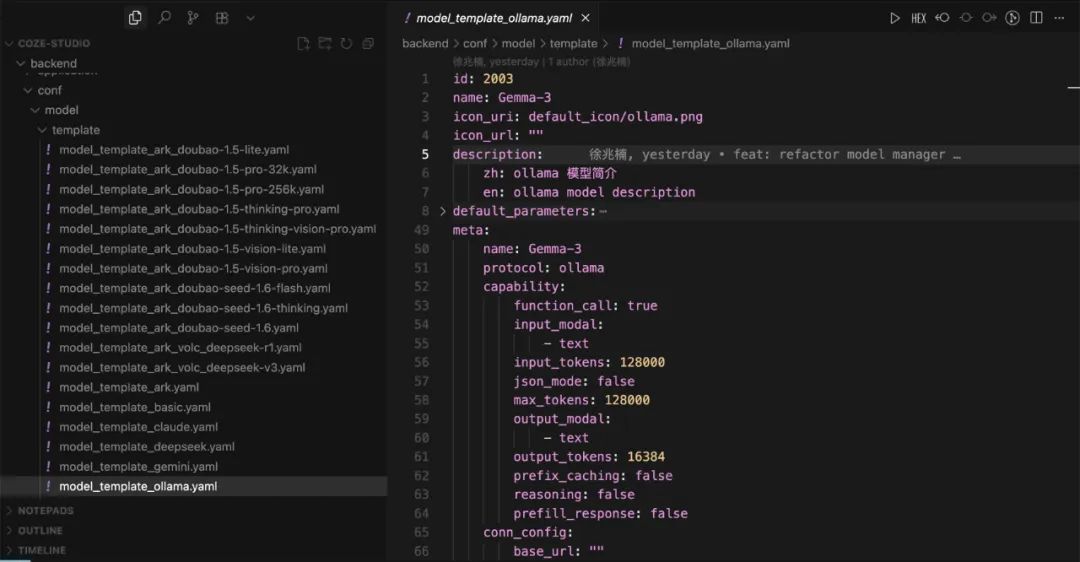

Open the coze-studio project in a code editor. The backend/conf/model/template directory holds configuration templates for the different model services.

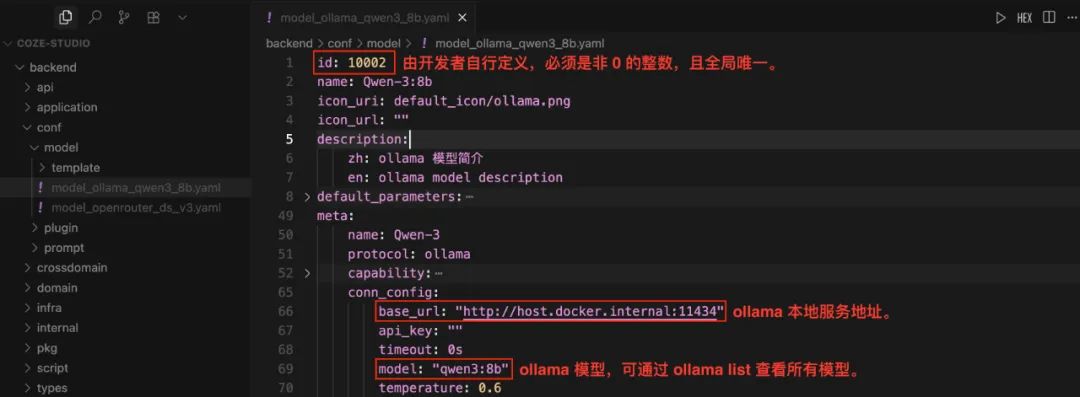

Configuring the Local Ollama Model

- Copy the model_template_ollama.yaml template file to the backend/conf/model directory.

- Rename it, e.g. model_ollama_qwen14b.yaml.

- Edit this file to configure the model, using qwen:14b as an example.

Note: the id field must be a globally unique, non-zero integer. For models that are already in use, do not modify their id, otherwise calls will fail. Before configuring, you can run the ollama list command to see which models already exist locally.
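Because every model file needs its own id, a quick scan for duplicates can catch copy-and-paste mistakes before the server is restarted. The sketch below is only an illustration: it assumes each model file in backend/conf/model declares its id on a top-level line of the form `id: <integer>`, and the function name find_duplicate_model_ids is hypothetical.

```shell
# Prints every id value that appears in more than one model config file.
# Assumption: each file has a top-level line "id: <integer>".
find_duplicate_model_ids() {
  conf_dir="${1:-backend/conf/model}"
  grep -h -E '^id:[[:space:]]*[0-9]+' "$conf_dir"/*.yaml 2>/dev/null \
    | sed -E 's/^id:[[:space:]]*([0-9]+).*/\1/' \
    | sort -n | uniq -d
}
```

Run `find_duplicate_model_ids` from the project root. Empty output means no id is repeated among the files in that directory. The templates in the template subdirectory are not scanned.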

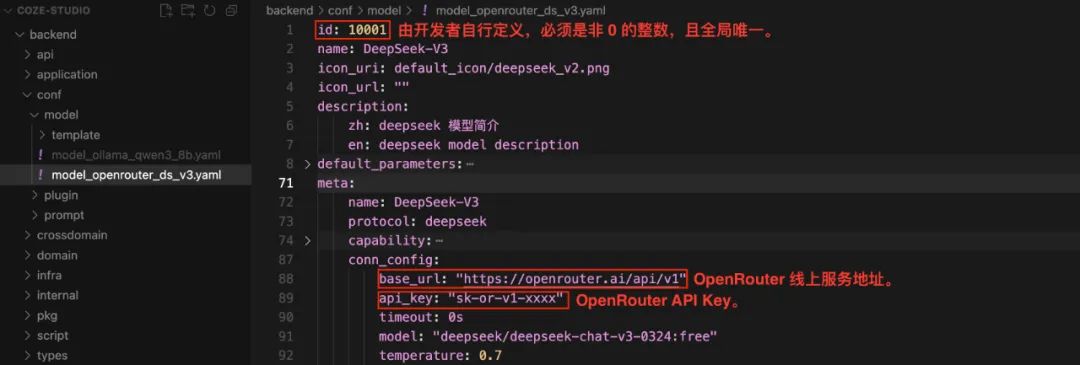

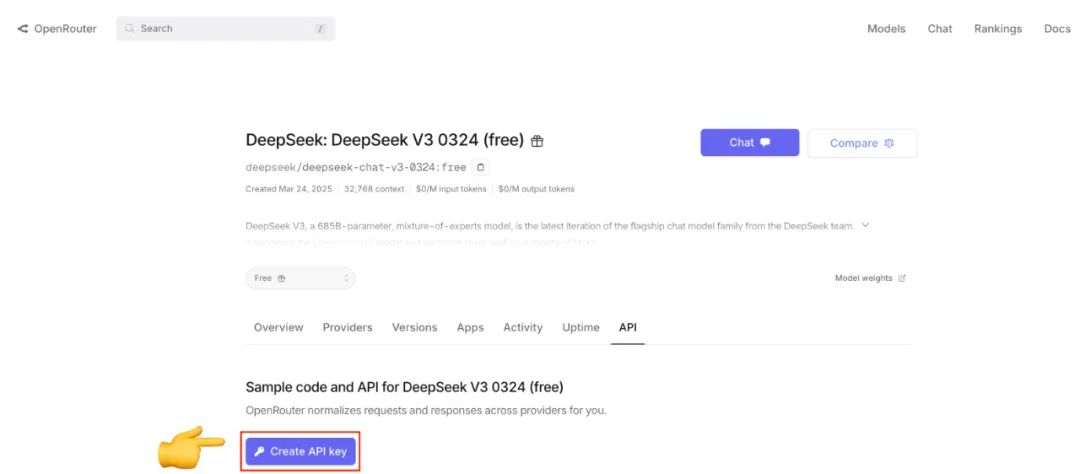

Configuring the OpenRouter Model

OpenRouter is a model aggregation service that lets developers call multiple models from different vendors through a unified API, simplifying API key management and model switching.

- Copy the model_template_deepseek.yaml template file to the backend/conf/model directory.

- Rename it to model_openrouter_ds_v2.yaml.

- Edit the file to configure the model, using DeepSeek-V2 as an example, and fill in the api_key obtained from OpenRouter.

If you do not yet have an OpenRouter API key, you can register on their official website and create one.

3.4 Deploy and start services

After you have configured the model, go to the project's docker directory, and then run the following command:

cd docker

cp .env.example .env

docker compose --profile '*' up -d

The --profile '*' parameter ensures that all services defined in the docker-compose.yml file (including optional services) are started. The first deployment pulls and builds the images, which can take a long time.

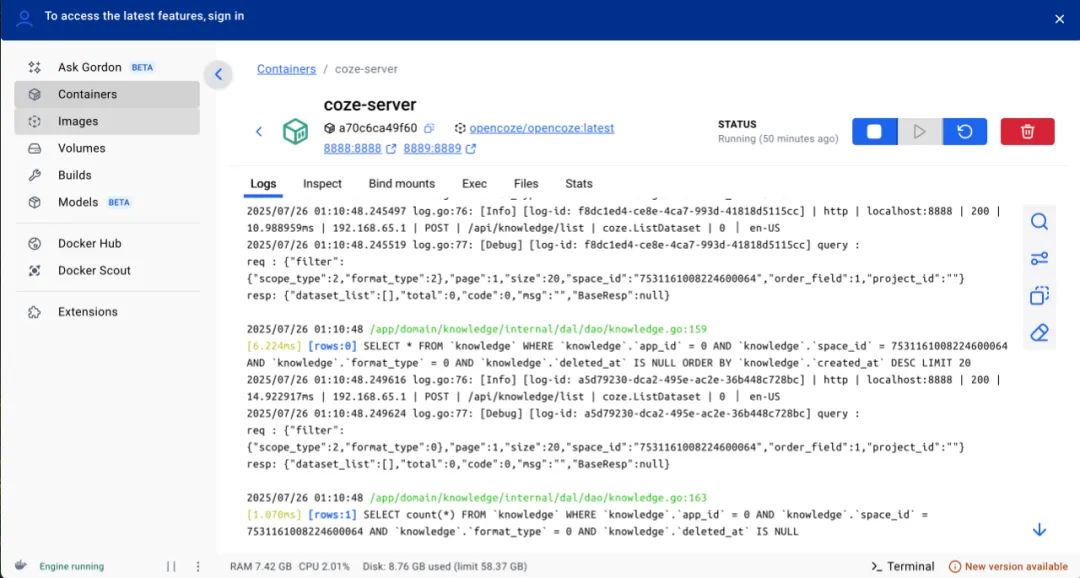

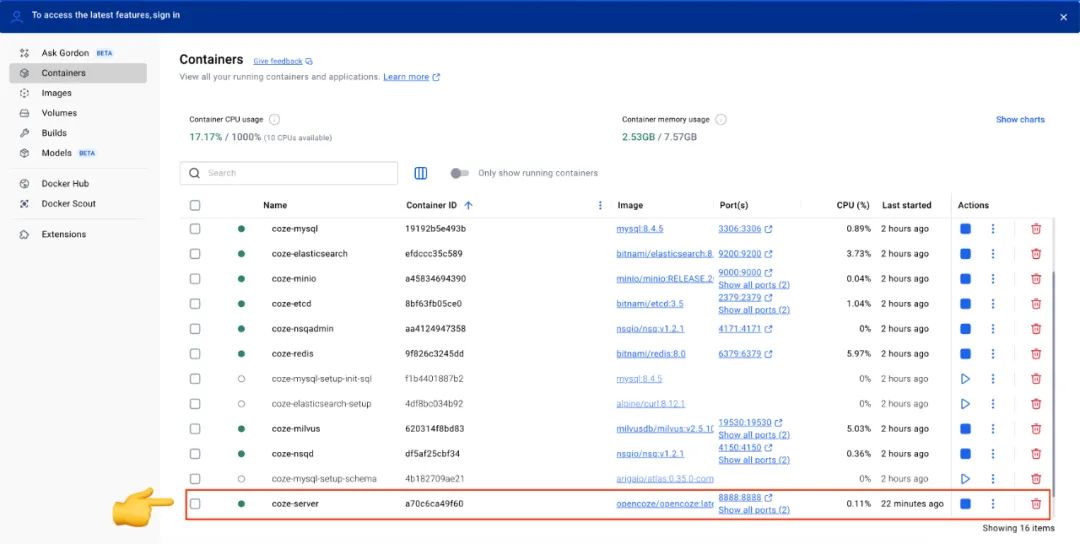

After startup, you can view the status of all services in the Docker Desktop interface. When the status indicator of the coze-server service turns green, Coze Studio has started successfully.

After each configuration file modification, you need to execute the following command to restart the service for the configuration to take effect:

docker compose --profile '*' restart coze-server

3.5 Using Coze Studio

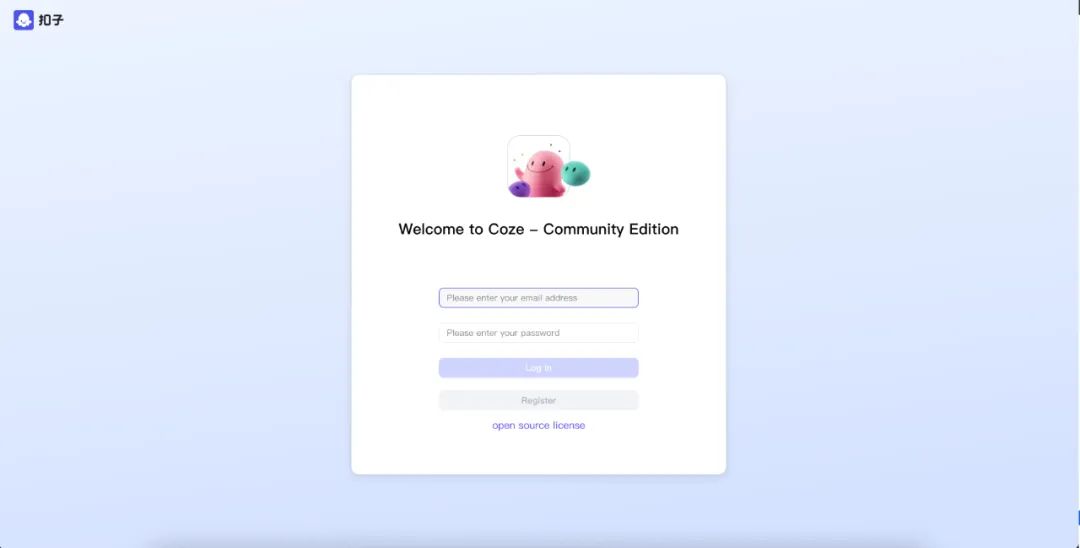

After the service has started, open http://localhost:8888/ in your browser.
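On a first deployment the web interface may take a while to come up, so it can be convenient to poll the address from a terminal instead of refreshing the browser. The following is a small sketch under stated assumptions: it treats any successful HTTP response from http://localhost:8888/ as "up", and the function name wait_for_http, the retry count, and the delay are all illustrative choices.

```shell
# Polls a URL until it responds, or gives up after a number of attempts.
wait_for_http() {
  url="${1:-http://localhost:8888/}"
  attempts="${2:-30}"   # how many times to try
  delay="${3:-2}"       # seconds to wait between tries
  i=1
  while [ "$i" -le "$attempts" ]; do
    if curl -fsS -o /dev/null --max-time 5 "$url"; then
      echo "up after $i attempt(s)"
      return 0
    fi
    sleep "$delay"
    i=$((i + 1))
  done
  echo "not reachable after $attempts attempts" >&2
  return 1
}
```

Calling `wait_for_http` with no arguments checks the default address for roughly a minute before giving up.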

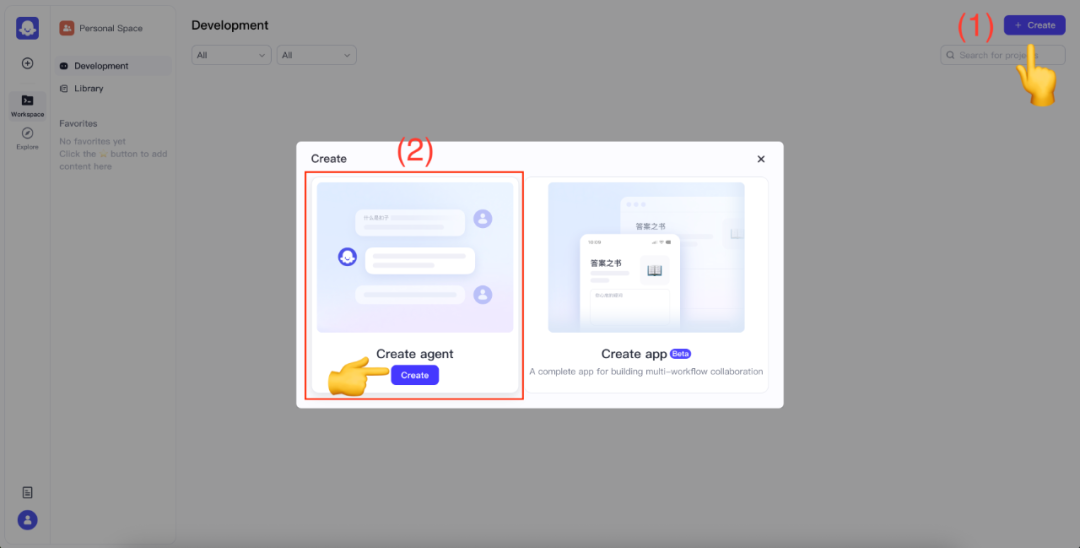

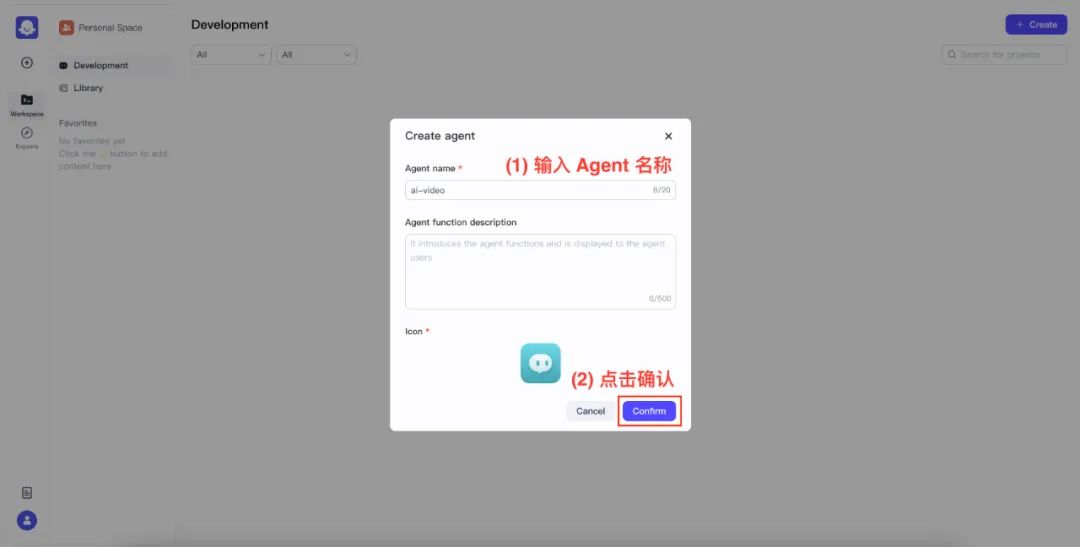

On first use, enter your email and password to register as a new user. After logging in, click the Create button in the upper right corner and select Create agent.

Name and confirm your Agent.

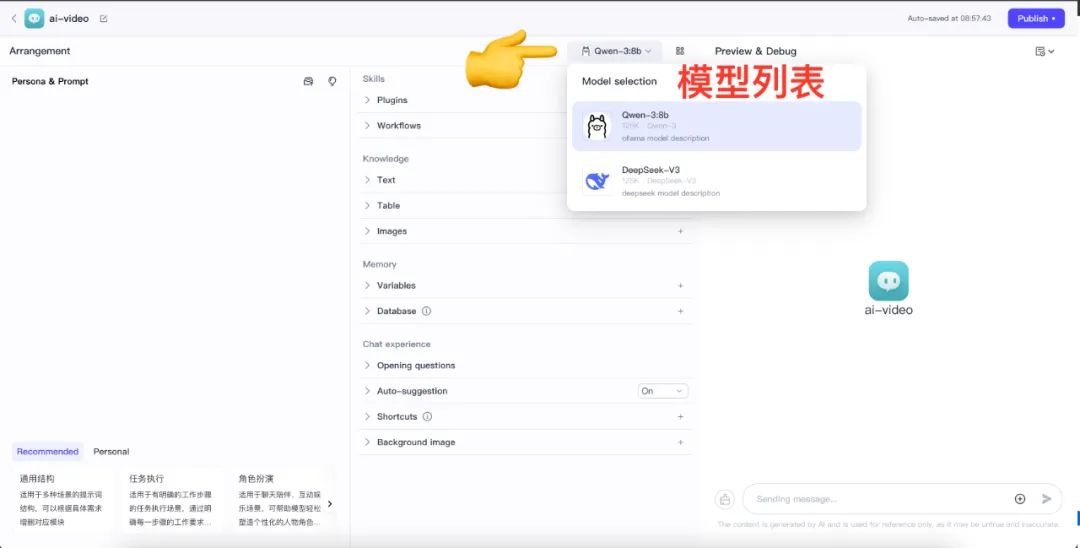

Once you are in the Agent Design screen, click on the Model List to see all the models that have been configured.

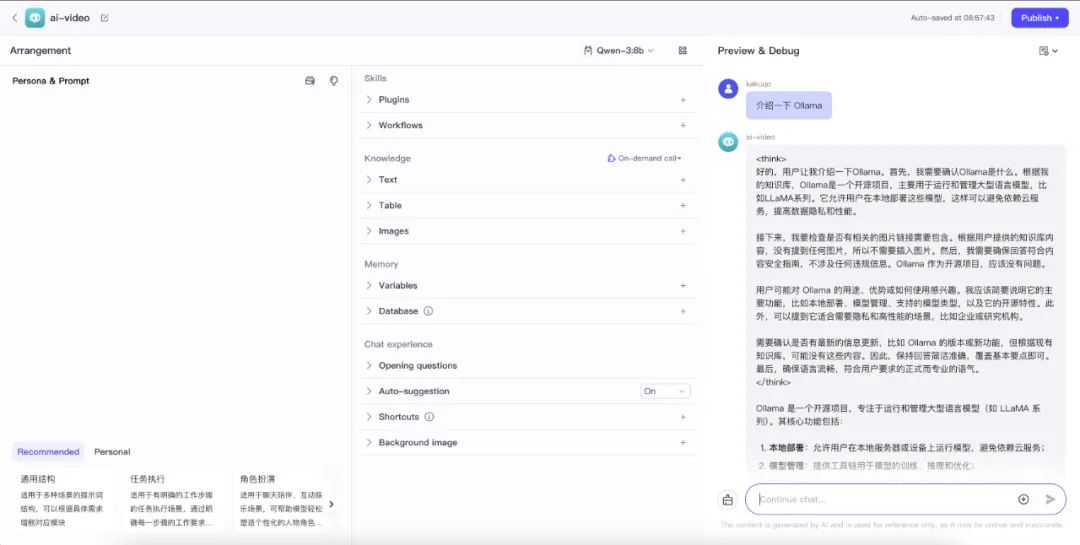

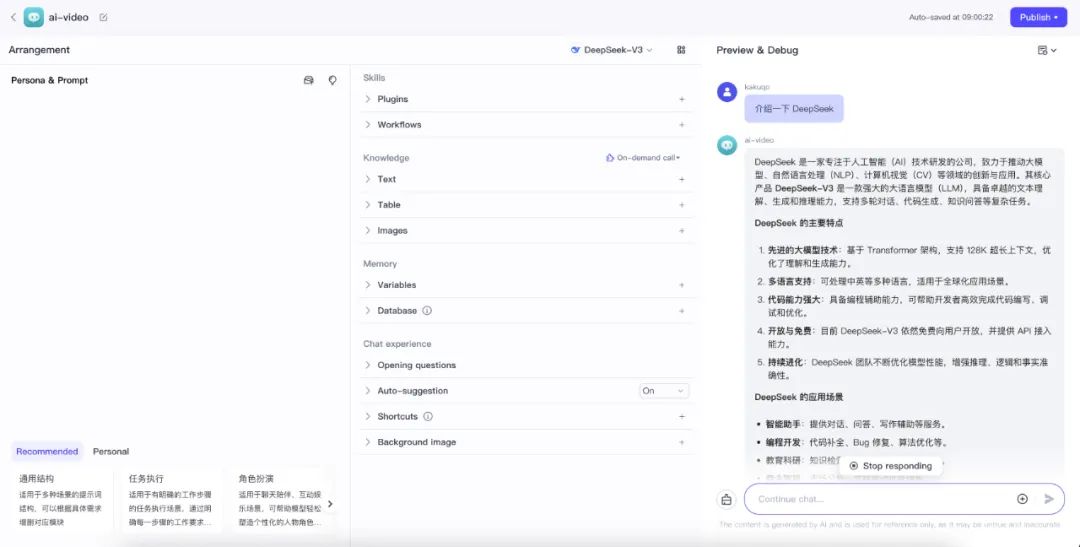

You can now test whether the model is working properly by using the chat box at the bottom right.

- Test the local Ollama qwen:14b service

- Test the online OpenRouter DeepSeek-V2 service

If a call fails, check the coze-server container's logs in Docker Desktop to troubleshoot.
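The same logs can also be read from a terminal. The sketch below assumes it is run from the project's docker directory, where the compose file lives, and reuses the coze-server service name from the restart command above. The function name show_server_errors and the keyword filter are illustrative only, and real log lines may use different wording.

```shell
# Shows recent coze-server log lines that look like errors.
# Assumption: run from the docker directory that contains docker-compose.yml.
show_server_errors() {
  lines="${1:-200}"   # how many recent log lines to scan
  docker compose --profile '*' logs --no-color --tail "$lines" coze-server 2>&1 \
    | grep -i -E 'error|fail|panic'
}
```

`show_server_errors 500` scans the last 500 lines. If nothing matches, the function prints nothing and returns a non-zero status.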