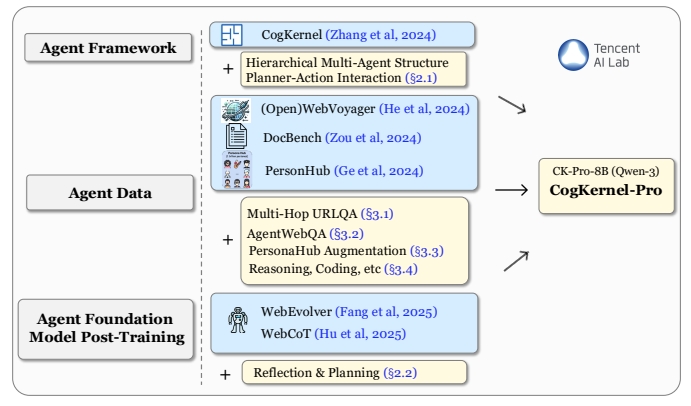

Cognitive Kernel-Pro is an open-source framework developed by Tencent AI Lab for building deep research agents and training agent foundation models. It integrates web browsing, file processing, and code execution to support complex research tasks. The framework relies on free tools so that it stays fully open source and accessible. With a simple setup, users can deploy and run agents locally to handle tasks such as real-time information retrieval, file management, and code generation. The project performs well on the GAIA benchmark, matching or even surpassing some closed-source systems. The code and models are fully open source and hosted on GitHub to encourage community contributions and further research.

Feature List

- Web Browsing: Agents can retrieve real-time information from the web by controlling a browser, for example by clicking and typing.

- File Processing: Supports reading and processing local files to extract key data or generate reports.

- Code Execution: Automatically generates and runs Python code for data analysis or task automation.

- Modular Design: Provides a multi-module architecture that lets users combine modules to customize an agent's capabilities.

- Open-Source Model Support: Integrates Qwen3-8B-CK-Pro and other open-source models to reduce usage costs.

- Task Planning: Agents can generate task plans from user instructions and break complex tasks into smaller steps.

- Secure Sandbox Operation: Supports executing code in a sandboxed environment for safer operation.

Usage Guide

Installation

To use Cognitive Kernel-Pro, complete the following installation and configuration steps in your local environment. The detailed process below should help you get started quickly.

1. Environment preparation

First, make sure Python 3.8 or higher and Git are installed. Linux or macOS is recommended; Windows users will need to install some additional dependencies. The basic steps are:

- Clone the project: Clone the Cognitive Kernel-Pro repository from GitHub:

```bash
git clone https://github.com/Tencent/CognitiveKernel-Pro.git
cd CognitiveKernel-Pro
```

- Install Python dependencies: Run the following command to install the necessary Python packages:

```bash
pip install boto3 botocore openai duckduckgo_search rich numpy openpyxl biopython mammoth markdownify pandas pdfminer-six python-pptx pdf2image puremagic pydub SpeechRecognition bs4 youtube-transcript-api requests transformers protobuf langchain_openai langchain selenium helium smolagents
```

- Install system dependencies (Linux): These tools support file conversion and multimedia processing:

```bash
apt-get install -y poppler-utils default-jre libreoffice-common libreoffice-java-common libreoffice ffmpeg
```

- Set the Python path: Add the project path to the PYTHONPATH environment variable:

```bash
export PYTHONPATH=/your/path/to/CogKernel-Pro
```
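Before you move on, you can check that the prerequisites are in place. The following minimal Python sketch is not part of the project. It checks the interpreter version and looks on PATH for one executable per tool mentioned above. The package-to-executable mapping (for example, `pdftoppm` for poppler-utils and `soffice` for LibreOffice) is an assumption about typical Linux installs.

```python
import shutil
import sys

# Assumed mapping from each prerequisite above to an executable it typically
# provides on Linux; adjust it if your distribution packages things differently.
REQUIRED_TOOLS = {
    "git": "git",
    "poppler-utils": "pdftoppm",
    "default-jre": "java",
    "libreoffice": "soffice",
    "ffmpeg": "ffmpeg",
}


def check_prerequisites(min_python=(3, 8)):
    """Return (python_ok, missing), where missing lists the prerequisites
    whose executable could not be found on PATH."""
    python_ok = sys.version_info[:2] >= min_python
    missing = [pkg for pkg, exe in REQUIRED_TOOLS.items() if shutil.which(exe) is None]
    return python_ok, missing


if __name__ == "__main__":
    ok, missing = check_prerequisites()
    print("Python version OK" if ok else "Python 3.8+ required")
    for pkg in missing:
        print(f"missing: {pkg}")
```

Finding an executable on PATH does not confirm that it is the correct version. The check only catches the most common setup gaps.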

2. Configure the model service

Cognitive Kernel-Pro supports vLLM or TGI (Text Generation Inference) model servers for running open-source models. The following example uses vLLM:

- Install vLLM:

```bash
pip install vllm
```

- Start the model service: Assuming you have downloaded the Qwen3-8B-CK-Pro model weights, run the following command to start the service:

```bash
python -m vllm.entrypoints.openai.api_server --model /path/to/downloaded/model --worker-use-ray --tensor-parallel-size 8 --port 8080 --host 0.0.0.0 --trust-remote-code --max-model-len 8192 --served-model-name ck
```

`--tensor-parallel-size 8` splits the model across 8 GPUs, so set it to match the GPUs you want to use. `--served-model-name ck` exposes the model under the name `ck`.
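vLLM's API server is OpenAI-compatible, so you can smoke-test the service with a plain HTTP request. The sketch below uses only the standard library. It assumes the server from the command above is listening on port 8080 with the model name `ck`. `build_payload` and `chat` are illustrative helper names, not part of Cognitive Kernel-Pro.

```python
import json
import urllib.request

# Assumption: the vLLM server started above listens locally on port 8080
# and serves the model under the name "ck" (--served-model-name ck).
ENDPOINT = "http://localhost:8080/v1/chat/completions"


def build_payload(prompt, model="ck", max_tokens=256):
    """Build an OpenAI-style chat completion request body."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }


def chat(prompt, endpoint=ENDPOINT, timeout=60):
    """POST a single-turn chat request and return the first reply's text."""
    data = json.dumps(build_payload(prompt)).encode("utf-8")
    request = urllib.request.Request(
        endpoint,
        data=data,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

With the server running, `chat("Reply with one word: ready")` should return the model's reply. A connection error means the service is not reachable yet.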

3. Configure the web browser service

The project uses Playwright to provide web browsing. Run the following script to start the browser service:

```bash
sh ck_pro/ck_web/_web/run_local.sh
```

Make sure the service is running at its default address, localhost:3001.
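A quick way to confirm that something is listening on the expected port is to open a TCP connection. The helpers below are hypothetical. A successful check only shows that the port accepts connections, not that the browser service is healthy. The same check also works for the model server on port 8080.

```python
import socket


def parse_host_port(address):
    """Split 'host:port' into (host, int(port))."""
    host, _, port = address.rpartition(":")
    return host, int(port)


def is_service_up(address, timeout=2.0):
    """Return True if a TCP connection to address succeeds, False otherwise."""
    host, port = parse_host_port(address)
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    for addr in ("localhost:3001", "localhost:8080"):
        print(addr, "up" if is_service_up(addr) else "down")
```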

4. Running the main program

After preparing the model and the browser service, run the main program to execute the tasks:

```bash
NO_NULL_STDIN=1 python3 -u -m ck_pro.ck_main.main --updates "{'web_agent': {'model': {'call_target': 'http://localhost:8080/v1/chat/completions'}, 'web_env_kwargs': {'web_ip': 'localhost:3001'}}, 'file_agent': {'model': {'call_target': 'http://localhost:8080/v1/chat/completions'}}}" --input /path/to/simple_test.jsonl --output /path/to/simple_test.output.jsonl |& tee _log_simple_test
```

`--input` specifies the task input file in JSON Lines format, with one task description per line. `--output` specifies the path of the output file where the agent's results are saved. `|& tee _log_simple_test` (bash syntax) also writes stdout and stderr to a log file.
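The `--updates` value is written as a Python dict literal with single-quoted keys. This suggests the program parses it as Python rather than JSON, though that is an assumption here. The sketch below builds the same value with `repr()` and assembles the command as an argument list, which avoids hand-escaping quotes. `build_updates` and `build_command` are hypothetical helpers.

```python
import shlex

# Same endpoints as in the command above; change them if your services run elsewhere.
MODEL_URL = "http://localhost:8080/v1/chat/completions"
WEB_IP = "localhost:3001"


def build_updates(model_url=MODEL_URL, web_ip=WEB_IP):
    """Build the nested dict that is passed (as its repr) to --updates."""
    return {
        "web_agent": {
            "model": {"call_target": model_url},
            "web_env_kwargs": {"web_ip": web_ip},
        },
        "file_agent": {"model": {"call_target": model_url}},
    }


def build_command(input_path, output_path):
    """Return the argv list for running the main program."""
    return [
        "python3", "-u", "-m", "ck_pro.ck_main.main",
        "--updates", repr(build_updates()),
        "--input", input_path,
        "--output", output_path,
    ]


if __name__ == "__main__":
    cmd = build_command("/path/to/simple_test.jsonl", "/path/to/simple_test.output.jsonl")
    # Print a shell-quoted version; NO_NULL_STDIN=1 must still be set in the environment.
    print("NO_NULL_STDIN=1 " + shlex.join(cmd))
```

You can also pass the list directly to `subprocess.run` with `NO_NULL_STDIN` added to the environment, which runs the program without a shell. In that case, the `tee` logging must be handled separately.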

5. Precautions

- Safety: Run the framework in a sandboxed environment with sudo privileges disabled to avoid potential risks:

```bash
echo "${USER}" 'ALL=(ALL) NOPASSWD: !ALL' | tee /etc/sudoers.d/${USER}-rule
chmod 440 /etc/sudoers.d/${USER}-rule
deluser ${USER} sudo
```

- Debug mode: Add `-m pdb` to the `python3` command (for example, `python3 -u -m pdb -m ck_pro.ck_main.main ...`) to enter debug mode for troubleshooting.

Main Functions

Web Browsing

Cognitive Kernel-Pro's web browsing function lets agents interact with web pages much as a human would. The user provides a task description (e.g., "Find the latest commits to a repository on GitHub"). The agent then opens a browser and clicks, types, and so on to obtain the desired information. The workflow is as follows:

- Define tasks in the input file, for example:

```json
{"task": "find the latest commit details of a popular GitHub repository"}
```

- The agent generates a task plan, launches the Playwright browser, and visits the target web page.

- It extracts the page content and returns the results, saving them to the output file.
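Because the input is JSON Lines, you can generate a task file programmatically. This is a minimal sketch that assumes each line needs only the `task` field shown above. `write_tasks` and `read_jsonl` are illustrative names. `read_jsonl` can also load the output file, whose field names are not documented here.

```python
import json


def write_tasks(path, tasks):
    """Write one {"task": ...} object per line (JSON Lines)."""
    with open(path, "w", encoding="utf-8") as f:
        for task in tasks:
            f.write(json.dumps({"task": task}, ensure_ascii=False) + "\n")


def read_jsonl(path):
    """Read a JSON Lines file into a list of dicts, skipping blank lines."""
    with open(path, encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]
```

For example, `write_tasks("simple_test.jsonl", ["find the latest commit details of a popular GitHub repository"])` produces a file you can pass to `--input`.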

File Processing

The file processing function supports reading and analyzing files in multiple formats (e.g., PDF, Excel, Word). Users can specify file paths and tasks, such as extracting table data from a PDF:

- Prepare the input file, specifying the file path and task:

```json
{"task": "extract table data from /path/to/document.pdf"}
```

- The agent calls the file processing module to parse the file and extract the data.

- The results are saved to the output file in a structured format such as JSON or CSV.
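The dependency list installed earlier includes a parser library for each of these formats, which hints at routing files by extension. The sketch below is a hypothetical illustration of that idea only. The mapping is inferred from the pip packages and is not taken from the project's source.

```python
from pathlib import Path

# Hypothetical routing table inferred from the pip dependencies listed earlier;
# the framework's actual file module may choose parsers differently.
PARSERS = {
    ".pdf": "pdfminer.six",
    ".xlsx": "openpyxl",
    ".docx": "mammoth",
    ".pptx": "python-pptx",
    ".md": "plain text",
}


def pick_parser(path):
    """Return the parser name for a file's extension, or None if unsupported."""
    return PARSERS.get(Path(path).suffix.lower())
```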

Code Execution

The code execution function lets agents generate and run Python code for data analysis or automation tasks, for example analyzing the data in a CSV file:

- Enter a description of the task:

```json
{"task": "analyze sales data in /path/to/sales.csv and generate a summary"}
```

- The agent generates Python code that processes the data with libraries such as pandas.

- The code is executed in a sandboxed environment, and the results are saved to the output file.
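The project's sandbox implementation is not described here. As a rough illustration of one layer of isolation, the sketch below runs a generated code string in a separate Python process. It uses isolated mode (`-I`), a throwaway working directory, and a timeout. This is process separation only, not a security boundary, so the Docker and sudo measures described in this guide are still needed. `run_generated_code` is a hypothetical name.

```python
import subprocess
import sys
import tempfile


def run_generated_code(code, timeout=30):
    """Run a code string in a separate Python process inside a temporary
    working directory and return (returncode, stdout, stderr).

    Raises subprocess.TimeoutExpired (after killing the child) if the code
    runs longer than `timeout` seconds. This is NOT a security sandbox."""
    with tempfile.TemporaryDirectory() as workdir:
        result = subprocess.run(
            [sys.executable, "-I", "-c", code],  # -I: ignore env vars and user site-packages
            cwd=workdir,
            capture_output=True,
            text=True,
            timeout=timeout,
        )
    return result.returncode, result.stdout, result.stderr
```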

Task Planning

The agent generates an execution plan based on the complexity of the task. For example, for "Find and summarize the contents of a paper":

- The agent decomposes the task into sub-steps: search for the paper, download the PDF, extract key information, and generate a summary.

- Each sub-step calls the appropriate module (web browsing, file processing, etc.).

- The final result is output in the user-specified format.
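You can think of plan execution as an ordered list of steps. Each step is routed to a module handler and can see the previous step's result. The sketch below is a hypothetical model of that flow with stub handlers. The `Step` type, the module names, and the handler signature are assumptions, not the framework's API.

```python
from dataclasses import dataclass


@dataclass
class Step:
    module: str       # hypothetical module key, e.g. "web" or "file"
    instruction: str  # natural-language description of the sub-step


def execute_plan(steps, handlers):
    """Run steps in order, routing each to the handler for its module and
    passing along the previous step's result; return every step's result."""
    results = []
    previous = None
    for step in steps:
        handler = handlers[step.module]  # KeyError if no handler is registered
        previous = handler(step.instruction, previous)
        results.append(previous)
    return results


# Illustrative plan for "Find and summarize the contents of a paper".
plan = [
    Step("web", "search for the paper"),
    Step("web", "download the PDF"),
    Step("file", "extract key information"),
    Step("file", "generate a summary"),
]

# Stub handlers that simply echo the module name and instruction.
stubs = {
    name: (lambda instruction, previous, name=name: f"{name}: {instruction}")
    for name in ("web", "file")
}

if __name__ == "__main__":
    for result in execute_plan(plan, stubs):
        print(result)
```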

Featured Functions

Open-Source Model Support

Cognitive Kernel-Pro integrates the Qwen3-8B-CK-Pro model, which performs well on the GAIA benchmark. Users can download the model weights directly, which removes the need for closed-source APIs and reduces costs. Multimodal tasks (e.g., image processing) are also supported. To use them, configure VLM_URL to point to a multimodal model service.

Secure Sandbox Operation

To mitigate the risks of code execution, run the framework in a sandboxed environment. Users can deploy it with Docker to isolate code execution:

```bash
docker run --rm -v /path/to/CogKernel-Pro:/app -w /app python:3.8 bash -c "pip install -r requirements.txt && python -m ck_pro.ck_main.main"
```

Application Scenarios

- Academic research

With Cognitive Kernel-Pro, users can search for academic papers, extract key information, and generate summaries. For example, given the instruction "find the latest papers in the field of AI and summarize them", the agent searches arXiv and downloads the papers' PDFs. It then extracts the abstracts and conclusions and generates a concise report.

- Data analysis

Business users can use the framework to analyze local data files. For example, it can process CSV files of sales data to generate statistical charts and trend analysis reports for quick business insights.

- Automated tasks

Developers can use agents to automate repetitive tasks, such as batch-downloading web data, organizing file contents, or running code tests, to increase productivity.

- Open-source community development

Community developers can help improve the framework by building custom agents on top of it and extending its modules, for example by adding new file parsers or integrating other open-source models.

QA

- Does Cognitive Kernel-Pro require a paid API?

No. The framework uses fully open-source tools and models (e.g., Qwen3-8B-CK-Pro) with no dependency on paid APIs. Users can simply download the model weights and deploy them locally.

- How can code be executed safely?

Run the framework in a sandboxed environment (e.g., Docker), disable sudo permissions, and restrict network access. The project documentation provides detailed security configuration guidelines.

- What file formats are supported?

Common formats such as PDF, Excel, Word, Markdown, and PPTX are supported. The file processing module can extract text, tables, or image data.

- How are complex tasks handled?

The agent breaks complex tasks into subtasks, generates an execution plan, and invokes the web browsing, file processing, or code execution modules to complete them step by step.

- Are multimodal tasks supported?

Yes. Multimodal tasks such as image processing or video analysis are supported. Users need to configure VLM_URL and provide a multimodal model.