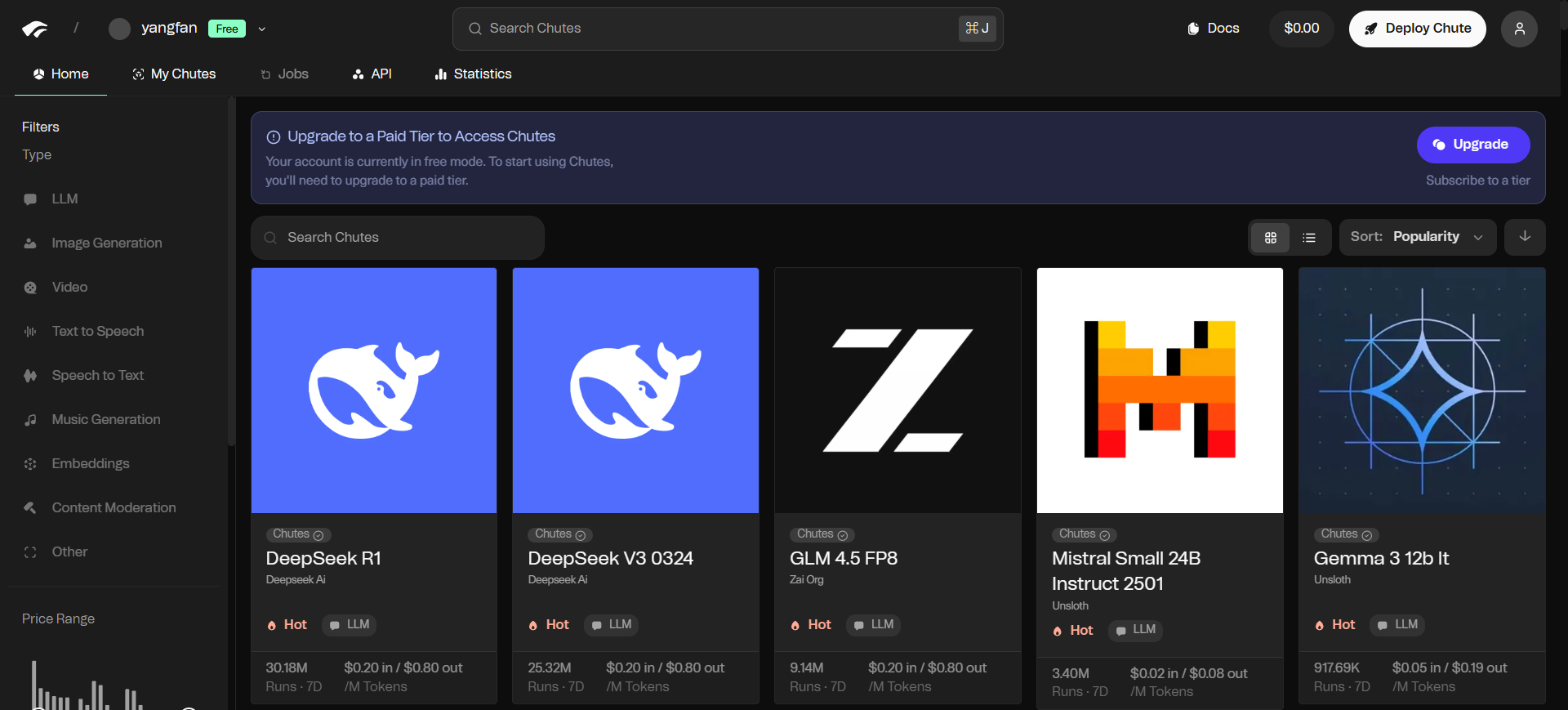

Chutes is an AI model compute platform for developers. It is built on a decentralized, open source architecture, so users do not need to manage servers themselves. Developers can use it to quickly deploy and run a variety of open source AI models, such as large language models or image generation models. At its core, Chutes.ai provides a serverless computing environment: users upload their code and the platform handles running and scaling it. Billing is pay-per-use, which keeps costs down for startups and individual researchers who need flexible computing resources. The platform pools GPU resources from around the world to provide stable and efficient compute for AI inference tasks.

Feature List

- Serverless deployment: Users can deploy any AI model without server configuration and management.

- Rapid scaling: The system automatically adjusts computing resources based on workload demands.

- Support for multiple models: The platform is not limited to Large Language Models (LLMs), but also supports a wide range of AI applications such as images, videos, speech, music and 3D models.

- Customized environments: Users can upload their own code or use Docker containers to run any open source model.

- API first: Provides a clean API for easy integration of its functionality into any application or system.

- Model library: The platform offers the latest open source models, often shortly after they are released.

- Pay as you go: Billing is based on the actual volume of compute requests, and several subscription tiers are available.

- Decentralized network: Built on the Bittensor network, it uses distributed GPU resources to provide compute services.

Usage Guide

The Chutes.ai platform simplifies the process of deploying and using AI models, allowing developers without specialized knowledge of cloud service management to get up to speed quickly. At its core, it provides a serverless environment where users only need to focus on the models and the code itself.

Registering and Obtaining API Keys

- Visit the official Chutes.ai website and create an account.

- Once logged in, find the API Management section in your account dashboard or settings page.

- Generate a new API key. This key is your credential for accessing the platform's computing resources, so keep it safe.

How to use the model through the API

Chutes.ai is compatible with OpenAI's API format, which makes switching and integration very easy. If you have used OpenAI's API before, you will need to make very few code changes.
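As a rough illustration of how small the change can be, the sketch below models the connection settings as a small data class and swaps in the Chutes values. The ChatEndpoint type and the OpenAI-side placeholder values are assumptions made for illustration; only the Chutes URL and model name come from this article.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class ChatEndpoint:
    """Connection settings for an OpenAI-style chat API (hypothetical type)."""
    url: str
    api_key: str
    model: str


# Placeholder settings for an existing OpenAI integration (assumed values).
openai_cfg = ChatEndpoint(
    url="https://api.openai.com/v1/chat/completions",
    api_key="YOUR_OPENAI_API_KEY",
    model="gpt-4o-mini",
)

# Switching providers changes these three values; the request body layout
# (a "messages" list of role/content pairs) stays the same.
chutes_cfg = replace(
    openai_cfg,
    url="https://llm.chutes.ai/v1/chat/completions",
    api_key="YOUR_CHUTES_API_KEY",
    model="deepseek-ai/DeepSeek-V3",
)
```

The steps that follow cover each of the three values in turn.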

Step 1: Set the request address

Replace the request URL in your code with the endpoint provided by Chutes.ai:

https://llm.chutes.ai/v1/chat/completions

Step 2: Configure the API key

Pass your API key as a Bearer token in the request's Authorization header.

Authorization: Bearer YOUR_CHUTES_API_KEY

Step 3: Specify the model

In the JSON request body, the "model" field specifies which model to use. For example, to use one of DeepSeek's models, set it to:

"model": "deepseek-ai/DeepSeek-V3"

The following is an example of sending a request using cURL:

curl -X POST https://llm.chutes.ai/v1/chat/completions \
  -H "Content-Type: application/json" \
  -H "Authorization: Bearer YOUR_CHUTES_API_KEY" \
  -d '{
    "model": "deepseek-ai/DeepSeek-V3",
    "messages": [
      {
        "role": "system",
        "content": "You are a helpful assistant."
      },
      {
        "role": "user",
        "content": "Hello! Can you tell me a joke?"
      }
    ]
  }'

Replace YOUR_CHUTES_API_KEY in the code with your own key.
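The same request can be sketched in Python using only the standard library. The helper names below are hypothetical, and the reply location (choices[0].message.content) is an assumption based on the OpenAI-compatible format described above, not something confirmed by the platform's documentation here.

```python
import json
import urllib.request

CHUTES_URL = "https://llm.chutes.ai/v1/chat/completions"


def build_chat_request(api_key, model, messages):
    """Build a POST request matching the cURL example (hypothetical helper)."""
    body = json.dumps({"model": model, "messages": messages}).encode("utf-8")
    headers = {
        "Content-Type": "application/json",
        "Authorization": f"Bearer {api_key}",
    }
    return urllib.request.Request(
        CHUTES_URL, data=body, headers=headers, method="POST"
    )


def extract_reply(response_text):
    """Read the assistant's text, assuming the OpenAI response layout."""
    payload = json.loads(response_text)
    choices = payload.get("choices") or []
    if not choices:
        raise ValueError("response contains no choices")
    return choices[0]["message"]["content"]


def ask(api_key, model, messages, timeout=60):
    """Send the request and return the reply text (performs a network call)."""
    request = build_chat_request(api_key, model, messages)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return extract_reply(response.read().decode("utf-8"))


# Build the request from the cURL example without sending it.
request = build_chat_request(
    "YOUR_CHUTES_API_KEY",
    "deepseek-ai/DeepSeek-V3",
    [
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "Hello! Can you tell me a joke?"},
    ],
)
```

Calling ask with a real key sends the request over the network; build_chat_request and extract_reply can be exercised offline.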

Use in third-party applications

Chutes.ai can also be easily integrated into third-party applications that support custom model interfaces, such as Janitor AI or KoboldAI.

The setup process is usually as follows:

- In the app's Settings, find the API or Model Configuration section.

- Select the "Custom" or "OpenAI-compatible" API type.

- In the API Address or Proxy URL field, enter the Chutes request address: https://llm.chutes.ai/v1/chat/completions

- In the API Key field, enter the key generated in your Chutes.ai account.

- In the Model Name field, enter the identifier of the model on the Chutes platform you wish to use, for example deepseek-ai/DeepSeek-V3.

Once the settings are saved, the app runs the AI model through Chutes.ai's computational network.
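Before saving, the three values can be sanity-checked with a few lines of Python. This is a minimal sketch: the field names api_url, api_key, and model are hypothetical (each app names them differently), and the owner/name check on the model identifier is an assumption drawn from the deepseek-ai/DeepSeek-V3 example.

```python
from urllib.parse import urlparse


def check_settings(settings):
    """Return a list of problems found in a third-party app's API settings.

    The keys api_url, api_key, and model are hypothetical field names.
    """
    problems = []
    url = urlparse(settings.get("api_url", ""))
    if url.scheme != "https" or not url.netloc:
        problems.append("api_url must be a full https:// address")
    if not settings.get("api_key", "").strip():
        problems.append("api_key is empty")
    # Assumption: model identifiers follow the owner/name pattern.
    if "/" not in settings.get("model", ""):
        problems.append("model should look like owner/name")
    return problems


settings = {
    "api_url": "https://llm.chutes.ai/v1/chat/completions",
    "api_key": "YOUR_CHUTES_API_KEY",
    "model": "deepseek-ai/DeepSeek-V3",
}
problems = check_settings(settings)  # an empty list means nothing was flagged
```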

Deploy your own model

For advanced users who need to run custom models, Chutes.ai allows uploading your own code or Docker containers. You can find detailed instructions on how to package and deploy custom "Chutes" (containers) in their official documentation. This process usually requires you to package the model and related dependencies into a standard container image, which can then be uploaded and deployed through the platform's tools.

Application Scenarios

- Rapid prototyping of AI applications

For startups or developers who need to validate an idea quickly, Chutes.ai provides an environment where they do not have to worry about the underlying infrastructure. Developers can focus entirely on application logic and user experience, and quickly build a functional prototype using the platform's existing open source models.

- Extending AI capabilities in existing applications

If an existing website or app needs AI functionality (such as smart customer service, content recommendations, or text summarization), developers can add model capabilities to their products by calling the Chutes.ai API, without incurring high hardware and maintenance costs.

- Academic research and experimentation

Researchers often need to test different AI models or algorithms. Chutes.ai's pay-as-you-go pricing and flexible model support suit this kind of work. Researchers can call the latest open source models for experiments at any time, at a manageable cost, and without spending time on environment configuration.

- Handling large-scale data tasks

For tasks that require large-scale text embedding, data analysis, or image processing, Chutes.ai's upcoming "Long Jobs" feature is intended to let users submit batch tasks and use the platform's distributed computing resources to complete their work efficiently.

FAQ

- How is Chutes.ai different from the AI services offered by traditional cloud providers (e.g. AWS, Google Cloud)?

Traditional cloud providers usually require users to configure and manage virtual machines, container instances, and networks themselves, which is a complicated process. Chutes.ai provides serverless computing that hides these underlying technical details. Users deploy and invoke models directly, and the platform automatically handles resource allocation and scaling, which greatly lowers the barrier to entry.

- What does "decentralized" mean on the platform?

"Decentralized" means that Chutes.ai's computing resources do not come from a single data center, but from a network of GPU providers distributed around the world. This structure improves the stability and resilience of the system, and in theory provides more cost-effective computing through competition among providers.

- Is my data safe when running models on Chutes.ai?

Chutes.ai secures users' code by executing it in an isolated environment. The platform also plans to introduce confidential computing based on a Trusted Execution Environment (TEE), which will provide stronger data privacy protection for users working with sensitive data.

- What if the model I want to use is not available on the platform?

Chutes.ai allows users to add their own models. You can package any open source model into a Docker container and deploy it on the platform.