LMCache is an open source key-value (KV) cache optimization tool designed to improve the efficiency of Large Language Model (LLM) inference. It significantly reduces inference time and GPU resource consumption by caching and reusing the model's intermediate computation results (the KV cache), which makes it especially suitable for long-context scenarios. LMCache works with inference engines such as vLLM...

FastDeploy is an open source tool developed by the PaddlePaddle team, focused on rapid deployment of deep learning models. It supports a variety of hardware and frameworks and covers more than 20 scenarios across image, video, text, and speech, with more than 150 mainstream models. FastDeploy provides production environments out-of-the-box part...

Web is an open source macOS browser project developed by nuance-dev and hosted on GitHub. It is based on Apple's WebKit engine, uses the SwiftUI and Combine frameworks, and follows the MVVM architecture. The core feature of Web is the set of ...

Transformers is an open source machine learning framework developed by Hugging Face, focused on providing advanced model definitions that support inference and training for text, image, audio, and multimodal tasks. It simplifies the process of using models and is compatible with many mainstream deep learning frameworks such as PyTorch, Tensor...

Local LLM Notepad is an open source offline application that lets users run local large language models on any Windows computer from a USB device, with no internet connection and no installation. Users simply copy a single executable file (EXE) and a model file (e.g. in GGUF format) to a USB flash drive, which can be used with...

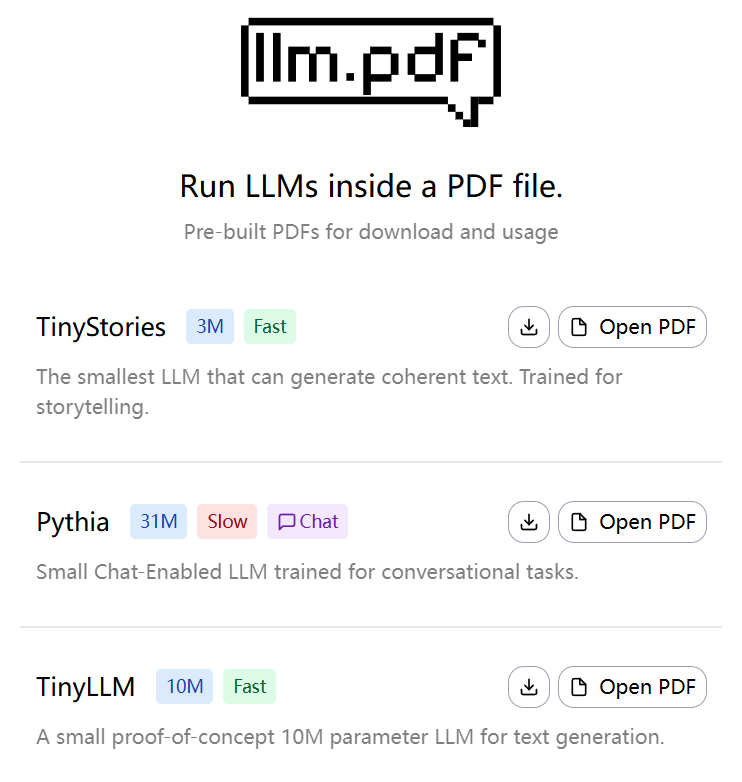

llm.pdf is an open source project that allows users to run Large Language Models (LLMs) directly in PDF files. This project, developed by EvanZhouDev and hosted on GitHub, demonstrates an innovative approach: compiling llama.cpp via Emscripten as ...

Aana SDK is an open source framework developed by Mobius Labs, named after the Malayalam word ആന (elephant). It helps developers quickly deploy and manage multimodal AI models, supporting the processing of text, images, audio, video, and other data. Aana SDK is based on the Ray distributed computing framework ...

BrowserAI is an open source tool that lets users run local AI models directly in the browser. It was developed by the Cloud-Code-AI team and supports models like Llama, DeepSeek, and Kokoro. Users can complete text generation through the browser without a server or complex setup...

LitServe is an open source AI model serving engine from Lightning AI, built on FastAPI and focused on rapidly deploying inference services for general-purpose AI models. It supports a wide range of scenarios, from large language models (LLMs), vision models, and audio models to classical machine learning models, providing batch...

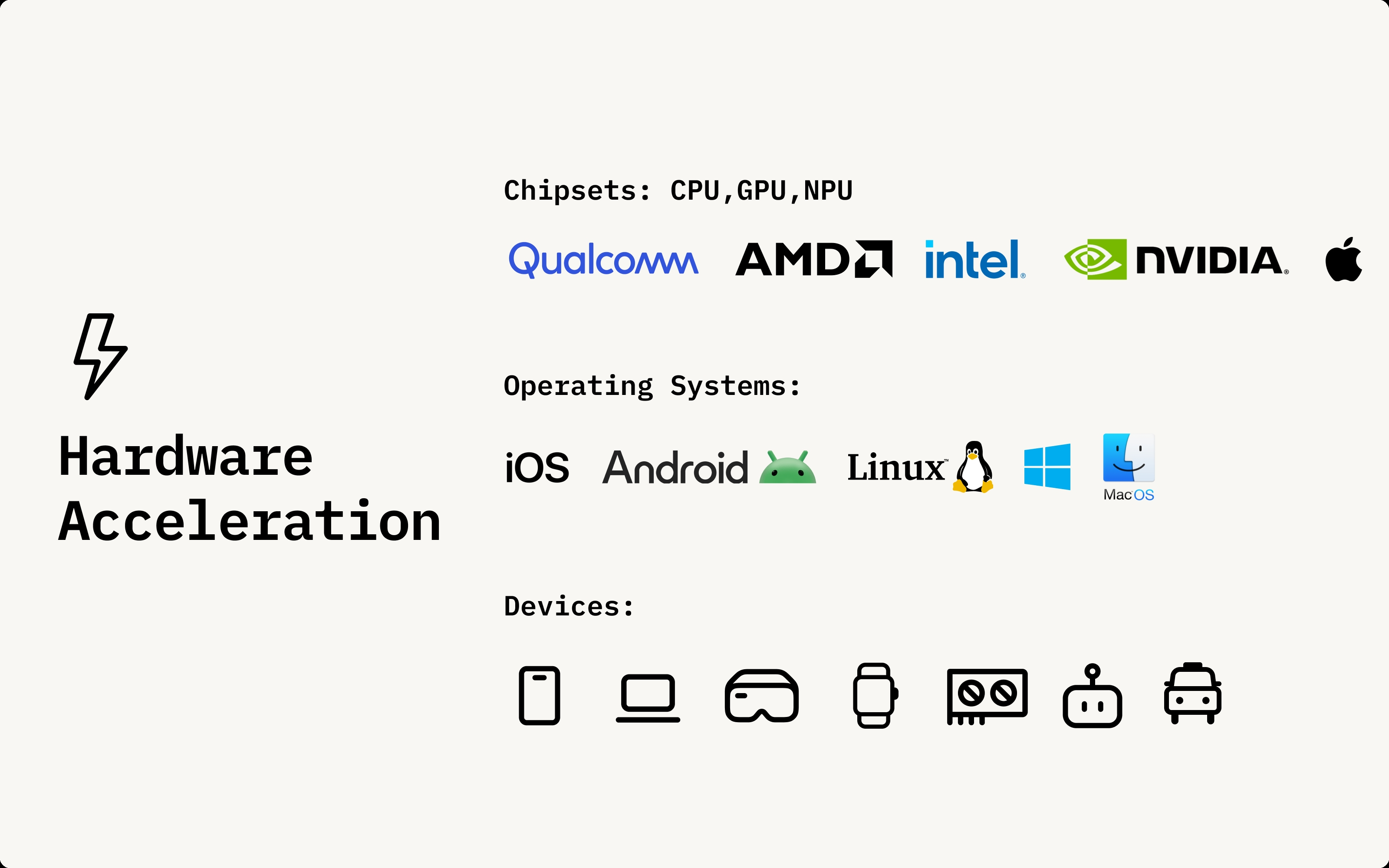

Nexa AI is a platform focused on multimodal AI solutions that run locally. It offers a wide range of AI models including Natural Language Processing (NLP), Computer Vision, Speech Recognition and Generation (ASR and TTS), all of which can be run locally on devices without relying on cloud services. This not only improves data privacy and security...

vLLM is a high-throughput, memory-efficient inference and serving engine designed for Large Language Models (LLMs). Originally developed by the Sky Computing Lab at UC Berkeley, it has become a community project driven by academia and industry. vLLM aims to provide fast, easy-to-use, and cost-effective L...

Transformers.js is a JavaScript library from Hugging Face, designed to run state-of-the-art machine learning models directly in the browser without server support. The library is compatible with Hugging Face's Python version of transformer...

Harbor is a revolutionary containerized LLM toolset focused on simplifying the deployment and management of local AI development environments. It enables developers to launch and manage all AI service components including LLM backend, API interfaces, and front-end interfaces with a single click through a concise command line interface (CLI) and supporting applications. As an open source project, H...

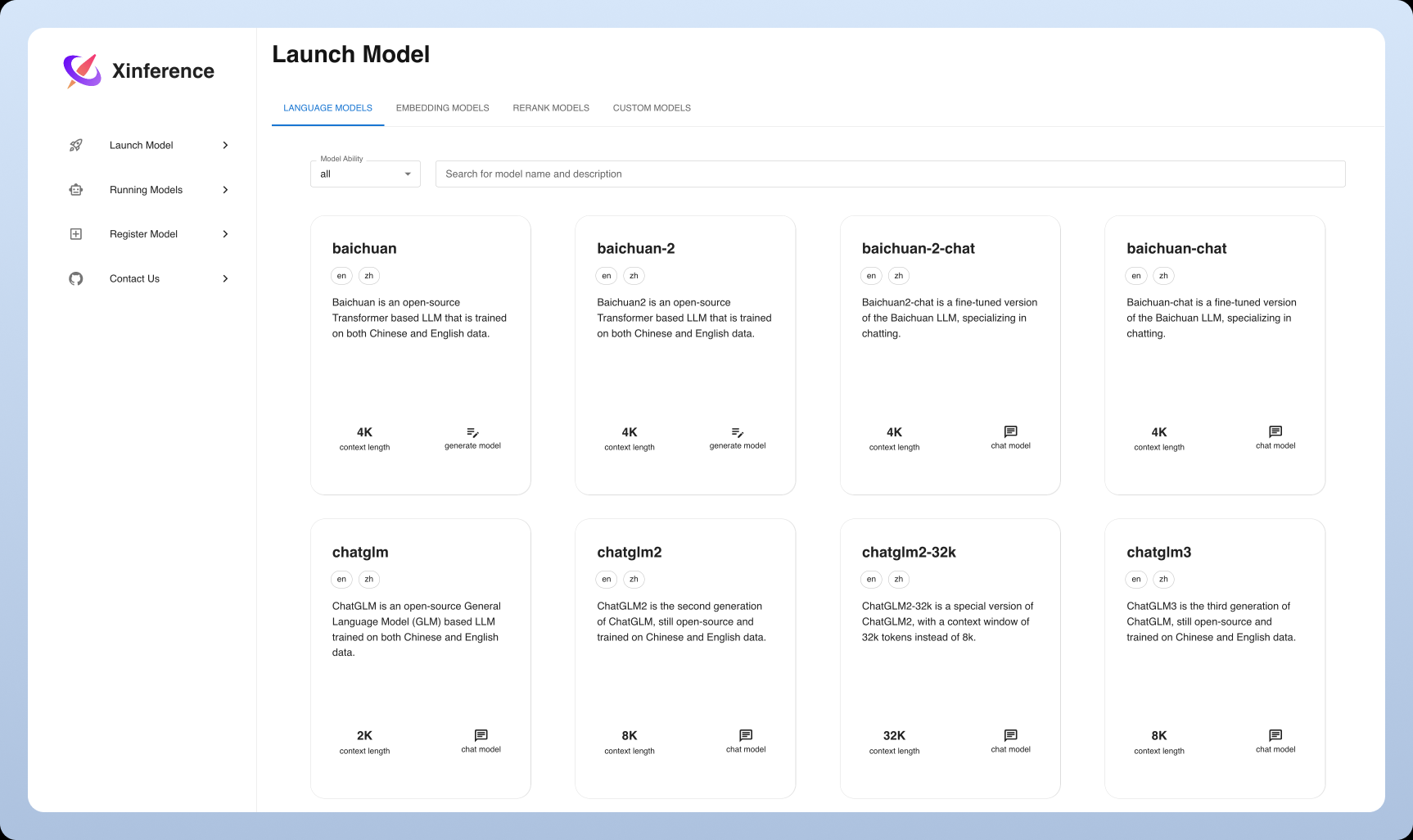

Xorbits Inference (Xinference for short) is a powerful and versatile library focused on providing distributed deployment and serving of language models, speech recognition models, and multimodal models. With Xorbits Inference, users can easily deploy and serve their own models or built-in advanced models,...

AI Dev Gallery is an AI development tools application from Microsoft (currently in public preview) designed for Windows developers. It provides a comprehensive platform to help developers easily integrate AI features into their Windows applications. The most notable feature of the tool is that it offers over 25...

LightLLM is a Python-based Large Language Model (LLM) inference and serving framework known for its lightweight design, ease of extension, and efficient performance. The framework leverages a variety of well-known open source implementations, including FasterTransformer, TGI, vLLM, and FlashAtten...

Transformers.js is a JavaScript library developed by Hugging Face to enable users to run state-of-the-art machine learning models directly in the browser without server support. The library is compatible with Hugging Face's Python trans...

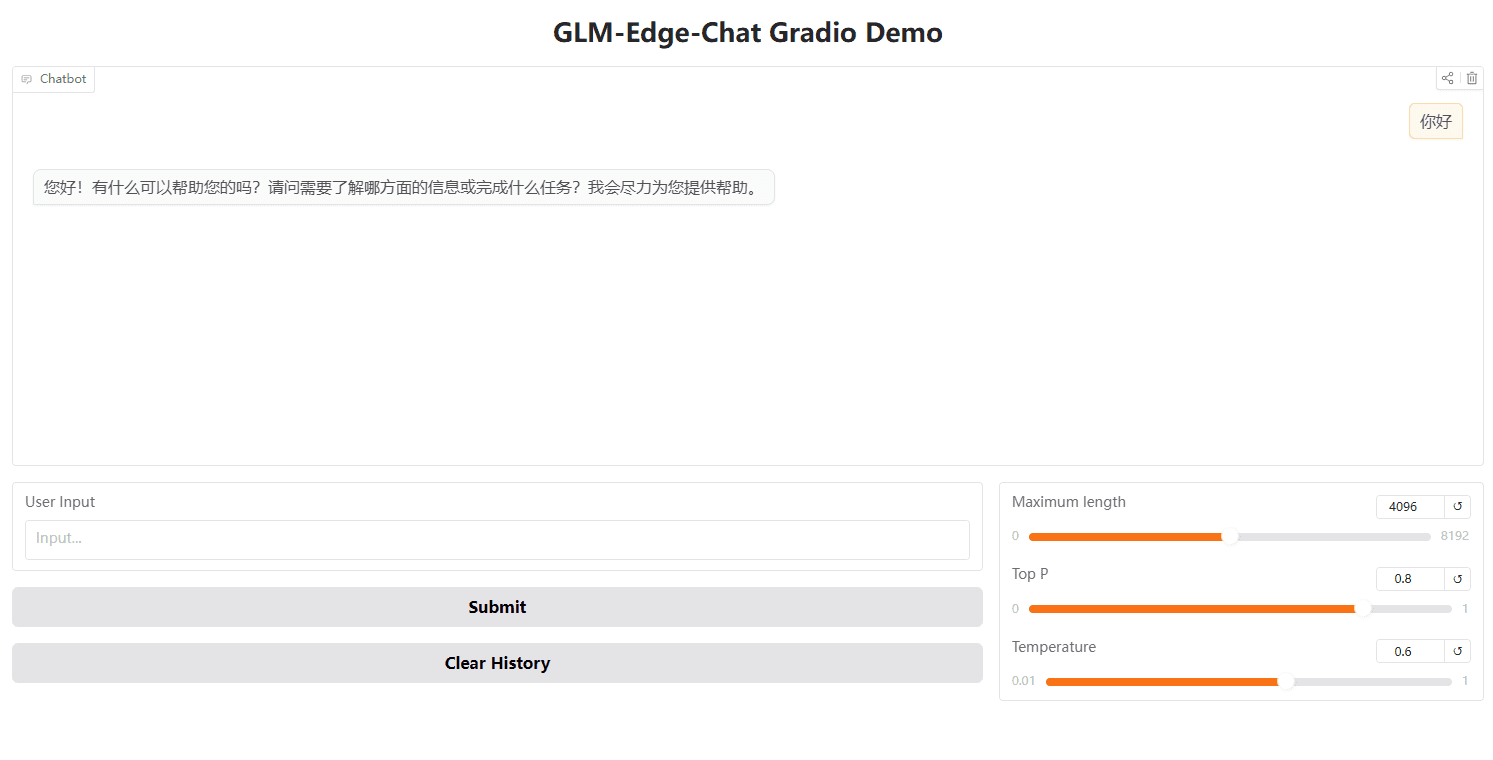

GLM-Edge is a series of large language models and multimodal understanding models designed for edge devices, from Tsinghua University (Zhipu AI). These models include GLM-Edge-1.5B-Chat, GLM-Edge-4B-Chat, GLM-Edge-V-2B and GLM-Edge-V-5...

Exo is an open source project that lets users run their own AI cluster on everyday devices (iPhone, iPad, Android, Mac, Linux, and so on). Through dynamic model partitioning and automated device discovery, Exo is able to unify multiple devices into a single powerful GPU, supporting multiple models such as LLaMA, Mistral...
