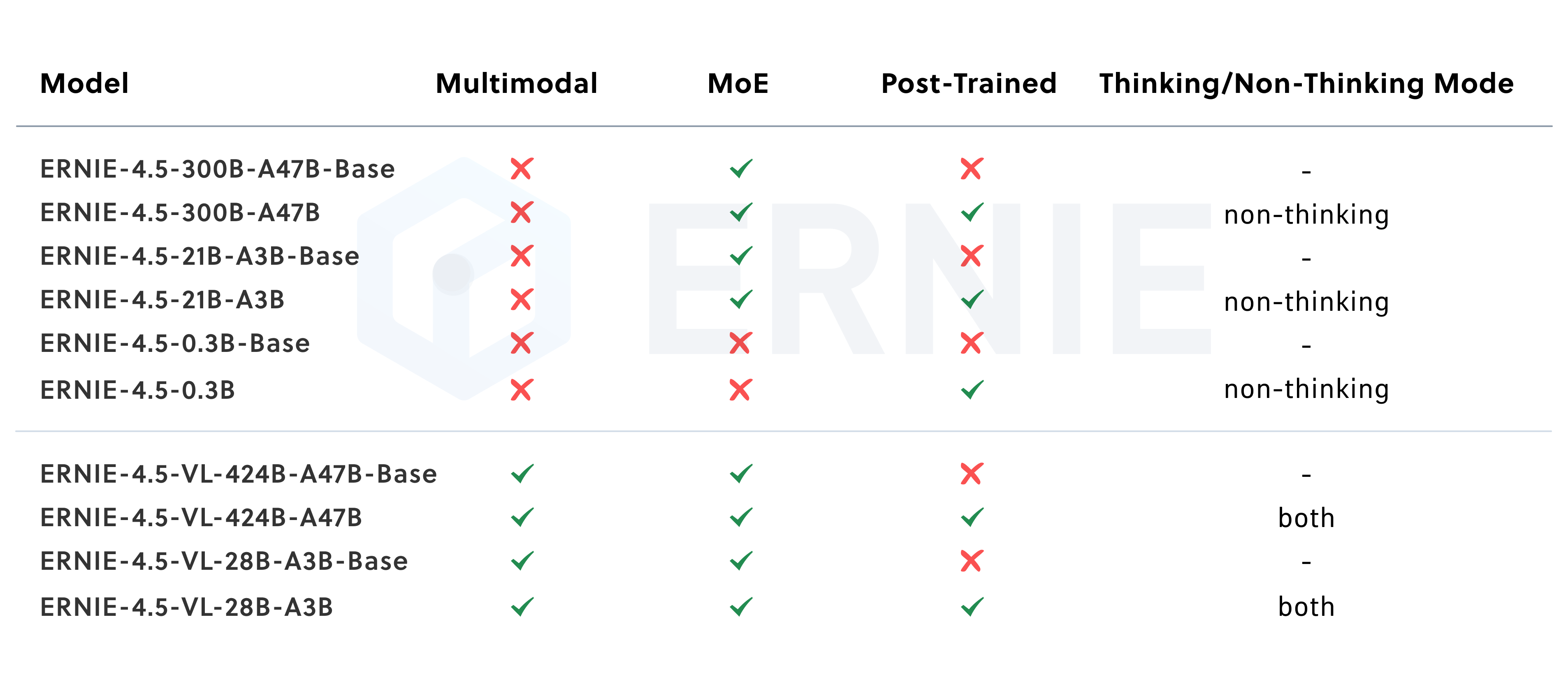

Baidu announced on June 30 that it has officially open-sourced ERNIE 4.5, its latest family of large-scale multimodal models. The family comprises 10 variants at different scales, is built around the Mixture-of-Experts (MoE) architecture, and comes with a full-stack offering that spans models, development kits, and deployment tools, all released under the Apache 2.0 license.

Core Technology: A Heterogeneous MoE Architecture Designed for Multimodality

Unlike the conventional MoE models common in the industry, ERNIE 4.5 is distinguished by its "multimodal heterogeneous MoE" pre-training approach. The architecture lets the model share common parameters across modalities such as text and images while retaining independent parameters dedicated to each specific modality.
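The split between shared and modality-specific parameters can be illustrated with a toy, pure-Python layer. This is a minimal sketch under assumptions, not Baidu's implementation: the class names, the single-matrix "experts", the top-k softmax gating, and the single always-on shared expert are all hypothetical choices made for illustration. Every token passes through the shared expert, while a router owned by the token's modality picks the top-k experts from that modality's own pool, so text and image tokens never compete for the same specialized experts.

```python
import math
import random
from typing import Dict, List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def softmax(xs: List[float]) -> List[float]:
    """Numerically stable softmax."""
    top = max(xs)
    exps = [math.exp(x - top) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def random_matrix(rows: int, cols: int, rng: random.Random) -> Matrix:
    return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]


class Expert:
    """Toy expert: one linear map followed by ReLU (a real expert is a full FFN)."""

    def __init__(self, dim: int, rng: random.Random) -> None:
        self.w = random_matrix(dim, dim, rng)

    def __call__(self, x: Vector) -> Vector:
        return [max(0.0, y) for y in matvec(self.w, x)]


class HeterogeneousMoELayer:
    """Hypothetical layer: a shared expert for every token plus per-modality expert pools."""

    def __init__(self, dim: int, n_text: int, n_vision: int, top_k: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.top_k = top_k
        # Parameters shared by all modalities.
        self.shared = Expert(dim, rng)
        # Parameters specialized for a single modality.
        self.experts: Dict[str, List[Expert]] = {
            "text": [Expert(dim, rng) for _ in range(n_text)],
            "vision": [Expert(dim, rng) for _ in range(n_vision)],
        }
        # One router per modality, so a token only scores experts of its own modality.
        self.routers: Dict[str, Matrix] = {
            name: random_matrix(len(pool), dim, rng) for name, pool in self.experts.items()
        }

    def route(self, x: Vector, modality: str) -> List[Tuple[int, float]]:
        """Return (expert index, gate weight) pairs for the top-k experts of `modality`."""
        logits = matvec(self.routers[modality], x)
        chosen = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[: self.top_k]
        gates = softmax([logits[i] for i in chosen])
        return list(zip(chosen, gates))

    def forward(self, x: Vector, modality: str) -> Vector:
        """Shared-expert output plus the gated outputs of the selected modality experts."""
        out = self.shared(x)
        for idx, gate in self.route(x, modality):
            y = self.experts[modality][idx](x)
            out = [o + gate * yi for o, yi in zip(out, y)]
        return out
```

For example, `HeterogeneousMoELayer(dim=4, n_text=4, n_vision=2, top_k=2).route([0.5, -0.2, 0.1, 0.3], "vision")` can only return indices 0 and 1, because vision tokens are scored solely against the two vision experts. Using one router per modality is simply how this sketch isolates routing; the real layer layout, expert sizes, and gating details are not described in this article.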

To this end, the R&D team introduced several techniques, including modality-isolated routing, a router orthogonal loss, and a multimodal token-balanced loss. The common goal of these designs is to ensure that, during joint training, the textual and visual modalities reinforce rather than interfere with each other, significantly improving the model's multimodal understanding without sacrificing performance on text tasks.
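Of the techniques listed above, the router orthogonal loss is the easiest to sketch. The assumption here is that if each expert's routing vector (one row of the router weight matrix) points in a distinct direction, tokens spread across experts and each expert can specialize. The formulation below, the mean squared cosine similarity between distinct router rows, is one common way to express such a penalty and is an assumption; the exact loss used by ERNIE 4.5 is not given in this article, and the function names are hypothetical.

```python
import math
from typing import List


def l2_normalize(v: List[float]) -> List[float]:
    """Scale a vector to unit length (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm > 0 else list(v)


def router_orthogonal_loss(router_weights: List[List[float]]) -> float:
    """Mean squared cosine similarity between distinct rows of a router weight matrix.

    Each row is one expert's routing vector. The loss is 0 when all rows are
    mutually orthogonal and 1 when all rows are parallel.
    (Assumed formulation for illustration only.)
    """
    rows = [l2_normalize(r) for r in router_weights]
    n = len(rows)
    total, pairs = 0.0, 0
    for i in range(n):
        for j in range(n):
            if i != j:
                cos = sum(a * b for a, b in zip(rows[i], rows[j]))
                total += cos * cos
                pairs += 1
    return total / pairs if pairs else 0.0
```

In training, a term like this would be scaled by a small coefficient and added to the main objective, nudging router rows toward mutual orthogonality.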

Beyond the model architecture, Baidu also highlighted efficiency optimizations in its training and inference infrastructure. Using techniques such as intra-node expert parallelism, memory-efficient pipeline scheduling, FP8 mixed-precision training, and fine-grained recomputation, pre-training of its largest language model reached a model FLOPs utilization (MFU) of 47%. On the inference side, ERNIE 4.5 uses algorithms such as multi-expert parallel collaboration and convolutional code quantization to achieve 4-bit and 2-bit low-bit quantization.
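MFU is the ratio of the model FLOPs a training run actually performs per second to the hardware's theoretical peak. A minimal sketch follows, assuming the common approximation of about 6 FLOPs per parameter per token (forward plus backward pass, attention-score FLOPs ignored). For an MoE model only the activated parameters count, which is why the example uses 47B (the "A47B" in ERNIE-4.5-300B-A47B) rather than 300B. The throughput, accelerator count, and peak-FLOPs figures are hypothetical, not Baidu's cluster numbers.

```python
def training_flops_per_token(active_params: float) -> float:
    """Approximate training FLOPs per token: ~2 (forward) + ~4 (backward) per active parameter.

    Attention-score FLOPs are ignored, as in the usual 6N rule of thumb.
    """
    return 6.0 * active_params


def model_flops_utilization(
    tokens_per_second: float,
    active_params: float,
    num_accelerators: int,
    peak_flops_per_accelerator: float,
) -> float:
    """Achieved model FLOPs per second divided by the cluster's theoretical peak."""
    achieved = tokens_per_second * training_flops_per_token(active_params)
    peak = num_accelerators * peak_flops_per_accelerator
    return achieved / peak


def tokens_per_second_for_mfu(
    target_mfu: float,
    active_params: float,
    num_accelerators: int,
    peak_flops_per_accelerator: float,
) -> float:
    """Training throughput needed to reach a target MFU on the given cluster."""
    peak = num_accelerators * peak_flops_per_accelerator
    return target_mfu * peak / training_flops_per_token(active_params)


# Hypothetical cluster: 1,000 accelerators at 1e15 FLOP/s peak each (illustrative only).
mfu = model_flops_utilization(1e6, 47e9, 1000, 1e15)
print(f"MFU = {mfu:.1%}")
```

With these made-up numbers the run sits at 28.2% MFU; reaching 47% on the same hypothetical cluster would require roughly 1.67 million tokens per second.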

Performance: Multi-Dimensional Comparison with the Industry's Top Models

According to benchmark results published by Baidu, ERNIE 4.5 is highly competitive across several dimensions.

Pre-trained base model performance

The ERNIE-4.5-300B-A47B-Base model outperforms DeepSeek-V3-671B-A37B-Base on 22 of 28 benchmarks, indicating leading capabilities in generalization, reasoning, and knowledge-intensive tasks.

Model performance after instruction fine-tuning

After instruction fine-tuning, the ERNIE-4.5-300B-A47B model achieved top scores on tasks such as instruction following (IFEval) and knowledge-based question answering (SimpleQA).

Meanwhile, the smaller ERNIE-4.5-21B-A3B model, whose total parameter count is about 70% of Qwen3-30B's, outperforms the latter on mathematical and reasoning benchmarks such as BBH and CMATH, demonstrating high parameter efficiency.
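The model names in these comparisons encode parameter counts: in ERNIE-4.5-21B-A3B, "21B" is the total parameter count and "A3B" the roughly 3B parameters activated per token. The hypothetical helper below parses that convention and reproduces the roughly 70% total-parameter ratio quoted above. It is an illustration only, and its regular expression assumes names shaped like the ones in this article.

```python
import re
from typing import NamedTuple, Optional


class ModelSize(NamedTuple):
    total_b: float             # total parameters, in billions
    active_b: Optional[float]  # activated parameters per token, in billions (None if not encoded)


# Matches a size tag such as "-300B-A47B" or "-0.3B"; the "-A<n>B" part is optional.
_SIZE_TAG = re.compile(r"-(\d+(?:\.\d+)?)B(?:-A(\d+(?:\.\d+)?)B)?")


def parse_size(name: str) -> ModelSize:
    """Extract total and activated parameter counts from a model name (hypothetical helper)."""
    match = _SIZE_TAG.search(name)
    if match is None:
        raise ValueError(f"no size tag found in {name!r}")
    total = float(match.group(1))
    active = float(match.group(2)) if match.group(2) else None
    return ModelSize(total, active)


def ratio_of_totals(name_a: str, name_b: str) -> float:
    """Total-parameter ratio of model A to model B."""
    return parse_size(name_a).total_b / parse_size(name_b).total_b
```

For example, `ratio_of_totals("ERNIE-4.5-21B-A3B", "Qwen3-30B")` gives 0.7, and dividing `parse_size("ERNIE-4.5-300B-A47B-Base").active_b` by its `total_b` shows that only about 16% of the flagship model's parameters are active for any given token.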

Multimodal model performance

The ERNIE 4.5 vision-language model (VLM) supports both a "thinking mode" and a "non-thinking mode".

In non-thinking mode, the model focuses on efficient visual perception and on document and chart understanding. In thinking mode, it retains strong perceptual capabilities while significantly improving reasoning on complex benchmarks such as MathVista and MMMU. The lightweight version of ERNIE-4.5-VL also performs well across multiple benchmarks, matching or even exceeding the much larger Qwen2.5-VL-32B.

Ecosystem and Toolchain: ERNIEKit and FastDeploy

The ERNIE 4.5 model family is trained with the PaddlePaddle deep learning framework, and PyTorch-compatible versions are also provided. Baidu has released two core tools to lower the barrier to entry for developers.

- ERNIEKit: Model Fine-Tuning and Alignment

ERNIEKit is an industrial-grade development toolkit that supports supervised fine-tuning (SFT), direct preference optimization (DPO), LoRA, quantization-aware training (QAT), post-training quantization (PTQ), and a range of other mainstream training and compression techniques. Developers can download models and run SFT or DPO jobs from the command line:

    # Download the model
    huggingface-cli download baidu/ERNIE-4.5-300B-A47B-Base-Paddle \
        --local-dir baidu/ERNIE-4.5-300B-A47B-Base-Paddle

    # Supervised fine-tuning (SFT)
    erniekit train examples/configs/ERNIE-4.5-300B-A47B/sft/run_sft_wint8mix_lora_8k.yaml \
        model_name_or_path=baidu/ERNIE-4.5-300B-A47B-Base-Paddle

- FastDeploy: Efficient Model Deployment

FastDeploy is an efficient deployment toolkit for large models. Its API is compatible with vLLM and the OpenAI protocol, and it supports one-click service deployment. For ERNIE 4.5's MoE models, it provides an industrial-grade, multi-machine deployment solution with integrated acceleration techniques such as low-bit quantization, context caching, and speculative decoding. Sample code for local inference is as follows:

    from fastdeploy import LLM, SamplingParams

    prompt = "Write me a poem about large language model."
    # Sampling settings: temperature and nucleus (top-p) sampling
    sampling_params = SamplingParams(temperature=0.8, top_p=0.95)

    # Load the 0.3B model with a maximum context length of 32,768 tokens
    llm = LLM(model="baidu/ERNIE-4.5-0.3B-Paddle", max_model_len=32768)
    outputs = llm.generate(prompt, sampling_params)

Service deployment, on the other hand, can be started with a single command:

    python -m fastdeploy.entrypoints.openai.api_server \
        --model "baidu/ERNIE-4.5-0.3B-Paddle" \
        --max-model-len 32768 \
        --port 9904
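Because the server speaks the OpenAI protocol, any OpenAI-compatible client can query it. The sketch below uses only the Python standard library and assumes the server started by the command above is running locally on port 9904 and exposes the standard `/v1/chat/completions` route. The helper names `build_chat_request` and `chat` are hypothetical, and the sampling values simply mirror the local-inference example.

```python
import json
import urllib.request
from typing import Any, Dict

# Assumption: the api_server above is running locally with --port 9904.
BASE_URL = "http://localhost:9904/v1"


def build_chat_request(
    prompt: str,
    model: str = "baidu/ERNIE-4.5-0.3B-Paddle",
    temperature: float = 0.8,
    top_p: float = 0.95,
) -> urllib.request.Request:
    """Build a POST request for the OpenAI-style chat completions route."""
    payload: Dict[str, Any] = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
        "top_p": top_p,
    }
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(prompt: str, timeout: float = 60.0) -> str:
    """Send one user message and return the assistant's reply text."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=timeout) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

With the server up, `chat("Write me a poem about large language models.")` returns the generated text; the official `openai` Python client pointed at `http://localhost:9904/v1` should work equally well.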

Open Source License and Citation

ERNIE 4.5 models are released under the Apache License 2.0, which permits commercial use.

To cite ERNIE 4.5 in your research, use the following BibTeX entry:

    @misc{ernie2025technicalreport,
      title={ERNIE 4.5 Technical Report},
      author={Baidu ERNIE Team},
      year={2025},
      eprint={},
      archivePrefix={arXiv},
      primaryClass={cs.CL},
      url={}
    }