Any LLM in Claude Code is an open-source proxy tool hosted on GitHub, developed by chachako and forked from CogAgent/claude-code-proxy. It lets users run Claude Code with any language model that LiteLLM supports (e.g., models from OpenAI, Vertex AI, or xAI) without a Claude Pro subscription. Through simple environment variable configuration, users can assign different models to complex tasks (sonnet) and auxiliary tasks (haiku) to balance performance and cost. The project is written in Python on top of LiteLLM and uses uv to manage dependencies, so it is easy to install for AI developers, Claude Code users, and open-source enthusiasts. The documentation is clear and includes detailed configuration and debugging guides, and the community is active.

Features

- Support for using any LiteLLM-compatible language model (e.g. OpenAI, Vertex AI, xAI) in Claude Code.

- Provides separate routing for large models (sonnet) and small models (haiku) to optimize task allocation.

- Supports custom API keys and endpoints, compatible with multiple model providers.

- Uses LiteLLM to convert API requests and responses automatically so that they stay compatible with the Anthropic API format.

- Uses uv to manage project dependencies, which simplifies deployment.

- Provides detailed logging that can record request and response contents for easier debugging and prompt-engineering analysis.

- Supports local model servers through configurable custom API endpoints.

- Open source: users can modify the code or contribute features.

Usage Guide

Installation process

Using Any LLM in Claude Code requires the following installation and configuration steps, based on the official documentation:
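Before starting, it can help to confirm that the command-line tools these steps rely on are available. These are git to clone the repository, uv to run the project, and npm to install Claude Code. The following is a minimal sketch. missing_tools is a hypothetical helper written for this guide and is not part of the project.

```bash
# Sketch: list which of the given commands cannot be found on PATH.
# missing_tools is a hypothetical helper, not part of the project.
missing_tools() {
  local missing=""
  for tool in "$@"; do
    # command -v succeeds only if the command can be found
    if ! command -v "$tool" >/dev/null 2>&1; then
      missing="$missing $tool"
    fi
  done
  # Strip the leading space; empty output means nothing is missing
  echo "${missing# }"
}

# Usage: missing_tools git uv npm
```

If uv is reported as missing, its installer is covered in the steps that follow.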

1. Clone the project

Run the following commands in a terminal to clone the project locally:

```bash
git clone https://github.com/chachako/freecc.git
cd freecc
```

2. Install the uv tool

The project uses uv to manage its Python dependencies. If uv is not installed yet, run:

```bash
curl -LsSf https://astral.sh/uv/install.sh | sh
```

uv installs the dependencies declared in pyproject.toml automatically, so there is no need to install them by hand.

3. Configure environment variables

The project uses a .env file to configure model routing and API keys. Copy the example file:

```bash
cp .env.example .env
```

Open .env in a text editor (such as nano) and set the following variables:

- Model routing configuration:

  - BIG_MODEL_PROVIDER: provider of the large model (e.g. openai, vertex, xai).
  - BIG_MODEL_NAME: name of the large model (e.g. gpt-4.1, gemini-1.5-pro).
  - BIG_MODEL_API_KEY: API key for the large model.
  - BIG_MODEL_API_BASE: (optional) custom API endpoint for the large model.
  - SMALL_MODEL_PROVIDER: provider of the small model.
  - SMALL_MODEL_NAME: name of the small model (e.g. gpt-4o-mini).
  - SMALL_MODEL_API_KEY: API key for the small model.
  - SMALL_MODEL_API_BASE: (optional) custom API endpoint for the small model.

- Global provider configuration (used as a fallback or for direct requests):

  - OPENAI_API_KEY, XAI_API_KEY, GEMINI_API_KEY, ANTHROPIC_API_KEY: API keys for each provider.
  - OPENAI_API_BASE, XAI_API_BASE, etc.: custom API endpoints.

- Vertex AI-specific configuration:

  - VERTEX_PROJECT_ID: Google Cloud project ID.
  - VERTEX_LOCATION: Vertex AI region (e.g. us-central1).
  - Google Application Default Credentials (ADC): run gcloud auth application-default login, or set the GOOGLE_APPLICATION_CREDENTIALS environment variable to point to a credentials file.

- Logging configuration:

  - FILE_LOG_LEVEL: level for the log file (claude-proxy.log). Default: DEBUG.
  - CONSOLE_LOG_LEVEL: console log level. Default: INFO.
  - LOG_REQUEST_BODY: set to true to log request contents, which is useful for prompt-engineering analysis.
  - LOG_RESPONSE_BODY: set to true to log the contents of model responses.

A typical .env configuration:

```bash
BIG_MODEL_PROVIDER="vertex"
BIG_MODEL_NAME="gemini-1.5-pro-preview-0514"
BIG_MODEL_API_KEY="your-vertex-key"
VERTEX_PROJECT_ID="your-gcp-project-id"
VERTEX_LOCATION="us-central1"
SMALL_MODEL_PROVIDER="openai"
SMALL_MODEL_NAME="gpt-4o-mini"
SMALL_MODEL_API_KEY="sk-xxx"
SMALL_MODEL_API_BASE="https://xyz.llm.com/v1"
FILE_LOG_LEVEL="DEBUG"
LOG_REQUEST_BODY="true"
```
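A missing or misspelled variable usually surfaces only later, as a failed request. A quick pre-flight check of .env can catch it earlier. The sketch below is a hypothetical helper (check_env is not part of the project). It assumes the variable names listed above and treats the Vertex AI project and region as required whenever either route uses the vertex provider.

```bash
# Sketch: report routing variables that are still empty before starting the proxy.
# check_env is a hypothetical helper; the project does not ship it.
check_env() {
  local status=0 var value
  for var in BIG_MODEL_PROVIDER BIG_MODEL_NAME SMALL_MODEL_PROVIDER SMALL_MODEL_NAME; do
    eval "value=\${$var:-}"   # read the variable whose name is stored in $var
    if [ -z "$value" ]; then
      echo "missing: $var"
      status=1
    fi
  done
  # Vertex AI routes also need a project ID and a region
  if [ "${BIG_MODEL_PROVIDER:-}" = "vertex" ] || [ "${SMALL_MODEL_PROVIDER:-}" = "vertex" ]; then
    for var in VERTEX_PROJECT_ID VERTEX_LOCATION; do
      eval "value=\${$var:-}"
      if [ -z "$value" ]; then
        echo "missing: $var"
        status=1
      fi
    done
  fi
  return "$status"
}

# Usage: load .env into the current shell (set -a exports every assignment), then check
#   set -a; . ./.env; set +a
#   check_env || echo "fix .env before starting the proxy"
```

Sourcing .env this way works for plain KEY="value" lines like the example above. The check does not replace the proxy's own configuration loading.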

4. Run the server

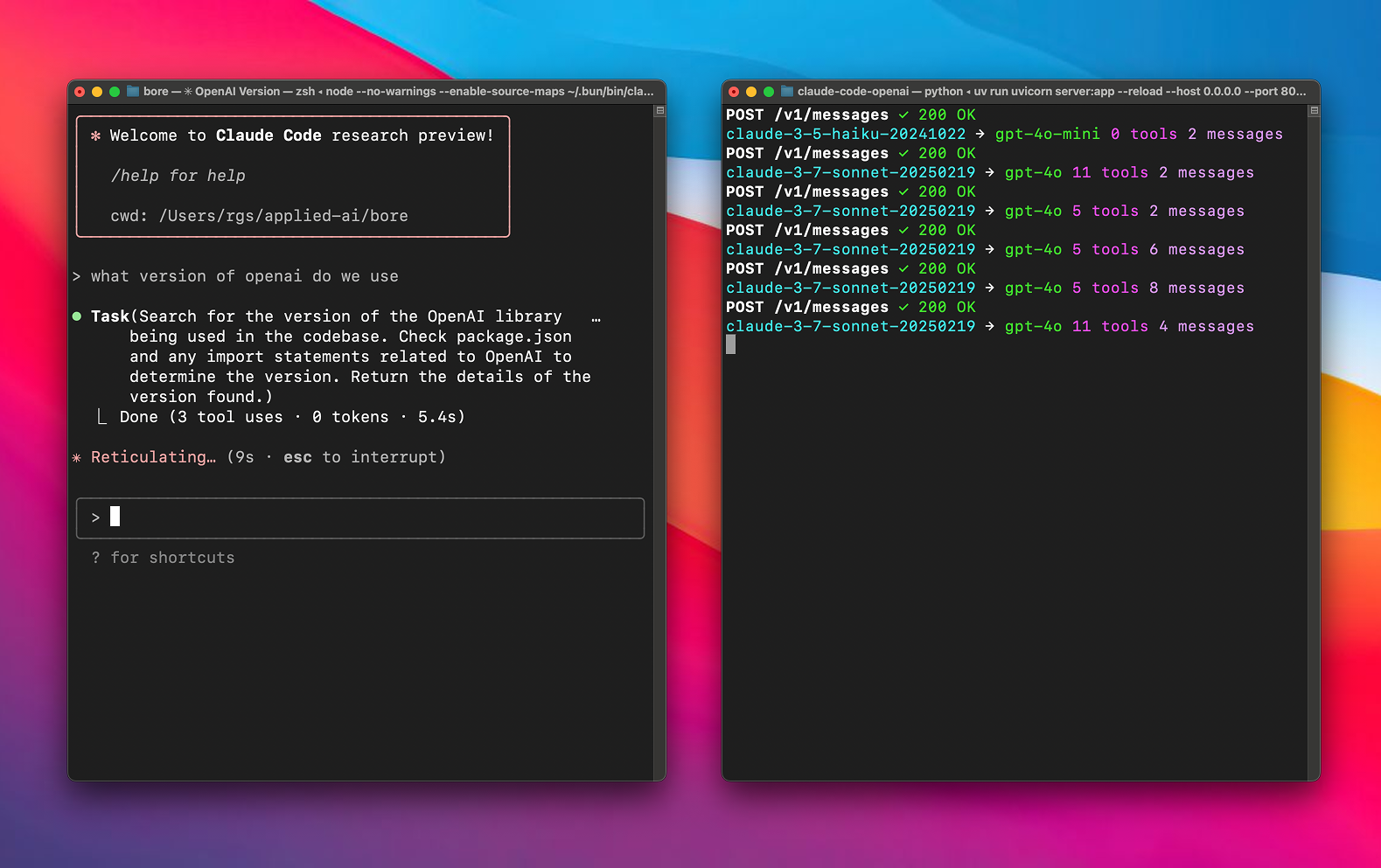

Once configuration is complete, start the proxy server:

```bash
uv run uvicorn server:app --host 127.0.0.1 --port 8082 --reload
```

The --reload parameter is optional. It reloads the server automatically when code changes during development.

5. Connect Claude Code

Install Claude Code if it is not already installed:

```bash
npm install -g @anthropic-ai/claude-code
```

Then set the environment variable that points Claude Code at the proxy, and launch it:

```bash
export ANTHROPIC_BASE_URL=http://localhost:8082 && claude
```
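To confirm the proxy is up before starting an interactive session, you can send it one request in Anthropic Messages API format with curl. This is a sketch under several assumptions:

- The proxy serves POST /v1/messages on localhost:8082, which is the path Claude Code itself calls.
- build_payload and smoke_test are hypothetical helpers.
- The x-api-key header carries a placeholder, since the proxy injects the real provider keys from .env.

```bash
# Sketch: send one Anthropic Messages API request through the proxy.
# Assumptions: proxy on localhost:8082 serving POST /v1/messages; helper names
# are hypothetical; the x-api-key value is only a placeholder.
build_payload() {
  # $1 = model name as Claude Code would send it, $2 = prompt text.
  # No JSON escaping is done, so the prompt must not contain " or \.
  printf '{"model":"%s","max_tokens":64,"messages":[{"role":"user","content":"%s"}]}' "$1" "$2"
}

smoke_test() {
  curl -sS "${ANTHROPIC_BASE_URL:-http://localhost:8082}/v1/messages" \
    -H "content-type: application/json" \
    -H "anthropic-version: 2023-06-01" \
    -H "x-api-key: ${ANTHROPIC_API_KEY:-placeholder}" \
    -d "$(build_payload "$1" "$2")"
}

# Usage (with the proxy running):
#   smoke_test claude-3-haiku-20240307 "Reply with one word"
```

Trying one haiku model name and one sonnet model name exercises both the small-model and large-model routes.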

How It Works

At its core, Any LLM in Claude Code routes Claude Code's requests through a proxy to the models the user has configured. Each part of this is described below:

- Model Routing

The project lets you configure different models for Claude Code's sonnet tier (main tasks such as complex code generation) and haiku tier (auxiliary tasks such as quick syntax checks). For example:

- Setting BIG_MODEL_PROVIDER="vertex" and BIG_MODEL_NAME="gemini-1.5-pro" routes sonnet requests to a high-performance model.
- Setting SMALL_MODEL_PROVIDER="openai" and SMALL_MODEL_NAME="gpt-4o-mini" routes haiku requests to a lightweight model to reduce costs.

Users can switch models and providers flexibly by editing the .env file.
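The routing rule can be pictured as a simple match on the model name that Claude Code requests. The sketch below assumes the decision is made by looking for "haiku" or "sonnet" in that name. route_model is hypothetical, and the project's real matching logic may differ, for example in how it treats other model names.

```bash
# Sketch of the sonnet/haiku split, assuming the proxy looks for "haiku" or
# "sonnet" in the requested model name. route_model is a hypothetical helper.
route_model() {
  case "$1" in
    *haiku*)  echo "$SMALL_MODEL_PROVIDER/$SMALL_MODEL_NAME" ;;  # auxiliary tasks
    *sonnet*) echo "$BIG_MODEL_PROVIDER/$BIG_MODEL_NAME" ;;      # main tasks
    *)        echo "$1" ;;  # assumption: other names pass through unchanged
  esac
}

# Usage: route_model claude-3-sonnet-20240229
```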


- API Request Conversion

The project converts between API formats through LiteLLM, as follows:

- Claude Code sends an Anthropic-format request (e.g., a call to claude-3-sonnet-20240229).
- Based on the .env configuration, the proxy converts the request into the target model's format (e.g., OpenAI's gpt-4.1).
- The proxy injects the configured API key and endpoint and sends the request.
- The proxy converts the model's response back to Anthropic format and returns it to Claude Code.

The whole conversion is automatic and needs no manual intervention.
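The conversion step depends on LiteLLM knowing which backend to call. LiteLLM encodes that choice in the model string as a provider prefix, for example openai/gpt-4o-mini or vertex_ai/gemini-1.5-pro. The sketch below builds such a string from a provider and model name. The prefixes are LiteLLM's. Mapping this project's "vertex" value to LiteLLM's "vertex_ai" is an assumption about what the proxy does internally, and to_litellm_model is hypothetical.

```bash
# Sketch: build the "provider/model" string LiteLLM uses to pick a backend.
# to_litellm_model is hypothetical; the vertex -> vertex_ai mapping is assumed.
to_litellm_model() {
  local provider=$1 name=$2
  case "$provider" in
    vertex) provider=vertex_ai ;;  # LiteLLM's provider id for Vertex AI
  esac
  echo "$provider/$name"
}

# Usage: to_litellm_model vertex gemini-1.5-pro
```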


- Logging and Debugging

The project provides detailed logging:

- Setting LOG_REQUEST_BODY="true" records the content of Claude Code's requests, which helps with prompt-engineering analysis.
- Setting LOG_RESPONSE_BODY="true" records the model's responses so you can check that the output is correct.
- Logs are saved to claude-proxy.log. FILE_LOG_LEVEL and CONSOLE_LOG_LEVEL control the log levels.

If you run into problems, check the logs, or verify the keys and endpoints in .env.
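Filtering the log for warnings and errors is usually the fastest way to find a misconfigured key or endpoint. A minimal sketch follows. It assumes the level name appears on each line of claude-proxy.log, since the exact log format is not documented here. show_problems is a hypothetical helper.

```bash
# Sketch: print warnings and errors from the proxy log with line numbers.
# Assumes each line contains its level name; adjust the pattern if needed.
show_problems() {
  # -n prefixes line numbers, -E enables the | alternation
  grep -nE 'WARNING|ERROR|CRITICAL' "${1:-claude-proxy.log}"
}

# Usage: show_problems              (reads ./claude-proxy.log)
#        tail -f claude-proxy.log   (follow new entries while reproducing a problem)
```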


- Local Model Support

Local model servers are supported. For example, setting SMALL_MODEL_API_BASE="http://localhost:8000/v1" routes haiku requests to a local model (e.g., one served by LM Studio). Local models do not require API keys and suit privacy-sensitive scenarios.
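A small-model route pointing at a local server might look like this in .env. This is an illustrative fragment under stated assumptions, not a configuration taken from the project documentation:

- The model name is a placeholder.
- The "openai" provider value assumes the local server exposes an OpenAI-compatible API.
- The port must match the one your server actually uses. LM Studio's local server, for instance, defaults to port 1234.

```bash
# Hypothetical .env fragment: send haiku requests to a local OpenAI-compatible server.
# "your-local-model" is a placeholder for the model the server has loaded.
SMALL_MODEL_PROVIDER="openai"
SMALL_MODEL_NAME="your-local-model"
SMALL_MODEL_API_BASE="http://localhost:8000/v1"
# Only if a client refuses an empty key; local servers typically ignore its value
# SMALL_MODEL_API_KEY="not-needed"
```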

Notes

- Make sure the network connection is stable and the API key is valid.

- Vertex AI users need to configure VERTEX_PROJECT_ID, VERTEX_LOCATION, and ADC correctly.

- Check the GitHub project page (https://github.com/chachako/freecc) regularly for updates or community support.

- Logs may contain sensitive information, so turn off LOG_REQUEST_BODY and LOG_RESPONSE_BODY after debugging.

Use Cases

- Enhancing Claude Code flexibility

Without a Claude Pro subscription, users can use high-performance models (e.g., Gemini 1.5 Pro) or low-cost models (e.g., gpt-4o-mini) to gain a larger context window or lower costs.

- Model performance comparison

Developers can switch models (e.g., OpenAI, Vertex AI) quickly through the proxy, test how different models perform in Claude Code, and refine their development workflow.

- Local model deployment

Enterprises or researchers can configure local model servers to protect data privacy, which suits localized AI application scenarios.

- Open source community engagement

As an open-source project, it accepts code contributions on GitHub that improve features or fix issues. It is also a useful learning resource for beginners in Python and AI development.

QA

- What models does Any LLM in Claude Code support?

All LiteLLM-compatible models, including those from OpenAI, Vertex AI, xAI, and Anthropic. Models and providers are configured in .env.

- How do I handle configuration errors?

Check the logs in claude-proxy.log and verify the API keys, endpoints, and model names. Set FILE_LOG_LEVEL="DEBUG" to get detailed logs.

- Do I need a Claude Pro subscription?

No. Through the proxy, users without a subscription can use other models and avoid the subscription requirement.

- Can it be deployed on a remote server?

Yes. Set --host to 0.0.0.0 and make sure the port (e.g., 8082) is open.

- How do I contribute code?

Visit https://github.com/chachako/freecc to submit a pull request. Reading the contribution guidelines first is recommended.