As cross-lingual communication becomes a core demand of globalization, simultaneous interpretation remains one of the most challenging problems in machine translation. The ByteDance Seed team recently released Seed LiveInterpret 2.0, an end-to-end simultaneous interpretation model intended as a practical technical solution for real-time cross-language communication.

Lower latency, more natural experience

Most traditional machine simultaneous interpretation systems use a cascaded design: a three-step pipeline of "automatic speech recognition (ASR) → machine translation (MT) → text-to-speech (TTS)". Although this approach is mature, each stage adds its own latency, and errors made in one stage propagate downstream and are amplified, which significantly degrades both the final translation quality and real-time performance.

Seed LiveInterpret 2.0 instead uses end-to-end (E2E) speech-to-speech (S2S) modeling, folding the three steps above into a single model. This architecture lets the model perform full-duplex speech understanding and generation, which yields a better balance between translation accuracy and latency.

According to officially published data, in speech-to-text (S2T) scenarios the average first-word latency of Seed LiveInterpret 2.0 is 2.21 seconds; in the more complex speech-to-speech (S2S) scenario, the output latency is 2.53 seconds. An average latency of 2 to 3 seconds is very close to that of a professional human simultaneous interpreter and makes conversation noticeably smoother.

Zero-shot voice cloning and precise understanding

In addition to low latency, the model supports zero-shot voice cloning. It can reproduce a speaker's voice characteristics in real time without prior training on that speaker, preserving their distinctive timbre and identity and avoiding the confusion that a single uniform synthetic voice causes in multi-speaker conversations.

In difficult translation scenarios, such as tongue twisters, poetry, and food culture, the model demonstrates an ability to understand context and cultural background in depth, producing natural and accurate Chinese-English translation.

Model Evaluation Data

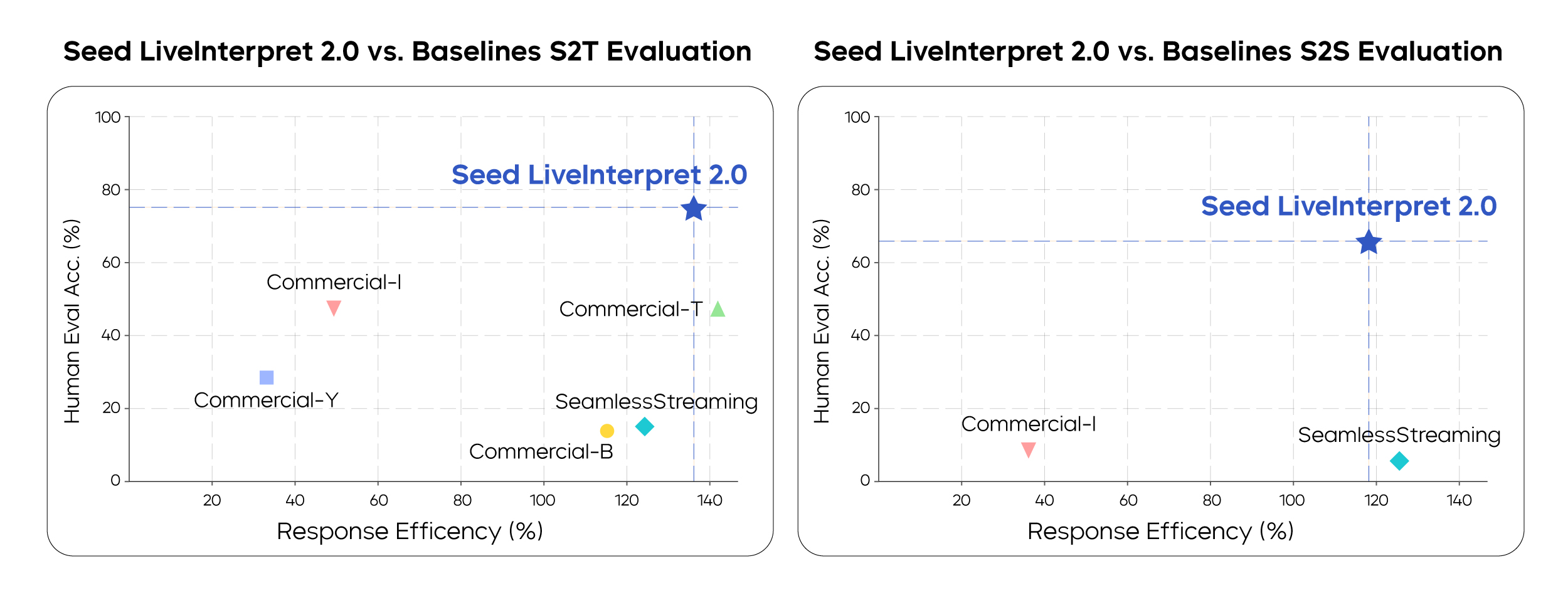

In a human evaluation, Seed LiveInterpret 2.0 achieved a bidirectional speech-to-text (S2T) simultaneous interpretation quality score of 74.8 out of 100, exceeding the second-ranked baseline system (47.3) by 58%.
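The relative margin between the two scores quoted above (74.8 and 47.3) can be checked with a few lines of arithmetic. The sketch below is only an illustration; the function name `relative_gain` is an assumption introduced here, not something from the official evaluation.

```python
# Scores quoted in the article's human evaluation (out of 100).
seed_score = 74.8       # Seed LiveInterpret 2.0, S2T
baseline_score = 47.3   # second-ranked baseline system


def relative_gain(new: float, old: float) -> float:
    """Return the improvement of `new` over `old` as a fraction of `old`."""
    return (new - old) / old


gain = relative_gain(seed_score, baseline_score)
print(f"Absolute margin: {seed_score - baseline_score:.1f} points")
print(f"Relative margin: {gain:.0%}")
```

The absolute gap is 27.5 points; dividing by the baseline score gives a relative margin of roughly 58%.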

Among systems that support speech-to-speech (S2S) translation, the model achieves an average Chinese-English bidirectional translation quality score of 66.3 (the evaluation dimensions include translation accuracy, latency, speech rate, pronunciation, and fluency), well ahead of the other baseline systems. Notably, most of the systems in the comparison do not yet support voice cloning at all.

This technology is more than another iteration of translation tools; it signals that a more natural and immersive form of cross-language communication is becoming a reality. Whether in international meetings, business negotiations, or overseas travel, language becomes far less of a barrier to connection once a machine interpreter is able to "hear the voice as if it were a human being".