AI Sheets is an open-source tool from Hugging Face for building, enriching and transforming datasets with AI models, without writing code. It can be deployed locally or run on the Hugging Face Hub. It connects to thousands of open-source models on the Hugging Face Hub through Inference Providers, and can also use local models, including OpenAI's gpt-oss. The interface is as simple as a spreadsheet: users create new columns by writing prompts, and can experiment quickly on small datasets before scaling up to large pipelines. The tool emphasizes iteration: users edit cells or validate results to teach the model. At its core, it uses AI to process data, from categorization to synthetic data generation. It is well suited to testing models, improving prompts, and analyzing datasets. Results can be exported to the Hub, along with configuration files for scaling up data generation.

Feature List

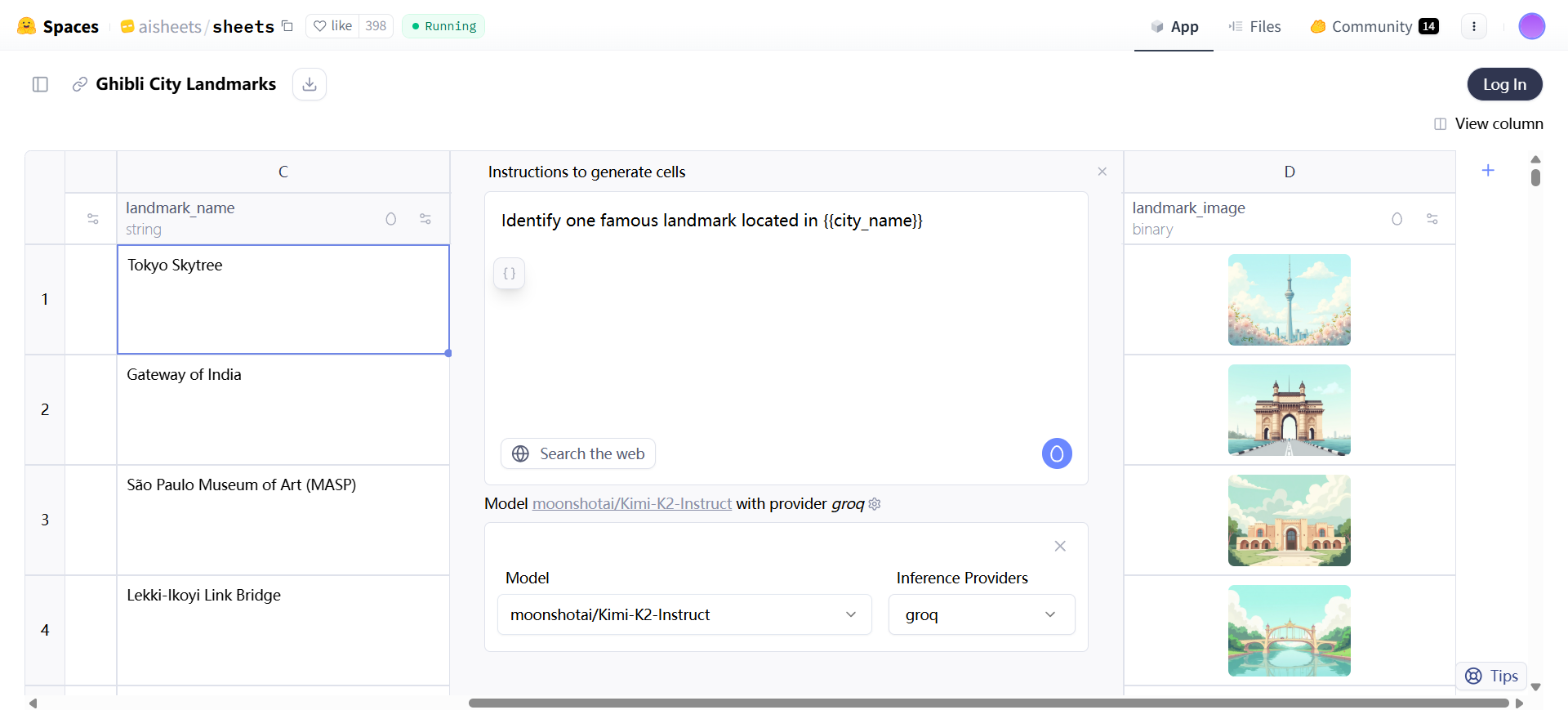

- Generate datasets from scratch: Describe the dataset in natural language and AI creates the structure and sample rows, e.g. a list of cities with their countries and an image of each landmark.

- Data import and processing: Upload XLS, TSV, CSV or Parquet files with up to 1,000 rows and unlimited columns, then edit and extend the data.

- Add AI columns: Create new columns with prompts for actions such as extracting information, summarizing text, translating content, or custom tasks, referencing existing columns with the {{column}} syntax.

- Model selection and switching: Select a model and provider from the Hugging Face Hub, such as meta-llama/Llama-3.3-70B-Instruct or openai/gpt-oss-120b, to compare their performance.

- Feedback: Manually edit cells or give good results a thumbs-up; these are used as few-shot examples to improve the output when the column is regenerated.

- Web search toggle: When enabled, the model retrieves up-to-date information from the web, e.g. to find the zip code of an address; when disabled, only the model's own knowledge is used.

- Data extension: Drag down a column to generate more rows without regenerating existing ones; this is also useful for fixing errors or adding data.

- Export to Hub: Save the dataset together with a configuration YAML file, which can be used to reuse prompts or to generate a larger dataset with scripts.

- Model comparison and evaluation: Create multiple columns to test different models, and compare output quality with an LLM-as-a-judge column.

- Synthetic data generation: Create synthetic datasets, such as professional emails written from person biographies.

- Data conversion and cleaning: Remove punctuation or normalize text with prompts.

- Data classification and analysis: Categorize content or extract key ideas.

- Data enrichment: Supplement missing information, such as adding a zip code or generating a description.

- Image generation: Create image columns with models such as black-forest-labs/FLUX.1-dev, including in specific styles.

How to Use

AI Sheets can be used in two ways: online or through a local installation. The online version requires no installation: go to https://huggingface.co/spaces/aisheets/sheets and log in with your Hugging Face account. An HF_TOKEN can be obtained from https://huggingface.co/settings/tokens. When the interface appears, choose to generate a new dataset or import existing data.

Generating a dataset from scratch is suited to getting familiar with the tool, brainstorming, or quick experiments. Click the Generate option and enter a description in the prompt area, such as "A list of cities in the world, including the country and Ghibli-style images of each landmark". AI Sheets creates the schema and 5 sample rows, with columns such as city, country, and image. Drag down from the bottom of a column to generate more rows, up to 1,000. Modify the prompt to regenerate the structure. You can also type values into cells manually and drag down to complete the other columns.

Importing a dataset is recommended for most situations. Supported upload formats are XLS, TSV, CSV, and Parquet, and the file should contain at least one column name and one row of data. After uploading, the data is displayed in a table, and empty cells can be filled in manually. As in a spreadsheet, imported cells can be edited by hand, but they cannot be modified by AI; only AI-generated cells can be regenerated.
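The import requirements can be checked locally before uploading. These are at least one column name and one row of data, plus the 1,000-row limit listed among the features. Below is a minimal Python sketch using only the standard library. The function name `load_csv_for_import` is hypothetical. Rejecting files that exceed the limit is also an assumption, since the tool itself may handle oversized files differently.

```python
import csv
import io

MAX_ROWS = 1000  # upload limit listed among the features


def load_csv_for_import(text: str) -> tuple[list[str], list[dict[str, str]]]:
    """Parse CSV text and apply the import checks (illustrative sketch)."""
    reader = csv.DictReader(io.StringIO(text))
    header = reader.fieldnames or []  # fieldnames is None when the input is empty
    if not header:
        raise ValueError("file must contain at least one column name")
    rows = list(reader)
    if not rows:
        raise ValueError("file must contain at least one row of data")
    if len(rows) > MAX_ROWS:
        # Assumption: reject oversized files; the real tool may handle them differently
        raise ValueError(f"{len(rows)} rows exceeds the {MAX_ROWS}-row limit")
    return header, rows


header, rows = load_csv_for_import("city,country\nParis,France\nTokyo,Japan\n")
print(header, len(rows))
```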

Adding a new column is the core operation. Click the + button and choose a suggested action, such as extracting information, summarizing text, or translating. Alternatively, write a custom prompt such as "Remove extra punctuation from {{text}}", where {{text}} references an existing column. Configure the model, for example meta-llama/Llama-3.3-70B-Instruct with a provider such as groq. Turn on the search toggle to pull in web data, e.g. "Find the zip code of {{address}}". Once the column is generated, review the results.
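A {{column}} reference works like this: each row's value is substituted into the prompt before the model is called for that row. Below is a minimal Python sketch of the substitution. It assumes placeholders take the form {{name}}, with optional spaces inside the braces. The regex, `render_prompt`, and the KeyError raised for unknown columns are illustrative choices, not AI Sheets' actual implementation.

```python
import re

# Matches {{column}} placeholders, tolerating spaces inside the braces (assumption)
PLACEHOLDER = re.compile(r"\{\{\s*([^{}]+?)\s*\}\}")


def render_prompt(template: str, row: dict[str, str]) -> str:
    """Substitute each {{column}} placeholder with the row's value for that column."""

    def substitute(match: re.Match) -> str:
        column = match.group(1)
        if column not in row:
            raise KeyError(f"prompt references unknown column: {column}")
        return row[column]

    return PLACEHOLDER.sub(substitute, template)


rows = [{"text": "Hello,, world!!"}, {"text": "Great product!!!"}]
template = "Remove extra punctuation from {{text}}"
# One prompt per row; each would then be sent to the configured model
prompts = [render_prompt(template, row) for row in rows]
print(prompts)
```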

Refine the dataset through feedback and configuration. Manually edit AI-generated cells to provide examples of the output you prefer, and give good results a thumbs-up; these serve as few-shot examples. Click regenerate to apply them to the whole column. Adjust prompts, e.g. change "Categorize {{text}}" to a more specific instruction. Switch models or providers to compare performance, e.g. change the provider from groq to cerebras. Different models suit different tasks, such as creative versus structured output, and the provider affects speed and context length.
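The feedback loop can be pictured as few-shot prompting: cells the user edited or liked are placed in front of the prompt as worked examples. The sketch below is a hypothetical illustration. The `Cell` class, the Input/Output layout, and the cap of five examples are all assumptions, and AI Sheets may format its few-shot prompt differently.

```python
from dataclasses import dataclass


@dataclass
class Cell:
    """A generated cell: the rendered prompt and its current output (hypothetical model)."""

    prompt: str
    output: str
    edited: bool = False  # the user rewrote the output by hand
    liked: bool = False  # the user gave the output a thumbs-up


def build_few_shot_prompt(cells: list[Cell], new_prompt: str, max_examples: int = 5) -> str:
    """Place validated cells (edited or liked) before the new prompt as examples."""
    examples = [c for c in cells if c.edited or c.liked][:max_examples]
    parts = [f"Input: {c.prompt}\nOutput: {c.output}" for c in examples]
    parts.append(f"Input: {new_prompt}\nOutput:")
    return "\n\n".join(parts)


cells = [
    Cell("Categorize: The battery lasts two days", "electronics", liked=True),
    Cell("Categorize: Best pasta in town", "sports"),  # wrong and not validated
    Cell("Categorize: These sneakers run small", "fashion", edited=True),
]
prompt = build_few_shot_prompt(cells, "Categorize: The screen cracked in a week")
print(prompt)
```

Only validated cells are reused, so an unreviewed wrong answer (the "sports" row here) does not leak into the examples.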

Extend the data by dragging. Drag down from the last cell of a column to generate new rows immediately, without regenerating the existing ones. The same method can be used to fix incorrect cells.

Export to the Hub to save your work. Click Export to generate the dataset and a config.yml file. The file contains the column configurations, prompts, and model details. In the example config, the columns key lists fields such as modelName and userPrompt for each column. Once uploaded, the dataset can be viewed on the Hub, e.g. https://huggingface.co/datasets/dvilasuero/nemotron-personas-kimi-questions.
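The exported config.yml is meant to be reused by scripts, so it can help to inspect it programmatically. This sketch makes several assumptions:

- The file has already been parsed into a Python dict, for example with a YAML library.
- The `columns` key maps each column name to its settings.
- Only the `modelName` and `userPrompt` fields named above are read.

The column name and values are illustrative.

```python
# Assumed shape of a parsed config.yml; only the keys named in the text are used,
# and the column name "clean_text" is hypothetical.
config = {
    "columns": {
        "clean_text": {
            "modelName": "meta-llama/Llama-3.3-70B-Instruct",
            "userPrompt": "Remove extra punctuation from {{text}}",
        },
    },
}


def describe_columns(cfg: dict) -> list[tuple[str, str, str]]:
    """Return (column name, model, prompt) for every configured column."""
    rows = []
    for name, settings in cfg.get("columns", {}).items():
        rows.append((name, settings.get("modelName", "?"), settings.get("userPrompt", "")))
    return rows


for name, model, prompt in describe_columns(config):
    print(f"{name}: {model} <- {prompt!r}")
```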

Generate larger datasets with HF Jobs. This requires the exported config and a script, for example: `hf jobs uv run -s HF_TOKEN=$HF_TOKEN https://huggingface.co/datasets/aisheets/uv-scripts/raw/main/extend_dataset/script.py --config https://huggingface.co/datasets/dvilasuero/nemotron-personas-kimi-questions/raw/main/config.yml --num-rows 100 nvidia/Nemotron-Personas dvilasuero/nemotron-kimi-qa-distilled`. Specify the number of rows with --num-rows, or omit it to generate all rows.
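When the scaling job is launched from a script, the same command can be assembled as an argument list. This is a sketch under several assumptions:

- `--config` and `--num-rows` are taken to be the intended spellings of the flags in the command shown above.
- The token is passed in directly rather than through shell expansion.
- `build_extend_command` is a hypothetical helper.

Leaving `num_rows` unset omits the flag, which corresponds to generating all rows.

```python
import os
import shlex
from typing import List, Optional

# Script URL taken from the HF Jobs example in the text
SCRIPT_URL = (
    "https://huggingface.co/datasets/aisheets/uv-scripts/raw/main/extend_dataset/script.py"
)


def build_extend_command(
    config_url: str,
    source_dataset: str,
    target_dataset: str,
    num_rows: Optional[int] = None,
    token: Optional[str] = None,
) -> List[str]:
    """Assemble the `hf jobs uv run` argument list (hypothetical helper)."""
    token = token if token is not None else os.environ.get("HF_TOKEN", "")
    cmd = ["hf", "jobs", "uv", "run", "-s", f"HF_TOKEN={token}", SCRIPT_URL]
    cmd += ["--config", config_url]
    if num_rows is not None:  # omit the flag to generate all rows
        cmd += ["--num-rows", str(num_rows)]
    cmd += [source_dataset, target_dataset]
    return cmd


cmd = build_extend_command(
    "https://huggingface.co/datasets/dvilasuero/nemotron-personas-kimi-questions/raw/main/config.yml",
    "nvidia/Nemotron-Personas",
    "dvilasuero/nemotron-kimi-qa-distilled",
    num_rows=100,
    token="hf_xxx",  # placeholder; never hard-code a real token
)
print(shlex.join(cmd))
# To launch for real (requires the hf CLI): subprocess.run(cmd, check=True)
```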

Local installation from GitHub: clone https://github.com/huggingface/aisheets and set HF_TOKEN.

- With Docker: run `export HF_TOKEN=your_token_here`, then `docker run -p 3000:3000 -e HF_TOKEN=$HF_TOKEN huggingface/sheets`, and visit http://localhost:3000.
- With pnpm: install pnpm, clone the repository, export HF_TOKEN, run `pnpm install` and then `pnpm dev`, and visit http://localhost:5173.
- For a production build: run `pnpm build` followed by `pnpm serve`.

To use a custom LLM, for example one served by Ollama, start the Ollama server with `export OLLAMA_NOHISTORY=1`, `ollama serve`, and `ollama run llama3`. Then set MODEL_ENDPOINT_URL=http://localhost:11434 and MODEL_ENDPOINT_NAME=llama3, and run the app. Custom endpoints must be compatible with the OpenAI API. Image generation always uses the Hugging Face API, regardless of the custom endpoint.
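Custom endpoints must follow the OpenAI API, so any client that speaks that protocol can check the Ollama server before AI Sheets is pointed at it. Below is a minimal standard-library sketch. It assumes Ollama's OpenAI-compatible `/v1/chat/completions` route. How AI Sheets itself builds request URLs from MODEL_ENDPOINT_URL is not shown here, and `build_chat_request` is hypothetical.

```python
import json
import os
import urllib.request
from typing import Optional


def build_chat_request(
    prompt: str, base_url: Optional[str] = None, model: Optional[str] = None
) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request for the configured endpoint."""
    base_url = base_url or os.environ.get("MODEL_ENDPOINT_URL", "http://localhost:11434")
    model = model or os.environ.get("MODEL_ENDPOINT_NAME", "llama3")
    payload = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        # Assumption: Ollama's OpenAI-compatible chat route
        url=base_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_chat_request("Say hello in one word")
# With `ollama serve` running, send it and read the first choice:
# with urllib.request.urlopen(request) as response:
#     print(json.load(response)["choices"][0]["message"]["content"])
```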

Advanced configuration uses environment variables:

- OAUTH_CLIENT_ID: authentication.

- DEFAULT_MODEL: changes the default model.

- NUM_CONCURRENT_REQUESTS: controls concurrency (default 5).

- SERPER_API_KEY: enables web search.

- DATA_DIR: sets the data directory.
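NUM_CONCURRENT_REQUESTS caps how many model calls run at the same time. The generic pattern is a semaphore around each call. The asyncio sketch below shows it with a stand-in model function. It is not AI Sheets' code: `fill_column` and `fake_model` are hypothetical, and only the variable name and its default of 5 come from the text.

```python
import asyncio
import os
from typing import Awaitable, Callable, List, Mapping


def concurrency_limit(env: Mapping[str, str] = os.environ) -> int:
    """Read NUM_CONCURRENT_REQUESTS, falling back to the documented default of 5."""
    return int(env.get("NUM_CONCURRENT_REQUESTS", "5"))


async def fill_column(
    prompts: List[str], call_model: Callable[[str], Awaitable[str]], limit: int
) -> List[str]:
    """Run one model call per row, with at most `limit` calls in flight."""
    semaphore = asyncio.Semaphore(limit)

    async def run_one(prompt: str) -> str:
        async with semaphore:
            return await call_model(prompt)

    # gather returns results in the same order as the prompts
    return list(await asyncio.gather(*(run_one(p) for p in prompts)))


# Stand-in for a real model call that records how many calls overlap
stats = {"in_flight": 0, "peak": 0}


async def fake_model(prompt: str) -> str:
    stats["in_flight"] += 1
    stats["peak"] = max(stats["peak"], stats["in_flight"])
    await asyncio.sleep(0.01)  # simulate network latency
    stats["in_flight"] -= 1
    return prompt.upper()


results = asyncio.run(fill_column([f"row {i}" for i in range(12)], fake_model, concurrency_limit({})))
print(results[:2], "peak concurrency:", stats["peak"])
```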

Example workflow for testing models: import a dataset of prompts. Add columns such as "Response: {{prompt}}", each using a different model. Then add a judge column such as "Evaluate {{prompt}} for response 1: {{model1}}, response 2: {{model2}}". Check the results manually or let the LLM judge assess them.
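The model-testing workflow amounts to one response column per model, plus a judge column whose prompt references them. The sketch renders the judge prompt for one row and tallies verdicts. It assumes the judge prompt is extended to ask for a bare "1" or "2". That answer format, the regexes, and the helper names are assumptions, and the verdict strings are made-up inputs.

```python
import re
from collections import Counter

# Judge prompt from the text; asking for a bare "1" or "2" is an added assumption
JUDGE_TEMPLATE = (
    "Evaluate {{prompt}} for response 1: {{model1}}, response 2: {{model2}}. "
    "Answer with 1 or 2."
)


def render(template: str, row: dict) -> str:
    """Fill {{column}} placeholders from one row."""
    return re.sub(r"\{\{(\w+)\}\}", lambda m: row[m.group(1)], template)


def tally_verdicts(verdicts: list) -> Counter:
    """Count which response the judge preferred; unparseable answers are 'unclear'."""
    counts = Counter()
    for verdict in verdicts:
        match = re.search(r"\b([12])\b", verdict)
        counts[match.group(1) if match else "unclear"] += 1
    return counts


row = {"prompt": "What is the capital of France?", "model1": "Paris", "model2": "Lyon"}
judge_prompt = render(JUDGE_TEMPLATE, row)
print(judge_prompt)
# In practice the verdicts come from the judge column; these strings are illustrative
print(tally_verdicts(["1", "Response 1 is better.", "2", "Both are fine"]))
```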

Categorizing a dataset: upload a text dataset and add a column "Categorize the main topics of {{text}}". Edit bad results, give good ones a thumbs-up, and regenerate.

Synthetic data: generate a person_bio column with "Write a short description of a professional in a pharmaceutical company", then add an email column with "Write a realistic professional email from {{person_bio}}".
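In the synthetic data example, the email column depends on person_bio, so person_bio must be generated first. One way to derive that order from the {{column}} references is a topological sort, sketched below with Python's `graphlib`. This illustrates the dependency logic, not how AI Sheets actually schedules columns. It ignores references to columns that are not generated, such as imported data, and the prompts are illustrative.

```python
import re
from graphlib import TopologicalSorter  # Python 3.9+

# AI columns from the synthetic data example: name -> prompt template (illustrative)
columns = {
    "email": "Write a professional email from {{person_bio}}",
    "person_bio": "Write a short description of a professional in a pharmaceutical company",
}


def generation_order(cols: dict) -> list:
    """Order columns so each one comes after the generated columns it references."""
    graph = {}
    for name, prompt in cols.items():
        refs = set(re.findall(r"\{\{(\w+)\}\}", prompt))
        # References to non-generated columns (e.g. imported data) need no ordering
        graph[name] = {ref for ref in refs if ref in cols}
    # static_order raises graphlib.CycleError if columns reference each other in a loop
    return list(TopologicalSorter(graph).static_order())


print(generation_order(columns))
```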

Analysis: add a column "Extract key ideas from {{text}}".

Enrichment: add a column "Find the zip code of {{address}}" and enable web search.

These steps make it straightforward for users to get started. The tool emphasizes experimentation and iteration to ensure data quality.

Application Scenarios

- Model testing and comparison

Users want to try the latest models on their own data. Import a dataset of questions and create multiple columns, each answered by a different model. Add a judge column that uses an LLM to compare the quality of the answers. This helps developers choose the best model.

- Prompt optimization

Example: building an application that automatically answers customer requests. Load a dataset of sample requests, then iterate over different prompts and models to generate responses. Editing cells provides feedback and automatically adds few-shot examples. Ideal for building effective prompts.

- Data cleaning and conversion

A dataset column contains stray text. Add new columns with prompts that remove punctuation or normalize the text. This processes data quickly and is useful for data scientists doing preprocessing.

- Data classification

Categorize content, for example questions by topic. Add columns that categorize with prompts; manual validation and regeneration improve accuracy. Ideal for analyzing Hub datasets.

- Data analysis and extraction

Extract the main ideas of a text. Add columns that summarize or extract information with prompts, and enable search to pull in real-time information. Ideal for research projects.

- Data enrichment

Fill in missing information, such as addresses and zip codes. Add columns with prompts and enable web search to make the additions more accurate. Suitable for completing datasets.

- Synthetic data generation

Generate privacy-preserving synthetic data, such as emails. Create a bio column and generate content based on it. Good for testing or prototyping.

Q&A

- What models does AI Sheets support?

AI Sheets supports open-source models on the Hugging Face Hub, accessed through Inference Providers. It also supports local models such as gpt-oss, and any custom LLM that exposes an OpenAI-compatible API.

- How do I generate image columns?

Use a prompt such as "Generate an isometric icon for {{object_name}}" and select an image model such as black-forest-labs/FLUX.1-dev. Image generation always uses the Hugging Face API.

- How does feedback work?

Edit AI-generated cells or give results a thumbs-up. These become few-shot examples that are applied to the column when it is regenerated.

- How do I scale up after exporting?

Run HF Jobs with the exported config.yml and a script, specifying the number of rows to generate a larger dataset.

- Is a subscription required?

No, the online version can be tried for free. A PRO subscription provides 20x more inference usage.

- What is the data limit?

Up to 1,000 rows can be uploaded or generated, with unlimited columns. Larger datasets can be produced with HF Jobs.

- How do I enable web search?

Turn on the search toggle in the column configuration. The model then retrieves up-to-date information from the web.