At the beginning of the Manus project, the team faced a critical decision: train an end-to-end agent model on top of open-source models, or build the agent on the in-context learning capabilities of frontier models?

In earlier years of natural language processing, developers did not even have this choice. In the BERT era, a model had to be fine-tuned and evaluated before it could handle a new task, and each iteration could take weeks. For an application that needs to iterate quickly and has not yet found product-market fit (PMF), such a slow feedback loop can be fatal. This was a hard lesson from a previous startup, where models were trained from scratch for open information extraction and semantic search. With the arrival of GPT-3 and Flan-T5, those in-house models became obsolete almost overnight. Ironically, the same new models ushered in the era of in-context learning and opened up an entirely new path.

That lesson made the choice clear for Manus: bet on context engineering. This shortens the product improvement cycle from weeks to hours and keeps the product orthogonal to the underlying models. If model progress is a rising tide, Manus wants to be the boat riding on it, not a post fixed to the seabed.

However, context engineering is far from smooth sailing. It is more like an experimental science. The Manus agent framework has been completely rebuilt four times, each time because the team discovered a better way to shape context. The team jokingly calls this manual process, a mix of architecture search, prompt tuning, and empirical guesswork, "Stochastic Graduate Descent". It is inelegant, but it works.

This post shares the local optima the team reached through its own "SGD". If you are building your own AI agent, these principles will hopefully help you converge faster.

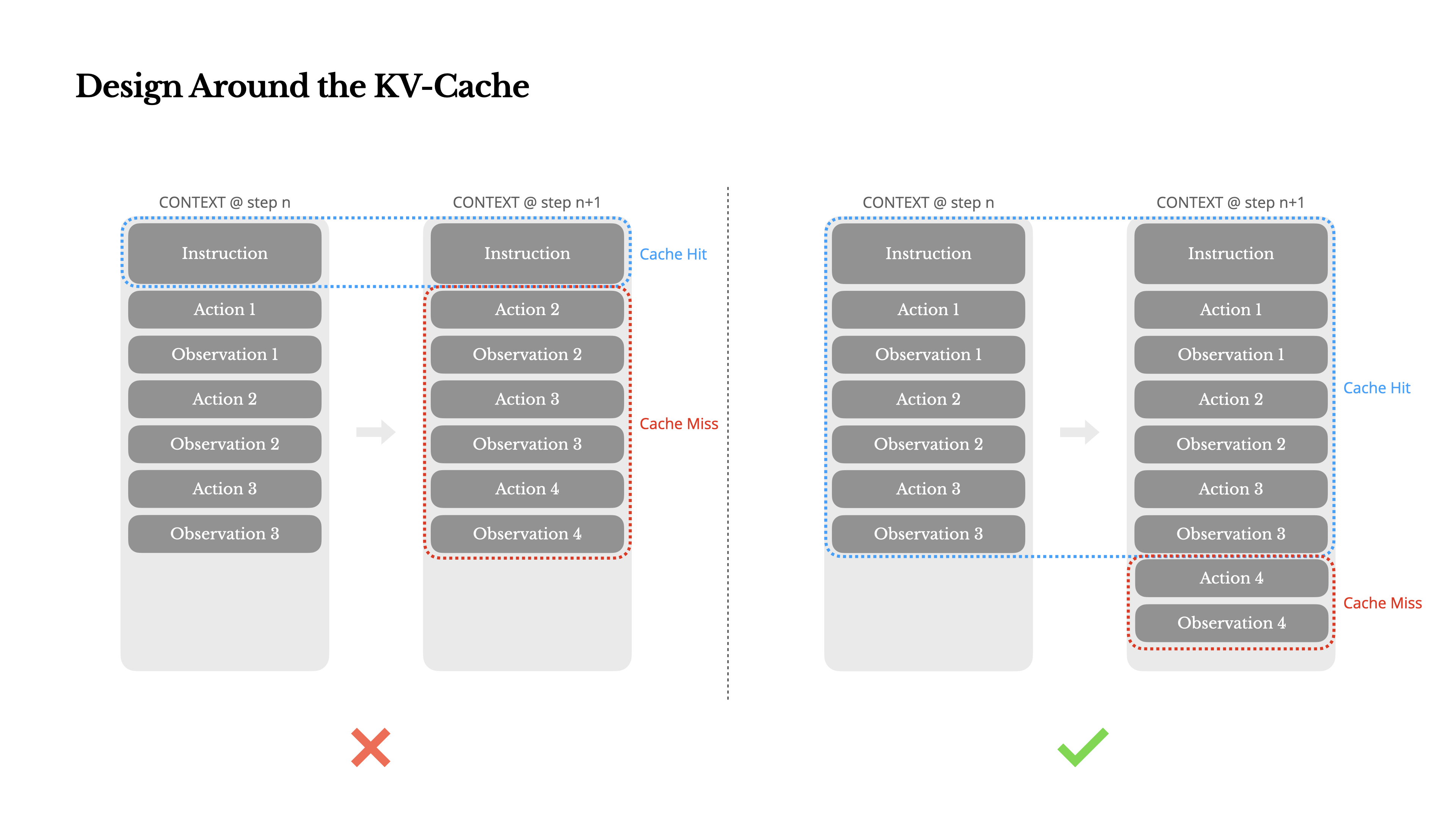

Designing around the KV cache

If you could pick only one metric, the KV-cache hit rate is arguably the most important one for a production-grade AI agent, because it directly affects both latency and cost. To see why, you first need to understand how a typical agent operates.

After receiving user input, the agent completes the task through a chain of tool calls. In each iteration, the model selects an action from a predefined action space based on the current context. The action is then executed in the environment (for example, the Manus virtual machine sandbox) and produces an observation. The action and the observation are appended to the context and become the input for the next iteration. This loop continues until the task is complete.
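The loop described above can be sketched in a few lines. This is only an illustration under stated assumptions, not the Manus implementation: `choose_action` stands in for the model call, `execute` for the sandbox, and the string `"done"` is a hypothetical stop signal.

```python
from typing import Callable


def run_agent(
    user_input: str,
    choose_action: Callable[[list[str]], str],  # hypothetical stand-in for the model
    execute: Callable[[str], str],              # hypothetical stand-in for the sandbox
    max_steps: int = 10,
) -> list[str]:
    """Append-only agent loop: each step adds one action and one observation."""
    context = [f"user: {user_input}"]
    for _ in range(max_steps):
        action = choose_action(context)   # the model picks an action from the context
        if action == "done":              # assumed stop signal for this sketch
            break
        observation = execute(action)     # the environment runs the action
        context.append(f"action: {action}")
        context.append(f"observation: {observation}")
    return context


# Toy stand-ins so the sketch runs end to end.
def toy_policy(context: list[str]) -> str:
    return "done" if len(context) >= 5 else f"step_{len(context)}"


def toy_env(action: str) -> str:
    return f"ran {action}"


trace = run_agent("summarize report", toy_policy, toy_env)
```

Note that the context only ever grows, while each model output is a single short action. That asymmetry is what the rest of this section builds on.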

The context therefore grows at every step, while the output, usually a structured function call, stays relatively short. Compared with a regular chatbot, this makes the ratio of input tokens to output tokens in an agent heavily skewed. In Manus, the ratio averages about 100:1.

Fortunately, contexts that share the same prefix can take advantage of KV caching. The KV cache stores the key-value pairs that have already been computed, so identical prefixes do not need to be processed again. This substantially reduces both time to first token (TTFT) and inference cost, whether you use a self-hosted model or call an inference API. Take Claude Sonnet, for example: cached input tokens cost $0.30 per million tokens, while uncached input tokens cost $3 per million tokens, a tenfold difference.
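To make the tenfold difference concrete, here is a rough back-of-the-envelope estimate using the two prices quoted above. The function name and the `cache_hit_rate` parameter are illustrative assumptions. The sketch ignores output tokens and any surcharge a provider may apply when writing to the cache.

```python
UNCACHED_USD_PER_MTOK = 3.00  # uncached input price quoted in the text
CACHED_USD_PER_MTOK = 0.30    # cached input price quoted in the text


def input_cost_usd(input_tokens: int, cache_hit_rate: float) -> float:
    """Estimate input cost when a fraction of the input tokens hits the cache."""
    if not 0.0 <= cache_hit_rate <= 1.0:
        raise ValueError("cache_hit_rate must be between 0 and 1")
    cached = input_tokens * cache_hit_rate
    uncached = input_tokens - cached
    total = cached * CACHED_USD_PER_MTOK + uncached * UNCACHED_USD_PER_MTOK
    return total / 1_000_000


# One million input tokens at three hypothetical hit rates.
no_cache = input_cost_usd(1_000_000, 0.0)
mostly_cached = input_cost_usd(1_000_000, 0.9)
fully_cached = input_cost_usd(1_000_000, 1.0)
```

With inputs outweighing outputs by roughly 100:1, the input side dominates the bill, which is why the hit rate matters so much.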

From a context engineering perspective, several key practices improve the KV-cache hit rate:

1. Keep the prompt prefix stable. Because large language models are autoregressive, a difference of even a single token can invalidate the cache from that token onward. A common mistake is to put a timestamp, especially one precise to the second, at the beginning of the system prompt. This lets the model tell you the current time, but it also destroys the cache hit rate.

2. Make the context append-only. Avoid modifying previous actions or observations, and make sure serialization is deterministic. Many programming languages and libraries do not guarantee a stable key order when serializing JSON objects, which can silently break the cache.

3. Explicitly mark cache breakpoints where necessary. Some model vendors or inference frameworks do not support automatic incremental prefix caching and require manually inserting cache breakpoints in the context. When setting breakpoints, take into account the possible expiration time of the cache and at least make sure that the breakpoint contains the end of the system prompt.

In addition, if you self-host models with a framework such as vLLM, make sure prefix/prompt caching is enabled, and use techniques such as session IDs to route requests consistently across distributed workers.
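The first two practices can be illustrated with a short sketch. The helper names are hypothetical. The character-level prefix comparison is only a simplified proxy for the token-level prefix matching a real cache performs.

```python
import json


def serialize_event(event: dict) -> str:
    """Serialize deterministically: sorted keys and fixed separators."""
    return json.dumps(event, sort_keys=True, separators=(",", ":"), ensure_ascii=False)


def common_prefix_len(a: str, b: str) -> int:
    """Length of the shared prefix (a character-level proxy for reusable cache)."""
    n = 0
    for x, y in zip(a, b):
        if x != y:
            break
        n += 1
    return n


# Same content, different insertion order: the serialized form is identical.
first = serialize_event({"tool": "browser_open", "args": {"url": "https://example.com"}})
second = serialize_event({"args": {"url": "https://example.com"}, "tool": "browser_open"})

# A second-precision timestamp at the start of the prompt breaks the shared prefix early.
body = "You are an agent.\n"
with_time_a = "Now: 12:00:01\n" + body
with_time_b = "Now: 12:00:02\n" + body
shared_with_timestamp = common_prefix_len(with_time_a, with_time_b)
shared_without_timestamp = common_prefix_len(body, body)
```

In this sketch, the two prompts with timestamps stop matching inside the timestamp itself, so everything after it would need to be recomputed even though the rest of the prompt is identical.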

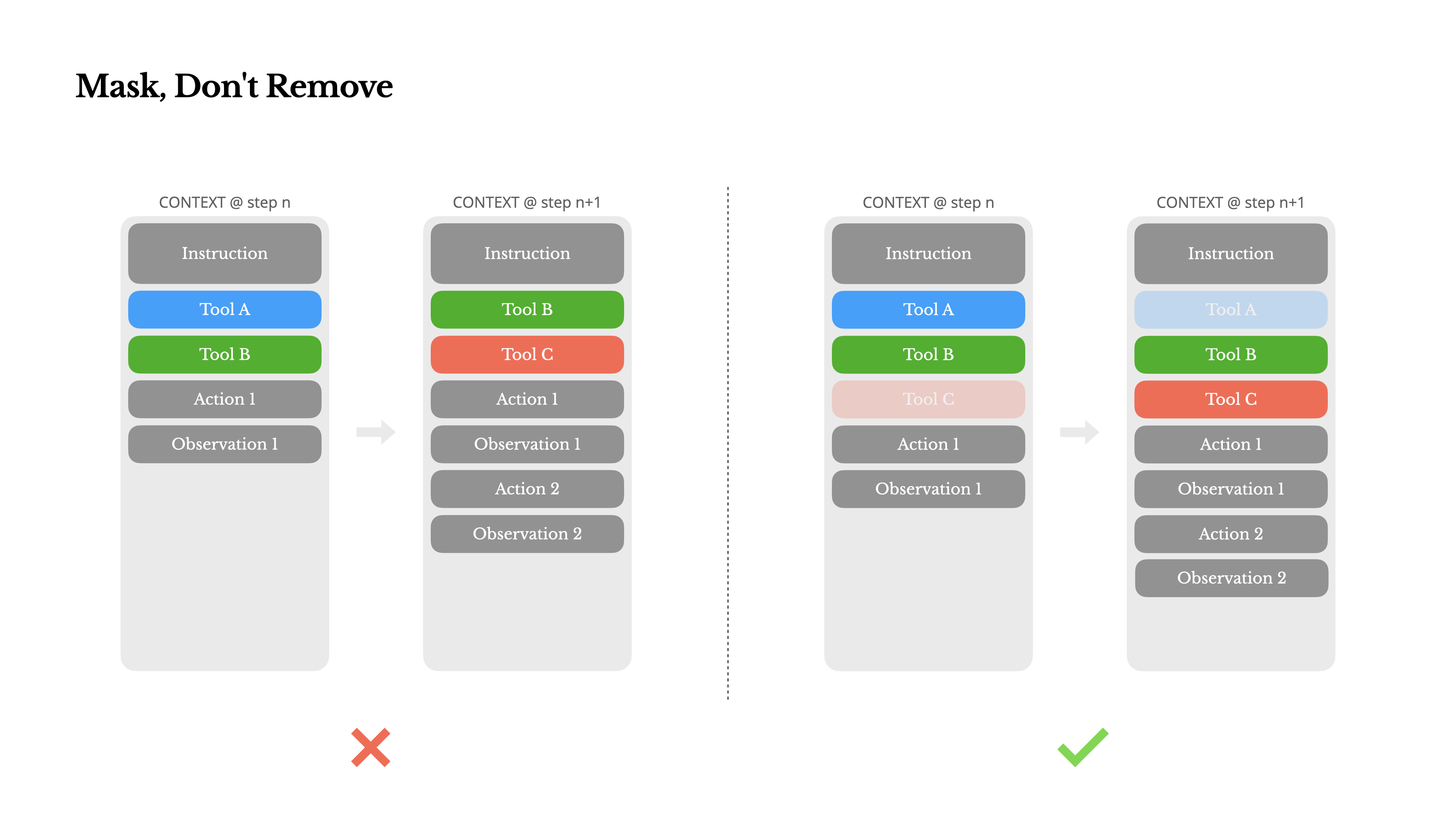

Mask instead of remove

As agents become more capable, their action space naturally becomes more complex. Put bluntly, the number of tools explodes. The recent popularity of the Model Context Protocol (MCP) only adds fuel to the fire. Once you let users configure their own tools, someone will inevitably plug hundreds of obscure tools into your carefully curated action space. As a result, the model is more likely to choose the wrong tool or take an inefficient path, and the heavily armed agent becomes "dumber".

A natural response is to design a dynamic action space, for example by loading tools on demand with something like RAG (retrieval-augmented generation). Manus tried this approach as well, but the conclusion from the experiments is clear: avoid dynamically adding or removing tools in the middle of an iteration unless absolutely necessary. There are two reasons for this:

1. Tool definitions usually sit near the front of the context. In most large language models, tool definitions are serialized right after the system prompt, so any change invalidates the KV cache for all subsequent actions and observations.

2. The model can get confused. If previous actions and observations refer to tools that no longer exist in the current context, the model may lose track. Without constrained decoding, this often leads to format errors or hallucinated calls to non-existent tools.

To address this, Manus does not remove tools. Instead, it uses a context-aware state machine to manage tool availability. By masking the logits of specific tokens during decoding, it prevents or forces the selection of certain actions based on the current state.

In practice, most model vendors and inference frameworks support some form of response prefill, which lets you constrain the action space without modifying the tool definitions. Taking the NousResearch Hermes format as an example, there are generally three function calling modes:

- Auto: The model may decide for itself whether to call a function. Implemented by prefilling only the reply prefix: `<|im_start|>assistant`
- Required: The model must call a function, but the choice of function is unconstrained. Implemented by prefilling up to the tool call token: `<|im_start|>assistant<tool_call>`
- Specified: The model must call a function from a specified subset. Implemented by prefilling up to the beginning of the function name, for example: `<|im_start|>assistant<tool_call>{"name": "browser_`

Using this mechanism, action selection can be constrained directly by masking token logits. For example, when the user provides new input, Manus must reply immediately rather than take an action. The team also deliberately gave action names consistent prefixes: all browser tools start with browser_, and command-line tools start with shell_. This makes it easy to restrict the agent to a particular group of tools in a given state without a stateful logits processor.
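The prefill modes and the prefix-based masking can be sketched as follows. This is a simplified illustration, not the Manus implementation: real masking operates on token-level logits inside the decoder, whereas this sketch scores whole tool names, and both function names are hypothetical.

```python
import math

ASSISTANT_PREFIX = "<|im_start|>assistant"


def build_prefill(mode: str, name_prefix: str = "") -> str:
    """Return the response prefill for the three Hermes-style calling modes."""
    if mode == "auto":
        return ASSISTANT_PREFIX
    if mode == "required":
        return ASSISTANT_PREFIX + "<tool_call>"
    if mode == "specified":
        return ASSISTANT_PREFIX + '<tool_call>{"name": "' + name_prefix
    raise ValueError(f"unknown mode: {mode}")


def mask_tool_logits(
    logits: dict[str, float], allowed_prefixes: tuple[str, ...]
) -> dict[str, float]:
    """Set the score of every tool outside the allowed prefixes to -inf."""
    return {
        name: (score if name.startswith(allowed_prefixes) else -math.inf)
        for name, score in logits.items()
    }


# Hypothetical scores: the shell tool scores highest but is masked out in this state.
scores = {"browser_open": 1.2, "browser_click": 0.4, "shell_exec": 2.0}
masked = mask_tool_logits(scores, ("browser_",))
chosen = max(masked, key=masked.get)
```

Because the tool definitions themselves never change, the cached prefix stays valid. Only the set of reachable actions changes from state to state.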

These designs help keep the Manus agent loop stable, even under a model-driven architecture.

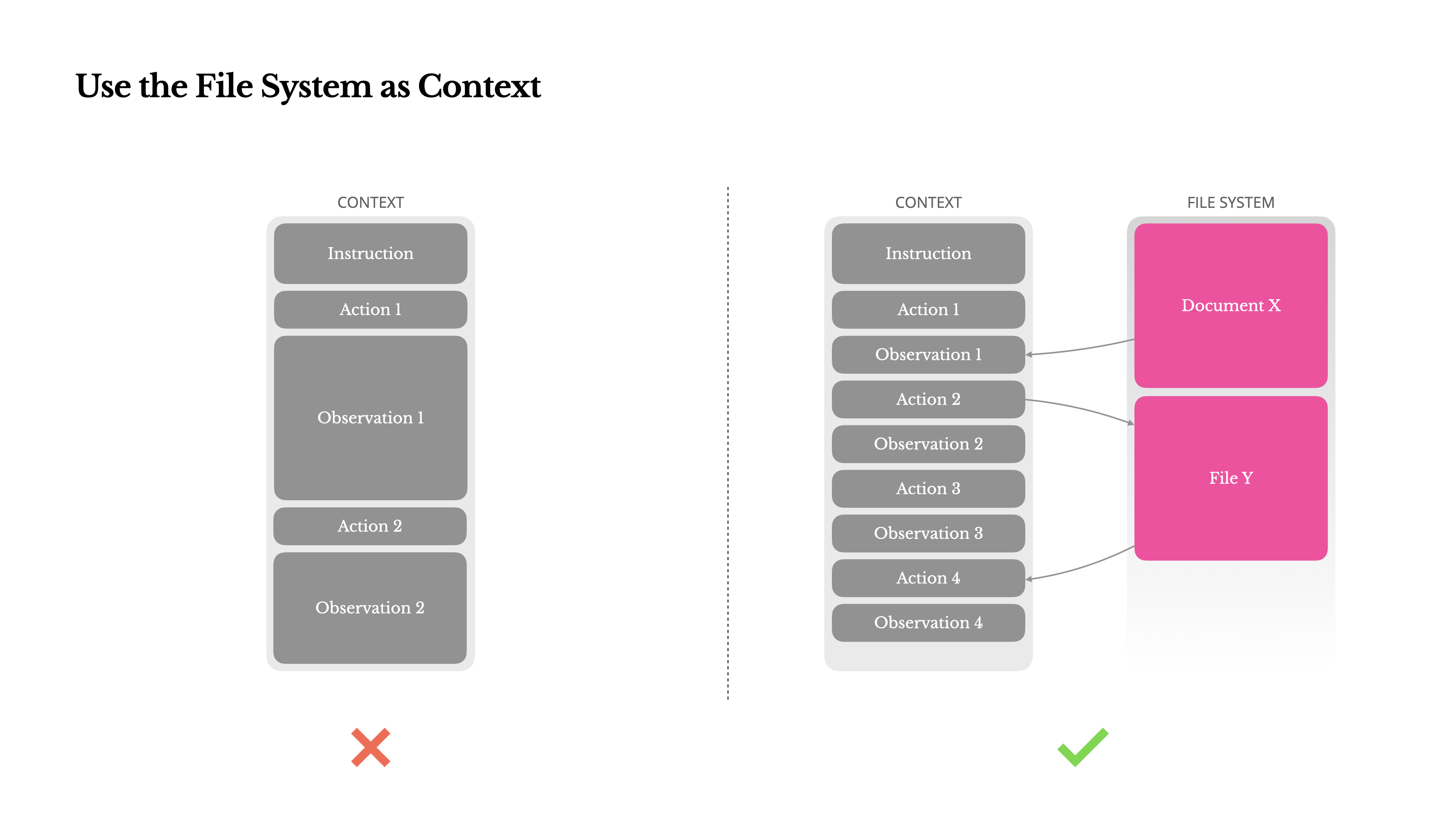

Using the file system as context

Modern cutting-edge large language models provide 128K or even longer context windows, but in real agent application scenarios this is often not enough, and sometimes even becomes a burden. There are three main pain points:

1. Observations can be very large. When agents interact with unstructured data such as web pages, PDFs, etc., it is easy to exceed the context length limit.

2. Model performance decreases with increasing context length. Even if technically supported, the model's performance tends to degrade beyond a certain length.

3. Long inputs are expensive. Even with prefix caching, you still pay to transmit and prefill every token.

To cope with these problems, many agent systems employ context truncation or compression strategies. But overly aggressive compression inevitably leads to information loss. The problem is fundamental: an agent by nature must predict its next action based on all prior state, and you cannot reliably predict which observation will become critical ten steps later. Logically, any irreversible compression carries risk.

Manus therefore treats the file system as the ultimate context: it is effectively unlimited in size, persistent by nature, and directly operable by the agent itself. The model learns to read and write files on demand, using the file system not only as storage but as structured external memory.

Manus's compression strategies are always designed to be recoverable. For example, the content of a web page can be dropped from the context as long as the URL is preserved, and the content of a document can be omitted as long as its file path remains available in the sandbox. This lets Manus reduce context length without permanently losing information.
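A recoverable compression step might look like the sketch below. The `Observation` type, the `offload` and `restore` helpers, and the example path are all hypothetical. A plain dictionary stands in for the sandbox file system.

```python
from dataclasses import dataclass


@dataclass
class Observation:
    source: str   # URL or sandbox file path that can be re-read later
    content: str  # the full observation text


def offload(obs: Observation, store: dict[str, str], max_chars: int = 200) -> str:
    """Keep short content inline; replace long content with a stub that keeps the source."""
    if len(obs.content) <= max_chars:
        return obs.content
    store[obs.source] = obs.content  # the full text stays available outside the context
    return f"[omitted {len(obs.content)} chars; source: {obs.source}]"


def restore(source: str, store: dict[str, str]) -> str:
    """Recover the full content on demand using the preserved source."""
    return store[source]


sandbox: dict[str, str] = {}  # stands in for the sandbox file system
page = Observation(source="/sandbox/report.txt", content="x" * 1000)
stub = offload(page, sandbox)
recovered = restore("/sandbox/report.txt", sandbox)
```

The stub is far shorter than the original content, but because the path survives in the context, the agent can read the file again whenever that observation turns out to matter.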

An interesting thought came up while developing this feature: what would it take for a state space model (SSM) to work effectively in an agentic setting? Unlike Transformers, SSMs lack full attention and struggle with long-range backward dependencies. But if they could master file-based memory, externalizing long-term state instead of holding it in context, their speed and efficiency might unlock a new class of agents. Agentic SSMs might then become the true successors to Neural Turing Machines.

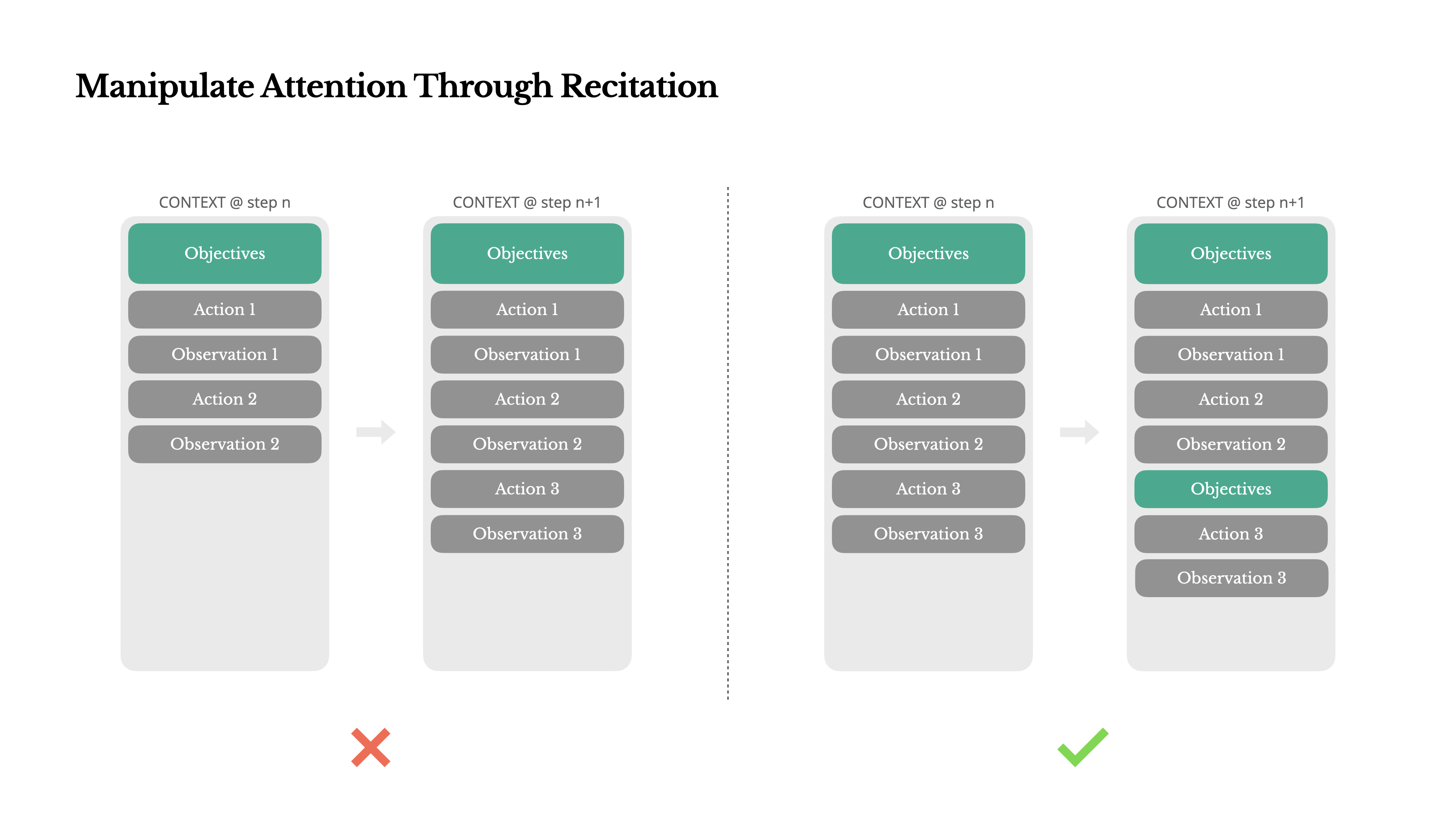

Manipulating attention through recitation

If you have used Manus, you may have noticed something curious: when handling complex tasks, it tends to create a todo.md file and update it step by step as the task progresses, checking off completed items.

This is not simply anthropomorphic behavior, but an elaborate mechanism of attention manipulation.

In Manus, a typical task requires about 50 tool calls on average, which is a fairly long loop. Because Manus relies on a large language model for decision making, it can easily drift off topic or forget earlier goals in long contexts or complicated tasks.

By constantly rewriting the to-do list, Manus recites its objectives at the end of the context. This pushes the global plan into the model's recent attention span, which helps avoid the "lost-in-the-middle" problem and reduces goal drift. In effect, it uses natural language to steer the model's own attention toward the task goal, without any special architectural changes.
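A minimal sketch of this recitation step is shown below. The checklist format and the helper names are assumptions for illustration. Appending a fresh copy of the list, rather than editing an earlier one, is a choice made here to keep the context append-only.

```python
def render_todo(items: list[tuple[str, bool]]) -> str:
    """Render the plan as a markdown checklist, with done items checked off."""
    lines = ["# todo.md"]
    for text, done in items:
        lines.append(f"- [{'x' if done else ' '}] {text}")
    return "\n".join(lines)


def append_recitation(context: list[str], items: list[tuple[str, bool]]) -> list[str]:
    """Return a new context with the current plan restated at the very end."""
    return context + [render_todo(items)]


plan = [("collect sources", True), ("draft summary", False)]
history = ["user: write a summary", "action: browser_open", "observation: page loaded"]
updated = append_recitation(history, plan)
```

The restated plan always lands in the most recent part of the context, which is where the text above says the goals need to be.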

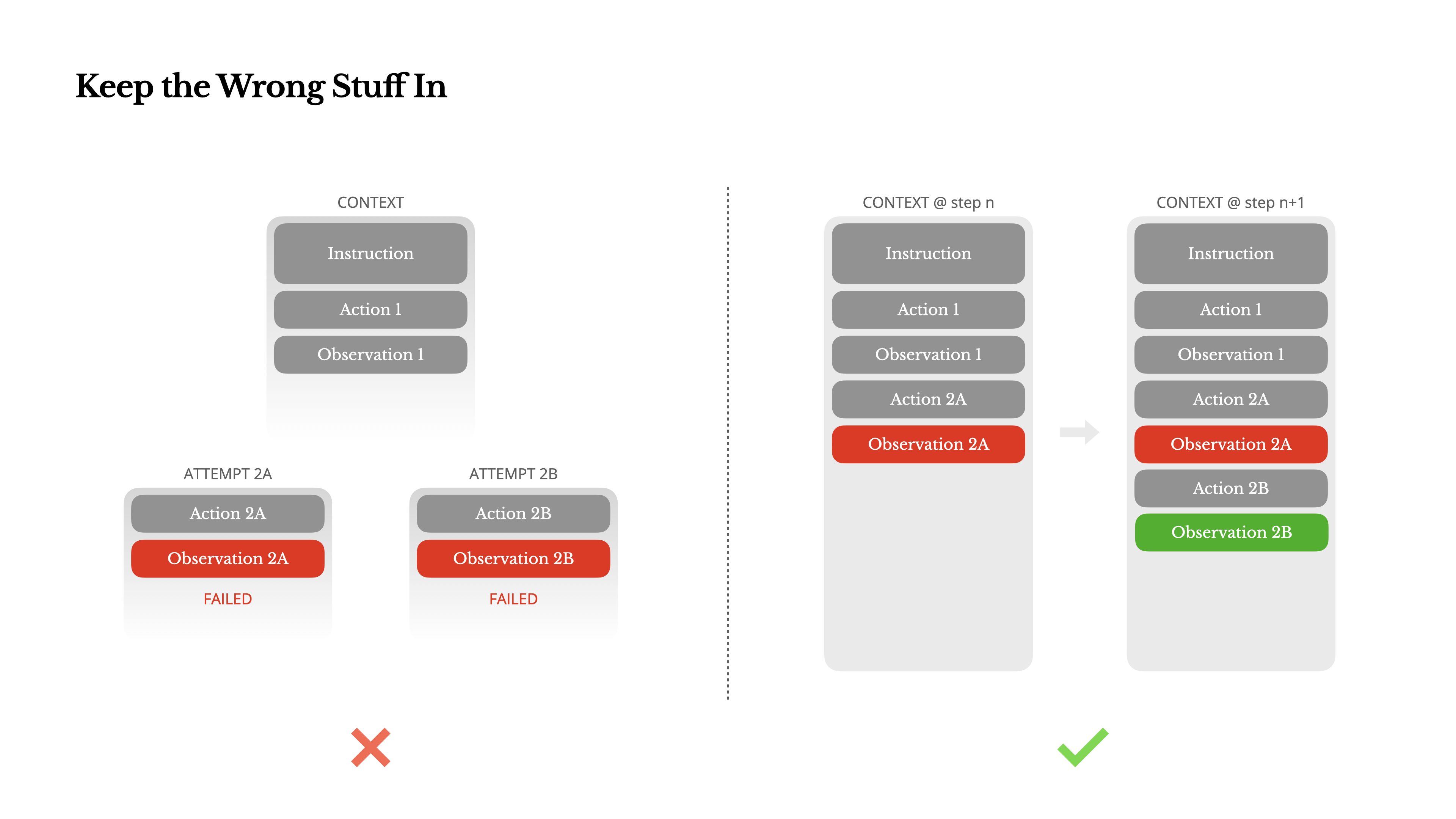

Keeping records of failures

Agents make mistakes. This is not a flaw, it's a fact. Language models can hallucinate, environments can return errors, external tools can malfunction, and all sorts of unexpected edge cases abound. In multi-step tasks, failure is not the exception, it's part of the cycle.

However, a common impulse among developers is to hide these errors: clean up the traces, retry the action, or reset the model state, and hope that a magic "temperature" parameter will fix the problem. This feels safer and more controllable, but at a cost: erase the failure, and you erase the evidence. Without evidence, the model cannot adapt and learn.

In the Manus team's experience, one of the most effective ways to improve agent behavior is surprisingly simple: keep the failed attempts in the context. When the model sees a failed action together with the resulting observation or stack trace, it implicitly updates its internal "beliefs". This shifts its prior away from similar actions and reduces the chance of repeating the same mistake.
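In code, keeping the failure in context can be as simple as recording the error as an ordinary observation instead of swallowing it. The sketch below assumes a hypothetical `execute` callable and a plain list of strings as the context.

```python
from typing import Callable


def run_and_record(context: list[str], action: str, execute: Callable[[str], str]) -> list[str]:
    """Run an action and append the outcome, including failures, to the context."""
    try:
        observation = execute(action)
    except Exception as exc:
        # Keep the evidence: the error text becomes the observation.
        observation = f"ERROR {type(exc).__name__}: {exc}"
    context.append(f"action: {action}")
    context.append(f"observation: {observation}")
    return context


def failing_tool(action: str) -> str:
    """Toy executor that always fails, used only to demonstrate the error path."""
    raise ValueError("bad input")


log = run_and_record(["user: parse the file"], "shell_exec parse.py", failing_tool)
```

On the next iteration the model sees both the action that failed and why it failed, rather than a cleaned-up history that invites the same attempt again.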

Indeed, the ability to recover from errors is one of the clearest indicators of true agentic behavior. Yet it is still underrepresented in most academic work and public benchmarks, which tend to focus on task success under ideal conditions.

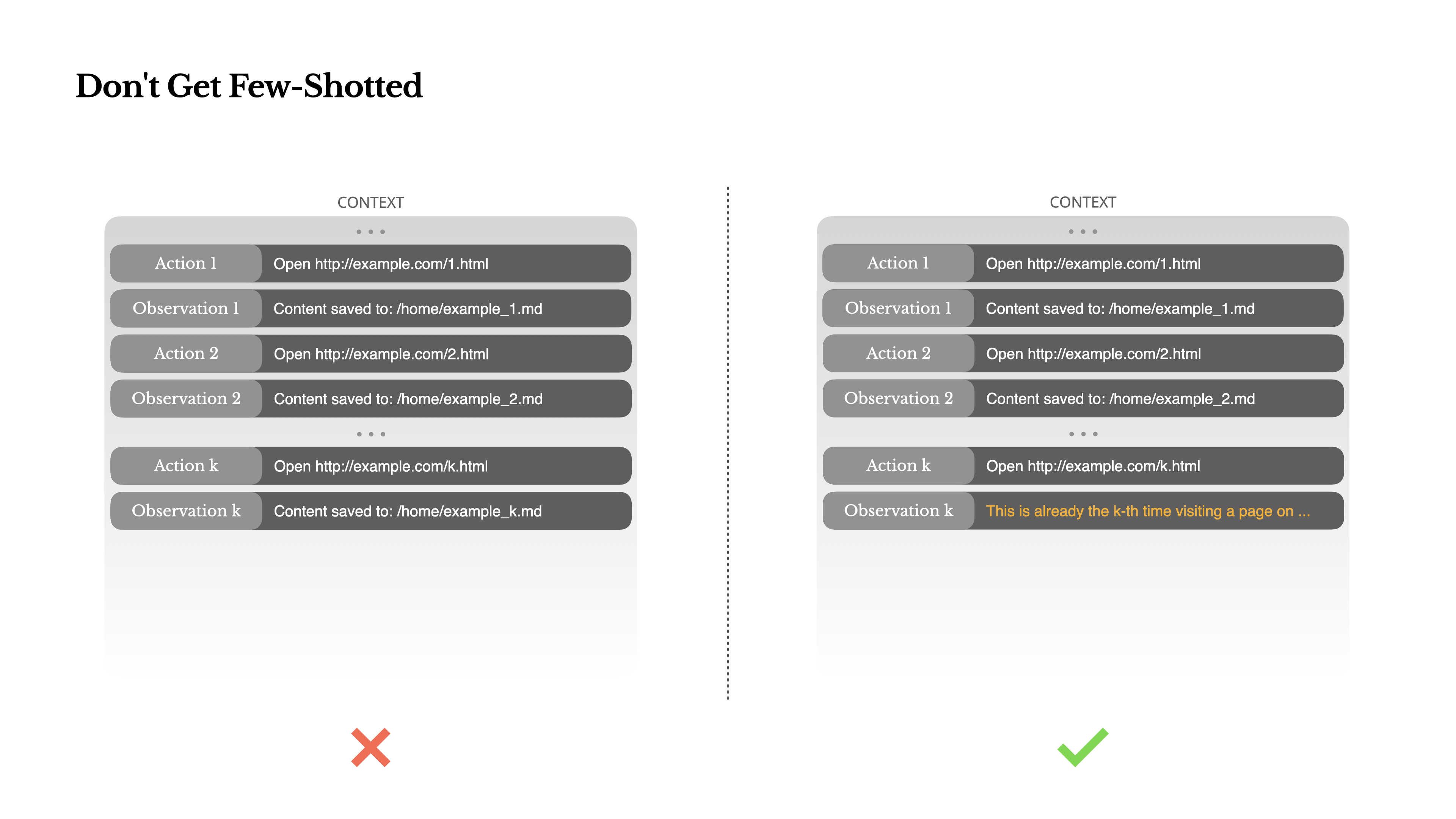

Beware of the few-shot trap

"Few-shot prompting" is a common technique for improving the quality of output from large language models, but it can backfire in subtle ways in agent systems.

Language models are excellent mimics: they imitate the patterns of behavior in their context. If your context is full of similar action-observation pairs, the model will tend to follow that pattern, even when it is no longer the best choice.

This can be dangerous in tasks that involve repetitive decisions or actions. For example, when Manus is used to review a batch of 20 resumes, the agent often falls into a rhythm, repeating similar actions simply because that is what it sees in the context. This can lead to drift, overgeneralization, and sometimes hallucination.

The solution is to increase diversity. Manus introduces small amounts of structured variation into actions and observations, such as different serialization templates, alternative phrasing, and minor noise in order or formatting. This controlled randomness helps break entrenched patterns and adjusts the model's attention.
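One way to introduce such variation is to pick among a few equivalent serialization templates when a step is appended. The templates and names below are hypothetical examples, not the ones Manus uses. Each step is assumed to be rendered once, at append time, so that earlier context is never rewritten.

```python
import json
import random

# Three equivalent ways to serialize the same action-observation pair.
TEMPLATES = [
    lambda a, o: f"action: {a}\nobservation: {o}",
    lambda a, o: f"[tool call] {a}\n[result] {o}",
    lambda a, o: json.dumps({"action": a, "observation": o}, sort_keys=True),
]


def render_step(action: str, observation: str, rng: random.Random) -> str:
    """Serialize one step with a randomly chosen template."""
    return rng.choice(TEMPLATES)(action, observation)


rng = random.Random(0)  # seeded so runs are reproducible
steps = [render_step(f"review resume {i}", "ok", rng) for i in range(20)]
distinct_shapes = {s.replace(str(i), "N") for i, s in enumerate(steps)}
```

The information in each step is unchanged. Only its surface form varies, which is meant to keep a long run of near-identical steps from reading as a pattern to imitate.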

In other words, do not few-shot yourself into a rut. The more uniform the context, the more brittle the agent's behavior.

Context engineering is still an emerging science, but for agent systems it is already essential. Models may become stronger, faster, and cheaper, but no amount of raw capability replaces careful design of memory, environment, and feedback. How you shape the context ultimately defines how your agent behaves: how fast it runs, how well it recovers, and how far it scales. The future of agents will be built one carefully designed context at a time.